diff --git a/.devcontainer/devcontainer.json b/.devcontainer/devcontainer.json

index e998ee7..0b1ce02 100644

--- a/.devcontainer/devcontainer.json

+++ b/.devcontainer/devcontainer.json

@@ -13,6 +13,8 @@

"ghcr.io/devcontainers/features/node:1": {}

},

+ "postCreateCommand": "cd docs; npm install && npx astro telemetry disable && npm run dev",

+

"hostRequirements": {

"cpus": 8,

"memory": "4gb",

diff --git a/contoso-chat/docs/01 | Introduction/1-paradigm-shift.md b/contoso-chat/docs/01 | Introduction/1-paradigm-shift.md

new file mode 100644

index 0000000..c3f8a29

--- /dev/null

+++ b/contoso-chat/docs/01 | Introduction/1-paradigm-shift.md

@@ -0,0 +1,64 @@

+# 01 | The Paradigm Shift

+

+Streamlining the end-to-end development workflow for modern "AI apps" requires a paradigm shift from **MLOps** to **LLMOps** that acknowledges the common roots while being mindful of the growing differences. We can view this shift in terms of how it causes us to rethink three things (_mindset_, _workflows_, _tools_) to be more effective in developing generative AI applications.

+

+

+---

+

+## Rethink Mindset

+

+Traditional "AI apps" can be viewed as "ML apps". They took data inputs and used custom-trained models to return relevant predictions as output. Modern "AI apps" tend to refer to generative AI apps that take natural language inputs (prompts), use pre-trained large language models (LLM), and return original content to users as the response. This shift is reflected in many ways.

+

+ - **The target audience is different** ➡ App developers, not data scientists.

+ - **The generated assets are different** ➡ Emphasize integrations, not predictions.

+ - **The evaluation metrics are different** ➡ Focus on fairness, groundedness, token usage.

+ - **The underlying ML models are different.** Pre-trained "Models-as-a-Service" vs. build.

+

+

+

+---

+

+## Rethink Workflow

+

+**With MLOps**, end-to-end application development involved a [complex data science lifecycle](https://learn.microsoft.com/azure/architecture/ai-ml/guide/_images/data-science-lifecycle-diag.png). To "fit" into software development processes, this was mapped to [a higher-level workflow](https://learn.microsoft.com/azure/architecture/ai-ml/guide/mlops-technical-paper#machine-learning-model-solution) visualized as shown below. The complex data science lifecycle steps (data preparation, model engineering & model evaluation) are now encapsulated into the _experimentation_ phase.

+

+

+

+**With LLMOps**, those steps need to be rethought in the context of new requirements like using natural language inputs (prompts), new techniques for improving quality (RAG, Fine-Tuning), new metrics for evaluation (groundedness, coherence, fluency) and responsible AI (assessment). This leads us to a revised version of the 3-phase application development lifecycle as shown:

+

+

+

+We can unpack each phase to get a sense of individual steps in workflows that are now designed around prompt-based inputs, token-based pricing, and region-based availability of large language models and Azure AI services for provisioning.

+

+

+

+!!!example "Building LLM Apps: From Prompt Engineering to LLM Ops"

+

+ In the accompanying workshop, we'll walk through the end-to-end development process for our RAG-based LLM App from _prompt engineering_ (ideation, augmentation) to _LLM Ops_ (operationalization). We hope that helps make some of these abstract concepts feel more concrete when viewed in action.

+

+---

+

+## Rethink Tools

+

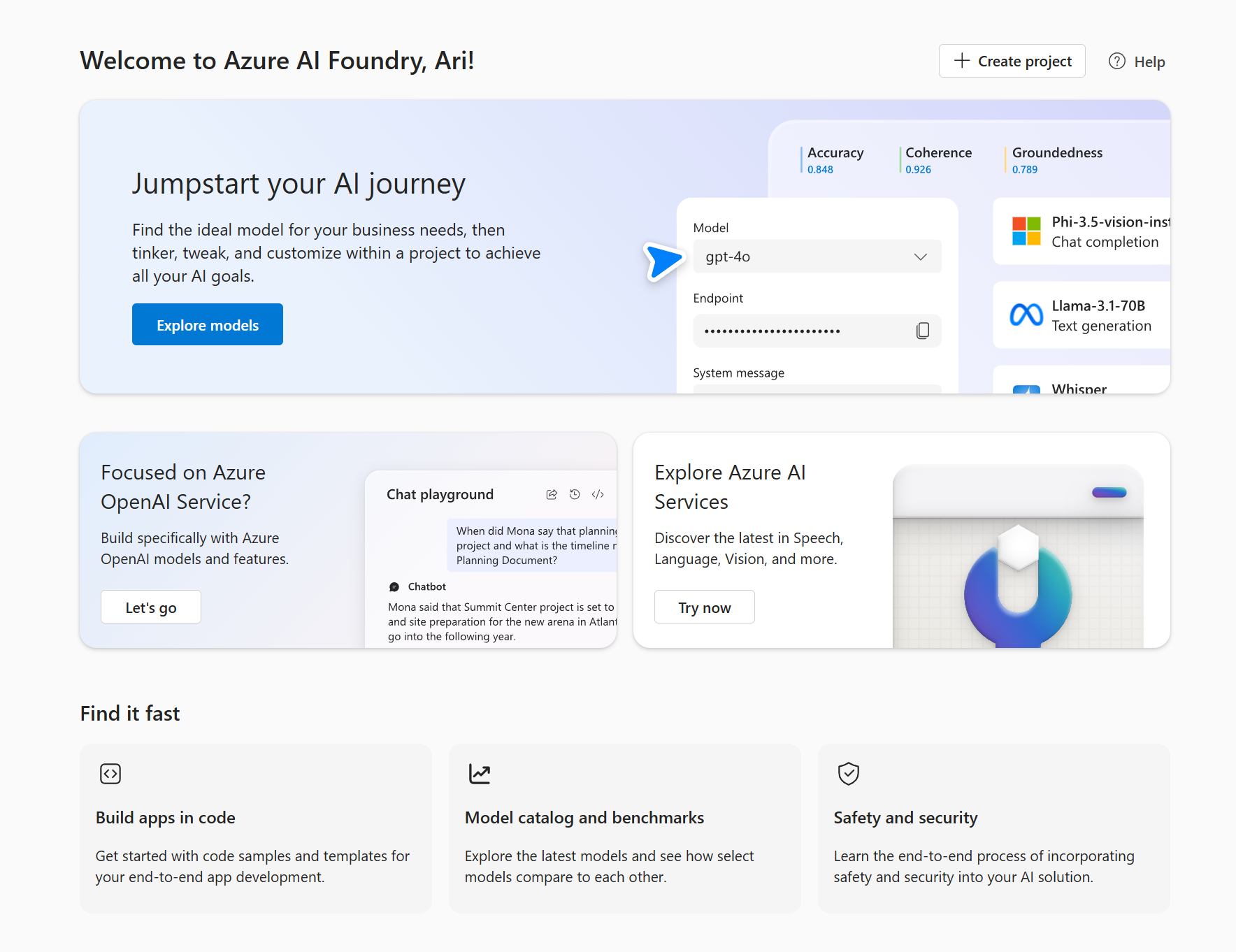

+We can immediately see how this new application development lifecycle requires corresponding _innovation in tooling_ to streamline the end-to-end development process from ideation to operationalization. The [Azure AI platform](https://learn.microsoft.com/ai) has been retooled with exactly these requirements in mind. It is centered around [Azure AI Studio](https://ai.azure.com), a unified web portal that:

+

+ - lets you "Explore" models, capabilities, samples & responsible AI tools

+ - gives you _single pane of glass_ visibility to "Manage" Azure AI resources

+ - provides UI-based development flows to "Build" your Azure AI projects

+ - has Azure AI SDK and Azure AI CLI options for "Code-first" development

+

+

+

+The Azure AI platform is enhanced by other developer tools and resources including [PromptFlow](https://github.com/microsoft/promptflow), [Visual Studio Code Extensions](https://marketplace.visualstudio.com/VSCode) and [Responsible AI guidance](https://learn.microsoft.com/azure/ai-services/responsible-use-of-ai-overview) with built-in support for [content-filtering](https://learn.microsoft.com/azure/ai-studio/concepts/content-filtering). We'll cover some of these in the Concepts and Tooling sections of this guide.

+

+!!!abstract "Using The Azure AI Platform"

+ The abstract workflow will feel more concrete when we apply the concepts to a real use case. In the Workshop section, you'll get hands-on experience with these tools to give you a sense of their roles in streamlining your end-to-end developer experience.

+

+ - **Azure AI Studio**: Build & Manage Azure AI project and resources.

+ - **Prompt Flow**: Build, Evaluate & Deploy a RAG-based LLM App.

+ - **Visual Studio Code**: Use Azure, PromptFlow, GitHub Copilot, Jupyter Notebook extensions.

+ - **Responsible AI**: Content filtering, guidance for responsible prompts usage.

+

+

+

+

diff --git a/contoso-chat/docs/01 | Introduction/2-scenario.md b/contoso-chat/docs/01 | Introduction/2-scenario.md

new file mode 100644

index 0000000..f558891

--- /dev/null

+++ b/contoso-chat/docs/01 | Introduction/2-scenario.md

@@ -0,0 +1,92 @@

+# 02 | The App Scenario

+!!!example "Consider this familiar enterprise scenario!"

+

+ You're a new hire in the Contoso Outdoors organization. They are an e-commerce company with a successful product catalog and loyal customer base focused on outdoor activities like camping and hiking. Their website is hugely popular but their customer service agents are being overwhelmed by calls that could be answered by information currently on the site.

+

+ For every call they fail to answer, they are potentially losing not just revenue, but customer loyalty. **You are part of the developer team tasked to build a Customer Support AI into the website** to meet that demand. The objective is to build and deploy a customer service agent that is _friendly, helpful, responsible, and relevant_ in its support interactions.

+

+Let's walk through how you can make this happen in your organization, using the Azure AI Platform. We'll start with the _ideation_ phase, which involves identifying the business case, connecting to your data, building the basic prompt flow (LLM App), then iterating locally to extend it for your app requirements. Let's understand how this maps to our workshop.

+

+---

+

+## Contoso Outdoors (Website)

+

+The Contoso Chat (LLM App) is being designed for integration into the Contoso Outdoors site (Web App) via the _chat icon_ seen at the bottom right. The website landing page features the Contoso Outdoors _product catalog_ organized neatly into categories like _Tents_ and _Backpacks_ to simplify discovery by customers.

+

+

+

+When a customer clicks an item, they are taken to the _product details_ page with extensive information that they can use to guide their decisions towards a purchase.

+

+

+

+!!!info "Step 1: Identify Business Case"

+

+The Contoso Chat AI should meet two business objectives. It should _reduce customer support calls_ (to manual operator) by proactively answering customer questions onsite. It should _increase customer product purchases_ by providing timely and contextual information to help them finalize the purchase decision.

+

+---

+

+## Chat Completion (Basic)

+

+Let's move to the next step - designing our Contoso Chat AI using the relevant Large Language Model (LLM). Based on our manual customer service calls, we know questions can broadly fall into two categories:

+

+ - **Product** focus ➡ _"What should I buy for my hiking trip to Andalusia?"_

+ - **Customer** focus ➡ _"What backpack should I buy given my previous purchases here?"_

+

+We know that Azure OpenAI provides a number of pre-trained models for _chat completion_ so let's see how the baseline model works for our requirements, by using the [Azure AI Studio](https://ai.azure.com) **Playground** capability with a [`gpt-3.5-turbo`](https://learn.microsoft.com/azure/ai-services/openai/concepts/models) model deployment. This model can understand inputs (and generate responses) using natural language.

+

+Let's see how it responds to the two questions above.

+

+ 1. **No Product Context**. The pre-trained model provides a perfectly valid response to the question _but it lacks the product catalog context for Contoso Outdoors!_ We need to refine this model to use our data!

+

+

+ 2. **No Customer History**. The pre-trained model makes it clear that it has no access to customer history _and consequently makes general recommendations that may be irrelevant to the customer's query_. We need to refine this model to understand customer identity and access their purchase history.
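
To make the baseline concrete, here is a minimal sketch of what the two requests look like when no product or customer context is attached. It only builds the role/content message list commonly used by chat completion models and does not call any service. The `build_messages` helper and the system prompt text are assumptions made for illustration, not part of the workshop code.

```python
from typing import Dict, List


def build_messages(question: str,
                   system_prompt: str = "You are a helpful assistant.") -> List[Dict[str, str]]:
    """Build a chat-style message list with no product or customer context.

    Hypothetical helper for illustration; the system prompt is an assumption.
    """
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


# The two sample questions from the text above.
questions = [
    "What should I buy for my hiking trip to Andalusia?",
    "What backpack should I buy given my previous purchases here?",
]

# Each request carries only the question, so the model has nothing
# Contoso-specific to draw on when it answers.
baseline_requests = [build_messages(q) for q in questions]
```

Nothing in either request mentions the catalog or the customer, which is why the responses stay generic.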

+

+

+!!!info "Step 2: Connect To Your Data"

+

+We need a way to _ground_ the model's responses in our product catalog and customer history, supplied as relevant context for the query. The first step is to make the data sources available to our workflow. [Azure AI Studio](https://ai.azure.com) makes this easy by helping you set up and manage _connections_ to relevant Azure search and database resources.

+

+---

+

+## Chat Augmentation (RAG)

+

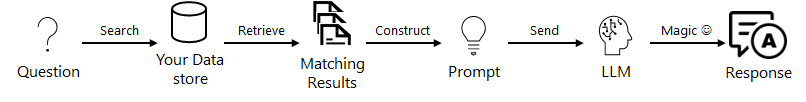

+That brings us to the next step - _prompt engineering_. We need to **augment** the user question (default prompt) with additional query context that ensures Contoso Outdoors product data is prioritized in responses. We use a popular technique known as [Retrieval Augmented Generation (RAG)](https://learn.microsoft.com/azure/ai-studio/concepts/retrieval-augmented-generation) that works as shown below, to _generate responses that are specific to your data_.

+

+

+

+We can now get a more _grounded response_ in our Contoso Chat AI, as shown.
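
The retrieve-then-augment idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the catalog entries are invented, and retrieval is a simple keyword overlap rather than the Azure AI Search index and embeddings used in the workshop. The names `CATALOG`, `retrieve` and `augment` are hypothetical.

```python
import re

# Hypothetical catalog entries, invented for illustration only.
CATALOG = [
    {"name": "Example Trail Tent",
     "description": "lightweight two person tent for camping trips"},
    {"name": "Example Day Backpack",
     "description": "compact backpack for short hiking trips"},
    {"name": "Example Camp Stove",
     "description": "portable stove for cooking outdoors"},
]


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, catalog, top_k=2):
    """Return up to top_k items whose description shares words with the question."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(item["description"])), item) for item in catalog]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:top_k]]


def augment(question, documents):
    """Prepend the retrieved product data to the user question."""
    context = "\n".join(f"- {d['name']}: {d['description']}" for d in documents)
    return (
        "Answer using only the products listed below.\n\n"
        f"Products:\n{context}\n\n"
        f"Question: {question}"
    )


question = "What backpack should I take hiking?"
docs = retrieve(question, CATALOG)
prompt = augment(question, docs)
```

The augmented `prompt`, not the raw question, is what gets sent to the chat model, which is what steers the answer toward catalog items.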

+

+

+

+!!!info "Step 3: Build Basic PromptFlow"

+

+We now need to add in a step that also takes _customer history_ into account. To implement this, we need a tool that helps us _orchestrate_ these various steps in a more intuitive way, allowing user query and data to "flow" through the processing pipeline to generate the final response.

+

+---

+

+## Chat Orchestration (Flow)

+

+The previous step gives us a basic flow that augments predefined model behaviors to add product context. Now, we want to add _another tool_ (or processing function) that looks up customer details for additional prompt engineering. The end result should be a user experience that looks something like this, helping move the user closer to a purchase decision.

+

+

+

+!!!info "Step 4: Develop & Extend Flow"

+

+Flow orchestration is hard. This is where [PromptFlow](https://aka.ms/promptflow) helps, allowing us to insert a _customer lookup function_ seamlessly into the flow graph, to extend it. With the Azure AI platform, you get PromptFlow capabilities integrated seamlessly into both development (VS Code) and deployment (Azure AI Studio) environments for a streamlined end-to-end developer experience.
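
As a rough mental model of what "inserting a step into the flow" means, the sketch below chains plain Python functions that each read and extend a shared state dictionary. It is not the Prompt Flow API; the customer record, the stand-in retrieval step, and all function names are assumptions made for illustration.

```python
# Hypothetical customer records, invented for illustration only.
CUSTOMERS = {
    "c-001": {"name": "Example Customer", "orders": ["Example Trail Tent"]},
}


def lookup_customer(state):
    """Add the customer's record (or a guest placeholder) to the state."""
    customer = CUSTOMERS.get(state["customer_id"], {"name": "Guest", "orders": []})
    return {**state, "customer": customer}


def retrieve_products(state):
    """Stand-in for the retrieval step; returns a fixed hypothetical product."""
    return {**state, "products": ["Example Day Backpack"]}


def build_prompt(state):
    """Combine customer history, retrieved products and the question."""
    orders = ", ".join(state["customer"]["orders"]) or "none"
    products = ", ".join(state["products"])
    prompt = (
        f"Customer: {state['customer']['name']}\n"
        f"Previous purchases: {orders}\n"
        f"Relevant products: {products}\n"
        f"Question: {state['question']}"
    )
    return {**state, "prompt": prompt}


def run_flow(state, steps):
    """Pass the state through each step in order."""
    for step in steps:
        state = step(state)
    return state


steps = [lookup_customer, retrieve_products, build_prompt]
result = run_flow(
    {"customer_id": "c-001",
     "question": "What backpack should I buy given my previous purchases here?"},
    steps,
)
```

Because each step only reads and extends the state, adding the customer lookup is a one-line change to the `steps` list; a flow graph gives you the same property with visual tooling on top.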

+

+---

+

+## Evaluate & Deploy (E2E)

+

+This ends the _ideation_ phase of the application lifecycle we saw earlier. PromptFlow works seamlessly with Azure AI Studio to streamline the next two steps of the lifecycle (_evaluate_ and _deploy_), helping deliver the final Contoso Chat Support Agent AI experience on the Contoso Outdoors website. Here is what a multi-turn conversation with customers might look like now:

+

+

+

+Your customer support AI is a hit!

+

+Not only can it answer questions grounded in your product catalog, but it can refine or recommend responses based on the customer's purchase history. The conversational experience feels more natural to your customers and reduces their effort in finding relevant products in information-dense websites.

+

+You find customers are spending more time in chat conversations with your support agent AI, and finding new reasons to purchase your products.

+

+!!!example "Workshop: Build a production RAG with PromptFlow & Azure AI Studio"

+ In the next section, we'll look at how we can bring this story to life, step-by-step, using Visual Studio Code, Azure AI Studio and Prompt Flow. You'll learn how to provision Azure AI Services, engineer prompts with Retrieval-Augmented Generation to use your product data, then extend the PromptFlow to include customer lookup before evaluating and deploying the Chat AI application to Azure for real-world use.

\ No newline at end of file

diff --git a/contoso-chat/docs/01 | Introduction/3-environment.md b/contoso-chat/docs/01 | Introduction/3-environment.md

new file mode 100644

index 0000000..e3143c4

--- /dev/null

+++ b/contoso-chat/docs/01 | Introduction/3-environment.md

@@ -0,0 +1,35 @@

+# 03 | The Dev Environment

+

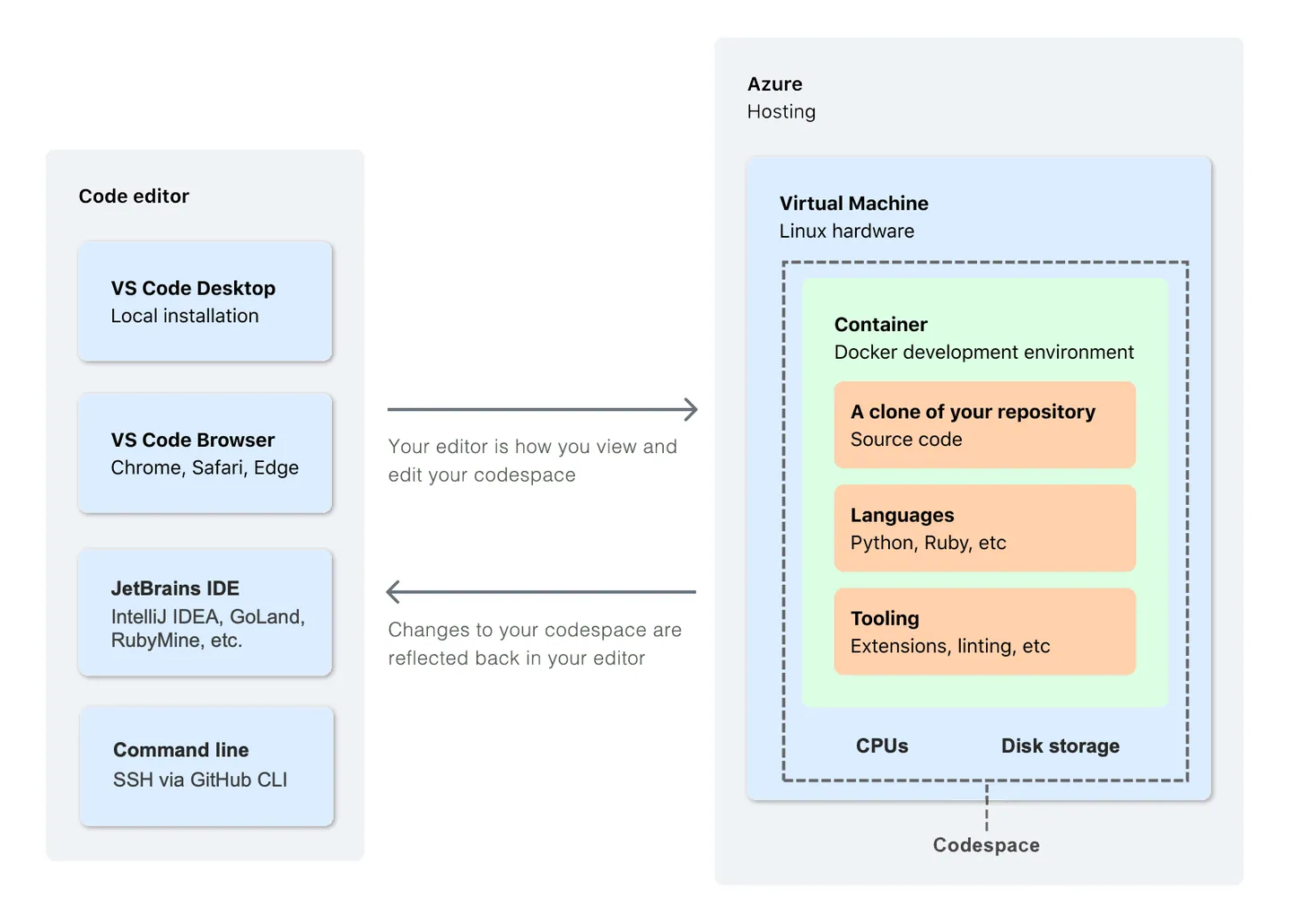

+The repository is instrumented with [dev container](https://containers.dev) configuration that provides a consistent pre-built development environment deployed in a Docker container. Launch this in the cloud with [GitHub Codespaces](https://docs.github.com/codespaces), or in your local device with [Docker Desktop](https://www.docker.com/products/docker-desktop/).

+

+---

+

+## Dev Tools

+

+In addition, we make use of these tools:

+

+- **[Visual Studio Code](https://code.visualstudio.com/) as the default editor** | Works seamlessly with dev containers. Extensions streamline development with Azure and Prompt Flow.

+- **[Azure Portal](https://portal.azure.com) for Azure subscription management** | Single pane of glass view into all Azure resources, activities, billing and more.

+- **[Azure AI Studio (Preview)](https://ai.azure.com)** | Single pane of glass view into all resources and assets for your Azure AI projects. Currently in preview (expect it to evolve rapidly).

+- **[Azure ML Studio](https://ml.azure.com)** | Enterprise-grade AI service for managing end-to-end ML lifecycle for operationalizing AI models. Used for some configuration operations in our workshop (expect support to move to Azure AI Studio).

+- **[Prompt Flow](https://github.com/microsoft/promptflow)** | Open-source tooling for orchestrating end-to-end development workflow (design, implementation, execution, evaluation, deployment) for modern LLM applications.

+

+---

+

+## Required Resources

+

+We make use of the following resources in this lab:

+

+!!!info "Azure Samples Used | **Give them a ⭐️ on GitHub**"

+

+ - [Contoso Chat](https://github.com/Azure-Samples/contoso-chat) - as the RAG-based AI app _we will build_.

+ - [Contoso Outdoors](https://github.com/Azure-Samples/contoso-web) - as the web-based app _using our AI_.

+

+!!!info "Azure Resources Used | **Check out the Documentation**"

+

+ - [Azure AI Resource](https://learn.microsoft.com/azure/ai-studio/concepts/ai-resources) - Top-level Azure resource for AI Studio, establishes working environment.

+ - [Azure AI Project](https://learn.microsoft.com/azure/ai-studio/how-to/create-projects) - saves state and organizes work for AI app development.

+ - [Azure AI Search](https://learn.microsoft.com/azure/search/search-what-is-azure-search) - get secure information retrieval at scale over user-owned content

+ - [Azure OpenAI](https://learn.microsoft.com/azure/ai-services/openai/overview) - provides REST API access to OpenAI's powerful language models.

+ - [Azure Cosmos DB](https://learn.microsoft.com/azure/cosmos-db/) - Fully managed, distributed NoSQL & relational database for modern app development.

+ - [Deployment Models](https://learn.microsoft.com/azure/ai-studio/how-to/model-catalog) - Deployment from model catalog by various criteria.

diff --git a/contoso-chat/docs/02 | Workshop/01 | Lab Overview/README.md b/contoso-chat/docs/02 | Workshop/01 | Lab Overview/README.md

new file mode 100644

index 0000000..0509e09

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/01 | Lab Overview/README.md

@@ -0,0 +1,66 @@

+# 1.1 | What You'll Learn

+

+This is a 60-75 minute workshop that consists of a series of lab exercises that teach you how to build a production RAG (Retrieval Augmented Generation) based LLM application using Promptflow and Azure AI Studio.

+

+You'll gain hands-on experience with the various steps involved in the _end-to-end application development lifecycle_ from prompt engineering to LLM Ops.

+

+---

+

+## Learning Objectives

+

+!!!info "By the end of this lab, you should be able to:"

+

+1. Explain **LLMOps** - concepts & differentiation from MLOps.

+1. Explain **Prompt Flow** - concepts & tools for building LLM Apps.

+1. Explain **Azure AI Studio** - features & functionality for streamlining E2E app development.

+1. **Design, run & evaluate** RAG apps - using the Promptflow Extension on VS Code

+1. **Deploy, test & use** RAG apps - from Azure AI Studio UI (no code experience)

+

+---

+

+## Pre-Requisites

+

+!!!info "We assume you have familiarity with the following:"

+

+1. Machine Learning & Generative AI _concepts_

+1. Python & Jupyter Notebook _programming_

+1. Azure, GitHub & Visual Studio Code _tooling_

+

+!!!info "You will need the following to complete the lab:"

+

+1. Your own laptop (charged) with a modern browser

+1. A GitHub account with GitHub Codespaces quota.

+1. An Azure subscription with Azure OpenAI access.

+1. An Azure AI Search resource with Semantic Ranker enabled.

+

+---

+

+## Dev Environment

+

+You'll make use of the following resources in this workshop:

+

+!!!info "Code Samples (GitHub Repositories)"

+

+ - [Contoso Chat](https://github.com/Azure-Samples/contoso-chat) - source code for the RAG-based LLM app.

+ - [Contoso Web](https://github.com/Azure-Samples/contoso-web) - source code for the Next.js-based Web app.

+

+

+!!!info "Developer Tools (local and cloud)"

+

+ - [Visual Studio Code](https://code.visualstudio.com/) - as the default editor

+ - [GitHub Codespaces](https://github.com/codespaces) - as the dev container

+ - [Azure AI Studio (Preview)](https://ai.azure.com) - for AI projects

+ - [Azure ML Studio](https://ml.azure.com) - for minor configuration

+ - [Azure Portal](https://portal.azure.com) - for managing Azure resources

+ - [Prompt Flow](https://github.com/microsoft/promptflow) - for streamlining end-to-end LLM app dev

+

+!!!info "Azure Resources (Provisioned in Subscription)"

+

+ - [Azure AI Resource](https://learn.microsoft.com/azure/ai-studio/concepts/ai-resources) - top-level AI resource, provides hosting environment for apps

+ - [Azure AI Project](https://learn.microsoft.com/azure/ai-studio/how-to/create-projects) - organize work & save state for AI apps.

+ - [Azure AI Search](https://learn.microsoft.com/azure/search/search-create-service-portal) - full-text search, indexing & information retrieval. (product data)

+ - [Azure OpenAI Service](https://learn.microsoft.com/azure/ai-services/openai/overview) - chat completion & text embedding models. (chat UI, RAG)

+ - [Azure Cosmos DB](https://learn.microsoft.com/azure/cosmos-db/nosql/quickstart-portal) - globally-distributed multi-model database. (customer data)

+ - [Azure Static Web Apps](https://learn.microsoft.com/azure/static-web-apps/overview) - optional, deploy Contoso Web application. (chat integration)

+

+===

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/02 | Setup Dev Environment/01-get-started.md b/contoso-chat/docs/02 | Workshop/02 | Setup Dev Environment/01-get-started.md

new file mode 100644

index 0000000..aa5b113

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/02 | Setup Dev Environment/01-get-started.md

@@ -0,0 +1,44 @@

+# 2.1 | Get Started

+

+!!!info "Step 01 | Launch browser. Open tab to Azure Portal. Log in."

+

+!!!example "Watch: Click [**here**](https://youtu.be/1Z4sgjXTKkU?t=94) for a video walkthrough of this step, for reference"

+

+---

+

+!!!warning "These instructions are for **self-guided learners** only."

+

+You must have an active Azure subscription with access to the Azure OpenAI services used in this lab. You should also have your own laptop with a charger (or sufficient battery to power a 75-minute session). Complete these steps to get started:

+

+ - [ ] **01** | Launch the Edge Browser (Full-Screen)

+ - [ ] **02** | Navigate to [https://portal.azure.com](https://portal.azure.com)

+ - [ ] **03** | Log in with **your** Azure credentials

+ - [ ] **04** | Click on **Resource Groups** option under _Navigate_

+ - [ ] **05** | Leave this browser tab open to this Azure Portal page.

+

+You can now move to the [**2.2 | Launch Codespaces**](./02-launch-codespaces.md) step.

+

+

+

+!!!warning "These instructions are for **Skillable platform learners** only."

+

+**You will be given a link to launch the Skillable Lab**. When launched, it will show you a screen (see below) with a built-in instruction manual on the right and a login screen on the left. Read the tips below, then switch over to that manual and continue following instructions there.

+

+

+

+The Skillable Platform comes with:

+

+- [X] **a built-in instruction manual**. See screen at right for inline instructions.

+- [X] **a pre-assigned Azure subscription**. Look for the (_username_, _password_) details.

+- [X] **handy one-click text entry cues**. See green elements with "T" prefix.

+

+Here are a few tips to keep in mind:

+

+ - The _text entry cues_ in the manual will auto-enter the associated text into the screen at left, at the current cursor location. Use this to reduce manual effort and ensure you use consistent and correct values for the many variables and literals in the lab.

+ - The _Azure subscription provided_ is recycled at the end of each lab. You don't need to worry about deleting resources or taking any cleanup actions on Azure. That will be done for you.

+ - You will still use _your personal GitHub login_ to launch GitHub Codespaces for the session. The lab can be completed within the generous "free quota" provided by GitHub Codespaces for personal accounts. If you have already used up that quota, you will either need a paid account or need to set up a new account to get a fresh quota allocation.

+ - To prevent unnecessary depletion of that free quota, **please remember to delete the Codespaces session** at the end of this workshop.

+

+---

+

+!!!success "Congratulations! You're authenticated on Azure. Move to step [02 | Launch Codespaces](02-launch-codespaces.md)."

diff --git a/contoso-chat/docs/02 | Workshop/02 | Setup Dev Environment/02-launch-codespaces.md b/contoso-chat/docs/02 | Workshop/02 | Setup Dev Environment/02-launch-codespaces.md

new file mode 100644

index 0000000..e3c3844

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/02 | Setup Dev Environment/02-launch-codespaces.md

@@ -0,0 +1,30 @@

+# 2.2 | Launch Codespaces

+

+!!!info "Step 02 | Fork the repo. Launch GitHub Codespaces on fork."

+

+!!!example "Click [**here**](https://youtu.be/1Z4sgjXTKkU?t=165) for a video walkthrough of _this step_ (from a Skillable-based session) for reference"

+

+---

+

+The [contoso-chat](https://aka.ms/aitour/contoso-chat) application sample comes with a _devcontainer.json_ configuration file that provides a pre-built development environment with minimal manual effort required in setup. Let's get that running.

+

+ - [ ] **01** | Log into GitHub with _your_ personal account.

+ - [ ] **02** | Navigate to this repo: [Azure-Samples/contoso-chat](https://aka.ms/aitour/contoso-chat)

+ - [ ] **03** | Fork the repo into your GitHub profile

+ - [ ] **04** | Click "Code" dropdown, select "Codespaces" tab

+ - [ ] **05** | Click "+" to create new codespace

+ - [ ] **06** | Verify you see 'Setting up your codespace' in new tab

+ - [ ] **07** | Click "View Logs" to track progress

+

+

+

+This step takes a few minutes to complete. It is configuring a Docker container (with a defined base image), installing the dependencies we've specified, and launching it with a built-in Visual Studio Code editor that is configured with required extensions.

+

+While we wait for setup to complete, let's move to the next step: [**3 | Provision Azure**](./../03%20|%20Provision%20Azure/03-create-airesource.md)

+

+---

+

+!!!success "Congratulations! Your development environment is being set up for you."

+

+

+

diff --git a/contoso-chat/docs/02 | Workshop/03 | Provision Azure/03-create-airesource.md b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/03-create-airesource.md

new file mode 100644

index 0000000..6e3f08e

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/03-create-airesource.md

@@ -0,0 +1,52 @@

+# 3.1 | Azure AI Resource

+

+!!!info "Step 03 | Provision an Azure AI Resource to host your application environment."

+

+!!!example "Click [**here**](https://youtu.be/1Z4sgjXTKkU?t=324) for a video walkthrough of _this step_ in a Skillable session, for reference."

+

+

+## Why Do We Need it?

+

+The [Azure AI resource](https://learn.microsoft.com/azure/ai-studio/concepts/ai-resources) provides the working environment for a team to build and manage AI applications. It is used to _provision and access multiple Azure AI services in a single step_. The [list of Azure AI services](https://learn.microsoft.com/azure/ai-services/what-are-ai-services?context=%2Fazure%2Fai-studio%2Fcontext%2Fcontext#available-azure-ai-services) tells you what's available. The default AI Resource setup gives you these:

+

+ - [Azure OpenAI Service](https://learn.microsoft.com/azure/ai-services/openai/) - perform a wide variety of natural language tasks (with LLMs)

+ - [Azure Content Safety](https://learn.microsoft.com/azure/ai-services/content-safety/) - detect & filter unwanted content (for responsible AI)

+ - [Azure Speech](https://learn.microsoft.com/azure/ai-services/speech-service/) - speech↔text conversions, translation, speaker recognition

+ - [Azure Vision](https://learn.microsoft.com/azure/ai-services/computer-vision/) - analyze content in images & videos

+

+The resource provides a centralized place for billing and configuring Azure AI services, adding connections to service endpoints, and provisioning compute for running relevant tasks.

+

+This is what the Azure AI Resource looks like at the end of our workshop. Note that a single AI resource can be associated with multiple _Azure AI projects_ (example underlined in red) that make use of the same provisioned AI services.

+

+

+

+

+

+## How Do We Create It?

+

+In this step, let's provision the Azure AI resource manually.

+

+- [ ] **01** | Navigate to **[https://ai.azure.com](https://ai.azure.com)** in a new browser tab.

+- [ ] **02** | Click **Login**. You will be automatically logged in with prior Azure auth.

+- [ ] **03** | Click **Manage** in navbar.

+- [ ] **04** | Click **"+ New Azure AI resource"** in page.

+- [ ] **05** | Complete the pop-up dialog with these details:

+ - **Resource name:** _contoso-chat-ai_

+ - **Azure subscription:** (leave default)

+ - Click **"Create new resource group"**

+ - **Resource group**: _contoso-chat-rg_

+ - **Location**: _Sweden Central_

+- [ ] **06** | Click "Next" in pop-up dialog

+ - Click **Create** to confirm resource creation.

+ - This takes a few minutes (see below). Wait for completion.

+- [ ] **07** | Return to "Manage" page & Refresh.

+ - Verify this Azure AI resource is listed.

+

+

+

+## What's Next?

+

+!!!success "Congratulations! Your Azure AI Resource was created successfully."

+

+Clicking the resource gives you a detail page similar to the one below, except you won't see any Projects listed (like the one underlined in red) that make use of this newly-created resource. Let's create our first Azure AI project - next.

+

diff --git a/contoso-chat/docs/02 | Workshop/03 | Provision Azure/04-create-aiproject.md b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/04-create-aiproject.md

new file mode 100644

index 0000000..8b7cc72

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/04-create-aiproject.md

@@ -0,0 +1,34 @@

+# 3.2 | Azure AI Project

+

+!!!info "Step 04 | Provision an Azure AI Project to organize work and save state."

+

+!!!example "Click [**here**](https://youtu.be/1Z4sgjXTKkU?t=529) for a video walkthrough of _this step_ in a Skillable session, for reference."

+

+## Why Do We Need It?

+

+The [Azure AI project](https://learn.microsoft.com/azure/ai-studio/how-to/create-projects) is used to _organize your work and save state, while you build custom AI apps_. Projects are hosted on an Azure AI resource. This is what the **final Azure AI project** looks like at the end of our workshop. Note how it is the single destination for discovering and configuring the various components required to _build_ our AI application.

+

+

+

+## How Do We Create It?

+

+> [!NOTE]

+_Continue here and create resource manually only if your Azure subscription was not pre-provisioned with a lab Resource Group_

+

+* [] **01** | Navigate to +++**https://ai.azure.com**+++

+ - Click the **"Build"** option in navbar.

+ - Click **"+ New project"** in Projects table.

+ - You should see a dialog pop up.

+* [] **02** | Complete "Project details" dialog

+ - **Project name**: +++contoso-chat-aiproj+++

+ - **Azure AI resource**: select resource you created.

+ - Click **"Create a project"**

+ - This will take a few minutes to complete

+ - You should be taken to created AI Project page

+* [] **03** | Go back to "Build" home page

+ - Click **Refresh**. (may take a few secs to appear)

+ - Verify your Azure AI Project resource is listed.

+

+## What's Next?

+

+🥳 **Congratulations!** Your Azure AI Project resource is ready.

diff --git a/contoso-chat/docs/02 | Workshop/03 | Provision Azure/05-model-deploy.md b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/05-model-deploy.md

new file mode 100644

index 0000000..5c7698f

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/05-model-deploy.md

@@ -0,0 +1,39 @@

+# 05. Create Model Deployments

+

+> [!NOTE]

+_Continue here and create resource manually only if your Azure subscription was not pre-provisioned with a lab Resource Group_

+

+* [] **01** | Switch browser tab to +++**https://ai.azure.com**+++

+ - Click the **"Build"** option in navbar.

+ - Click your AI Project to view its details page.

+

+* [] **02** | Create _gpt-3.5-turbo_ deployment

+ - Click the **"Deployments"** option in sidebar.

+ - Click **+Create**. Search for +++gpt-35-turbo+++

+ - Select & click **"Confirm"**.

+ - **Deployment Name**: +++gpt-35-turbo+++

+ - **Model version**: select **0613** (for quota)

+ - Click **Deploy**. Should be fairly quick.

+

+* [] **03** | Create _gpt-4_ deployment

+ - Click the **"Deployments"** option in sidebar.

+ - Click **+Create**. Search for +++gpt-4+++

+ - Select & click **"Confirm"**.

+ - **Deployment Name**: +++gpt-4+++

+ - **Model version**: leave it as default.

+ - Click **Deploy**. Should be fairly quick.

+

+* [] **04** | Create _text-embedding-ada-002_ deployment

+ - Click the **"Deployments"** option in sidebar.

+ - Click **+Create** . Search for +++text-embedding-ada-002+++

+ - Select & click **"Confirm"**.

+ - **Deployment Name**: +++text-embedding-ada-002+++

+ - **Model version**: leave it as default.

+ - Click **Deploy**. Should be fairly quick.

+

+* [] **05** | Return to "Deployments" home page

+ - Verify all 3 models are listed correctly
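
If you prefer to double-check the list rather than eyeball it, the verification can be sketched as a set difference. The deployment names come from the steps above; the `missing_deployments` helper is hypothetical, and you would paste in the names you see on the Deployments page yourself.

```python
# Deployment names used in steps 02-04 above.
EXPECTED_DEPLOYMENTS = {"gpt-35-turbo", "gpt-4", "text-embedding-ada-002"}


def missing_deployments(listed):
    """Return the expected deployment names absent from the listed ones, sorted."""
    return sorted(EXPECTED_DEPLOYMENTS - set(listed))


# Example: names copied by hand from the Deployments page.
print(missing_deployments(["gpt-4", "gpt-35-turbo"]))
```

An empty list means all three deployments are present under the names the later lab steps expect.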

+

+---

+

+🥳 **Congratulations!** Your Model Deployments are ready.

diff --git a/contoso-chat/docs/02 | Workshop/03 | Provision Azure/06-create-aisearch.md b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/06-create-aisearch.md

new file mode 100644

index 0000000..8dea4e0

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/06-create-aisearch.md

@@ -0,0 +1,27 @@

+# 06. Create AI Search Resource

+

+> [!NOTE]

+_Continue here and create resource manually only if your Azure subscription was not pre-provisioned with a lab Resource Group_

+

+This step is done on the Azure Portal, not Azure AI Studio.

+

+* [] **01** | Switch browser tab to +++**https://portal.azure.com**+++

+ - Click on **"Create a resource"** on the home page

+ - **Search** for +++Azure AI Search+++ in Create hub page.

+ - Click the **Create** drop down in the matching result.

+* [] **02** | Complete the "Create a search service" flow

+ - **Subscription** - leave default

+ - **Resource Group** - select the resource group for AI project

+ - **Service Name** - use +++contoso-chat-aisearch+++

+ - **Location** - use +++East US+++ (*Note the changed region!!*)

+ - **Pricing tier** - check that it is set to **Standard**

+* [] **03** | Review and Create resource

+ - Click **"Review and Create"** for a last review

+ - Click **"Create"** to confirm creation

+ - You should see a "Deployment in progress" indicator

+* [] **04** | Activate Semantic Search capability

+ - Wait for the deployment to complete

+ - Click **Go to resource** to visit AI Search resource page

+ - Click the **Semantic Ranker** option in the sidebar (left)

+ - Check **Select Plan** under Standard, to enable capability.

+ - Click **Yes** on the popup regarding service costs.

diff --git a/contoso-chat/docs/02 | Workshop/03 | Provision Azure/07-create-cosmosdb.md b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/07-create-cosmosdb.md

new file mode 100644

index 0000000..3257527

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/03 | Provision Azure/07-create-cosmosdb.md

@@ -0,0 +1,32 @@

+# 07. Create CosmosDB Resource

+

+> [!NOTE]

+_Continue here and create resource manually only if your Azure subscription was not pre-provisioned with a lab Resource Group_

+

+_We'll create this on Azure Portal, not Azure AI Studio._

+

+* [] **01** | Switch browser tab to +++**https://portal.azure.com**+++

+ - Click on **"Create a resource"** on the home page

+ - **Search** for +++Azure CosmosDB+++ in Create hub page.

+ - Click the **Create** drop down in the matching result.

+ - You should see **"Which API best suits your workload?"**

+* [] **02** | Complete the Azure CosmosDB for NoSQL flow

+ - Click **Create** on the "Azure CosmosDB for NoSQL" option

+ - **Subscription** - leave default

+ - **Resource Group** - select the resource group for AI project

+ - **Account Name** - use +++contoso-chat-cosmosdb+++

+ - **Location** - use +++Sweden Central+++ (same as others)

+ - Leave other options at defaults.

+

+> [!hint] If you get an error indicating the _Account Name_ already exists, just add a number to make it unique - e.g., _contoso-chat-cosmosdb-1_

+

+* [] **03** | Review and Create resource

+ - Click **"Review and Create"** for a last review

+ - Click **"Create"** to confirm creation

+ - You should see a "Deployment in progress" indicator

+

+Deployment completes in minutes. Visit resource to verify creation.

+

+---

+

+🥳 **Congratulations!** Your Azure CosmosDB Resource is ready.

diff --git a/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/08-az-login.md b/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/08-az-login.md

new file mode 100644

index 0000000..cda49bb

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/08-az-login.md

@@ -0,0 +1,21 @@

+# 08. VSCode Azure Login

+

+> [!NOTE]

+_This assumes you did the **[02. Launch GitHub Codespaces](#2-launch-github-codespaces)** step previously and left that tab open for dev container setup to complete._

+

+* [] **01** | Switch browser tab to your Visual Studio Code editor session

+ - You should see a Visual Studio Code editor

+ - You should see a terminal open in editor

+

+> [!hint]

+> If VS Code Terminal is not open by default, click hamburger menu (top left), look for _Terminal_ option & Open New Terminal.

+

+* [] **02** | Use Azure CLI from the terminal to log in

+ - Enter command: +++az login --use-device-code+++

+ - Open +++https://microsoft.com/devicelogin+++ in new tab

+ - Copy-paste code from Azure CLI into the dialog you see here

+ - On success, close this tab and return to VS Code tab

+

+---

+

+🥳 **Congratulations!** You're logged into Azure on VS Code.

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/09-az-config.md b/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/09-az-config.md

new file mode 100644

index 0000000..e2b619a

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/09-az-config.md

@@ -0,0 +1,19 @@

+# 09. VSCode Config Azure

+

+> [!NOTE]

+_This assumes you have the Azure Resource Group and related resources provisioned correctly from prior steps. We'll now configure Visual Studio Code to use our provisioned Azure resources._

+

+* [] **01** | Download the 'config.json' for this Azure AI project

+ - Visit +++https://portal.azure.com+++ in a new browser tab

+ - Click on your created resource group (_contoso-chat-rg_)

+ - Click on your Azure AI project resource (_contoso-chat-aiproj_)

+ - Look for the **download config.json** option under Overview

+ - Click to download the file to the Windows 11 VM

+ - Open the file and **Copy** the contents to clipboard.

+

+* [] **02** | Update your VS Code project with these values

+ - Switch browser tab to your Visual Studio Code editor

+ - Open VS Code Terminal, enter: +++touch config.json+++

+ - This creates an empty config.json file in root directory.

+ - Open file in VS Code and **Paste** data from clipboard

+ - Save the file.
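Before moving on, it can help to confirm that the pasted file is valid JSON and carries the values the SDK needs. The sketch below is a minimal check, assuming the downloaded file uses the usual `subscription_id`, `resource_group`, and `workspace_name` keys; the helper name `missing_config_keys` is hypothetical and not part of the lab code.

```python
import json
from pathlib import Path

# Assumption: the downloaded config.json uses these three workspace keys.
REQUIRED_KEYS = ("subscription_id", "resource_group", "workspace_name")


def missing_config_keys(text: str) -> list[str]:
    """Return the required keys that are absent or empty in the JSON text."""
    data = json.loads(text)  # raises json.JSONDecodeError if the paste was incomplete
    return [key for key in REQUIRED_KEYS if not data.get(key)]


if __name__ == "__main__":
    path = Path("config.json")  # created in the project root by the step above
    if path.exists():
        missing = missing_config_keys(path.read_text(encoding="utf-8"))
        print("config.json looks complete" if not missing else f"Missing keys: {missing}")
    else:
        print("config.json not found in the current directory")
```

Run it from the project root in the VS Code terminal. An empty "missing" list suggests the paste succeeded.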

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/10-env-config.md b/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/10-env-config.md

new file mode 100644

index 0000000..4aa9dcd

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/04 | Configure VS Code/10-env-config.md

@@ -0,0 +1,38 @@

+# 10. VSCode Config Env

+

+> [!hint]

+_This assumes the Azure Resource Group and related resources were set up previously. We'll now configure service endpoints and keys as env vars for programmatic access from Jupyter Notebooks._

+

+* [] **01** | Keep your Visual Studio Code editor open in one tab

+ - Find the **local.env** file in the root directory

+ - Open VS Code Terminal, enter: +++cp local.env .env+++

+ - This should copy "local.env" to a new **.env** file.

+ - Open ".env" in Visual Studio Code, keep tab open.

+

+> [!hint]

+This involves multiple Copy-Paste actions. If you have trouble pasting into VS Code window, right-click and choose **Paste** from the menu.

+

+* [] **02** | Update the Azure OpenAI environment variables

+ - Open +++https://ai.azure.com+++ in a new tab

+ - Click **"Build"**, then open your AI project page.

+ - Click **"Settings"**, click **"Show endpoints"** in the first tile

+ - Copy the **Azure.OpenAI** endpoint value to "CONTOSO_AI_SERVICES_ENDPOINT" in ".env"

+ - Copy the **Primary key** value to "CONTOSO_AI_SERVICES_KEY" in ".env"

+

+* [] **03** | Update the Azure AI Search environment variables

+ - Open +++https://portal.azure.com+++ in a new tab

+ - Open your Azure AI Search resource page (_contoso-chat-aisearch_)

+ - Copy the **Uri** value from the Overview page to "CONTOSO_SEARCH_SERVICE" in ".env"

+ - Copy the **Primary admin key** value from the Keys page to "CONTOSO_SEARCH_KEY" in ".env"

+

+* [] **04** | Update the Azure CosmosDB environment variables

+ - Open +++https://portal.azure.com+++ in a new tab

+ - Open your Azure CosmosDB resource page, click **Keys**

+ - Copy the **URI** value to "COSMOS_ENDPOINT" in ".env"

+ - Copy the **PRIMARY KEY** value to "COSMOS_KEY" in ".env"

+

+* [] **05** | Save the ".env" file.
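Since this step involves several copy-paste actions, a quick check for values that were left blank can save debugging time later. The following is a minimal sketch using only the standard library. The variable names are the ones mentioned in the steps above (adjust them if your "local.env" uses different names), and the helpers `parse_env` and `unset_vars` are hypothetical, not part of the lab code.

```python
from pathlib import Path

# Variable names as mentioned in the steps above; adjust to match local.env.
REQUIRED_VARS = (
    "CONTOSO_AI_SERVICES_ENDPOINT",
    "CONTOSO_AI_SERVICES_KEY",
    "CONTOSO_SEARCH_SERVICE",
    "CONTOSO_SEARCH_KEY",
    "COSMOS_ENDPOINT",
    "COSMOS_KEY",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple NAME=value lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, _, value = line.partition("=")
        # Drop enclosing quotes so an empty "" counts as unset.
        values[name.strip()] = value.strip().strip('"').strip("'")
    return values


def unset_vars(text: str) -> list[str]:
    """Return the required variables that are missing or empty."""
    values = parse_env(text)
    return [name for name in REQUIRED_VARS if not values.get(name)]


if __name__ == "__main__":
    env_path = Path(".env")
    if env_path.exists():
        print("Unset variables:", unset_vars(env_path.read_text(encoding="utf-8")))
    else:
        print(".env not found in the current directory")
```

The script only reports variable names, never values, so keys are not echoed to the terminal.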

+

+---

+

+🥳 **Congratulations!** Your VS Code env variables are updated!

diff --git a/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/11-populate-index.md b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/11-populate-index.md

new file mode 100644

index 0000000..96ded30

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/11-populate-index.md

@@ -0,0 +1,23 @@

+# 11. VSCode Populate Search

+

+> [!NOTE]

+_This assumes you set up the Azure AI Search resource earlier. In this section, we'll populate it with product data and create the index._

+

+* [] **01** | Return to the Visual Studio Code editor tab

+ - Locate the "data/product_info/" folder

+ - Open the **create-azure-search.ipynb** Jupyter Notebook.

+

+* [] **02** | Run the notebook to populate search index

+ - Click **Select Kernel** (top right)

+ - Pick "Python Environments" and select recommended option

+ - Click **Clear All Outputs** then **Run All**

+ - Verify that all code cells executed correctly.

+

+* [] **03** | Verify the search index was created

+ - Open +++https://portal.azure.com+++ to Azure AI Search resource

+ - Click the **Indexes** option in sidebar to view indexes

+ - Verify that the **contoso-products** search index was created.

+

+---

+

+🥳 **Congratulations!** Your Azure AI Search index is ready!

diff --git a/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/12-populate-db.md b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/12-populate-db.md

new file mode 100644

index 0000000..c680636

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/12-populate-db.md

@@ -0,0 +1,22 @@

+# 12. VSCode Populate Database

+

+> [!NOTE]

+_This assumes you set up the Azure CosmosDB resource earlier. In this section, we'll populate it with customer data._

+

+* [] **01** | Return to the Visual Studio Code editor tab

+ - Locate the "data/customer_info/" folder

+ - Open the **create-cosmos-db.ipynb** Jupyter Notebook.

+

+* [] **02** | Run the notebook to populate customer database

+ - Click **Select Kernel**, set recommended Python environment

+ - Click **Clear All Outputs** then **Run All** & verify completion

+

+* [] **03** | Verify the customer database was created

+ - Open +++https://portal.azure.com+++ to Azure CosmosDB resource

+ - Click the **Data Explorer** option in sidebar to view data

+ - Verify that the **contoso-outdoor** database was created

+ - Verify that it contains a **customers** container

+

+---

+

+🥳 **Congratulations!** Your Azure CosmosDB database is ready!

diff --git a/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/13-local-connections.md b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/13-local-connections.md

new file mode 100644

index 0000000..7abc722

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/13-local-connections.md

@@ -0,0 +1,26 @@

+# 13. VSCode Config Connections

+

+> [!NOTE]

+_This assumes you completed all Azure resource setup and VS Code configuration for those resources. Now let's set up **local Connections** so we can run the prompt flow in VS Code later._

+

+* [] **01** | Return to the Visual Studio Code editor tab

+

+* [] **02** | Set up a local third-party backend to store keys

+ - Open the Visual Studio Code terminal

+ - Type +++pip install keyrings-alt+++ and hit Enter

+ - Installation should complete quickly

+

+* [] **03** | Run the notebook to set local prompt flow connections

+ - Locate the "connections/" folder

+ - Open the **create-connections.ipynb** Jupyter Notebook.

+ - Click **Select Kernel**, set recommended Python environment

+ - Click **Clear All Outputs** then **Run All** & verify completion

+

+* [] **04** | Validate connections were created

+ - Return to Visual Studio Code terminal

+ - Type +++pf connection list+++ and hit Enter

+ - Verify 3 connections were created *with these names* **"contoso-search", "contoso-cosmos", "aoai-connection"**
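The name check can also be scripted. The sketch below assumes that the listing is available as a JSON array of objects with a `name` field (for example, saved from the command output); that shape is an assumption, and `missing_connections` is a hypothetical helper.

```python
import json

# Connection names the flow expects, taken from the step above.
EXPECTED = {"contoso-search", "contoso-cosmos", "aoai-connection"}


def missing_connections(listing_json: str) -> list[str]:
    """Return expected connection names absent from a JSON listing.

    Assumption: the listing is a JSON array of objects with a "name" field.
    """
    names = {item.get("name") for item in json.loads(listing_json)}
    return sorted(EXPECTED - names)


if __name__ == "__main__":
    # Illustrative listing with one connection missing.
    sample = '[{"name": "contoso-search"}, {"name": "aoai-connection"}]'
    print(missing_connections(sample))
```

An empty result means all three names are present. The names must match exactly because the flow refers to connections by name.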

+

+---

+

+🥳 **Congratulations!** Your *local connections* to the Azure AI project are ready!

diff --git a/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/14-azure-connections.md b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/14-azure-connections.md

new file mode 100644

index 0000000..517c162

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/05 | Setup Promptflow/14-azure-connections.md

@@ -0,0 +1,49 @@

+# 14. Azure Config Connections

+

+> [!NOTE]

+_Your Azure resources are set up. Your local connections are configured. Now let's create **cloud connections** to run prompt flow in Azure later._

+

+* [] **01** | Switch browser tab to +++**https://ai.azure.com**+++

+ - Click the **"Build"** option in navbar.

+ - Click your AI Project to view its details page.

+ - Click the **"Settings"** option

+ - Locate the "Connections" tab and click **"View All"**.

+

+> [!IMPORTANT]

+_Connection names used are critical and must match the names given below. Keep ".env" open for quick access to required values. **Do not copy the enclosing quotes** when you copy/paste values from ".env"._

+

+* [] **02** | Click **New Connection** (create +++contoso-search+++)

+ - **Service** = "Azure AI Search (Cognitive Services)"

+ - **Endpoint** = from .env "CONTOSO_SEARCH_SERVICE_ENDPOINT"

+ - **API key** = from .env "CONTOSO_SEARCH_KEY"

+ - **Connection name** = +++contoso-search+++

+

+* [] **03** | Click **New Connection** (create +++aoai-connection+++)

+ - **Service** = "Azure OpenAI"

+ - **API base** = from .env "CONTOSO_AI_SERVICES_ENDPOINT"

+ - **API key** = from .env "CONTOSO_AI_SERVICES_KEY"

+ - **Connection name** = +++aoai-connection+++

+

+> [!hint]

+The next step uses Azure Machine Learning Studio instead of Azure AI Studio. We expect this to change with a future product update, allowing you to create _all_ connections from Azure AI Studio.

+

+* [] **04** | Creating **Custom Connection** (+++contoso-cosmos+++)

+ - Visit +++https://ml.azure.com+++ instead

+ - Under **Recent Workspaces**, click project (_contoso-chat-aiproj_)

+ - Select **Prompt flow** (sidebar), then **Connections** (tab)

+ - Click **Create** and select **Custom** from dropdown

+ - **Name**: +++contoso-cosmos+++

+ - **Provider**: Custom (default)

+ - **Key-value pairs**: Add 4 entries (get env var values from .env)

+ - key: +++key+++, value: "COSMOS_KEY", **check "is secret"**

+ - key: +++endpoint+++, value: "COSMOS_ENDPOINT"

+ - key: +++containerId+++, value: +++customers+++

+ - key: +++databaseId+++, value: +++contoso-outdoor+++

+ - Click **Save** to complete step.

+

+* [] **05** | Click **Refresh** on menu bar to validate creation

+ - Verify 3 connections were created *with these names* **"contoso-search", "contoso-cosmos", "aoai-connection"**

+

+---

+

+🥳 **Congratulations!** Your *cloud connections* to the Azure AI project are ready!

diff --git a/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/15-pf-explore.md b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/15-pf-explore.md

new file mode 100644

index 0000000..e927434

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/15-pf-explore.md

@@ -0,0 +1,43 @@

+# 15. PromptFlow: Codebase

+

+> [!NOTE]

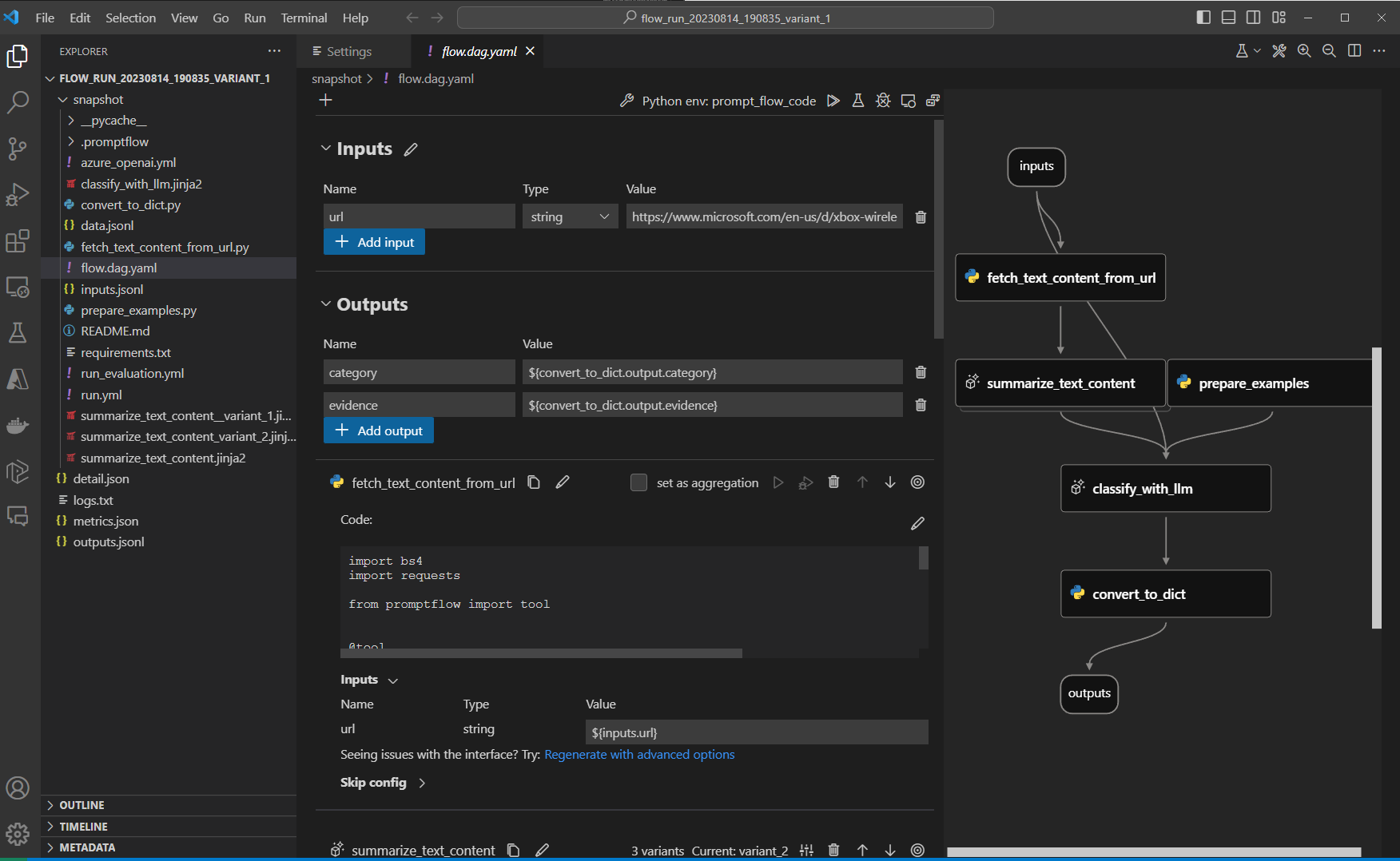

+> Our environment, resources and connections are configured. Now, let's learn about prompt flow and how it works. A **prompt flow is a DAG (directed acyclic graph)** made up of **nodes** connected together in a **flow**. Each node is a **function tool** (written in Python) that can be edited and customized to suit your needs.

+

+* [] **01** | Let's explore the Prompt Flow extension

+ - Click the "Prompt Flow" icon in the Visual Studio Code sidebar

+ - You should see a slide-out menu with the following sections

+ - **Quick Access** - Create new flows, install dependencies, etc.

+ - **Flows** - Lists flows in project (defined by _flow.dag.yaml_)

+ - **Tools** - Lists available _function_ tools (used in flow nodes)

+ - **Batch Run History** - flows run against data or other runs

+ - **Connections** - Lists connections & helps create them

+ - We'll revisit this later as needed, when executing prompt flows.

+

+* [] **02** | Let's understand prompt flow folders & structure

+ - Click the "Explorer" icon in the Visual Studio Code sidebar

+ - Promptflow can create [three kinds of flows](https://microsoft.github.io/promptflow/how-to-guides/init-and-test-a-flow.html#initialize-flow):

+ - standard = basic flow folder structure

+ - chat = enhances standard flow for **conversations**

+ - evaluation = special flow, **assesses** outputs of other flows

+ - Explore the "contoso_chat" folder for a chat flow:

+ - **flow.dag.yaml** - defines the flow (inputs, outputs, nodes)

+ - **source code** (.py, .jinja2) - function _tools_ used by flow

+ - **requirements.txt** - defines Python dependencies for flow

+ - Explore the "eval/" folder for examples of eval flows

+ - **eval/groundedness** - tests for single metric (groundedness)

+ - **eval/multi_flow** - tests for multiple metrics (groundedness, fluency, coherence, relevance)

+ - **eval/evaluate-chat-prompt-flow.ipynb** - shows how these are used to evaluate the _contoso_chat_ flow.

+

+* [] **03** | Let's explore a prompt flow in code

+ - Open Visual Studio Code file: _contoso-chat/**flow.dag.yaml**_

+ - You should see a declarative file with these sections:

+ - **environment** - requirements.txt to install dependencies

+ - **inputs** - named inputs & properties for flow

+ - **outputs** - named outputs & properties for flow

+ - **nodes** - processing functions (tools) for workflow

+

+The "prompt flow" is defined by the **flow.dag.yaml** but the text view does not help us understand the "flow" of this process. Thankfully, the Prompt Flow extension gives us a **Visual Editor** that can help. Let's explore it.

+

+---

+

+🥳 **Congratulations!** You're ready to explore a prompt flow visually!

diff --git a/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/16-pf-visual.md b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/16-pf-visual.md

new file mode 100644

index 0000000..0c6b2b4

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/16-pf-visual.md

@@ -0,0 +1,36 @@

+# 16. PromptFlow: Visual Editor

+

+> [!hint]

+> In the previous section, you should have opened Visual Studio Code, navigated to the _contoso-chat_ folder, and opened the _flow.dag.yaml_ file in the editor pane. We also assume you have the _Prompt Flow_ extension installed correctly (see VS Code extensions sidebar).

+

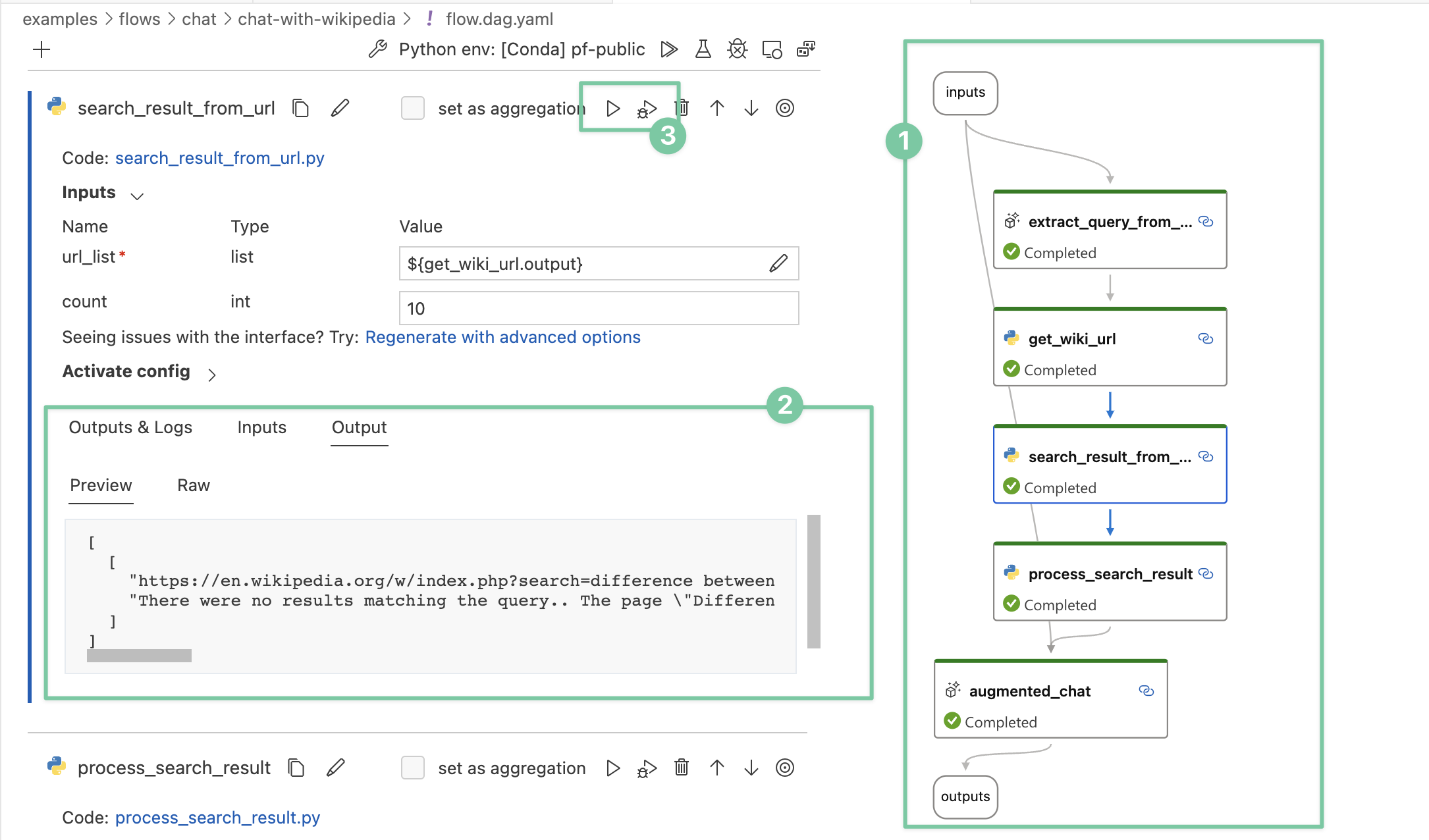

+* [] **01** | View _contoso-chat/flow.dag.yaml_ in the Visual Studio Code editor

+ - Make sure your cursor is at the top of the file in editor panel.

+ - You should see a line of menu options similar to the image below. _Note: This is an example and not an exact screenshot for this project_. **It may take a couple of seconds for the menu options to appear** so be patient.

+

+

+* [] **02** | Click _Visual editor_ link **or** use keyboard shortcut: Ctrl + k,v

+ - You should get a split screen view with a visual graph on the right and sectioned forms on the left, as shown below. _Note: This is an example and not an exact screenshot for this project_.

+

+ - Click on any of the "nodes" in the graph on the right

+ - The left pane should scroll to the corresponding declarative view, to show the node details.

+ - Let's explore **our** prompt flow components visually, next.

+

+* [] **03** | Explore prompt flow **inputs**. These start the flow.

+ - **customer_id** - to look up customer in CosmosDB

+ - **question** - the question that customer is asking

+ - **chat_history** - the conversation history with customer

+

+* [] **04** | Explore prompt flow **nodes**. These are the processing functions.

+ - **question_embedding** - uses _embedding model_ to embed question text in vector search query

+ - **retrieve_documents** - uses query to retrieve most relevant docs from AI Search index

+ - **customer_lookup** - looks up customer record in parallel, from Azure Cosmos DB database

+ - **customer_prompt** - populate customer prompt "template" with customer & search results

+ - **llm_response** - uses _chat completion model_ to generate a response to customer query using this enhanced prompt

+* [] **05** | Explore prompt flow **outputs**. These end the flow.

+ - Returns the LLM-generated response to the customer

+

+This defines the _processing pipeline_ for your LLM application from user input, to returned response. To _execute_ the flow, we need a valid Python runtime. We can use the default runtime available to use in GitHub Codespaces to run this from Visual Studio Code. Let's do that next.
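To make the "flow as a DAG" idea concrete, the node list above can be modeled as a small dependency graph and sorted into a valid execution order. This is only an illustrative sketch: the edges are an assumption inferred from the node descriptions, not read from _flow.dag.yaml_, and Prompt Flow's own scheduler is not shown here.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each node maps to the set of nodes it depends on.
# Assumption: edges are inferred from the node descriptions above.
FLOW = {
    "question_embedding": set(),                       # fed by the "question" input
    "retrieve_documents": {"question_embedding"},      # needs the embedded query
    "customer_lookup": set(),                          # fed by the customer id input
    "customer_prompt": {"retrieve_documents", "customer_lookup"},
    "llm_response": {"customer_prompt"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; raises graphlib.CycleError if the graph has a cycle."""
    return list(TopologicalSorter(graph).static_order())


order = execution_order(FLOW)
print(order)
```

In this model, `customer_lookup` has no dependency on the retrieval branch, which is why it can run in parallel with it, and `llm_response` always comes last because every other node feeds into it.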

+

+---

+

+🥳 **Congratulations!** You're ready to run your Prompt flow.

diff --git a/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/17-pf-run.md b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/17-pf-run.md

new file mode 100644

index 0000000..5d78d49

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/17-pf-run.md

@@ -0,0 +1,50 @@

+# 17. PromptFlow: Run

+

+> [!hint]

+> In the previous section, you should have opened Visual Studio Code, navigated to the _contoso-chat_ folder, and opened the _flow.dag.yaml_ file in the Visual Editor view.

+

+* [] **01** | View _contoso-chat/flow.dag.yaml_ in the Visual Studio Code editor

+ - Make sure your cursor is at the top of the file in editor panel.

+ - Make sure you are in the Visual editor with a view like this. _Note: this is an example screenshot, and not the exact one for this lab_.

+

+

+* [] **02** | Run the prompt flow locally

+ - _Tip:_ Keep VS Code terminal open to view console output.

+ - Look at the 2nd line (starting with **"+LLM"**) in Visual Editor.

+ - Look for a 'tool' icon at right: _It should show a valid Python env_.

+ - Look for a 'play' icon next to it: _The tooltip should read "Run All"_.

+ - Click _Run All_ (or use "Shift + F5" on keyboard)

+ - Select "Run it with standard mode" in dropdown

+

+* [] **03** | Explore inputs and outputs of flow

+ - The _Inputs_ section will have these values:

+ - **chat_history**: prior turns in chat (default=blank)

+ - **question**: customer's most recent question

+ - **customerid**: to help look up relevant customer context (e.g., order history) to refine response with more relevant context. This is the basis for RAG (retrieval-augmented generation).

+ - The _Contoso Outdoors_ web app provides these inputs (in demo)

+ - Note the contents of the _Flow run outputs_ tab under "Outputs" section

+ - Use the navigation tools to show the **complete answer**: _Is it a good answer to the input question?_

+ - Use the navigation tools to explore the **returned context** (products relevant to the customer's question). _Are these good selections for context?_

+

+* [] **04** | Explore Individual Flow Nodes

+ - Observe node status colors in VS Code (green=success, red=error)

+ - Click any node. The left pane will scroll to show execution details.

+ - Click the _Code:_ link in component to see function executed here.

+

+* [] **05** | Explore Run Stats & Traces

+ - Click the "Terminal" tab. It should show final response returned.

+ - Click the "Prompt Flow" tab. Select a node in visual editor.

+ - Tab shows "node name, Tokens, duration" stats for node.

+ - Click the line in table. You should see more details in pane.

+

+* [] **06** | Try a new input

+ - In Inputs, **change question** to _"What is a good tent for a beginner?"_

+ - Click **Run All**, explore outputs as before.

+ - In Inputs, **change customerId** (to value between 1 and 12)

+ - Click **Run All**, compare this output to before.

+ - Experiment with other input values and analyze outputs.

+

+

+---

+

+🥳 **Congratulations!** You ran your contoso-chat prompt flow successfully in the local runtime on GitHub Codespaces. Next, we want to _evaluate_ the performance of the flow.

diff --git a/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/18-pf-evaluate.md b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/18-pf-evaluate.md

new file mode 100644

index 0000000..75ea070

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/06 | Run & Evaluate Flow/18-pf-evaluate.md

@@ -0,0 +1,61 @@

+# 18. PromptFlow: Evaluate

+

+> [!NOTE]

+> You've built and run the _contoso-chat_ prompt flow locally using the Visual Studio Code Prompt Flow extension and SDK. Now it's time to **evaluate** the quality of your LLM app response to see if it's performing up to expectations. Let's dive in.

+

+* [] **01** | First, run **evaluate-chat-prompt-flow.ipynb**

+ - Locate the "eval/" folder

+ - Open **evaluate-chat-prompt-flow.ipynb**.

+ - Click **Select Kernel**, use default Python env

+ - Click **Clear All Outputs**, then **Run All**

+ - Execution **takes some time**. Until then ....

+

+* [] **02** | Let's explore what the notebook does

+ - Keep the Jupyter notebook open in the "Editor" pane

+ - Open VS Code "Explorer" pane, select **Outline**

+ - You should see these code sections:

+ - Local Evaluation - **Groundedness**

+ - Local Evaluation - **Multiple Metrics**

+ - AI Studio Azure - **Batch run, json dataset**

+ - Cloud Evaluation - **Multi-flow, json dataset**

+ - Let's understand what each does.

+

+* [] **03** | Local Evaluation : Explore **Groundedness**

+ - Evaluates **contoso-chat** for _groundedness_

+ - This [**measures**](https://learn.microsoft.com/azure/ai-studio/concepts/evaluation-metrics-built-in#ai-assisted-groundedness-1) how well the model's generated answers align with information from the source data (user-defined context).

+ - **Example**: We test if the answer to the question _"Can you tell me about your jackets"_ is grounded in the product data we indexed previously.

+

+* [] **04** | Local Evaluation : Explore **Multiple Metrics**

+ - Evaluates **contoso-chat** using [4 key metrics](https://learn.microsoft.com/azure/ai-studio/concepts/evaluation-metrics-built-in#metrics-for-multi-turn-or-single-turn-chat-with-retrieval-augmentation-rag):

+ - **Groundedness** = How well do the model's generated answers align with information from the source (product) data?

+ - **Relevance** = Extent to which the model's generated responses are pertinent and directly related to the given questions.

+ - **Coherence** = Ability to generate text that reads naturally, flows smoothly, and resembles human-like language in responses.

+ - **Fluency** = Measures the grammatical proficiency of a generative AI's predicted answer.

+

+> [!NOTE]

+> The above evaluation tests ran against a single test question. For more comprehensive testing, we can use Azure AI Studio to run **batch tests** using the same evaluation prompt, with the **data/salestestdata.jsonl** dataset. Let's understand how that works.

+

+* [] **05** | Base Run : **With evaluation JSON dataset**

+ - Use Azure AI Studio with automatic runtime

+ - Use "data/salestestdata.jsonl" as _batch test data_

+ - Do a _base-run_ using contoso-chat prompt flow

+ - View results in notebook - visualized as table

+

+* [] **06** | Eval Run : **With evaluation JSON dataset**

+ - Use Azure AI Studio with automatic runtime

+ - Use "data/salestestdata.jsonl" as _batch test data_

+ - Do multi-flow eval with _base run as variant_

+ - View results in notebook - visualized as table

+ - Results should now show the 4 eval metrics

+ - Optionally, click **Web View** link in final output

+ - See **Visualize outputs** (Azure AI Studio)

+ - Learn more on [viewing eval results](https://learn.microsoft.com/azure/ai-studio/how-to/flow-bulk-test-evaluation#view-the-evaluation-result-and-metrics)

+

+* [] **07** | Review Evaluation Output

+ - Check the Jupyter Notebook outputs

+ - Verify execution run completed successfully

+ - Review evaluation metrics to gain insight
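When reviewing batch results, it is often easier to compare runs by their per-metric averages than by reading the table row by row. The sketch below shows one way to summarize rows of scores. The column names and the sample scores are hypothetical, chosen only for illustration; the notebook's actual output columns may differ.

```python
from statistics import mean

# The four metrics discussed above. Column names are an assumption.
METRICS = ("groundedness", "relevance", "coherence", "fluency")


def summarize(rows: list[dict]) -> dict[str, float]:
    """Average each metric across rows, ignoring rows where a score is missing."""
    summary = {}
    for metric in METRICS:
        scores = [row[metric] for row in rows if row.get(metric) is not None]
        if scores:
            summary[metric] = round(mean(scores), 2)
    return summary


if __name__ == "__main__":
    # Hypothetical scores for two test questions (not real lab results).
    rows = [
        {"groundedness": 5, "relevance": 4, "coherence": 4, "fluency": 5},
        {"groundedness": 3, "relevance": 4, "coherence": 5, "fluency": None},
    ]
    print(summarize(rows))
```

A low average on one metric (for example, groundedness) is a hint to inspect the individual rows for that metric rather than a verdict on its own.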

+

+---

+

+🥳 **Congratulations!** You've evaluated your contoso-chat flow for single-data and batch data runs, using single-metric and multi-metric eval flows. _Now you're ready to deploy the flow so apps can use it_.

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/07 | Deploy & Use Flow/19-pf-deploy.md b/contoso-chat/docs/02 | Workshop/07 | Deploy & Use Flow/19-pf-deploy.md

new file mode 100644

index 0000000..376d340

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/07 | Deploy & Use Flow/19-pf-deploy.md

@@ -0,0 +1,94 @@

+# 19. PromptFlow: Deploy

+

+> [!hint]

+> Till now, you've explored, built, tested, and evaluated, the prompt flow _from Visual Studio Code_, as a developer. Now it's time to _deploy the flow to production_ so applications can use the endpoint to make requests and receive responses in real time.

+

+**Deployment Options**: We will be [using Azure AI Studio](https://learn.microsoft.com/azure/ai-studio/how-to/flow-deploy?tabs=azure-studio) to deploy our prompt flow from a UI. You can also deploy the flow programmatically [using the Azure AI Python SDK](https://learn.microsoft.com/azure/ai-studio/how-to/flow-deploy?tabs=python).

+

+**Deployment Process**: We'll discuss the 4 main steps:

+- First, upload the prompt flow to Azure AI Studio

+- Next, test the uploaded flow, then deploy it interactively

+- Finally, use deployed endpoint (from built-in test)

+- Optionally: use deployed endpoint (from real app)

+

+>[!note] **1: Upload Prompt Flow** to Azure AI Studio.

+

+* [] **01** | Return to the Visual Studio Code editor tab

+ - Locate the "deployment/" folder

+ - Open **push_and_deploy_pf.ipynb**.

+ - Click **Select Kernel**, use default Python env

+ - Click **Clear All Outputs**, then **Run All**

+ - This should complete in just a few minutes.

+

+* [] **02** | Verify Prompt Flow was created

+ - Click the **flow_portal_url** link in output

+ - It should open Azure AI Studio to flow page

+ - Verify that the visual DAG is for contoso-chat

+

+* [] **03** | Set up Automatic Runtime in Azure

+ - Click **Select runtime** dropdown

+ - Select Automatic Runtime, click **Start**

+ - Takes a few mins, watch progress indicator.

+

+* [] **04** | Run Prompt Flow in Azure

+ - On completion, you should see a ✅

+ - Now click the blue **Run** button

+ - Run should complete in a few minutes.

+ - Verify that all graph nodes are green (success)

+

+>[!note] **2: Deploy Prompt Flow** now that it's tested

+

+* [] **01** | Click the **Deploy** option in flow page

+ - Opens a Deploy wizard flow

+ - **Endpoint name:** use +++contoso-chat-aiproj-ep+++

+ - **Deployment name:** use +++contoso-chat-aiproj-deploy+++

+ - Keep defaults, click **Review+Create**.

+ - Review configuration, click **Create**.

+

+* [] **02-A** | Check **Deployment status** (option A)

+ - Navigate to +++https://ai.azure.com+++

+ - Click Build > Your AI Project (_contoso-chat-aiproj_)

+ - Click **Deployments** and hit Refresh

+ - You should see "Endpoint" listing with _Updating_

+ - Refresh periodically till it shows _Succeeded_

+

+* [] **02-B** | Check **Deployment status** (option B)

+ - Navigate to +++https://ml.azure.com+++

+ - Click the notifications icon (bell) in navbar

+ - This should slide out a list of status items

+ - Watch for all pending tasks to go green.

+

+> [!alert]

+> The deployment process **can take 10 minutes or more**. Use the time to explore other things.

+

+* [] **03** | Deployment succeeded

+ - Go back to the Deployments list in step **02-A**

+ - Click your deployment to view details page.

+ - **Wait** till page loads and menu items update

+ - You should see a menu with these items

+ - **Details** - status & endpoint info

+ - **Consume** - code samples, URL & keys

+ - **Test** - interactive testing UI

+ - **Monitoring** and **Logs** - for LLMOps

+

+* [] **04** | Consume Deployment

+ - Click the **Consume** tab

+ - You should see

+ - the REST URL for endpoint

+ - the authentication keys for endpoint

+ - code snippets for key languages

+ - Use this if testing from an app. In the next step, we'll explore using a built-in test instead.

+
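+For reference, calling the endpoint from an app might look like the minimal sketch below. It is not the snippet from the **Consume** tab. The environment variable names are hypothetical, the payload fields mirror the **question** and **chat_history** inputs used in the built-in test, and the `Bearer` authorization header is an assumption you should check against the code samples shown for your own endpoint.
+
```python
import json
import os
import urllib.request


def build_request(endpoint_url, api_key, question, chat_history=None):
    """Build a POST request carrying the flow inputs as a JSON body."""
    payload = {"question": question, "chat_history": chat_history or []}
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: key-based auth is sent as a Bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical variable names; copy the real values from the Consume tab.
    url = os.environ.get("CONTOSO_CHAT_ENDPOINT_URL")
    key = os.environ.get("CONTOSO_CHAT_ENDPOINT_KEY")
    if url and key:
        request = build_request(url, key, "What can you tell me about your jackets?")
        with urllib.request.urlopen(request) as response:
            print(json.loads(response.read()))
    else:
        print("Set CONTOSO_CHAT_ENDPOINT_URL and CONTOSO_CHAT_ENDPOINT_KEY first.")
```
+
+Reading the key from the environment keeps it out of source control, which matters because the key grants access to the deployed endpoint.
+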

+>[!note] **3: Use Deployed Endpoint** with a built-in test.

+

+* [] **01** | Click the **Test** option in deployment page

+ - Enter "[]" for **chat_history**

+ - Enter +++What can you tell me about your jackets?+++ for **question**

+ - Click **Test** and watch _Test result_ pane

+ - Test result output should show LLM app response

+

+If time permits, explore this with other questions, or with different customer ID or chat_history values.

+
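+To try a non-empty **chat_history**, you can build the value turn by turn and paste the resulting JSON into the test form. The sketch below assumes the turn shape commonly used by Prompt Flow chat flows (an `inputs` and an `outputs` object per turn); the `answer` output name is an assumption, so adjust it to match the output name of your flow.
+
```python
import json


def append_turn(chat_history, question, answer):
    """Return a new history list with one more question/answer turn appended."""
    turn = {"inputs": {"question": question}, "outputs": {"answer": answer}}
    return chat_history + [turn]


history = append_turn([], "What can you tell me about your jackets?", "(previous reply)")
# Paste this JSON string into the chat_history field of the test form.
history_json = json.dumps(history)
```
+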

+---

+

+🥳 **Congratulations!** You made it!! You just _set up, built, ran, evaluated, and deployed_ a RAG-based LLM application using Azure AI Studio and Prompt Flow.

diff --git a/contoso-chat/docs/02 | Workshop/07 | Deploy & Use Flow/20-lab-recap.md b/contoso-chat/docs/02 | Workshop/07 | Deploy & Use Flow/20-lab-recap.md

new file mode 100644

index 0000000..1a4e87c

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/07 | Deploy & Use Flow/20-lab-recap.md

@@ -0,0 +1,31 @@

+# Lab Recap

+

+> [!hint] What We Learned Today

+

+**We started with a simple goal:** Build an LLM-based chat app that used Retrieval Augmented Generation (RAG) to answer questions relevant to a product catalog.

+

+**We learned about LLMOps:** Specifically, we identified the steps that need to be chained together in a workflow to build, deploy & use **performant LLM Apps**.

+

+**We learned about Azure AI Studio:** Specifically, we learned how to provision an Azure AI project using an Azure AI resource with selected Model deployments. We learned to build a RAG solution with Azure AI Search and Azure Cosmos DB. And we learned to upload, deploy, run, and test prompt flows in Azure.

+

+**We learned about Prompt Flow:** Specifically, we learned how to create, evaluate, test, and deploy a prompt flow using a VS Code extension to streamline end-to-end development for an LLM-based app. And we learned how to upload the flow to Azure AI Studio and replicate the steps completely in the cloud.

+

+Along the way, we learned what LLMOps is, and why tools that simplify and orchestrate end-to-end development workflows are critical for building the next generation of Generative AI applications at cloud scale.

+

+> [!hint] What We Can Try Next

+

+- **Explore Next Steps for LLMOps.**

+ - Add GitHub Actions, Explore Intents

+ - See README: +++https://github.com/Azure-Samples/contoso-chat+++

+- **Explore Usage in Real Application.**

+ - Integrate & use deployed endpoint in web app

+ - See README: +++https://github.com/Azure-Samples/contoso-web+++

+

+> [!hint] Where Can You Learn More?

+

+- **Explore Developer Resources**: [Azure AI Developer Hub](https://learn.microsoft.com/ai)

+- **Join The Community**: [Azure AI Discord](https://discord.gg/yrTeVQwpWm)

+

+---

+

+🏆 | **THANK YOU FOR JOINING US!**

diff --git a/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/1-App-Integration.md b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/1-App-Integration.md

new file mode 100644

index 0000000..9eeb74f

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/1-App-Integration.md

@@ -0,0 +1 @@

+# 1 | Contoso Website Chat

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/2-Actions-Integration.md b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/2-Actions-Integration.md

new file mode 100644

index 0000000..1b50cbb

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/2-Actions-Integration.md

@@ -0,0 +1 @@

+# 2 | GitHub Action Deploy

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/3-Intents-Integration.md b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/3-Intents-Integration.md

new file mode 100644

index 0000000..22cc86f

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/3-Intents-Integration.md

@@ -0,0 +1 @@

+# 3 | Intent-Based Routing

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/4-Content-Filtering.md b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/4-Content-Filtering.md

new file mode 100644

index 0000000..103b913

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/08 | Bonus Exercises/4-Content-Filtering.md

@@ -0,0 +1 @@

+# 4 | Content Filtering

\ No newline at end of file

diff --git a/contoso-chat/docs/02 | Workshop/09 | Troubleshooting/README.md b/contoso-chat/docs/02 | Workshop/09 | Troubleshooting/README.md

new file mode 100644

index 0000000..ff9a5b6

--- /dev/null

+++ b/contoso-chat/docs/02 | Workshop/09 | Troubleshooting/README.md

@@ -0,0 +1,34 @@

+# 1 | Debug Issues

+

+!!!warning "Don't see your issue listed?"

+

+ [Submit an issue](https://github.com/Azure-Samples/contoso-chat/issues/new/choose) to the repository with the following information, to help us debug and update the list:

+

+ - Which workshop section were you on? (e.g., Setup Promptflow)

+ - What step of that section were you on?

+ - What was the error message or behavior you saw?

+ - What was the behavior you expected?

+ - Screenshot of the issue (if relevant).

+

+ When submitting an issue, please mask any secrets or personal information (e.g., your Azure subscription ID) to avoid exposing that information publicly.

+
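+One way to mask subscription IDs before pasting logs into an issue is sketched below. It assumes the IDs appear in the usual GUID form and replaces every GUID-shaped string. It does not detect endpoint keys or other secrets, so still review the text by hand.
+
```python
import re

# Matches GUID-shaped strings such as Azure subscription IDs.
GUID_PATTERN = re.compile(
    r"\b[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}\b"
)


def mask_guids(text, placeholder="<redacted-guid>"):
    """Replace every GUID-shaped substring in text with a placeholder."""
    return GUID_PATTERN.sub(placeholder, text)
```
+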

+This page lists any frequently-encountered issues for this workshop, with some suggestions on how to debug these on your own. Note that Azure AI Studio (Preview) and Promptflow are both evolving rapidly - some known issues may be resolved in future updates to those tools.

+

+---

+

+## 1. Model Deployments

+

+!!!bug "1.1 | Requested Model Not Found"

+

+- **What Happened:**

+ - Required model does not show in options for Create

+- **Possible Causes:**

+ - Model not available in region where the Azure AI resource is provisioned

+ - Model available but no quota left in subscription

+- **Debug Suggestions:**

+ - Open "Manage" page under Azure AI Studio

+ - Pick "Quota" tab, select your subscription

+ - Check quota for region Azure AI resource is in

+ - If none left, look for region that still has quota

+ - Switch to that region and try to create deployment.

+
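+The "look for a region that still has quota" step amounts to a simple filter. The sketch below assumes you have copied the remaining quota per region from the "Quota" tab into a dictionary by hand. The function name and the unit of the numbers are illustrative, not part of any Azure API.
+
```python
def regions_with_quota(quota_by_region, needed=1):
    """Return the regions whose remaining quota covers the needed amount, sorted by name."""
    return sorted(
        region
        for region, remaining in quota_by_region.items()
        if remaining >= needed
    )
```
+
+Any region this returns is a candidate for re-creating the deployment, provided the model is also offered in that region.
+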

diff --git a/contoso-chat/docs/03 | Concepts/01-RAG.md b/contoso-chat/docs/03 | Concepts/01-RAG.md

new file mode 100644

index 0000000..8b83a47

--- /dev/null

+++ b/contoso-chat/docs/03 | Concepts/01-RAG.md

@@ -0,0 +1,7 @@

+# 01 | The RAG Pattern

+

+!!!warning "This page is under construction. Please check back!"

+

+---

+

+

\ No newline at end of file

diff --git a/contoso-chat/docs/03 | Concepts/02-Metrics.md b/contoso-chat/docs/03 | Concepts/02-Metrics.md

new file mode 100644

index 0000000..11c96f7

--- /dev/null

+++ b/contoso-chat/docs/03 | Concepts/02-Metrics.md

@@ -0,0 +1,8 @@

+# 02 | The Evaluation Metrics

+

+!!!warning "This page is under construction. Please check back!"

+

+---

+

+

+

\ No newline at end of file

diff --git a/contoso-chat/docs/04 | Tooling/01-Dev-Containers.md b/contoso-chat/docs/04 | Tooling/01-Dev-Containers.md

new file mode 100644

index 0000000..98b480d

--- /dev/null

+++ b/contoso-chat/docs/04 | Tooling/01-Dev-Containers.md

@@ -0,0 +1,7 @@

+# 01 | Dev Containers

+

+!!!warning "This page is under construction. Please check back!"

+

+---

+

+

\ No newline at end of file

diff --git a/contoso-chat/docs/04 | Tooling/02-Visual-Studio-Code.md b/contoso-chat/docs/04 | Tooling/02-Visual-Studio-Code.md

new file mode 100644

index 0000000..7872c9c

--- /dev/null

+++ b/contoso-chat/docs/04 | Tooling/02-Visual-Studio-Code.md

@@ -0,0 +1,8 @@

+# 02 | Visual Studio Code

+

+!!!warning "This page is under construction. Please check back!"

+

+---

+

+

+

\ No newline at end of file

diff --git a/contoso-chat/docs/04 | Tooling/03-Prompt-Flow.md b/contoso-chat/docs/04 | Tooling/03-Prompt-Flow.md

new file mode 100644

index 0000000..836d24e

--- /dev/null

+++ b/contoso-chat/docs/04 | Tooling/03-Prompt-Flow.md

@@ -0,0 +1,7 @@

+# 03 | Prompt Flow

+

+!!!warning "This page is under construction. Please check back!"

+

+---

+

+

\ No newline at end of file

diff --git a/contoso-chat/docs/04 | Tooling/04-Azure-AI-Studio.md b/contoso-chat/docs/04 | Tooling/04-Azure-AI-Studio.md

new file mode 100644

index 0000000..638931a

--- /dev/null

+++ b/contoso-chat/docs/04 | Tooling/04-Azure-AI-Studio.md

@@ -0,0 +1,7 @@

+# 04 | Azure AI Studio

+

+!!!warning "This page is under construction. Please check back!"

+

+---

+

+

\ No newline at end of file

diff --git a/contoso-chat/docs/README.md b/contoso-chat/docs/README.md

new file mode 100644

index 0000000..05ca0e9

--- /dev/null

+++ b/contoso-chat/docs/README.md