diff --git a/LICENSE b/LICENSE

deleted file mode 100644

index 52a5811..0000000

--- a/LICENSE

+++ /dev/null

@@ -1,21 +0,0 @@

-MIT License

-

-Copyright (c) 2021 RUCAIBox

-

-Permission is hereby granted, free of charge, to any person obtaining a copy

-of this software and associated documentation files (the "Software"), to deal

-in the Software without restriction, including without limitation the rights

-to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

-copies of the Software, and to permit persons to whom the Software is

-furnished to do so, subject to the following conditions:

-

-The above copyright notice and this permission notice shall be included in all

-copies or substantial portions of the Software.

-

-THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

-IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

-FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

-AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

-LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

-OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

-SOFTWARE.

diff --git a/README.md b/README.md

index a36f00f..093c818 100644

--- a/README.md

+++ b/README.md

@@ -1,286 +1,15 @@

# CRSLab

-[](https://pypi.org/project/crslab)

-[](https://github.com/rucaibox/crslab/releases)

-[](./LICENSE)

-[](https://arxiv.org/abs/2101.00939)

-[](https://crslab.readthedocs.io/en/latest/?badge=latest)

+This branch integrates the new evaluation approach from iEvaLM, currently supporting ChatGPT models and ReDial dataset. We will complete the evaluation for all models and datasets in the future. Please continue to follow us!

-[Paper](https://arxiv.org/pdf/2101.00939.pdf) | [Docs](https://crslab.readthedocs.io/en/latest/?badge=latest)

-| [中文版](./README_CN.md)

+# Quick Start 🚀

-**CRSLab** is an open-source toolkit for building Conversational Recommender System (CRS). It is developed based on

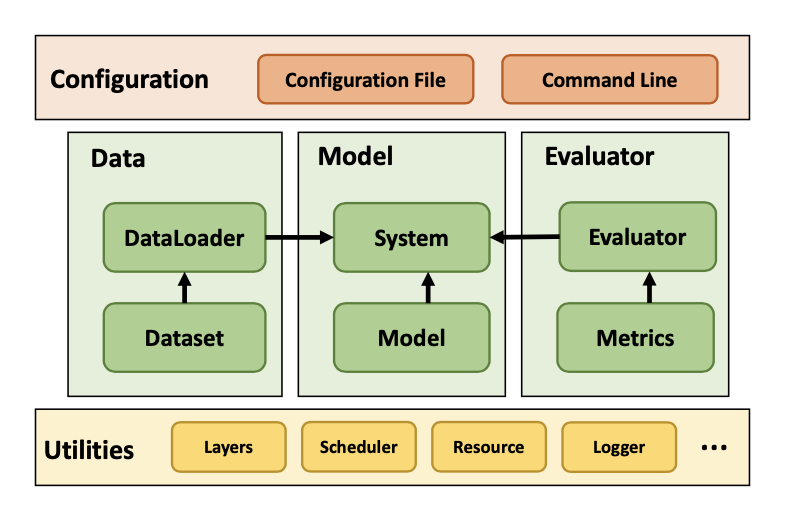

-Python and PyTorch. CRSLab has the following highlights:

-

-- **Comprehensive benchmark models and datasets**: We have integrated commonly-used 6 datasets and 18 models, including graph neural network and pre-training models such as R-GCN, BERT and GPT-2. We have preprocessed these datasets to support these models, and release for downloading.

-- **Extensive and standard evaluation protocols**: We support a series of widely-adopted evaluation protocols for testing and comparing different CRS.

-- **General and extensible structure**: We design a general and extensible structure to unify various conversational recommendation datasets and models, in which we integrate various built-in interfaces and functions for quickly development.

-- **Easy to get started**: We provide simple yet flexible configuration for new researchers to quickly start in our library.

-- **Human-machine interaction interfaces**: We provide flexible human-machine interaction interfaces for researchers to conduct qualitative analysis.

-

-

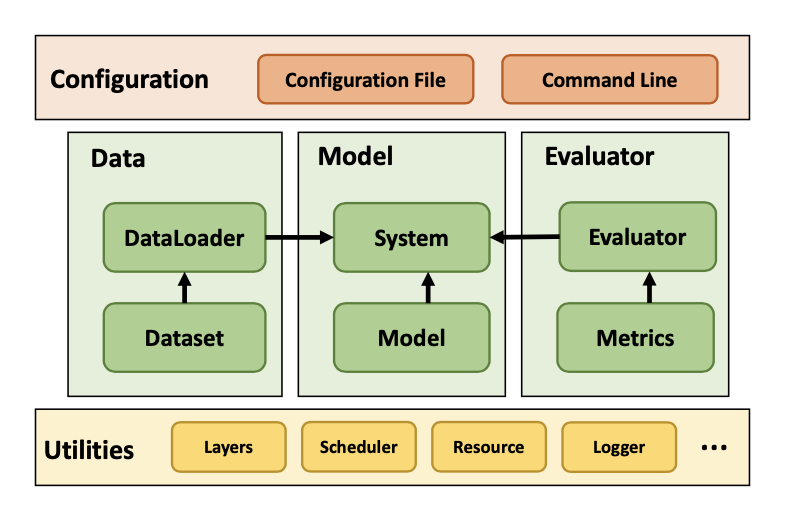

-  -

-

- Figure 1: The overall framework of CRSLab

-

-

-

-

-

-- [Installation](#Installation)

-- [Quick-Start](#Quick-Start)

-- [Models](#Models)

-- [Datasets](#Datasets)

-- [Performance](#Performance)

-- [Releases](#Releases)

-- [Contributions](#Contributions)

-- [Citing](#Citing)

-- [Team](#Team)

-- [License](#License)

-

-

-

-## Installation

-

-CRSLab works with the following operating systems:

-

-- Linux

-- Windows 10

-- macOS X

-

-CRSLab requires Python version 3.6 or later.

-

-CRSLab requires torch version 1.4.0 or later. If you want to use CRSLab with GPU, please ensure that CUDA or CUDAToolkit version is 9.2 or later. Please use the combinations shown in this [Link](https://pytorch-geometric.com/whl/) to ensure the normal operation of PyTorch Geometric.

-

-

-

-### Install PyTorch

-

-Use PyTorch [Locally Installation](https://pytorch.org/get-started/locally/) or [Previous Versions Installation](https://pytorch.org/get-started/previous-versions/) commands to install PyTorch. For example, on Linux and Windows 10:

-

-```bash

-# CUDA 10.1

-pip install torch==1.6.0+cu101 torchvision==0.7.0+cu101 -f https://download.pytorch.org/whl/torch_stable.html

-

-# CPU only

-pip install torch==1.6.0+cpu torchvision==0.7.0+cpu -f https://download.pytorch.org/whl/torch_stable.html

-```

-

-If you want to use CRSLab with GPU, make sure the following command prints `True` after installation:

-

-```bash

-$ python -c "import torch; print(torch.cuda.is_available())"

->>> True

-```

-

-

-

-### Install PyTorch Geometric

-

-Ensure that at least PyTorch 1.4.0 is installed:

-

-```bash

-$ python -c "import torch; print(torch.__version__)"

->>> 1.6.0

-```

-

-Find the CUDA version PyTorch was installed with:

-

-```bash

-$ python -c "import torch; print(torch.version.cuda)"

->>> 10.1

-```

-

-Install the relevant packages:

-

-```bash

-pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-sparse -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-cluster -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-spline-conv -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-geometric

-```

-

-where `${CUDA}` and `${TORCH}` should be replaced by your specific CUDA version (`cpu`, `cu92`, `cu101`, `cu102`, `cu110`) and PyTorch version (`1.4.0`, `1.5.0`, `1.6.0`, `1.7.0`) respectively. For example, for PyTorch 1.6.0 and CUDA 10.1, type:

-

-```bash

-pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-sparse -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-cluster -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-spline-conv -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-geometric

-```

-

-

-

-### Install CRSLab

-

-You can install from pip:

-

-```bash

-pip install crslab

-```

-

-OR install from source:

-

-```bash

-git clone https://github.com/RUCAIBox/CRSLab && cd CRSLab

-pip install -e .

-```

-

-

-

-## Quick-Start

-

-With the source code, you can use the provided script for initial usage of our library with cpu by default:

-

-```bash

-python run_crslab.py --config config/crs/kgsf/redial.yaml

-```

-

-The system will complete the data preprocessing, and training, validation, testing of each model in turn. Finally it will get the evaluation results of specified models.

-

-If you want to save pre-processed datasets and training results of models, you can use the following command:

+## Modify your OpenAI API key 🔑

+Please open the config/iEvaLM/chatgpt/redial.yaml file and replace the **your_api_key** with your own OpenAI API key.

+## Evaluate 🤔

+You can use two types of interaction: attribute-based question answering and free-form chit-chat.

```bash

-python run_crslab.py --config config/crs/kgsf/redial.yaml --save_data --save_system

-```

-

-In summary, there are following arguments in `run_crslab.py`:

-

-- `--config` or `-c`: relative path for configuration file(yaml).

-- `--gpu` or `-g`: specify GPU id(s) to use, we now support multiple GPUs. Defaults to CPU(-1).

-- `--save_data` or `-sd`: save pre-processed dataset.

-- `--restore_data` or `-rd`: restore pre-processed dataset from file.

-- `--save_system` or `-ss`: save trained system.

-- `--restore_system` or `-rs`: restore trained system from file.

-- `--debug` or `-d`: use validation dataset to debug your system.

-- `--interact` or `-i`: interact with your system instead of training.

-- `--tensorboard` or `-tb`: enable tensorboard to monitor train performance.

-

-

-

-## Models

-

-In CRSLab, we unify the task description of conversational recommendation into three sub-tasks, namely recommendation (recommend user-preferred items), conversation (generate proper responses) and policy (select proper interactive action). The recommendation and conversation sub-tasks are the core of a CRS and have been studied in most of works. The policy sub-task is needed by recent works, by which the CRS can interact with users through purposeful strategy.

-As the first release version, we have implemented 18 models in the four categories of CRS model, Recommendation model, Conversation model and Policy model.

-

-| Category | Model | Graph Neural Network? | Pre-training Model? |

-| :------------------: | :----------------------------------------------------------: | :-----------------------------: | :-----------------------------: |

-| CRS Model | [ReDial](https://arxiv.org/abs/1812.07617)

[KBRD](https://arxiv.org/abs/1908.05391)

[KGSF](https://arxiv.org/abs/2007.04032)

[TG-ReDial](https://arxiv.org/abs/2010.04125)

[INSPIRED](https://www.aclweb.org/anthology/2020.emnlp-main.654.pdf) | ×

√

√

×

× | ×

×

×

√

√ |

-| Recommendation model | Popularity

[GRU4Rec](https://arxiv.org/abs/1511.06939)

[SASRec](https://arxiv.org/abs/1808.09781)

[TextCNN](https://arxiv.org/abs/1408.5882)

[R-GCN](https://arxiv.org/abs/1703.06103)

[BERT](https://arxiv.org/abs/1810.04805) | ×

×

×

×

√

× | ×

×

×

×

×

√ |

-| Conversation model | [HERD](https://arxiv.org/abs/1507.04808)

[Transformer](https://arxiv.org/abs/1706.03762)

[GPT-2](http://www.persagen.com/files/misc/radford2019language.pdf) | ×

×

× | ×

×

√ |

-| Policy model | PMI

[MGCG](https://arxiv.org/abs/2005.03954)

[Conv-BERT](https://arxiv.org/abs/2010.04125)

[Topic-BERT](https://arxiv.org/abs/2010.04125)

[Profile-BERT](https://arxiv.org/abs/2010.04125) | ×

×

×

×

× | ×

×

√

√

√ |

-

-Among them, the four CRS models integrate the recommendation model and the conversation model to improve each other, while others only specify an individual task.

-

-For Recommendation model and Conversation model, we have respectively implemented the following commonly-used automatic evaluation metrics:

-

-| Category | Metrics |

-| :--------------------: | :----------------------------------------------------------: |

-| Recommendation Metrics | Hit@{1, 10, 50}, MRR@{1, 10, 50}, NDCG@{1, 10, 50} |

-| Conversation Metrics | PPL, BLEU-{1, 2, 3, 4}, Embedding Average/Extreme/Greedy, Distinct-{1, 2, 3, 4} |

-| Policy Metrics | Accuracy, Hit@{1,3,5} |

-

-

-

-## Datasets

-

-We have collected and preprocessed 6 commonly-used human-annotated datasets, and each dataset was matched with proper KGs as shown below:

-

-| Dataset | Dialogs | Utterances | Domains | Task Definition | Entity KG | Word KG |

-| :----------------------------------------------------------: | :-----: | :--------: | :----------: | :-------------: | :--------: | :--------: |

-| [ReDial](https://redialdata.github.io/website/) | 10,006 | 182,150 | Movie | -- | DBpedia | ConceptNet |

-| [TG-ReDial](https://github.com/RUCAIBox/TG-ReDial) | 10,000 | 129,392 | Movie | Topic Guide | CN-DBpedia | HowNet |

-| [GoRecDial](https://arxiv.org/abs/1909.03922) | 9,125 | 170,904 | Movie | Action Choice | DBpedia | ConceptNet |

-| [DuRecDial](https://arxiv.org/abs/2005.03954) | 10,200 | 156,000 | Movie, Music | Goal Plan | CN-DBpedia | HowNet |

-| [INSPIRED](https://github.com/sweetpeach/Inspired) | 1,001 | 35,811 | Movie | Social Strategy | DBpedia | ConceptNet |

-| [OpenDialKG](https://github.com/facebookresearch/opendialkg) | 13,802 | 91,209 | Movie, Book | Path Generate | DBpedia | ConceptNet |

-

-

-

-## Performance

-

-We have trained and test the integrated models on the TG-Redial dataset, which is split into training, validation and test sets using a ratio of 8:1:1. For each conversation, we start from the first utterance, and generate reply utterances or recommendations in turn by our model. We perform the evaluation on the three sub-tasks.

-

-### Recommendation Task

-

-| Model | Hit@1 | Hit@10 | Hit@50 | MRR@1 | MRR@10 | MRR@50 | NDCG@1 | NDCG@10 | NDCG@50 |

-| :-------: | :---------: | :--------: | :--------: | :---------: | :--------: | :--------: | :---------: | :--------: | :--------: |

-| SASRec | 0.000446 | 0.00134 | 0.0160 | 0.000446 | 0.000576 | 0.00114 | 0.000445 | 0.00075 | 0.00380 |

-| TextCNN | 0.00267 | 0.0103 | 0.0236 | 0.00267 | 0.00434 | 0.00493 | 0.00267 | 0.00570 | 0.00860 |

-| BERT | 0.00722 | 0.00490 | 0.0281 | 0.00722 | 0.0106 | 0.0124 | 0.00490 | 0.0147 | 0.0239 |

-| KBRD | 0.00401 | 0.0254 | 0.0588 | 0.00401 | 0.00891 | 0.0103 | 0.00401 | 0.0127 | 0.0198 |

-| KGSF | 0.00535 | **0.0285** | **0.0771** | 0.00535 | 0.0114 | **0.0135** | 0.00535 | **0.0154** | **0.0259** |

-| TG-ReDial | **0.00793** | 0.0251 | 0.0524 | **0.00793** | **0.0122** | 0.0134 | **0.00793** | 0.0152 | 0.0211 |

-

-

-### Conversation Task

-

-| Model | BLEU@1 | BLEU@2 | BLEU@3 | BLEU@4 | Dist@1 | Dist@2 | Dist@3 | Dist@4 | Average | Extreme | Greedy | PPL |

-| :---------: | :-------: | :-------: | :--------: | :--------: | :------: | :------: | :------: | :------: | :-------: | :-------: | :-------: | :------: |

-| HERD | 0.120 | 0.0141 | 0.00136 | 0.000350 | 0.181 | 0.369 | 0.847 | 1.30 | 0.697 | 0.382 | 0.639 | 472 |

-| Transformer | 0.266 | 0.0440 | 0.0145 | 0.00651 | 0.324 | 0.837 | 2.02 | 3.06 | 0.879 | 0.438 | 0.680 | 30.9 |

-| GPT2 | 0.0858 | 0.0119 | 0.00377 | 0.0110 | **2.35** | **4.62** | **8.84** | **12.5** | 0.763 | 0.297 | 0.583 | 9.26 |

-| KBRD | 0.267 | 0.0458 | 0.0134 | 0.00579 | 0.469 | 1.50 | 3.40 | 4.90 | 0.863 | 0.398 | 0.710 | 52.5 |

-| KGSF | **0.383** | **0.115** | **0.0444** | **0.0200** | 0.340 | 0.910 | 3.50 | 6.20 | **0.888** | **0.477** | **0.767** | 50.1 |

-| TG-ReDial | 0.125 | 0.0204 | 0.00354 | 0.000803 | 0.881 | 1.75 | 7.00 | 12.0 | 0.810 | 0.332 | 0.598 | **7.41** |

-

-

-### Policy Task

-

-| Model | Hit@1 | Hit@10 | Hit@50 | MRR@1 | MRR@10 | MRR@50 | NDCG@1 | NDCG@10 | NDCG@50 |

-| :--------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: |

-| MGCG | 0.591 | 0.818 | 0.883 | 0.591 | 0.680 | 0.683 | 0.591 | 0.712 | 0.729 |

-| Conv-BERT | 0.597 | 0.814 | 0.881 | 0.597 | 0.684 | 0.687 | 0.597 | 0.716 | 0.731 |

-| Topic-BERT | 0.598 | 0.828 | 0.885 | 0.598 | 0.690 | 0.693 | 0.598 | 0.724 | 0.737 |

-| TG-ReDial | **0.600** | **0.830** | **0.893** | **0.600** | **0.693** | **0.696** | **0.600** | **0.727** | **0.741** |

-

-The above results were obtained from our CRSLab in preliminary experiments. However, these algorithms were implemented and tuned based on our understanding and experiences, which may not achieve their optimal performance. If you could yield a better result for some specific algorithm, please kindly let us know. We will update this table after the results are verified.

-

-## Releases

-

-| Releases | Date | Features |

-| :------: | :-----------: | :----------: |

-| v0.1.1 | 1 / 4 / 2021 | Basic CRSLab |

-| v0.1.2 | 3 / 28 / 2021 | CRSLab |

-

-

-

-## Contributions

-

-Please let us know if you encounter a bug or have any suggestions by [filing an issue](https://github.com/RUCAIBox/CRSLab/issues).

-

-We welcome all contributions from bug fixes to new features and extensions.

-

-We expect all contributions discussed in the issue tracker and going through PRs.

-

-We thank the nice contributions through PRs from [@shubaoyu](https://github.com/shubaoyu), [@ToheartZhang](https://github.com/ToheartZhang).

-

-

-

-## Citing

-

-If you find CRSLab useful for your research or development, please cite our [Paper](https://arxiv.org/pdf/2101.00939.pdf):

-

-```

-@article{crslab,

- title={CRSLab: An Open-Source Toolkit for Building Conversational Recommender System},

- author={Kun Zhou, Xiaolei Wang, Yuanhang Zhou, Chenzhan Shang, Yuan Cheng, Wayne Xin Zhao, Yaliang Li, Ji-Rong Wen},

- year={2021},

- journal={arXiv preprint arXiv:2101.00939}

-}

-```

-

-

-

-## Team

-

-**CRSLab** was developed and maintained by [AI Box](http://aibox.ruc.edu.cn/) group in RUC.

-

-

-

-## License

-

-**CRSLab** uses [MIT License](./LICENSE).

-

+bash chatgpt_chat.sh # free-form chit-chat

+bash chatgpt_ask.sh # attribute-based question answering

+```

\ No newline at end of file

diff --git a/README_CN.md b/README_CN.md

deleted file mode 100644

index 8290a4a..0000000

--- a/README_CN.md

+++ /dev/null

@@ -1,290 +0,0 @@

-# CRSLab

-

-[](https://pypi.org/project/crslab)

-[](https://github.com/rucaibox/crslab/releases)

-[](./LICENSE)

-[](https://arxiv.org/abs/2101.00939)

-[](https://crslab.readthedocs.io/en/latest/?badge=latest)

-

-[论文](https://arxiv.org/pdf/2101.00939.pdf) | [文档](https://crslab.readthedocs.io/en/latest/?badge=latest)

-| [English Version](./README.md)

-

-**CRSLab** 是一个用于构建对话推荐系统(CRS)的开源工具包,其基于 PyTorch 实现、主要面向研究者使用,并具有如下特色:

-

-- **全面的基准模型和数据集**:我们集成了常用的 6 个数据集和 18 个模型,包括基于图神经网络和预训练模型,比如 GCN,BERT 和 GPT-2;我们还对数据集进行相关处理以支持这些模型,并提供预处理后的版本供大家下载。

-- **大规模的标准评测**:我们支持一系列被广泛认可的评估方式来测试和比较不同的 CRS。

-- **通用和可扩展的结构**:我们设计了通用和可扩展的结构来统一各种对话推荐数据集和模型,并集成了多种内置接口和函数以便于快速开发。

-- **便捷的使用方法**:我们为新手提供了简单而灵活的配置,方便其快速启动集成在 CRSLab 中的模型。

-- **人性化的人机交互接口**:我们提供了人性化的人机交互界面,以供研究者对比和测试不同的模型系统。

-

-

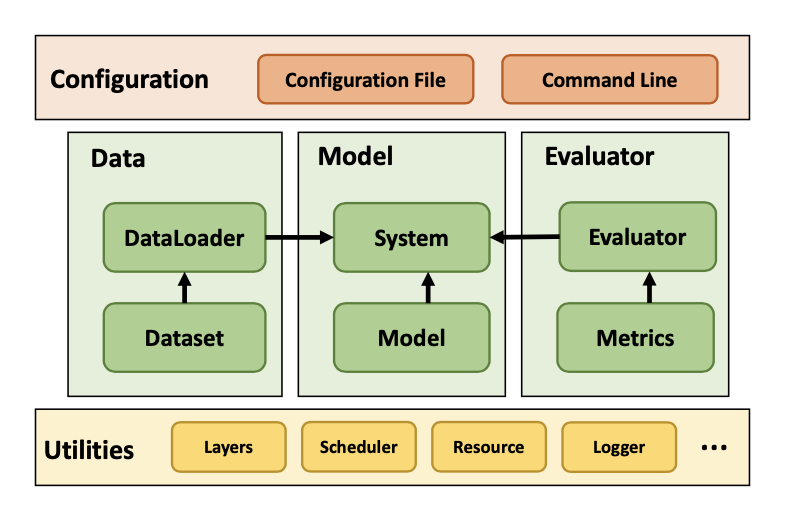

-  -

-

- 图片: CRSLab 的总体架构

-

-

-

-

-

-- [安装](#安装)

-- [快速上手](#快速上手)

-- [模型](#模型)

-- [数据集](#数据集)

-- [评测结果](#评测结果)

-- [发行版本](#发行版本)

-- [贡献](#贡献)

-- [引用](#引用)

-- [项目团队](#项目团队)

-- [免责声明](#免责声明)

-

-

-

-## 安装

-

-CRSLab 可以在以下几种系统上运行:

-

-- Linux

-- Windows 10

-- macOS X

-

-CRSLab 需要在 Python 3.6 或更高的环境下运行。

-

-CRSLab 要求 torch 版本在 1.4.0 及以上,如果你想在 GPU 上运行 CRSLab,请确保你的 CUDA 版本或者 CUDAToolkit 版本在 9.2 及以上。为保证 PyTorch Geometric 库的正常运行,请使用[链接](https://pytorch-geometric.com/whl/)所示的安装方式。

-

-

-

-### 安装 PyTorch

-

-使用 PyTorch [本地安装](https://pytorch.org/get-started/locally/)命令或者[先前版本安装](https://pytorch.org/get-started/previous-versions/)命令安装 PyTorch,比如在 Linux 和 Windows 下:

-

-```bash

-# CUDA 10.1

-pip install torch==1.6.0+cu101 torchvision==0.7.0+cu101 -f https://download.pytorch.org/whl/torch_stable.html

-

-# CPU only

-pip install torch==1.6.0+cpu torchvision==0.7.0+cpu -f https://download.pytorch.org/whl/torch_stable.html

-```

-

-安装完成后,如果你想在 GPU 上运行 CRSLab,请确保如下命令输出`True`:

-

-```bash

-$ python -c "import torch; print(torch.cuda.is_available())"

->>> True

-```

-

-

-

-### 安装 PyTorch Geometric

-

-确保安装的 PyTorch 版本至少为 1.4.0:

-

-```bash

-$ python -c "import torch; print(torch.__version__)"

->>> 1.6.0

-```

-

-找到安装好的 PyTorch 对应的 CUDA 版本:

-

-```bash

-$ python -c "import torch; print(torch.version.cuda)"

->>> 10.1

-```

-

-安装相关的包:

-

-```bash

-pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-sparse -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-cluster -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-spline-conv -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

-pip install torch-geometric

-```

-

-其中`${CUDA}`和`${TORCH}`应使用确定的 CUDA 版本(`cpu`,`cu92`,`cu101`,`cu102`,`cu110`)和 PyTorch 版本(`1.4.0`,`1.5.0`,`1.6.0`,`1.7.0`)来分别替换。比如,对于 PyTorch 1.6.0 和 CUDA 10.1,输入:

-

-```bash

-pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-sparse -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-cluster -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-spline-conv -f https://pytorch-geometric.com/whl/torch-1.6.0+cu101.html

-pip install torch-geometric

-```

-

-

-

-### 安装 CRSLab

-

-你可以通过 pip 来安装:

-

-```bash

-pip install crslab

-```

-

-也可以通过源文件进行进行安装:

-

-```bash

-git clone https://github.com/RUCAIBox/CRSLab && cd CRSLab

-pip install -e .

-```

-

-

-

-## 快速上手

-

-从 GitHub 下载 CRSLab 后,可以使用提供的脚本快速运行和测试,默认使用CPU:

-

-```bash

-python run_crslab.py --config config/crs/kgsf/redial.yaml

-```

-

-系统将依次完成数据的预处理,以及各模块的训练、验证和测试,并得到指定的模型评测结果。

-

-如果你希望保存数据预处理结果与模型训练结果,可以使用如下命令:

-

-```bash

-python run_crslab.py --config config/crs/kgsf/redial.yaml --save_data --save_system

-```

-

-总的来说,`run_crslab.py`有如下参数可供调用:

-

-- `--config` 或 `-c`:配置文件的相对路径,以指定运行的模型与数据集。

-- `--gpu` or `-g`:指定 GPU id,支持多 GPU,默认使用 CPU(-1)。

-- `--save_data` 或 `-sd`:保存预处理的数据。

-- `--restore_data` 或 `-rd`:从文件读取预处理的数据。

-- `--save_system` 或 `-ss`:保存训练好的 CRS 系统。

-- `--restore_system` 或 `-rs`:从文件载入提前训练好的系统。

-- `--debug` 或 `-d`:用验证集代替训练集以方便调试。

-- `--interact` 或 `-i`:与你的系统进行对话交互,而非进行训练。

-- `--tensorboard` or `-tb`:使用 tensorboardX 组件来监测训练表现。

-

-

-

-## 模型

-

-在第一个发行版中,我们实现了 4 类共 18 个模型。这里我们将对话推荐任务主要拆分成三个任务:推荐任务(生成推荐的商品),对话任务(生成对话的回复)和策略任务(规划对话推荐的策略)。其中所有的对话推荐系统都具有对话和推荐任务,他们是对话推荐系统的核心功能。而策略任务是一个辅助任务,其致力于更好的控制对话推荐系统,在不同的模型中的实现也可能不同(如 TG-ReDial 采用一个主题预测模型,DuRecDial 中采用一个对话规划模型等):

-

-

-

-| 类别 | 模型 | Graph Neural Network? | Pre-training Model? |

-| :------: | :----------------------------------------------------------: | :-----------------------------: | :-----------------------------: |

-| CRS 模型 | [ReDial](https://arxiv.org/abs/1812.07617)

[KBRD](https://arxiv.org/abs/1908.05391)

[KGSF](https://arxiv.org/abs/2007.04032)

[TG-ReDial](https://arxiv.org/abs/2010.04125)

[INSPIRED](https://www.aclweb.org/anthology/2020.emnlp-main.654.pdf) | ×

√

√

×

× | ×

×

×

√

√ |

-| 推荐模型 | Popularity

[GRU4Rec](https://arxiv.org/abs/1511.06939)

[SASRec](https://arxiv.org/abs/1808.09781)

[TextCNN](https://arxiv.org/abs/1408.5882)

[R-GCN](https://arxiv.org/abs/1703.06103)

[BERT](https://arxiv.org/abs/1810.04805) | ×

×

×

×

√

× | ×

×

×

×

×

√ |

-| 对话模型 | [HERD](https://arxiv.org/abs/1507.04808)

[Transformer](https://arxiv.org/abs/1706.03762)

[GPT-2](http://www.persagen.com/files/misc/radford2019language.pdf) | ×

×

× | ×

×

√ |

-| 策略模型 | PMI

[MGCG](https://arxiv.org/abs/2005.03954)

[Conv-BERT](https://arxiv.org/abs/2010.04125)

[Topic-BERT](https://arxiv.org/abs/2010.04125)

[Profile-BERT](https://arxiv.org/abs/2010.04125) | ×

×

×

×

× | ×

×

√

√

√ |

-

-

-其中,CRS 模型是指直接融合推荐模型和对话模型,以相互增强彼此的效果,故其内部往往已经包含了推荐、对话和策略模型。其他如推荐模型、对话模型、策略模型往往只关注以上任务中的某一个。

-

-我们对于这几类模型,我们还分别实现了如下的自动评测指标模块:

-

-| 类别 | 指标 |

-| :------: | :----------------------------------------------------------: |

-| 推荐指标 | Hit@{1, 10, 50}, MRR@{1, 10, 50}, NDCG@{1, 10, 50} |

-| 对话指标 | PPL, BLEU-{1, 2, 3, 4}, Embedding Average/Extreme/Greedy, Distinct-{1, 2, 3, 4} |

-| 策略指标 | Accuracy, Hit@{1,3,5} |

-

-

-

-

-

-## 数据集

-

-我们收集了 6 个常用的人工标注数据集,并对它们进行了预处理(包括引入外部知识图谱),以融入统一的 CRS 任务中。如下为相关数据集的统计数据:

-

-| Dataset | Dialogs | Utterances | Domains | Task Definition | Entity KG | Word KG |

-| :----------------------------------------------------------: | :-----: | :--------: | :----------: | :-------------: | :--------: | :--------: |

-| [ReDial](https://redialdata.github.io/website/) | 10,006 | 182,150 | Movie | -- | DBpedia | ConceptNet |

-| [TG-ReDial](https://github.com/RUCAIBox/TG-ReDial) | 10,000 | 129,392 | Movie | Topic Guide | CN-DBpedia | HowNet |

-| [GoRecDial](https://arxiv.org/abs/1909.03922) | 9,125 | 170,904 | Movie | Action Choice | DBpedia | ConceptNet |

-| [DuRecDial](https://arxiv.org/abs/2005.03954) | 10,200 | 156,000 | Movie, Music | Goal Plan | CN-DBpedia | HowNet |

-| [INSPIRED](https://github.com/sweetpeach/Inspired) | 1,001 | 35,811 | Movie | Social Strategy | DBpedia | ConceptNet |

-| [OpenDialKG](https://github.com/facebookresearch/opendialkg) | 13,802 | 91,209 | Movie, Book | Path Generate | DBpedia | ConceptNet |

-

-

-

-## 评测结果

-

-我们在 TG-ReDial 数据集上对模型进行了训练和测试,这里我们将数据集按照 8:1:1 切分。其中对于每条数据,我们从对话的第一轮开始,一轮一轮的进行推荐、策略生成、回复生成任务。下表记录了相关的评测结果。

-

-### 推荐任务

-

-| 模型 | Hit@1 | Hit@10 | Hit@50 | MRR@1 | MRR@10 | MRR@50 | NDCG@1 | NDCG@10 | NDCG@50 |

-| :-------: | :---------: | :--------: | :--------: | :---------: | :--------: | :--------: | :---------: | :--------: | :--------: |

-| SASRec | 0.000446 | 0.00134 | 0.0160 | 0.000446 | 0.000576 | 0.00114 | 0.000445 | 0.00075 | 0.00380 |

-| TextCNN | 0.00267 | 0.0103 | 0.0236 | 0.00267 | 0.00434 | 0.00493 | 0.00267 | 0.00570 | 0.00860 |

-| BERT | 0.00722 | 0.00490 | 0.0281 | 0.00722 | 0.0106 | 0.0124 | 0.00490 | 0.0147 | 0.0239 |

-| KBRD | 0.00401 | 0.0254 | 0.0588 | 0.00401 | 0.00891 | 0.0103 | 0.00401 | 0.0127 | 0.0198 |

-| KGSF | 0.00535 | **0.0285** | **0.0771** | 0.00535 | 0.0114 | **0.0135** | 0.00535 | **0.0154** | **0.0259** |

-| TG-ReDial | **0.00793** | 0.0251 | 0.0524 | **0.00793** | **0.0122** | 0.0134 | **0.00793** | 0.0152 | 0.0211 |

-

-

-

-### 对话任务

-

-| 模型 | BLEU@1 | BLEU@2 | BLEU@3 | BLEU@4 | Dist@1 | Dist@2 | Dist@3 | Dist@4 | Average | Extreme | Greedy | PPL |

-| :---------: | :-------: | :-------: | :--------: | :--------: | :------: | :------: | :------: | :------: | :-------: | :-------: | :-------: | :------: |

-| HERD | 0.120 | 0.0141 | 0.00136 | 0.000350 | 0.181 | 0.369 | 0.847 | 1.30 | 0.697 | 0.382 | 0.639 | 472 |

-| Transformer | 0.266 | 0.0440 | 0.0145 | 0.00651 | 0.324 | 0.837 | 2.02 | 3.06 | 0.879 | 0.438 | 0.680 | 30.9 |

-| GPT2 | 0.0858 | 0.0119 | 0.00377 | 0.0110 | **2.35** | **4.62** | **8.84** | **12.5** | 0.763 | 0.297 | 0.583 | 9.26 |

-| KBRD | 0.267 | 0.0458 | 0.0134 | 0.00579 | 0.469 | 1.50 | 3.40 | 4.90 | 0.863 | 0.398 | 0.710 | 52.5 |

-| KGSF | **0.383** | **0.115** | **0.0444** | **0.0200** | 0.340 | 0.910 | 3.50 | 6.20 | **0.888** | **0.477** | **0.767** | 50.1 |

-| TG-ReDial | 0.125 | 0.0204 | 0.00354 | 0.000803 | 0.881 | 1.75 | 7.00 | 12.0 | 0.810 | 0.332 | 0.598 | **7.41** |

-

-

-

-### 策略任务

-

-| 模型 | Hit@1 | Hit@10 | Hit@50 | MRR@1 | MRR@10 | MRR@50 | NDCG@1 | NDCG@10 | NDCG@50 |

-| :--------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: | :-------: |

-| MGCG | 0.591 | 0.818 | 0.883 | 0.591 | 0.680 | 0.683 | 0.591 | 0.712 | 0.729 |

-| Conv-BERT | 0.597 | 0.814 | 0.881 | 0.597 | 0.684 | 0.687 | 0.597 | 0.716 | 0.731 |

-| Topic-BERT | 0.598 | 0.828 | 0.885 | 0.598 | 0.690 | 0.693 | 0.598 | 0.724 | 0.737 |

-| TG-ReDial | **0.600** | **0.830** | **0.893** | **0.600** | **0.693** | **0.696** | **0.600** | **0.727** | **0.741** |

-

-上述结果是我们使用 CRSLab 进行实验得到的。然而,这些算法是根据我们的经验和理解来实现和调参的,可能还没有达到它们的最佳性能。如果您能在某个具体算法上得到更好的结果,请告知我们。验证结果后,我们会更新该表。

-

-## 发行版本

-

-| 版本号 | 发行日期 | 特性 |

-| :----: | :-----------: | :----------: |

-| v0.1.1 | 1 / 4 / 2021 | Basic CRSLab |

-| v0.1.2 | 3 / 28 / 2021 | CRSLab |

-

-

-

-## 贡献

-

-如果您遇到错误或有任何建议,请通过 [Issue](https://github.com/RUCAIBox/CRSLab/issues) 进行反馈

-

-我们欢迎关于修复错误、添加新特性的任何贡献。

-

-如果想贡献代码,请先在 Issue 中提出问题,然后再提 PR。

-

-我们感谢 [@shubaoyu](https://github.com/shubaoyu), [@ToheartZhang](https://github.com/ToheartZhang) 通过 PR 为项目贡献的新特性。

-

-

-

-## 引用

-

-如果你觉得 CRSLab 对你的科研工作有帮助,请引用我们的[论文](https://arxiv.org/pdf/2101.00939.pdf):

-

-```

-@article{crslab,

- title={CRSLab: An Open-Source Toolkit for Building Conversational Recommender System},

- author={Kun Zhou, Xiaolei Wang, Yuanhang Zhou, Chenzhan Shang, Yuan Cheng, Wayne Xin Zhao, Yaliang Li, Ji-Rong Wen},

- year={2021},

- journal={arXiv preprint arXiv:2101.00939}

-}

-```

-

-

-

-## 项目团队

-

-**CRSLab** 由中国人民大学 [AI Box](http://aibox.ruc.edu.cn/) 小组开发和维护。

-

-

-

-## 免责声明

-

-**CRSLab** 基于 [MIT License](./LICENSE) 进行开发,本项目的所有数据和代码只能被用于学术目的。

diff --git a/chatgpt_ask.sh b/chatgpt_ask.sh

new file mode 100644

index 0000000..89f3d35

--- /dev/null

+++ b/chatgpt_ask.sh

@@ -0,0 +1 @@

+python run_crslab.py --config config/iEvaLM/chatgpt/redial.yaml --mode ask

\ No newline at end of file

diff --git a/chatgpt_chat.sh b/chatgpt_chat.sh

new file mode 100644

index 0000000..4e8478c

--- /dev/null

+++ b/chatgpt_chat.sh

@@ -0,0 +1 @@

+python run_crslab.py --config config/iEvaLM/chatgpt/redial.yaml --mode chat

\ No newline at end of file

diff --git a/config/conversation/gpt2/durecdial.yaml b/config/conversation/gpt2/durecdial.yaml

index 92a5329..5762297 100644

--- a/config/conversation/gpt2/durecdial.yaml

+++ b/config/conversation/gpt2/durecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

conv_model: GPT2

# optim

diff --git a/config/conversation/gpt2/gorecdial.yaml b/config/conversation/gpt2/gorecdial.yaml

index ea155c4..7dd0a6d 100644

--- a/config/conversation/gpt2/gorecdial.yaml

+++ b/config/conversation/gpt2/gorecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

conv_model: GPT2

# optim

diff --git a/config/conversation/gpt2/opendialkg.yaml b/config/conversation/gpt2/opendialkg.yaml

index d96e8d6..bf93096 100644

--- a/config/conversation/gpt2/opendialkg.yaml

+++ b/config/conversation/gpt2/opendialkg.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

conv_model: GPT2

# optim

diff --git a/config/conversation/gpt2/redial.yaml b/config/conversation/gpt2/redial.yaml

index 3a89ac8..d6db3d0 100644

--- a/config/conversation/gpt2/redial.yaml

+++ b/config/conversation/gpt2/redial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

conv_model: GPT2

# optim

diff --git a/config/conversation/transformer/durecdial.yaml b/config/conversation/transformer/durecdial.yaml

index c61973c..a9f92fe 100644

--- a/config/conversation/transformer/durecdial.yaml

+++ b/config/conversation/transformer/durecdial.yaml

@@ -5,7 +5,7 @@ tokenize:

# dataloader

context_truncate: 1024

response_truncate: 1024

-scale: 0.01

+scale: 1

# model

conv_model: Transformer

token_emb_dim: 300

diff --git a/config/conversation/transformer/gorecdial.yaml b/config/conversation/transformer/gorecdial.yaml

index c05ddb4..fd578c5 100644

--- a/config/conversation/transformer/gorecdial.yaml

+++ b/config/conversation/transformer/gorecdial.yaml

@@ -5,7 +5,7 @@ tokenize:

# dataloader

context_truncate: 1024

response_truncate: 1024

-scale: 0.01

+scale: 1

# model

conv_model: Transformer

token_emb_dim: 300

diff --git a/config/conversation/transformer/inspired.yaml b/config/conversation/transformer/inspired.yaml

index 90b86d8..fc6ea05 100644

--- a/config/conversation/transformer/inspired.yaml

+++ b/config/conversation/transformer/inspired.yaml

@@ -5,7 +5,7 @@ tokenize:

# dataloader

context_truncate: 1024

response_truncate: 1024

-scale: 0.01

+scale: 1

# model

conv_model: Transformer

token_emb_dim: 300

diff --git a/config/conversation/transformer/opendialkg.yaml b/config/conversation/transformer/opendialkg.yaml

index 2971704..208adce 100644

--- a/config/conversation/transformer/opendialkg.yaml

+++ b/config/conversation/transformer/opendialkg.yaml

@@ -5,7 +5,7 @@ tokenize:

# dataloader

context_truncate: 1024

response_truncate: 1024

-scale: 0.01

+scale: 1

# model

conv_model: Transformer

token_emb_dim: 300

diff --git a/config/conversation/transformer/redial.yaml b/config/conversation/transformer/redial.yaml

index 25f2ab4..ae1c7c6 100644

--- a/config/conversation/transformer/redial.yaml

+++ b/config/conversation/transformer/redial.yaml

@@ -5,7 +5,7 @@ tokenize:

# dataloader

context_truncate: 1024

response_truncate: 1024

-scale: 0.01

+scale: 1

# model

conv_model: Transformer

token_emb_dim: 300

diff --git a/config/crs/kbrd/durecdial.yaml b/config/crs/kbrd/durecdial.yaml

index 4a115f2..fb62d7e 100644

--- a/config/crs/kbrd/durecdial.yaml

+++ b/config/crs/kbrd/durecdial.yaml

@@ -4,7 +4,7 @@ tokenize: jieba

# dataloader

context_truncate: 1024

response_truncate: 1024

-scale: 0.1

+scale: 1

# model

model: KBRD

token_emb_dim: 300

diff --git a/config/crs/kbrd/gorecdial.yaml b/config/crs/kbrd/gorecdial.yaml

index be78096..98360a5 100644

--- a/config/crs/kbrd/gorecdial.yaml

+++ b/config/crs/kbrd/gorecdial.yaml

@@ -4,7 +4,7 @@ tokenize: nltk

# dataloader

context_truncate: 1024

response_truncate: 1024

-scale: 0.01

+scale: 1

# model

model: KBRD

token_emb_dim: 300

diff --git a/config/crs/kgsf/durecdial.yaml b/config/crs/kgsf/durecdial.yaml

index b5e8eff..481eb1f 100644

--- a/config/crs/kgsf/durecdial.yaml

+++ b/config/crs/kgsf/durecdial.yaml

@@ -5,7 +5,7 @@ embedding: word2vec.npy

# dataloader

context_truncate: 256

response_truncate: 30

-scale: 0.01

+scale: 1

# model

model: KGSF

token_emb_dim: 300

diff --git a/config/crs/kgsf/gorecdial.yaml b/config/crs/kgsf/gorecdial.yaml

index 0e4ba7e..b9b1ad1 100644

--- a/config/crs/kgsf/gorecdial.yaml

+++ b/config/crs/kgsf/gorecdial.yaml

@@ -5,7 +5,7 @@ embedding: word2vec.npy

# dataloader

context_truncate: 256

response_truncate: 30

-scale: 0.01

+scale: 1

# model

model: KGSF

token_emb_dim: 300

diff --git a/config/crs/kgsf/opendialkg.yaml b/config/crs/kgsf/opendialkg.yaml

index b9a2b06..5d3df93 100644

--- a/config/crs/kgsf/opendialkg.yaml

+++ b/config/crs/kgsf/opendialkg.yaml

@@ -5,7 +5,7 @@ embedding: word2vec.npy

# dataloader

context_truncate: 256

response_truncate: 30

-scale: 0.01

+scale: 1

# model

model: KGSF

token_emb_dim: 300

diff --git a/config/crs/kgsf/redial.yaml b/config/crs/kgsf/redial.yaml

index b6c1de0..9b235da 100644

--- a/config/crs/kgsf/redial.yaml

+++ b/config/crs/kgsf/redial.yaml

@@ -5,7 +5,7 @@ embedding: word2vec.npy

# dataloader

context_truncate: 256

response_truncate: 30

-scale: 1.0

+scale: 1

# model

model: KGSF

token_emb_dim: 300

diff --git a/config/crs/kgsf/tgredial.yaml b/config/crs/kgsf/tgredial.yaml

index a120f98..981dbcf 100644

--- a/config/crs/kgsf/tgredial.yaml

+++ b/config/crs/kgsf/tgredial.yaml

@@ -5,7 +5,7 @@ embedding: word2vec.npy

# dataloader

context_truncate: 256

response_truncate: 30

-scale: 1.0

+scale: 1

# model

model: KGSF

token_emb_dim: 300

diff --git a/config/crs/ntrd/tgredial.yaml b/config/crs/ntrd/tgredial.yaml

index 44a1c77..91f38c7 100644

--- a/config/crs/ntrd/tgredial.yaml

+++ b/config/crs/ntrd/tgredial.yaml

@@ -5,7 +5,7 @@ embedding: word2vec.npy

# dataloader

context_truncate: 256

response_truncate: 30

-scale: 1.0

+scale: 1

# model

model: NTRD

token_emb_dim: 300

diff --git a/config/crs/redial/opendialkg.yaml b/config/crs/redial/opendialkg.yaml

index 061616b..7cf0637 100644

--- a/config/crs/redial/opendialkg.yaml

+++ b/config/crs/redial/opendialkg.yaml

@@ -6,7 +6,7 @@ tokenize:

# dataloader

utterance_truncate: 80

conversation_truncate: 40

-scale: 0.01

+scale: 1

# model

# rec

rec_model: ReDialRec

diff --git a/config/crs/redial/redial.yaml b/config/crs/redial/redial.yaml

index c6e848d..2cf569f 100644

--- a/config/crs/redial/redial.yaml

+++ b/config/crs/redial/redial.yaml

@@ -6,7 +6,7 @@ tokenize:

# dataloader

utterance_truncate: 80

conversation_truncate: 40

-scale: 0.01

+scale: 1

# model

# rec

rec_model: ReDialRec

diff --git a/config/crs/redial/tgredial.yaml b/config/crs/redial/tgredial.yaml

index a87d3c5..fa388f9 100644

--- a/config/crs/redial/tgredial.yaml

+++ b/config/crs/redial/tgredial.yaml

@@ -6,7 +6,7 @@ tokenize:

# dataloader

utterance_truncate: 80

conversation_truncate: 40

-scale: 0.01

+scale: 1

# model

# rec

rec_model: ReDialRec

diff --git a/config/crs/tgredial/durecdial.yaml b/config/crs/tgredial/durecdial.yaml

index 08a96aa..0bd4e9e 100644

--- a/config/crs/tgredial/durecdial.yaml

+++ b/config/crs/tgredial/durecdial.yaml

@@ -7,7 +7,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TGRec

conv_model: TGConv

diff --git a/config/crs/tgredial/gorecdial.yaml b/config/crs/tgredial/gorecdial.yaml

index 74382ff..f766def 100644

--- a/config/crs/tgredial/gorecdial.yaml

+++ b/config/crs/tgredial/gorecdial.yaml

@@ -7,7 +7,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TGRec

conv_model: TGConv

diff --git a/config/crs/tgredial/opendialkg.yaml b/config/crs/tgredial/opendialkg.yaml

index bcfb217..563bbcc 100644

--- a/config/crs/tgredial/opendialkg.yaml

+++ b/config/crs/tgredial/opendialkg.yaml

@@ -7,7 +7,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TGRec

conv_model: TGConv

diff --git a/config/crs/tgredial/redial.yaml b/config/crs/tgredial/redial.yaml

index 8e983a0..60943d8 100644

--- a/config/crs/tgredial/redial.yaml

+++ b/config/crs/tgredial/redial.yaml

@@ -7,7 +7,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TGRec

conv_model: TGConv

diff --git a/config/iEvaLM/chatgpt/redial.yaml b/config/iEvaLM/chatgpt/redial.yaml

new file mode 100644

index 0000000..01367fc

--- /dev/null

+++ b/config/iEvaLM/chatgpt/redial.yaml

@@ -0,0 +1,11 @@

+model: ChatGPT

+tokenize: nltk

+dataset: ReDial

+api_key: your_api_key

+turn_num: 5

+rec:

+ batch_size: 1

+conv:

+ batch_size: 1

+cache_item:

+ batch_size: 1000

\ No newline at end of file

diff --git a/config/policy/pmi/tgredial.yaml b/config/policy/pmi/tgredial.yaml

index 87bb5e6..f6ba96c 100644

--- a/config/policy/pmi/tgredial.yaml

+++ b/config/policy/pmi/tgredial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

policy_model: PMI

# optim

diff --git a/config/recommendation/bert/durecdial.yaml b/config/recommendation/bert/durecdial.yaml

index 0d4250a..051e8d1 100644

--- a/config/recommendation/bert/durecdial.yaml

+++ b/config/recommendation/bert/durecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: BERT

# optim

diff --git a/config/recommendation/bert/gorecdial.yaml b/config/recommendation/bert/gorecdial.yaml

index 22ff335..cdbb30e 100644

--- a/config/recommendation/bert/gorecdial.yaml

+++ b/config/recommendation/bert/gorecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: BERT

# optim

diff --git a/config/recommendation/bert/opendialkg.yaml b/config/recommendation/bert/opendialkg.yaml

index 4b59696..ae9f12f 100644

--- a/config/recommendation/bert/opendialkg.yaml

+++ b/config/recommendation/bert/opendialkg.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: BERT

# optim

diff --git a/config/recommendation/bert/redial.yaml b/config/recommendation/bert/redial.yaml

index be5fa53..48d664c 100644

--- a/config/recommendation/bert/redial.yaml

+++ b/config/recommendation/bert/redial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: BERT

# optim

diff --git a/config/recommendation/gru4rec/durecdial.yaml b/config/recommendation/gru4rec/durecdial.yaml

index 94a5f6a..aa73472 100644

--- a/config/recommendation/gru4rec/durecdial.yaml

+++ b/config/recommendation/gru4rec/durecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: GRU4REC

gru_hidden_size: 50

diff --git a/config/recommendation/gru4rec/gorecdial.yaml b/config/recommendation/gru4rec/gorecdial.yaml

index 0d80c59..5d48dd5 100644

--- a/config/recommendation/gru4rec/gorecdial.yaml

+++ b/config/recommendation/gru4rec/gorecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.1

+scale: 1

# model

rec_model: GRU4REC

gru_hidden_size: 50

diff --git a/config/recommendation/gru4rec/opendialkg.yaml b/config/recommendation/gru4rec/opendialkg.yaml

index b4900b9..3aebaf8 100644

--- a/config/recommendation/gru4rec/opendialkg.yaml

+++ b/config/recommendation/gru4rec/opendialkg.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: GRU4REC

gru_hidden_size: 50

diff --git a/config/recommendation/gru4rec/redial.yaml b/config/recommendation/gru4rec/redial.yaml

index 7b707e7..b4f9c75 100644

--- a/config/recommendation/gru4rec/redial.yaml

+++ b/config/recommendation/gru4rec/redial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: GRU4REC

gru_hidden_size: 50

diff --git a/config/recommendation/popularity/durecdial.yaml b/config/recommendation/popularity/durecdial.yaml

index 3131e0a..ca8759a 100644

--- a/config/recommendation/popularity/durecdial.yaml

+++ b/config/recommendation/popularity/durecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: Popularity

# optim

diff --git a/config/recommendation/popularity/gorecdial.yaml b/config/recommendation/popularity/gorecdial.yaml

index bf77cd6..e6334b0 100644

--- a/config/recommendation/popularity/gorecdial.yaml

+++ b/config/recommendation/popularity/gorecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: Popularity

# optim

diff --git a/config/recommendation/popularity/opendialkg.yaml b/config/recommendation/popularity/opendialkg.yaml

index ebaf2c9..3ff691b 100644

--- a/config/recommendation/popularity/opendialkg.yaml

+++ b/config/recommendation/popularity/opendialkg.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: Popularity

# optim

diff --git a/config/recommendation/popularity/redial.yaml b/config/recommendation/popularity/redial.yaml

index b0cbec9..27ad004 100644

--- a/config/recommendation/popularity/redial.yaml

+++ b/config/recommendation/popularity/redial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: Popularity

# optim

diff --git a/config/recommendation/popularity/tgredial.yaml b/config/recommendation/popularity/tgredial.yaml

index 66c9ef7..95b9247 100644

--- a/config/recommendation/popularity/tgredial.yaml

+++ b/config/recommendation/popularity/tgredial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: Popularity

# optim

diff --git a/config/recommendation/sasrec/durecdial.yaml b/config/recommendation/sasrec/durecdial.yaml

index 15ba15e..2a32693 100644

--- a/config/recommendation/sasrec/durecdial.yaml

+++ b/config/recommendation/sasrec/durecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: SASREC

hidden_dropout_prob: 0.2

diff --git a/config/recommendation/sasrec/gorecdial.yaml b/config/recommendation/sasrec/gorecdial.yaml

index 243a646..98b6dfe 100644

--- a/config/recommendation/sasrec/gorecdial.yaml

+++ b/config/recommendation/sasrec/gorecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: SASREC

hidden_dropout_prob: 0.2

diff --git a/config/recommendation/sasrec/opendialkg.yaml b/config/recommendation/sasrec/opendialkg.yaml

index ba4c02d..0efb55e 100644

--- a/config/recommendation/sasrec/opendialkg.yaml

+++ b/config/recommendation/sasrec/opendialkg.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: SASREC

hidden_dropout_prob: 0.2

diff --git a/config/recommendation/sasrec/redial.yaml b/config/recommendation/sasrec/redial.yaml

index add69ec..2dea48e 100644

--- a/config/recommendation/sasrec/redial.yaml

+++ b/config/recommendation/sasrec/redial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: SASREC

hidden_dropout_prob: 0.2

diff --git a/config/recommendation/textcnn/durecdial.yaml b/config/recommendation/textcnn/durecdial.yaml

index 3040132..c244237 100644

--- a/config/recommendation/textcnn/durecdial.yaml

+++ b/config/recommendation/textcnn/durecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TextCNN

hidden_dropout_prob: 0.2

diff --git a/config/recommendation/textcnn/gorecdial.yaml b/config/recommendation/textcnn/gorecdial.yaml

index 6791031..f2fc0d5 100644

--- a/config/recommendation/textcnn/gorecdial.yaml

+++ b/config/recommendation/textcnn/gorecdial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TextCNN

hidden_dropout_prob: 0.2

diff --git a/config/recommendation/textcnn/opendialkg.yaml b/config/recommendation/textcnn/opendialkg.yaml

index 88eba55..5f9972b 100644

--- a/config/recommendation/textcnn/opendialkg.yaml

+++ b/config/recommendation/textcnn/opendialkg.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TextCNN

hidden_dropout_prob: 0.2

diff --git a/config/recommendation/textcnn/redial.yaml b/config/recommendation/textcnn/redial.yaml

index 0466f51..6c43c0a 100644

--- a/config/recommendation/textcnn/redial.yaml

+++ b/config/recommendation/textcnn/redial.yaml

@@ -6,7 +6,7 @@ tokenize:

context_truncate: 256

response_truncate: 30

item_truncate: 100

-scale: 0.01

+scale: 1

# model

rec_model: TextCNN

hidden_dropout_prob: 0.2

diff --git a/crslab/config/config.py b/crslab/config/config.py

index 3ba6fe7..1bcd061 100644

--- a/crslab/config/config.py

+++ b/crslab/config/config.py

@@ -38,7 +38,7 @@ def __init__(self, config_file, gpu='-1', debug=False):

# gpu

os.environ['CUDA_VISIBLE_DEVICES'] = gpu

if gpu != '-1':

- self.opt['gpu'] = [i for i in range(len(gpu.split(',')))]

+ self.opt['gpu'] = [i for i in gpu.split(',')]

else:

self.opt['gpu'] = [-1]

# dataset

diff --git a/crslab/data/__init__.py b/crslab/data/__init__.py

index 33bea19..299d4e5 100644

--- a/crslab/data/__init__.py

+++ b/crslab/data/__init__.py

@@ -66,7 +66,8 @@

'ProfileBERT': TGReDialDataLoader,

'MGCG': TGReDialDataLoader,

'PMI': TGReDialDataLoader,

- 'NTRD': NTRDDataLoader

+ 'NTRD': NTRDDataLoader,

+ 'ChatGPT': ChatGPTDataLoader

}

diff --git a/crslab/data/dataloader/__init__.py b/crslab/data/dataloader/__init__.py

index 7b4ce12..dc83a89 100644

--- a/crslab/data/dataloader/__init__.py

+++ b/crslab/data/dataloader/__init__.py

@@ -5,3 +5,4 @@

from .redial import ReDialDataLoader

from .tgredial import TGReDialDataLoader

from .ntrd import NTRDDataLoader

+from .chatgpt import ChatGPTDataLoader

diff --git a/crslab/data/dataloader/chatgpt.py b/crslab/data/dataloader/chatgpt.py

new file mode 100644

index 0000000..41dd82b

--- /dev/null

+++ b/crslab/data/dataloader/chatgpt.py

@@ -0,0 +1,60 @@

+# @Time : 2023/6/14

+# @Author : Xinyu Tang

+# @Email : txy20010310@163.com

+

+from tqdm import tqdm

+

+from crslab.data.dataloader.base import BaseDataLoader

+

+class ChatGPTDataLoader(BaseDataLoader):

+

+ def __init__(self, opt, dataset, vocab):

+ super().__init__(opt, dataset)

+

+ def process_fn(self):

+ augment_dataset = []

+ for conv_dict in tqdm(self.dataset):

+ if conv_dict['role'] == 'Recommender' and len(conv_dict['items']) > 0:

+ augment_conv_dict = {

+ 'dialog_id': conv_dict['dialog_id'],

+ 'role': conv_dict['role'],

+ 'entity': conv_dict['context_entities'],

+ 'context': conv_dict['context'],

+ 'item': conv_dict['items']

+ }

+ augment_dataset.append(augment_conv_dict)

+ return augment_dataset

+

+ def batchify(self, batch):

+ batch_dialog_id = []

+ batch_role = []

+ batch_context = []

+ batch_movies = []

+ batch_entities = []

+

+ for conv_dict in batch:

+ batch_dialog_id.append(conv_dict['dialog_id'])

+ batch_role.append(conv_dict['role'])

+ batch_context.append(conv_dict['context'])

+ batch_movies.append(conv_dict['item'])

+ batch_entities.append(conv_dict['entity'])

+

+ return {

+ 'dialog_id': batch_dialog_id,

+ 'role': batch_role,

+ 'context': batch_context,

+ 'item': batch_movies,

+ 'entity': batch_entities

+ }

+

+ def rec_process_fn(self):

+ return self.process_fn()

+

+ def rec_batchify(self, batch):

+ return self.rec_batchify(batch)

+

+ def conv_process_fn(self):

+ return self.process_fn()

+

+ def conv_batchify(self, batch):

+ return self.batchify(batch)

\ No newline at end of file

diff --git a/crslab/data/dataloader/kbrd.py b/crslab/data/dataloader/kbrd.py

index 2720c5d..182044d 100644

--- a/crslab/data/dataloader/kbrd.py

+++ b/crslab/data/dataloader/kbrd.py

@@ -58,20 +58,27 @@ def rec_process_fn(self):

for conv_dict in tqdm(self.dataset):

if conv_dict['role'] == 'Recommender':

for movie in conv_dict['items']:

- augment_conv_dict = {'context_entities': conv_dict['context_entities'], 'item': movie}

+ augment_conv_dict = {

+ 'role': conv_dict['role'],

+ 'context': conv_dict['context'],

+ 'item': movie

+ }

augment_dataset.append(augment_conv_dict)

return augment_dataset

def rec_batchify(self, batch):

- batch_context_entities = []

+ batch_role = []

+ batch_context = []

batch_movies = []

for conv_dict in batch:

- batch_context_entities.append(conv_dict['context_entities'])

+ batch_role.append(conv_dict['role'])

+ batch_context.append(conv_dict['context'])

batch_movies.append(conv_dict['item'])

return {

- "context_entities": batch_context_entities,

- "item": torch.tensor(batch_movies, dtype=torch.long)

+ "role": batch_role,

+ 'context': batch_context,

+ "item": batch_movies

}

def conv_process_fn(self, *args, **kwargs):

diff --git a/crslab/data/dataset/base.py b/crslab/data/dataset/base.py

index 6befff5..a04c672 100644

--- a/crslab/data/dataset/base.py

+++ b/crslab/data/dataset/base.py

@@ -14,6 +14,8 @@

import numpy as np

from loguru import logger

+import json

+

from crslab.download import build

@@ -134,6 +136,14 @@ def _data_preprocess(self, train_data, valid_data, test_data):

"""

pass

+

+ @abstractmethod

+ def get_attr_list(self):

+ """

+ Returns:

+ (list of str): attributes

+ """

+ pass

def _load_from_restore(self, file_name="all_data.pkl"):

"""Restore saved dataset.

diff --git a/crslab/data/dataset/durecdial/durecdial.py b/crslab/data/dataset/durecdial/durecdial.py

index ded2da6..9b0db5a 100644

--- a/crslab/data/dataset/durecdial/durecdial.py

+++ b/crslab/data/dataset/durecdial/durecdial.py

@@ -68,7 +68,7 @@ def __init__(self, opt, tokenize, restore=False, save=False):

resource = resources[tokenize]

self.special_token_idx = resource['special_token_idx']

self.unk_token_idx = self.special_token_idx['unk']

- dpath = os.path.join(DATASET_PATH, 'durecdial', tokenize)

+ dpath = os.path.join(DATASET_PATH, 'durecdial')

super().__init__(opt, dpath, resource, restore, save)

def _load_data(self):

diff --git a/crslab/data/dataset/durecdial/resources.py b/crslab/data/dataset/durecdial/resources.py

index 327ccf8..6bb858f 100644

--- a/crslab/data/dataset/durecdial/resources.py

+++ b/crslab/data/dataset/durecdial/resources.py

@@ -14,7 +14,7 @@

'jieba': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/EQ5u_Mos1JBFo4MAN8DinUQB7dPWuTsIHGjjvMougLfYaQ?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/EQ5u_Mos1JBFo4MAN8DinUQB7dPWuTsIHGjjvMougLfYaQ?download=1',

'durecdial_jieba.zip',

'c2d24f7d262e24e45a9105161b5eb15057c96c291edb3a2a7b23c9c637fd3813',

),

@@ -30,7 +30,7 @@

'bert': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/ETGpJYjEM9tFhze2VfD33cQBDwa7zq07EUr94zoPZvMPtA?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/ETGpJYjEM9tFhze2VfD33cQBDwa7zq07EUr94zoPZvMPtA?download=1',

'durecdial_bert.zip',

'0126803aee62a5a4d624d8401814c67bee724ad0af5226d421318ac4eec496f5'

),

@@ -49,7 +49,7 @@

'gpt2': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/ETxJk-3Kd6tDgFvPhLo9bLUBfVsVZlF80QCnGFcVgusdJg?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/ETxJk-3Kd6tDgFvPhLo9bLUBfVsVZlF80QCnGFcVgusdJg?download=1',

'durecdial_gpt2.zip',

'a7a93292b4e4b8a5e5a2c644f85740e625e04fbd3da76c655150c00f97d405e4'

),

diff --git a/crslab/data/dataset/gorecdial/gorecdial.py b/crslab/data/dataset/gorecdial/gorecdial.py

index 1ce9d76..fe3e288 100644

--- a/crslab/data/dataset/gorecdial/gorecdial.py

+++ b/crslab/data/dataset/gorecdial/gorecdial.py

@@ -68,7 +68,7 @@ def __init__(self, opt, tokenize, restore=False, save=False):

resource = resources[tokenize]

self.special_token_idx = resource['special_token_idx']

self.unk_token_idx = self.special_token_idx['unk']

- dpath = os.path.join(DATASET_PATH, 'gorecdial', tokenize)

+ dpath = os.path.join(DATASET_PATH, 'gorecdial')

super().__init__(opt, dpath, resource, restore, save)

def _load_data(self):

diff --git a/crslab/data/dataset/gorecdial/resources.py b/crslab/data/dataset/gorecdial/resources.py

index b31e194..030202e 100644

--- a/crslab/data/dataset/gorecdial/resources.py

+++ b/crslab/data/dataset/gorecdial/resources.py

@@ -12,11 +12,11 @@

resources = {

'nltk': {

- 'version': '0.3',

+ 'version': '0.31',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/ESM_Wc7sbAlOgZWo_6lOx34B6mboskdpNdB7FLuyXUET2A?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/ESIqjwAg0ItAu7WGfukIt3cBXjzi7AZ9L_lcbFT1aS1qYQ?download=1',

'gorecdial_nltk.zip',

- '7e523f7ca90bb32ee8f2471ac5736717c45b20822c63bd958d0546de0a9cd863',

+ '58cd368f8f83c0c8555becc314a0017990545f71aefb7e93a52581c97d1b8e9b',

),

'special_token_idx': {

'pad': 0,

@@ -29,11 +29,11 @@

},

},

'bert': {

- 'version': '0.3',

+ 'version': '0.31',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/EcTG05imCYpFiBarVfnsAfkBVsbq1iPw23CYcp9kYE9X4g?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/Ed1HT8gzvRpDosVT83BEj5QBnzKpjR3Zbf5u49yyWP-k6Q?download=1',

'gorecdial_bert.zip',

- 'fc7aff18504f750d8974d90f2941a01ff22cc054283124936b778ba91f03554f'

+ '4fa10c3fe8ba538af0f393c99892739fcb376d832616aa7028334c594b3fec10'

),

'special_token_idx': {

'pad': 0,

@@ -48,11 +48,11 @@

}

},

'gpt2': {

- 'version': '0.3',

+ 'version': '0.31',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/Edg4_nbKA49HnQPcd65gPdoBALPADQd4V5qVqOrUub2m9w?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/EUJOHmX8v79DkZMq0x5r9d4B0UJlfw85v-VdciwKfAhpng?download=1',

'gorecdial_gpt2.zip',

- '7234138dcc27ed00bdac95da4096cd435023c229d227fa494d2bd7a653a492a9'

+ '44a15637e014b2e6628102ff654e1aef7ec1cbfa34b7ada1a03f294f72ddd4b1'

),

'special_token_idx': {

'pad': 0,

diff --git a/crslab/data/dataset/inspired/inspired.py b/crslab/data/dataset/inspired/inspired.py

index 73930f1..c44f0d7 100644

--- a/crslab/data/dataset/inspired/inspired.py

+++ b/crslab/data/dataset/inspired/inspired.py

@@ -68,7 +68,7 @@ def __init__(self, opt, tokenize, restore=False, save=False):

resource = resources[tokenize]

self.special_token_idx = resource['special_token_idx']

self.unk_token_idx = self.special_token_idx['unk']

- dpath = os.path.join(DATASET_PATH, 'inspired', tokenize)

+ dpath = os.path.join(DATASET_PATH, 'inspired')

super().__init__(opt, dpath, resource, restore, save)

def _load_data(self):

diff --git a/crslab/data/dataset/inspired/resources.py b/crslab/data/dataset/inspired/resources.py

index afb0cb1..504a760 100644

--- a/crslab/data/dataset/inspired/resources.py

+++ b/crslab/data/dataset/inspired/resources.py

@@ -14,7 +14,7 @@

'nltk': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/EdDgeChYguFLvz8hmkNdRhABmQF-LBfYtdb7rcdnB3kUgA?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/EdDgeChYguFLvz8hmkNdRhABmQF-LBfYtdb7rcdnB3kUgA?download=1',

'inspired_nltk.zip',

'776cadc7585abdbca2738addae40488826c82de3cfd4c2dc13dcdd63aefdc5c4',

),

@@ -30,7 +30,7 @@

'bert': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/EfBfyxLideBDsupMWb2tANgB6WxySTPQW11uM1F4UV5mTQ?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/EfBfyxLideBDsupMWb2tANgB6WxySTPQW11uM1F4UV5mTQ?download=1',

'inspired_bert.zip',

'9affea30978a6cd48b8038dddaa36f4cb4d8491cf8ae2de44a6d3dde2651f29c'

),

@@ -48,9 +48,9 @@

'gpt2': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/EVwbqtjDReZHnvb_l9TxaaIBAC63BjbqkN5ZKb24Mhsm_A?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/EVwbqtjDReZHnvb_l9TxaaIBAC63BjbqkN5ZKb24Mhsm_A?download=1',

'inspired_gpt2.zip',

- '23bb4ce3299186630fdf673e17f43ee43e91573ea786c922e3527e4c341a313c'

+ '261ad7e5325258d5cb8ffef0751925a58270fb6d9f17490f8552f6b86ef1eed2'

),

'special_token_idx': {

'pad': 0,

diff --git a/crslab/data/dataset/opendialkg/opendialkg.py b/crslab/data/dataset/opendialkg/opendialkg.py

index 8582705..102fd8b 100644

--- a/crslab/data/dataset/opendialkg/opendialkg.py

+++ b/crslab/data/dataset/opendialkg/opendialkg.py

@@ -69,7 +69,7 @@ def __init__(self, opt, tokenize, restore=False, save=False):

resource = resources[tokenize]

self.special_token_idx = resource['special_token_idx']

self.unk_token_idx = self.special_token_idx['unk']

- dpath = os.path.join(DATASET_PATH, 'opendialkg', tokenize)

+ dpath = os.path.join(DATASET_PATH, 'opendialkg')

super().__init__(opt, dpath, resource, restore, save)

def _load_data(self):

@@ -271,3 +271,7 @@ def _word_kg_process(self):

'edge': list(edges),

'entity': list(entities)

}

+

+ def get_attr_list(self):

+ attr_list = ['genre', 'actor', 'director', 'writer']

+ return attr_list

\ No newline at end of file

diff --git a/crslab/data/dataset/opendialkg/resources.py b/crslab/data/dataset/opendialkg/resources.py

index e00ddfc..9f7fb62 100644

--- a/crslab/data/dataset/opendialkg/resources.py

+++ b/crslab/data/dataset/opendialkg/resources.py

@@ -14,9 +14,9 @@

'nltk': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/ESB7grlJlehKv7XmYgMgq5AB85LhRu_rSW93_kL8Arfrhw?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/ESB7grlJlehKv7XmYgMgq5AB85LhRu_rSW93_kL8Arfrhw?download=1',

'opendialkg_nltk.zip',

- '6487f251ac74911e35bec690469fba52a7df14908575229b63ee30f63885c32f',

+ '6487f251ac74911e35bec690469fba52a7df14908575229b63ee30f63885c32f'

),

'special_token_idx': {

'pad': 0,

@@ -30,7 +30,7 @@

'bert': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/EWab0Pzgb4JOiecUHZxVaEEBRDBMoeLZDlStrr7YxentRA?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/EWab0Pzgb4JOiecUHZxVaEEBRDBMoeLZDlStrr7YxentRA?download=1',

'opendialkg_bert.zip',

'0ec3ff45214fac9af570744e9b5893f224aab931744c70b7eeba7e1df13a4f07'

),

@@ -48,7 +48,7 @@

'gpt2': {

'version': '0.3',

'file': DownloadableFile(

- 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pku_edu_cn/EdE5iyKIoAhLvCwwBN4MdJwB2wsDADxJCs_KRaH-G3b7kg?download=1',

+ 'https://pkueducn-my.sharepoint.com/:u:/g/personal/franciszhou_pkueducn_onmicrosoft_com/EdE5iyKIoAhLvCwwBN4MdJwB2wsDADxJCs_KRaH-G3b7kg?download=1',

'opendialkg_gpt2.zip',

'dec20b01247cfae733988d7f7bfd1c99f4bb8ba7786b3fdaede5c9a618c6d71e'

),

diff --git a/crslab/data/dataset/redial/redial.py b/crslab/data/dataset/redial/redial.py

index cb6e47b..6ceb036 100644

--- a/crslab/data/dataset/redial/redial.py

+++ b/crslab/data/dataset/redial/redial.py

@@ -7,6 +7,11 @@

# @Author : Kun Zhou, Xiaolei Wang, Yuanhang Zhou

# @Email : francis_kun_zhou@163.com, wxl1999@foxmail.com, sdzyh002@gmail

+# UPDATE:

+# @Time : 2023/6/14

+# @Author : Xinyu Tang

+# @Email : txy20010310@163.com

+

r"""

ReDial

======

@@ -19,6 +24,7 @@

"""

import json

+import re

import os

from collections import defaultdict

from copy import copy

@@ -69,21 +75,21 @@ def __init__(self, opt, tokenize, restore=False, save=False):

resource = resources[tokenize]

self.special_token_idx = resource['special_token_idx']

self.unk_token_idx = self.special_token_idx['unk']

- dpath = os.path.join(DATASET_PATH, "redial", tokenize)

+ dpath = os.path.join(DATASET_PATH, opt['dataset'])

super().__init__(opt, dpath, resource, restore, save)

def _load_data(self):

train_data, valid_data, test_data = self._load_raw_data()

- self._load_vocab()

+ # self._load_vocab()

self._load_other_data()

vocab = {

- 'tok2ind': self.tok2ind,

- 'ind2tok': self.ind2tok,

+ # 'tok2ind': self.tok2ind,

+ # 'ind2tok': self.ind2tok,

'entity2id': self.entity2id,

'id2entity': self.id2entity,

'word2id': self.word2id,

- 'vocab_size': len(self.tok2ind),

+ # 'vocab_size': len(self.tok2ind),

'n_entity': self.n_entity,

'n_word': self.n_word,

}

@@ -93,14 +99,28 @@ def _load_data(self):

def _load_raw_data(self):

# load train/valid/test data

- with open(os.path.join(self.dpath, 'train_data.json'), 'r', encoding='utf-8') as f:

- train_data = json.load(f)

+ train_data = []

+ valid_data = []

+ test_data = []

+

+ with open(os.path.join(self.dpath, 'train_data.jsonl'), 'r', encoding='utf-8') as f:

+ lines = f.readlines()

+ for line in lines:

+ data = json.loads(line)

+ train_data.append(data)

logger.debug(f"[Load train data from {os.path.join(self.dpath, 'train_data.json')}]")

- with open(os.path.join(self.dpath, 'valid_data.json'), 'r', encoding='utf-8') as f:

- valid_data = json.load(f)

+ with open(os.path.join(self.dpath, 'valid_data.jsonl'), 'r', encoding='utf-8') as f:

+ lines = f.readlines()

+ for line in lines:

+ data = json.loads(line)

+ valid_data.append(data)

+ valid_data.append(data)

logger.debug(f"[Load valid data from {os.path.join(self.dpath, 'valid_data.json')}]")

- with open(os.path.join(self.dpath, 'test_data.json'), 'r', encoding='utf-8') as f:

- test_data = json.load(f)

+ with open(os.path.join(self.dpath, 'test_data.jsonl'), 'r', encoding='utf-8') as f:

+ lines = f.readlines()

+ for line in lines:

+ data = json.loads(line)

+ test_data.append(data)

logger.debug(f"[Load test data from {os.path.join(self.dpath, 'test_data.json')}]")

return train_data, valid_data, test_data

@@ -120,7 +140,7 @@ def _load_other_data(self):

self.id2entity = {idx: entity for entity, idx in self.entity2id.items()}

self.n_entity = max(self.entity2id.values()) + 1

# {head_entity_id: [(relation_id, tail_entity_id)]}

- self.entity_kg = json.load(open(os.path.join(self.dpath, 'dbpedia_subkg.json'), 'r', encoding='utf-8'))

+ self.entity_kg = json.load(open(os.path.join(self.dpath, 'kg.json'), 'r', encoding='utf-8'))

logger.debug(

f"[Load entity dictionary and KG from {os.path.join(self.dpath, 'entity2id.json')} and {os.path.join(self.dpath, 'dbpedia_subkg.json')}]")

@@ -132,6 +152,12 @@ def _load_other_data(self):

self.word_kg = open(os.path.join(self.dpath, 'conceptnet_subkg.txt'), 'r', encoding='utf-8')

logger.debug(

f"[Load word dictionary and KG from {os.path.join(self.dpath, 'concept2id.json')} and {os.path.join(self.dpath, 'conceptnet_subkg.txt')}]")

+ with open(os.path.join(self.dpath, 'id2info.json'), 'r', encoding='utf-8') as f:

+ id2info = json.load(f)

+ self.id2name = {}

+ for id, info in id2info.items():

+ self.id2name[id] = info['name']

+

def _data_preprocess(self, train_data, valid_data, test_data):

processed_train_data = self._raw_data_process(train_data)

@@ -145,49 +171,69 @@ def _data_preprocess(self, train_data, valid_data, test_data):

return processed_train_data, processed_valid_data, processed_test_data, processed_side_data

def _raw_data_process(self, raw_data):

- augmented_convs = [self._merge_conv_data(conversation["dialog"]) for conversation in tqdm(raw_data)]

+ augmented_convs = [self._merge_conv_data(conversation) for conversation in tqdm(raw_data)]

augmented_conv_dicts = []

for conv in tqdm(augmented_convs):

augmented_conv_dicts.extend(self._augment_and_add(conv))

return augmented_conv_dicts

def _merge_conv_data(self, dialog):

+ movie_pattern = re.compile(r'@\d+')

augmented_convs = []

last_role = None

- for utt in dialog:

- text_token_ids = [self.tok2ind.get(word, self.unk_token_idx) for word in utt["text"]]

- movie_ids = [self.entity2id[movie] for movie in utt['movies'] if movie in self.entity2id]

+ user_id = dialog['initiatorWorkerId']

+ recommender_id = dialog['respondentWorkerId']

+ conversation_id = dialog['conversationId']

+

+ for utt in dialog["messages"]:

+ # text_token_ids = [self.tok2ind.get(word, self.unk_token_idx) for word in utt["text"]]

+ turn_id = utt['turn_id']

+ dialog_turn_id = str(conversation_id) + '_' + str(turn_id)

+

+ text = utt['text']

+ for pattern in re.findall(movie_pattern, text):

+ if pattern.strip('@') in self.id2name:

+ text = text.replace(pattern, self.id2name[pattern.strip('@')])

+ movie_ids = [self.entity2id[movie] for movie in utt['item'] if movie in self.entity2id]

entity_ids = [self.entity2id[entity] for entity in utt['entity'] if entity in self.entity2id]

word_ids = [self.word2id[word] for word in utt['word'] if word in self.word2id]

-

- if utt["role"] == last_role:

- augmented_convs[-1]["text"] += text_token_ids

- augmented_convs[-1]["movie"] += movie_ids

+

+ role_id = utt['senderWorkerId']

+ if role_id == recommender_id:

+ role = 'Recommender'

+ elif role_id == user_id:

+ role = 'User'

+

+ if role == last_role:

+ augmented_convs[-1]["text"] += text

+ augmented_convs[-1]["item"] += movie_ids

augmented_convs[-1]["entity"] += entity_ids

augmented_convs[-1]["word"] += word_ids

else:

augmented_convs.append({

- "role": utt["role"],

- "text": text_token_ids,

+ "dialog_id": dialog_turn_id,

+ "role": role,

+ "text": text,

"entity": entity_ids,

- "movie": movie_ids,

+ "item": movie_ids,

"word": word_ids

})

- last_role = utt["role"]

+ last_role = role

return augmented_convs

def _augment_and_add(self, raw_conv_dict):

augmented_conv_dicts = []

- context_tokens, context_entities, context_words, context_items = [], [], [], []

+ context, context_entities, context_words, context_items = [], [], [], []

entity_set, word_set = set(), set()

for i, conv in enumerate(raw_conv_dict):

- text_tokens, entities, movies, words = conv["text"], conv["entity"], conv["movie"], conv["word"]

- if len(context_tokens) > 0:

+ text, entities, movies, words = conv["text"], conv["entity"], conv["item"], conv["word"]

+ if len(context) > 0:

conv_dict = {

+ "dialog_id": conv['dialog_id'],

"role": conv['role'],

- "context_tokens": copy(context_tokens),

- "response": text_tokens,

+ "context": copy(context),

+ "response": text,

"context_entities": copy(context_entities),

"context_words": copy(context_words),

"context_items": copy(context_items),

@@ -195,7 +241,7 @@ def _augment_and_add(self, raw_conv_dict):

}

augmented_conv_dicts.append(conv_dict)

- context_tokens.append(text_tokens)

+ context.append(text)

context_items += movies

for entity in entities + movies:

if entity not in entity_set:

@@ -213,7 +259,7 @@ def _side_data_process(self):

logger.debug("[Finish entity KG process]")

processed_word_kg = self._word_kg_process()

logger.debug("[Finish word KG process]")

- movie_entity_ids = json.load(open(os.path.join(self.dpath, 'movie_ids.json'), 'r', encoding='utf-8'))

+ movie_entity_ids = json.load(open(os.path.join(self.dpath, 'item_ids.json'), 'r', encoding='utf-8'))

logger.debug('[Load movie entity ids]')

side_data = {

@@ -266,3 +312,36 @@ def _word_kg_process(self):

'edge': list(edges),

'entity': list(entities)

}

+

+ def get_attr_list(self):

+ attr_list = ['genre', 'star', 'director']

+ return attr_list

+

+ def get_ask_instruction(self):

+ ask_instruction = '''To recommend me items that I will accept, you can choose one of the following options.

+A: ask my preference for genre

+B: ask my preference for actor

+C: ask my preference for director

+D: I can directly give recommendations

+Please enter the option character. Please only response a character.'''

+ option2attr = {

+ 'A': 'genre',

+ 'B': 'star',

+ 'C': 'director',

+ 'D': 'recommend'

+ }

+ option2template = {

+ 'A': 'Which genre do you like?',

+ 'B': 'Which star do you like?',

+ 'C': 'Which director do you like?',

+ }