pyg2p is a converter between GRIB and netCDF4/PCRaster files. It can also manipulates GRIB messages (performing aggregation or simple unit conversion) before applying the format conversion.

To install package, you can use a python virtual environment or directly install dependencies and package at system level (executable script will be saved into /usr/local/bin in this case).

IMPORTANT: Before to launch setup, you need to configure geopotentials and intertables paths in configuration/global/global_conf.json. These paths are used by pyg2p to read geopotentials and intertables already configured. You may need to download files from FTP (launch

pyg2p -Wfor this). Users running pyg2p installed by a different user (ie. root) will configure similar paths for their own intertables and geopotentials under his home folder. These paths will need write permissions.

Grab last archive and extract it in a folder (or clone this repository) and follow these steps:

$ cd pyg2p

$ vim configuration/global/global_conf.json # to edit shared paths !!!

$ python setup.py installAfter installation, you will have all dependencies installed and an executable script 'pyg2p' (in a virtual environment, script is located under <VIRTUALENV_PATH>/bin folder otherwise under /usr/local/bin). Some python packages can be problematic to install at first shot. Read following paragraph for details.

One of the things to configure for any user running pyg2p, is GEOPOTENTIALS and INTERTABLES variables with paths to folders with write permissions.

NOTE: From version 2.1, the user needs to setup these variables only if she/he needs to write new interpolation tables (option -B [-X]) or to add new geopotentials from grib files (option -g).

These variables contains user paths and must be configured in a .conf file (e.g. paths.conf) under ~/.pyg2p/ directory. This can look like:

GEOPOTENTIALS=/dataset/pyg2p_data_user/geopotentials

INTERTABLES=/dataset/pyg2p_data_user/intertables

User intertables (for interpolation) are read/write from INTERTABLES and geopotentials (for

correction) are read from GEOPOTENTIALS.

Pyg2p will use its default configuration for available intertables and geopotentials. These are read

from paths configured during installation in global_conf.json.

If you need to download default files from ECMWF FTP, just launch pyg2p with -W option and the

dataset argument (argument can be geopotentials or intertables) and files are saved in user

paths configured above:

$ pyg2p -W intertablesYou can edit FTP authentication parameters in ~/.pyg2p/ftp.json

User json configuration files are empty. If you need a new parameter or geopotential that is not configured internally in pyg2p, you can setup new items (or overwrite internal configuration).

If you are extracting a parameter with shortName xy from a grib file that is not already globally configured, add an element as shown below (only part in bold has been added):

{

"xy": {

"@description": "Variable description",

"@shortName": "xy",

"@unit": "unitstring"

}

}You can configure (more than) a conversion element with different ids and functions. You will use shortName and conversionId in the execution JSON templates.

{

"xy": { "@description": "Variable description",

"@shortName": "xy",

"@unit": "unitstring"

},

"xyz": {

"@description": "Variable description",

"@shortName": "xyz",

"@unit": "unitstring/unistring2",

"Conversion": [

{

"@cutOffNegative": true,

"@function": "x=x*(-0.13)**2",

"@id": "conv_xyz1",

"@unit": "g/d"

},

{

"@cutOffNegative": true,

"@function": "x=x/5466 - (x**2)",

"@id": "conv_xyz2",

"@unit": "g/h"

}

]

}

}Note: Aware the syntax of conversion functions. They must start with x= followed by the actual conversion formula where x is the value to convert. Units are only used for logging.

If the input grib file has a geopotential message, pyg2p will use it for correction. Otherwise, it will read the file from user data paths or global data paths. To add a geopotential GRIB file to pyg2p configuration, use this command:

$ pyg2p -g path_to_geopotential_gribThis will copy the file to folder defined in GEOPOTENTIALS variable and will update

geopotentials.json with the new item.

Interpolation tables are read from user or global data folders. If the table is missing, it will create it into user data folder for future interpolations (you must pass -B option to pyg2p). Depending on source and target grids size, and on interpolation method, table creation can take from minutes to days. To speed up interpolation table creation, use parallel option -X to have up to x6 speed gain.

Execution templates are JSON files that you will use to configure a conversion. You will pass path to

the file to pyg2p with command line option -c.

Most of options can be both defined in this JSON file and from command line.

Note that command line options overwrite JSON template.

If you have a large set of conversions for same parameters, it's more convenient to define a single template where you define parameter, interpolation and aggregation and pass the rest of parameters from command line. Here some examples of JSON commands files:

{

"Execution": {

"@name": "Octahedral test 1",

"Aggregation": {

"@step": 24,

"@type": "average"

},

"OutMaps": {

"@cloneMap": "/dataset/maps/europe5km/dem.map",

"@ext": 1,

"@fmap": 1,

"@namePrefix": "t2",

"@unitTime": 24,

"Interpolation": {

"@latMap": "/dataset/maps/europe5km/lat.map",

"@lonMap": "/dataset/maps/europe5km/long.map",

"@mode": "grib_nearest"

}

},

"Parameter": {

"@applyConversion": "k2c",

"@correctionFormula": "p+gem-dem*0.0065",

"@demMap": "/dataset/maps/europe5km/dem.map",

"@gem": "(z/9.81)*0.0065",

"@shortName": "2t"

}

}

}There are four sections of configuration.

Defines the aggregation method and step. Method can be accumulation, average or instantaneous.

Here you define interpolation method and paths to coordinates PCRaster maps, output unit time, the clone map etc.

This is a subelement of OutMaps. Here you define interpolation method (see later for details), paths to coordinates maps.

In this section, you configure the parameter to select by using its shortName, as stored in GRIB file.

You also configure conversion with applyConversion property set to a conversion id. Parameter

shortName must be already configured in ~/.pyg2p/parameters.json along with conversion ids.

If you need to apply correction based on DEM files and geopotentials, you can configure formulas

and the path to DEM map.

You can use variables in JSON files to define paths. Variables can be configured in .conf files under

~/.pyg2p/ folder.

/home/domenico/.pyg2p/myconf.conf

EUROPE_MAPS=/dataset/maps/europe5km

DEM_MAP=/dataset/maps/dem05.map

EUROPE_DEM=/dataset/maps/europe/dem.map

EUROPE_LAT=/dataset/maps/europe/lat.map

EUROPE_LON=/dataset/maps/europe/long.mapUsage of user defined paths in JSON command file:

{

"Execution": {

"@name": "eue_t24",

"Aggregation": {

"@step": 24,

"@type": "average"

},

"OutMaps": {

"@format": "netcdf",

"@cloneMap": "{EUROPE_MAPS}/lat.map",

"@ext": 1,

"@fmap": 1,

"@namePrefix": "pT24",

"@unitTime": 24,

"Interpolation": {

"@latMap": "{EUROPE_MAPS}/lat.map",

"@lonMap": "{EUROPE_MAPS}/long.map",

"@mode": "grib_nearest"

}

},

"Parameter": {

"@applyConversion": "k2c",

"@correctionFormula": "p+gem-dem*0.0065",

"@demMap": "{DEM_MAP}",

"@gem": "(z/9.81)*0.0065",

"@shortName": "2t"

}

}

}| Section | Attribute | Description |

|---|---|---|

| Execution | name | Descriptive name of the execution configuration. |

| Parameter | See relative table | |

| OutMaps | See relative table | |

| Parameter | See relative table | |

| Parameter | shortName | The short name of the parameter, as it is in the grib file. The application use this to select messages. Must be configured in the parameters.json file, otherwise the application exits with an error. |

| tstart | Optional grib selectors perturbationNumber, tstart, tend, dataDate and dataTime can also be issued via command line arguments (-m, -s, -e, -D, -T), which overwrite the ones in the execution JSON file. | |

| tend | ||

| perturbationNumber | ||

| dataDate | ||

| dataTime | ||

| level | ||

| applyConversion | The conversion id to apply, as in the parameters.json file for the parameter to select. The combination parameter/conversion must be properly configured in parmaters.json file, otherwise the application exits with an error. | |

| correctionFormula | Formula to use for parameter correction with p, gem, dem variables, representing parameter value, converted geopotential to gem, and DEM map value. E.g.: p+gem*0.0065-dem*0.0065 | |

| demMap | The dem map used for correction. | |

| gem | Formula for geopotential conversion for correction. | |

| OutMaps | cloneMap | The clone map with area (must have a REAL cell type and missing values for points outside area of interest. A dem map works fine. A typical area boolean map will not). |

| unitTime | Time unit in hours of output maps. Tipical value is 24 (daily maps). | |

| format | Output file format. Default 'pcraster'. Available formats are 'pcraster', 'netcdf'. | |

| namePrefix | Prefix for maps. Default is parameter shortName. | |

| fmap | First PCRaster map number. Default 1. | |

| Interpolation | See relative table. | |

| ext | Extension mode. It's the integer number defining the step numbers to skip when writing maps. Same as old grib2pcraster. Default 1. | |

| Aggregation | step | Step of aggregation in hours. |

| type | Type of aggregation (it was Manipulation in grib2pcraster). It can be average or accumulation. | |

| forceZeroArray | Optional. In case of “accumulation”, and only then, if this attribute is set to”y” (or any value different from “false”, “False”, “FALSE”, “no”, “NO”, “No”, “0”), the program will use a zero array as message at step 0 to compute the first map, even if the GRIB file has a step 0 message. | |

| Interpolation | mode | Interpolation mode. Possible values are: “nearest”, “invdist”, “grib_nearest”, “grib_invdist” |

| latMap | PCRaster map of target latitudes. | |

| lonMap | PCRaster map of target longitudes. | |

| intertableDir | Alternative home folder for interpolation lookup tables, where pyg2p will load/save intertables. Folder must be existing. If not set, pyg2p will use intertables from ~/.pyg2p/intertables/ | |

To use the application, after the main configuration you need to configure a template JSON file for each type of extraction you need to perform.

To configure the application and compile your JSON templates, you might need to know the variable shortName as stored in the input GRIB file you're using or in the geopotential GRIB. Just execute the following GRIB tool command:

grib_get -p shortName /path/to/grib

Other keys you would to know for configuration or debugging purposes are:

- startStep

- endStep (for instantaneous messages, it can be the same of startStep)

- perturbationNumber (the EPS member number)

- stepType (type of field: instantaneous: 'instant', average: 'avg', cumulated: 'cumul')

- longitudeOfFirstGridPointInDegrees

- longitudeOfLastGridPointInDegrees

- latitudeOfFirstGridPointInDegrees

- latiitudeOfLastGridPointInDegrees

- Ni (it can be missing)

- Nj (it states the resolution: it's the number of points along the meridian)

- numberOfValues

- gridType (e.g.: regular_ll, reduced_gg, rotated_ll)

If you run pyg2p without arguments, it shows help of all input arguments.

usage: pyg2p [-h] [-c json_file] [-o out_dir] [-i input_file]

[-I input_file_2nd] [-s tstart] [-e tend] [-m eps_member]

[-T data_time] [-D data_date] [-f fmap] [-F format] [-x extension_step]

[-n outfiles_prefix] [-l log_level] [-N intertable_dir] [-B] [-X]

[-t cmds_file] [-g geopotential] [-C path] [-z path] [-W dataset]

Execute the grib to pcraster conversion using parameters from the input json configuration.

Read user manual.

optional arguments:

-h, --help show this help message and exit

-c json_file, --commandsFile json_file

Path to json command file

-o out_dir, --outDir out_dir

Path where output maps will be created.

-i input_file, --inputFile input_file

Path to input grib.

-I input_file_2nd, --inputFile2 input_file_2nd

Path to 2nd resolution input grib.

-s tstart, --start tstart

Grib timestep start. It overwrites the tstart in json

execution file.

-e tend, --end tend Grib timestep end. It overwrites the tend in json

execution file.

-m eps_member, --perturbationNumber eps_member

eps member number

-T data_time, --dataTime data_time

To select messages by dataTime key value

-D data_date, --dataDate data_date

<YYYYMMDD> to select messages by dataDate key value

-f fmap, --fmap fmap First map number

-F format, --format format

Output format. Available options: netcdf, pcraster.

Default pcraster

-x extension_step, --ext extension_step

Extension number step

-n outfiles_prefix, --namePrefix outfiles_prefix

Prefix name for maps

-l log_level, --loggerLevel log_level

Console logging level

-N intertable_dir, --intertableDir intertable_dir

interpolation tables dir

-B, --createIntertable

create intertable file

-X, --interpolationParallel

Use parallelization tools to make interpolation

faster.If -B option is not passed or intertable

already exists it does not have any effect.

-t cmds_file, --test cmds_file

Path to a text file containing list of commands,

defining a battery of tests. Then it will create diff

pcraster maps and log alerts if differences are higher

than a threshold (edit configuration in test.json)

-g geopotential, --addGeopotential geopotential

Add the file to geopotentials.json configuration file, to use for correction. The file will be copied into

the right folder (configuration/geopotentials) Note:

shortName of geopotential must be "fis" or "z"

-C path, --convertConf path

Convert old xml configuration to new json format

-z path, --convertIntertables path

Convert old pyg2p intertables to new version and copy

to user folders

-W dataset, --downloadConf dataset

Download intertables and geopotentials (FTP settings

defined in ftp.json)pyg2p -c ./exec1.json -i ./input.grib -o /out/dir -s 12 -e 36 -F netcdf

pyg2p -c ./exec2.json -i ./input.grib -o /out/dir -m 10 -l INFO --format netcdf

pyg2p -c ./exec3.json -i ./input.grib -I /input2ndres.grib -o /out/dir -m 10 -l DEBUG

pyg2p -g /path/to/geopotential/grib/file # add geopotential to configuration

pyg2p -t /path/to/test/commands.txt

pyg2p -hNote: Even if 'netcdf' format is used for output, paths to PCRaster clone/area,

latitudes and longitudes maps have to be setup in any case.

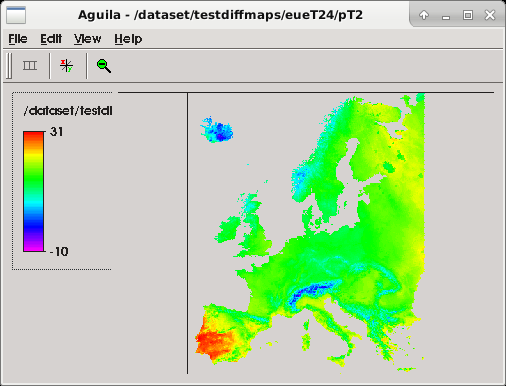

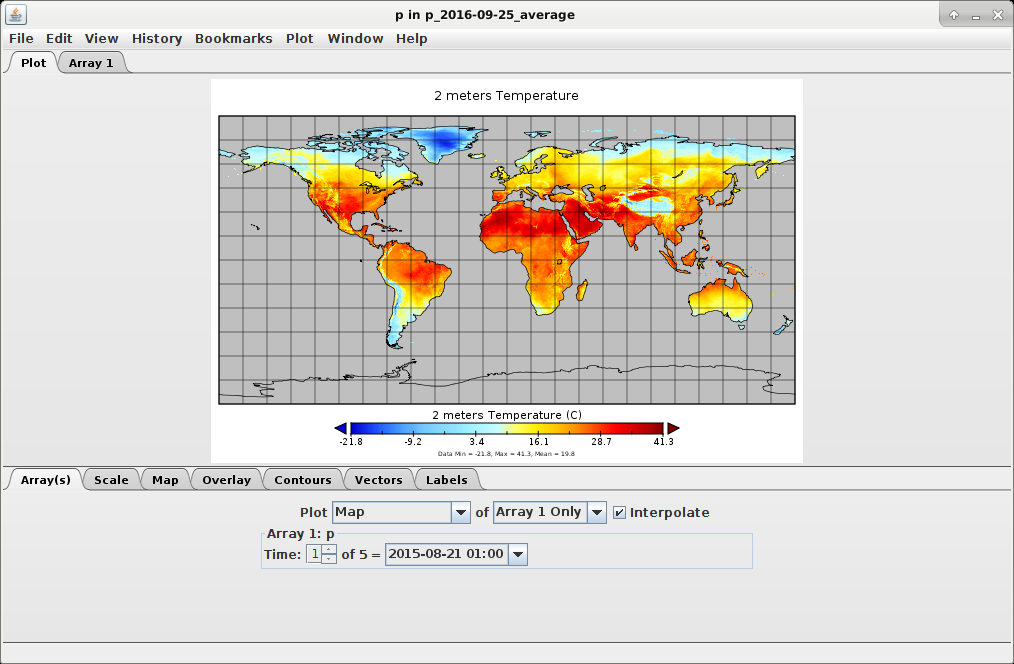

After the execution, you can check output maps by using the PCRaster2 Aguila viewer for PCRaster maps or the NASA Panoply3 viewer for NetCDF files.

aguila /dataset/testdiffmaps/eueT24/pT240000.001

./panoply.sh /dataset/octahedral/out/grib_vs_scipy/global/ta/p_2016-09-25_average.nc

Maps will be written in the folder specified by -o input argument. If this is missing, you will find maps in the folder where you launched the application (./). Refer to official documentation for further information about Aguila and Panoply.

Interpolation is configured in JSON execution templates using the Interpolation attribute inside OutMaps. There are four interpolation methods available. Two are using GRIB_API nearest neighbours routines while the other two leverage on Scipy kd_tree module.

Note: GRIB_API does not implement nearest neighbours routing for rotated grids.

You have to use scipy methods and regular target grids (i.e.: latitudes and

longitudes PCRaster maps).

Interpolation will use precompiled intertables. They will be found in the path configured in

INTERTABLES folder (take into account that can potentially contains gigabytes of files) or in global

data path. You can also define an alternate intertables directory with -N argument (or

@intertableDir attribute in JSON template).

If interlookup table doesn't exist, the application will create one into INTERTABLES folder and

update intertables.json configuration only if -B option is passed, otherwise program will exit.

Be aware that for certain combination of grid and maps, the creation of the interlookup table (which

is a numpy array saved in a binary file) could take several hours or even days for GRIB interpolation

methods.

To have better performances (up to x6 of gain) you can pass -X option to enable parallel processing.

Performances are not comparable with scipy based interpolation (seconds or minutes) but this option could not be viable for all GRIB inputs.

To configure the interpolation method for conversion, set the @mode attribute in Execution/OutMaps/Interpolation property.

This method uses GRIB API to perform nearest neighbour query. To configure this method, define:

{"Interpolation": {

"@latMap": "/dataset/maps/europe5km/lat.map",

"@lonMap": "/dataset/maps/europe5km/long.map",

"@mode": "grib_nearest"}

}It uses GRIB_API to query for four neighbours and relative distances. It applies inverse distance calculation to compute the final value. To configure this method:

{

"Interpolation": {

"@latMap": "/dataset/maps/europe5km/lat.map",

"@lonMap": "/dataset/maps/europe5km/long.map",

"@mode": "grib_invdist"}

}It's the same nearest neighbour algorithm of grib_nearest but it uses the scipy kd_tree4 module to obtain neighbours and distances.

{

"Interpolation": {

"@latMap": "/dataset/maps/europe5km/lat.map",

"@lonMap": "/dataset/maps/europe5km/long.map",

"@mode": "nearest"}

}It's the inverse distance algorithm with scipy.kd_tree , using 8 neighbours.

{

"Interpolation": {

"@latMap": "/dataset/maps/europe5km/lat.map",

"@lonMap": "/dataset/maps/europe5km/long.map",

"@mode": "invdist"}

}Attributes p, leafsize and eps for the kd tree algorithm are default in scipy library:

| Attribute | Details |

|---|---|

| p | 2 (Euclidean metric) |

| eps | 0 |

| leafsize | 10 |

Interpolation is configured under the OutMaps tag. With additional attributes, you also configure resulting PCRaster maps. Output dir is ./ by default or you can set it via command line using the option -o (--outDir).

| Attribute | Details |

|---|---|

| namePrefix | Prefix name for output map files. Default is the value of shortName key. |

| unitTime | Unit time in hours for results. This is used during aggregation operations. |

| fmap | Extension number for the first map. Default 1. |

| ext | Extension mode. It's the integer number defining the step numbers to skip when writing maps. Same as old grib2pcraster. Default 1. |

| cloneMap | Path to a PCRaster clone map, needed by PCRaster libraries to write a new map on disk. |

Values from grib files can be aggregated before to write the final PCRaster maps. There are two kinds of aggregation available: average and accumulation. The JSON configuration in the execution file will look like:

{

"Aggregation": {

"@type": "average"}

}To better understand what these two types of aggregations do, the DEBUG output of execution is presented later in same paragraph.

Temperature forecast data are usually instantaneous values. Coversely, many environmental models require average temperature values over a pre-defined time span (e.g. daily temperature values). The average function implemented within pyg2p allows to compute the average of instantaneous values: the time span for the computation of the average is defined by the user.

Here's a typical execution configuration and the output of interest:

cosmo_t24.json

{

"Execution": {

"@name": "cosmo_T24",

"Aggregation": {

"@step": 24,

"@type": "average"

},

"OutMaps": {

"@cloneMap": "/dataset/maps/europe/dem.map",

"@ext": 4,

"@fmap": 1,

"@namePrefix": "T24",

"@unitTime": 24,

"Interpolation": {

"@latMap": "/dataset/maps/europe/lat.map",

"@lonMap": "/dataset/maps/europe/lon.map",

"@mode": "nearest"

}

},

"Parameter": {

"@applyConversion": "k2c",

"@correctionFormula": "p+gem-dem*0.0065",

"@demMap": "/dataset/maps/europe/dem.map",

"@gem": "(z/9.81)*0.0065",

"@shortName": "2t"

}

}

}

Command

pyg2p -l DEBUG -c /execution_templates/cosmo_t24.json -i /dataset/cosmo/2012111912_pf10_t2.grb -o ./cosmo -m 10

ext parameter ext value will affect numbering of output maps:

[2013-07-12 00:06:18,545] :./cosmo/T24a0000.001 written!

[2013-07-12 00:06:18,811] :./cosmo/T24a0000.005 written!

[2013-07-12 00:06:19,079] :./cosmo/T24a0000.009 written!

[2013-07-12 00:06:19,349] :./cosmo/T24a0000.013 written!

[2013-07-12 00:06:19,620] :./cosmo/T24a0000.017 written!This is needed because we performed 24 hours average over 6 hourly steps.

The paragraph below explains how to set start, end, and aggregation steps in order to compute the average values.

Start step = start-of-first-day + aggregation time End step = end-of-the-last-day

Example 1: grib file containig instantanueous temperature data every 6 hours for 3 days (steps 0,6,12,18,...,72). The user wants to compute daily average values. Aggregation step =24 Start step = 24 (that is 0+24) End step = 72 Daily average for DAY 1 is the result of the average of the following steps: 6,12,28,24. Each step has weight=6, the daily average is then computed as Total/24. Daily average for DAY 2 is the result of the average of the following steps: 30,36,42,48. Each step has weight=6, the daily average is then computed as Total/24. Daily average for DAY 3 is the result of the average of the following steps: 54,60,66,72. Each step has weight=6, the daily average is then computed as Total/24.

Example2: grib file containig instantanueous temperature data every 6 hours for 1 days (steps 54,60,66,72). The user wants to compute daily average values. Aggregation step =24 Start step= 72 (that is 48+24) End step = 72 (in this case, end step and start step are both 72) Tthe result is the average of the steps 54,60,66,72

In order to better understand the above explained settings, the reader is invited to look at the detailed explanation below. The algorithm to compute the average values makes use of two nested loops: - EXTERNAL LOOP: iter_start: start-aggregation+1 iter_stop: end-aggregation+2 step_external: aggregation - INNER LOOP: iter_from: iter_start iter_to: iter_start+aggregation step_inner: 1

The inner loop looks for the steps included in the input grib. It looks for steps with increment 1 (step_inner=1). If a step is not found in the input grib, the code uses (repeats) the values of the closest next step.

Exmaple: grib file containig instantanueous temperature data every 6 hours for 3 days (steps 0,6,12,18,...,72). The user wants to compute daily average values.

- EXTERNAL LOOP1:

iter_start: 24-24+1 =1

iter_stop: 72-24+2=50

step_external:24

- INNER LOOP1:

iter_from: 1

iter_to: 1+24=25

The code looks for the steps from 1 to 24, with increments of 1

STEPS 1,2,3,4,5 are not found in the grib input file. The code uses the values of step 6.

STEP 6 is given by the grib input file.

STEPS 7,8,9,10,11 are not found in the grib input file. The code uses the values of step 12.

STEP 12 is given by the grib input file.

STEPS 13,14,15,16,17 are not found in the grib input file. The code uses the values of step 18.

STEP 18 is given by the grib input file.

....

AVERAGE= total/24

- EXTERNAL LOOP2:

iter_start: 25 (iter_start LOOP1 + step_external)

iter_stop: 50

step_external:24

- INNER LOOP2:

iter_from: 25

iter:to: 49

The code looks for the steps from 25 to 48, with increments of 1

- EXTERNAL LOOP3:

iter_start: 49 (iter_start LOOP2 + step_external)

iter_stop: 50

step_external:24

- INNER LOOP3:

iter_from: 49

iter_to: 73

For precipitation values, accumulation over 6 or 24 hours is often performed. Here's an example of configuration and execution output in DEBUG mode.

dwd_r06.json

{"Execution": {

"@name": "dwd_rain_gsp",

"Aggregation": {

"@step": 6,

"@type": "accumulation"

},

"OutMaps": {

"@cloneMap": "/dataset/maps/europe/dem.map",

"@fmap": 1,

"@namePrefix": "pR06",

"@unitTime": 24,

"Interpolation": {

"@latMap": "/dataset/maps/europe/lat.map",

"@lonMap": "/dataset/maps/europe/lon.map",

"@mode": "nearest"

}

},

"Parameter": {

"@shortName": "RAIN_GSP",

"@tend": 18,

"@tstart": 12

}

}

}Command

pyg2p -l DEBUG -c /execution_templates/dwd_r06.json -i /dataset/dwd/2012111912_pf10_tp.grb -o ./cosmo -m 10

Output

[2013-07-11 23:33:19,646] : Opening the GRIBReader for

/dataset/dwd/grib/dwd_grib1_ispra_LME_2012111900

[2013-07-11 23:33:19,859] : Grib input step 1 [type of step: accum]

[2013-07-11 23:33:19,859] : Gribs from 0 to 78

...

...

[2013-07-11 23:33:20,299] : ******** **** MANIPULATION **** *************

[2013-07-11 23:33:20,299] : Accumulation at resolution: 657

[2013-07-11 23:33:20,300] : out[s:6 e:12 res:657 step-lenght:6] = grib:12 - grib:6 *

(24/6))

[2013-07-11 23:33:20,316] : out[s:12 e:18 res:657 step-lenght:6] = grib:18 - grib:12 *

(24/6))Note: If you want to perform accumulation from Ts to Te with an aggregation step

Ta, and Ts-Ta=0 (e.g. Ts=6h, Te=48h, Ta=6h), the program will select the first

message at step 0 if present in the GRIB file, while you would use a zero values

message instead.

To use a zero values array, set the attribute forceZeroArray to ”true” in the

Aggregation configuration element.

For some DWD5 and COSMO6 accumulated precipitation files, the first zero message is

an instant precipitation and the decision at EFAS was to use a zero message, as it

happens for UKMO extractions, where input GRIB files don't have a first zero step

message.

grib_get -p units,name,stepRange,shortName,stepType 2012111912_pf10_tp.grb

kg m**-2 Total Precipitation 0 tp instant

kg m**-2 Total Precipitation 0-6 tp accum

kg m**-2 Total Precipitation 0-12 tp accum

kg m**-2 Total Precipitation 0-18 tp accum

...

...

kg m**-2 Total Precipitation 0-48 tp accumValues from grib files can be corrected with respect to their altitude coordinate (Lapse rate formulas). Formulas will use also a geopotential value (to read from a GRIB file, see later in this chapter for configuration). Correction has to be configured in the Parameter element, with three mandatory attributes.

- correctionFormula (the formula used for correction, with input variables parameter value (p), gem, and dem value.

- gem (the formula to obtain gem value from geopotential z value)

- demMap (path to the DEM PCRaster map)

Note: formulas must be written in python notation.

Tested configurations are only for temperature and are specified as follows:

Temperature correction

{

"Parameter": {

"@applyConversion": "k2c",

"@correctionFormula": "p+gem-dem*0.0065",

"@demMap": "/dataset/maps/europe/dem.map",

"@gem": "(z/9.81)*0.0065",

"@shortName": "2t"}

}A more complicated correction formula:

{

"Parameter": {

"@applyConversion": "k2c",

"@correctionFormula": "p/gem*(10**((-0.159)*dem/1000))",

"@demMap": "/dataset/maps/europe/dem.map",

"@gem": "(10**((-0.159)*(z/9.81)/1000))",

"@shortName": "2t"}

}z is the geopotential value as read from the grib file gem is the value resulting from the formula specified in gem attribute I.e.: (gem="(10**((-0.159)*(z/9.81)/1000)))" dem is the dem value as read from the PCRaster map

Be aware that if your dem map has directions values, those will be replicated in the final map.

The application will try to find a geopotential message in input GRIB file. If a geopotential message is not found, pyg2p will select a geopotential file from user or global data paths, by selecting filename from configuration according the geodetic attributes of GRIB message. If it doesn't find any suitable grib file, application will exit with an error message.

Geodetic attributes compose the key id in the JSON configuration (note the $ delimiter):

longitudeOfFirstGridPointInDegrees$longitudeOfLastGridPointInDegrees$Ni$Nj$numberOfValues$gridType

If you want to add another geopotential file to the configuration, just execute the command:

pyg2p -g /path/to/geopotential/grib/file

The application will copy the geopotential GRIB file into GEOPOTENTIALS folder (under user home directory)

and will also add the proper JSON configuration to geopotentials.json file.

Values from GRIB files can be converted before to write final output maps. Conversions are configured in the parameters.json file for each parameter (ie. shortName).

The right conversion formula will be selected using the id specified in the applyConversion attribute, and the shortName attribute of the parameter that is going to be extracted and converted.

Refer to Parameter configuration paragraph for details.

Console logger level is INFO by default and can be optionally set by using -l (or –loggerLevel) input argument.

Possible logger level values are ERROR, WARN, INFO, DEBUG, in increasing order of verbosity .

From version 1.3, pyg2p comes with a simple API to import and use from other python programs (e.g. pyEfas). The pyg2p API is intended to mimic the pyg2p.py script execution from command line so it provides a Command class with methods to set input parameters and a run_command(cmd) module level function to execute pyg2p.

- Create a pyg2p command:

from pyg2p.main import api

command = api.command()- Setup execution parameters using a chain of methods (or single calls):

command.with_cmdpath('a.json')

command.with_inputfile('0.grb')

command.with_log_level('ERROR').with_out_format('netcdf')

command.with_outdir('/dataout/').with_tstart('6').with_tend('24').with_eps('10').with_fmap('1')

command.with_ext('4')

print(str(command))

'pyg2p.py -c a.json -e 240 -f 1 -i 0.grb -l ERROR -m 10 -o /dataout/test -s 6 -x 4 -F netcdf'You can also create a command object using the input arguments as you would do when execute pyg2p from command line:

args_string = '-l ERROR -c /pyg2p_git/execution_templates_devel/eue_t24.json -i /dataset/test_2013330702/EpsN320-2013063000.grb -o /dataset/testdiffmaps/eueT24 -m 10'

command2 = api.command(args_string)Use the run_command function from pyg2p module. This will delegate the main method, without shell execution.

ret = api.run_command(command)The function returns the same value pyg2p returns if executed from shell (0 for correct executions, included those for which messages are not found).

You can add a geopotential file to configuration from pyg2p API as well, using Configuration classes:

from pyg2p.main.config import UserConfiguration, GeopotentialsConfiguration

user=UserConfiguration()

geopotentials=GeopotentialsConfiguration(user)

geopotentials.add('path/to/geopotential.grib')The result will be the same as executing pyg2p -g path/to/geopotential.grib.

Since version 3.1, pyg2p has a more usable api, useful for programmatically convert values

Here an example of usage:

from pyg2p.main.api import Pyg2pApi, ApiContext

config = {

'loggerLevel': 'ERROR',

'inputFile': '/data/gribs/cosmo.grib',

'fmap': 1,

'start': 6,

'end': 132,

'perturbationNumber': 2,

'intertableDir': '/data/myintertables/',

'geopotentialDir': '/data/mygeopotentials',

'OutMaps': {

'unitTime': 24,

'cloneMap': '/data/mymaps/dem.map',

'Interpolation': {

"latMap": '/data/mymaps/lat.map',

"lonMap": '/data/mymaps/lon.map',

"mode": "nearest"

}

},

'Aggregation': {

'step': 6,

'type': 'average'

},

'Parameter': {

'shortName': 'alhfl_s',

'applyConversion': 'tommd',

},

}

ctx = ApiContext(config)

api = Pyg2pApi(ctx)

values = api.execute()The values variable is an ordered dictionary with keys of class pyg2p.Step, which is simply a tuple of (start, end, resolution_along_meridian, step, level))

Each value of the dictionary is a numpy array representing a map of the converted variable for that step.

For example, the first value would correspond to a PCRaster map file 0000.001 generated and written by pyg2p when executed normally via CLI.

Check also this code we used in tests to validate API execution against CLI execution with same parameters:

import numpy as np

from pyg2p.main.readers import PCRasterReader

for i, (step, val) in enumerate(values.items(), start=1):

i = str(i).zfill(3)

reference = PCRasterReader(f'/data/reference/cosmo/E06a0000.{i}')).values

diff = np.abs(reference - val)

assert np.allclose(diff, np.zeros(diff.shape), rtol=1.e-2, atol=1.e-3, equal_nan=True)This paragraph will explain typical execution json configurations.

pyg2p -c example1.json -i /dataset/cosmo/2012111912_pf2_t2.grb -o ./out_1example1.json

{

"Execution": {

"@name": "eue_t24",

"Aggregation": {

"@step": 24,

"@type": "average"

},

"OutMaps": {

"@cloneMap": "{EUROPE_MAPS}/lat.map",

"@ext": 1,

"@fmap": 1,

"@namePrefix": "pT24",

"@unitTime": 24,

"Interpolation": {

"@latMap": "{EUROPE_MAPS}/lat.map",

"@lonMap": "{EUROPE_MAPS}/long.map",

"@mode": "grib_nearest"

}

},

"Parameter": {

"@applyConversion": "k2c",

"@correctionFormula": "p+gem-dem*0.0065",

"@demMap": "{DEM_MAP}",

"@gem": "(z/9.81)*0.0065",

"@shortName": "2t"

}

}

}This configuration, will select the 2t parameter from time step 0 to 12, out of a cosmo t2 file. Values will be corrected using the dem map and a geopotential file as in geopotentials.json configuration.

Maps will be written under ./out_1 folder (the folder will be created if not existing yet). The clone map is set as same as dem.map.

Note that paths to maps uses variables

EUROPE_MAPSandDEM_MAP. You will set these variables in myconf.conf file under ~/.pyg2p/ folder.

The original values will be converted using the conversion “k2c”. This conversion must be configured in the parameters.json file for the variable which is being extracted (2t). See Parameter property configuration at Parameter. The interpolation method is grib_nearest. Latitudes and longitudes values will be used only if the interpolation lookup table (intertable) hasn't be created yet but it's mandatory to set latMap and lonMap because the application uses their metadata raster attributes to select the right intertable. The table filename to be read and used for interpolation is automatically found by the application, so there is no need to specify it in configuration. However, lat and lon maps are mandatory configuration attributes.

pyg2p -c example1.json -i 20130325_en0to10.grib -I 20130325_en11to15.grib -o ./out_2Performs accumulation 24 hours out of sro values of two input grib files having different vertical resolutions. You can also feed pyg2p with a single multiresolution file.

pyg2p -c example1.json -i 20130325_sro_0to15.grib o ./out_2 -m 0{

"Execution": {

"@name": "multi_sro",

"Aggregation": {

"@step": 24,

"@type": "accumulation"

},

"OutMaps": {

"@cloneMap": "/dataset/maps/global/dem.map",

"@fmap": 1,

"@namePrefix": "psro",

"@unitTime": 24,

"Interpolation": {

"@latMap": "/dataset/maps/global/lat.map",

"@lonMap": "/dataset/maps/global/lon.map",

"@mode": "grib_nearest"

}

},

"Parameter": {

"@applyConversion": "m2mm",

"@shortName": "sro",

"@tend": 360,

"@tstart": 0

}

}

}This execution configuration will extract global overlapping messages sro (perturbation number 0) from two files at different resolution. Values will be converted using “tomm” conversion and maps (interpolation used here is grib_nearest) will be written under ./out_6 folder.

./pyg2p.py -i /dataset/eue/EpsN320-2012112000.grb -o ./out_eue -c execution_file_examples/execution_9.json{

"Execution": {

"@name": "eue_tp",

"Aggregation": {

"@step": 24,

"@type": "accumulation"

},

"OutMaps": {

"@cloneMap": "/dataset/maps/europe5km/lat.map",

"@fmap": 1,

"@namePrefix": "pR24",

"@unitTime": 24,

"Interpolation": {

"@latMap": "/dataset/maps/europe5km/lat.map",

"@lonMap": "/dataset/maps/europe5km/long.map",

"@mode": "grib_nearest"

}

},

"Parameter": {

"@applyConversion": "tomm",

"@shortName": "tp"

}

}

}Format: NETCDF4_CLASSIC.

Convention: CF-1.6

Dimensions:

xc: Number of rows of area/clone map

yc: Number of cols of area/clone map

time: Unlimited dimension for time steps

Variables:

lon: 2D array with shape (yc, xc)

lat: 2D array with shape (yc, xc)

time_nc: 1D array of values representing hours since dataDate of first grib message (endStep)

values_nc: a 3D array of dimensions (time, yc, xc), with coordinates set to 'lon, lat'.