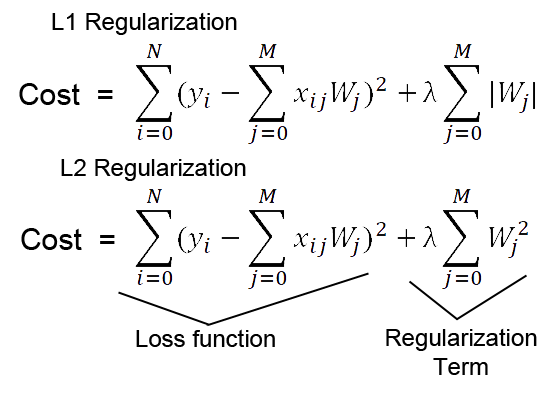

Regularization is a technique for tuning a model by adding a penalty term to the error function. The penalty discourages an excessively fluctuating function by keeping the coefficients from taking extreme values.

1. L1 & L2 Regularization:

- L1 regularization adds a penalty proportional to the sum of the absolute values of the coefficients, λ Σᵢ |wᵢ|, where λ controls the strength of the penalty.

- L2 regularization adds a penalty proportional to the sum of the squared coefficients, λ Σᵢ wᵢ².
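
As a minimal sketch (Python with NumPy is an assumption; the document itself ships no code), both penalties can be added to the sum of squared errors of a linear model. The helper names `l1_penalty`, `l2_penalty`, `penalized_sse` and `ridge_fit` are hypothetical, and `lam` plays the role of λ. `ridge_fit` uses the closed-form solution of L2-regularized least squares to show how the penalty shrinks the coefficients.

```python
import numpy as np


def l1_penalty(w: np.ndarray, lam: float) -> float:
    """L1 penalty: lam times the sum of absolute coefficient values."""
    return lam * float(np.sum(np.abs(w)))


def l2_penalty(w: np.ndarray, lam: float) -> float:
    """L2 penalty: lam times the sum of squared coefficients."""
    return lam * float(np.sum(w ** 2))


def penalized_sse(X, y, w, lam, kind="l2"):
    """Sum of squared errors of a linear model plus an L1 or L2 penalty on w."""
    residual = X @ w - y
    sse = float(np.sum(residual ** 2))
    if kind == "l1":
        return sse + l1_penalty(w, lam)
    if kind == "l2":
        return sse + l2_penalty(w, lam)
    raise ValueError("kind must be 'l1' or 'l2'")


def ridge_fit(X, y, lam):
    """Closed-form minimiser of ||Xw - y||^2 + lam * ||w||^2."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


# Made-up data for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 1.0])
y = X @ true_w + 0.1 * rng.normal(size=50)

w_ols = ridge_fit(X, y, lam=0.0)     # lam = 0: ordinary least squares
w_ridge = ridge_fit(X, y, lam=10.0)  # lam > 0: L2-regularized
print(np.linalg.norm(w_ols), np.linalg.norm(w_ridge))  # the ridge norm is smaller
```

Increasing `lam` shrinks the coefficient vector toward zero. L1 has no general closed form like this, so it is usually minimized iteratively (for example with coordinate descent); its kink at zero is what lets it set individual coefficients exactly to zero.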

2. Dropout

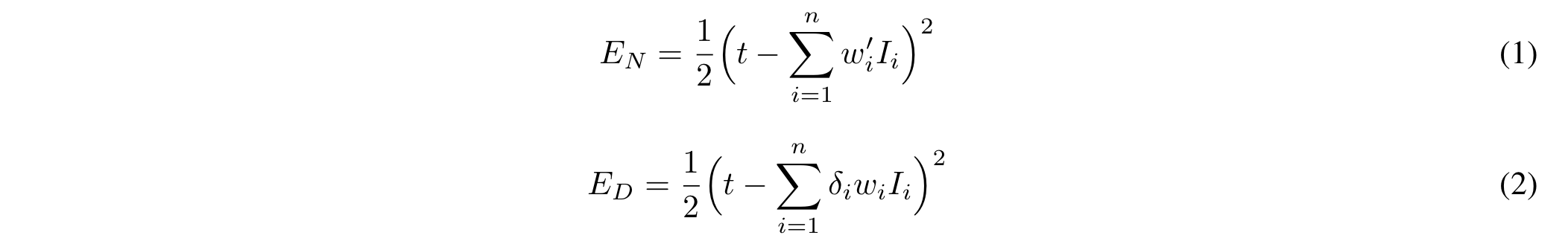

- Dropout is a regularization technique patented by Google for reducing overfitting in neural networks by preventing complex co-adaptations on training data.

- Eq. 1 shows the loss for a regular network and Eq. 2 the loss for a dropout network. In Eq. 2, each unit is gated by a dropout variable 𝛿, where 𝛿 ~ Bernoulli(p): 𝛿 equals 1 (the unit is kept) with probability p and 0 (the unit is dropped) otherwise. The dropout rate is therefore 1 − p.

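- Relationship between Dropout and regularization: for a linear unit, the expected dropout loss equals the loss of the averaged network plus a weight penalty that scales with p(1 − p), so a dropout rate of 0.5 leads to the maximum regularization. Dropout also generalizes to Gaussian Dropout.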

- Code: TensorFlow (Keras) | PyTorch

- Reference: https://papers.nips.cc/paper/4878-understanding-dropout.pdf
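
The Bernoulli mask 𝛿 ~ Bernoulli(p) can be sketched in a few lines of NumPy. This is an illustration under assumptions, not the paper's code: the function name `dropout` is hypothetical, and it uses the "inverted dropout" convention (kept units are scaled by 1/p during training so nothing changes at test time), which is how `tf.keras.layers.Dropout` and `torch.nn.Dropout` behave. Those layers take the drop probability (1 − p) as their argument, whereas `p_keep` here is the keep probability p.

```python
import numpy as np


def dropout(x: np.ndarray, p_keep: float, rng: np.random.Generator, training: bool = True) -> np.ndarray:
    """Inverted dropout: keep each unit with probability p_keep, scale kept units by 1/p_keep."""
    if not 0.0 < p_keep <= 1.0:
        raise ValueError("p_keep must be in (0, 1]")
    if not training:
        return x  # at test time the full network is used unchanged
    delta = rng.binomial(1, p_keep, size=x.shape)  # delta ~ Bernoulli(p_keep), one draw per unit
    return x * delta / p_keep


rng = np.random.default_rng(42)
activations = np.ones((1000, 100))
out = dropout(activations, p_keep=0.8, rng=rng)
print((out == 0).mean())  # fraction of dropped units, close to 0.2
print(out.mean())         # close to 1.0: the 1/p_keep scaling preserves the expected activation
```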
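
The link between dropout and a p(1 − p) penalty can be checked numerically for a single linear unit. Assuming Eq. 2 takes the form used in the referenced paper, E_D = ½(t − Σᵢ 𝛿ᵢ wᵢ Iᵢ)² with independent 𝛿ᵢ ~ Bernoulli(p), the sketch below computes the exact expectation of E_D by enumerating every mask and compares it with the closed form ½(t − Σᵢ p wᵢ Iᵢ)² + ½ Σᵢ p(1 − p) wᵢ² Iᵢ², i.e. the loss of the averaged network plus an L2-style penalty. The values of `t`, `w` and `inputs` (standing for Iᵢ) are made up, and the function names are hypothetical.

```python
import itertools

import numpy as np


def dropout_loss(t, w, inputs, delta):
    """E_D = 1/2 * (t - sum_i delta_i * w_i * I_i)^2 for one linear unit and one mask."""
    return 0.5 * (t - np.sum(delta * w * inputs)) ** 2


def expected_dropout_loss(t, w, inputs, p):
    """Exact E[E_D] over all 2^n masks with independent delta_i ~ Bernoulli(p)."""
    n = len(w)
    total = 0.0
    for bits in itertools.product([0, 1], repeat=n):
        delta = np.array(bits)
        kept = int(delta.sum())
        prob = p ** kept * (1 - p) ** (n - kept)  # probability of this particular mask
        total += prob * dropout_loss(t, w, inputs, delta)
    return total


def ensemble_plus_penalty(t, w, inputs, p):
    """Loss of the averaged network plus a penalty weighted by Var(delta_i) = p(1 - p)."""
    ensemble = 0.5 * (t - np.sum(p * w * inputs)) ** 2
    penalty = 0.5 * np.sum(p * (1 - p) * w ** 2 * inputs ** 2)
    return ensemble + penalty


# Made-up target, weights and inputs for illustration.
t = 1.0
w = np.array([0.5, -1.0, 2.0, 0.3])
inputs = np.array([1.0, 0.5, -0.2, 2.0])

for p in (0.2, 0.5, 0.8):
    print(p, expected_dropout_loss(t, w, inputs, p), ensemble_plus_penalty(t, w, inputs, p))

ps = np.linspace(0.0, 1.0, 101)
best_p = ps[np.argmax(ps * (1 - ps))]
print(best_p)  # the penalty coefficient p(1 - p) peaks at p = 0.5
```

Enumerating 2ⁿ masks only scales to a handful of inputs; it is used here so the comparison is exact rather than sampled.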

Dropout Variants:

- Alpha Dropout: TensorFlow | PyTorch | Paper

- Gaussian Dropout: TensorFlow | PyTorch | Paper

- Spatial Dropout: TensorFlow | PyTorch | Paper

- State Dropout: TensorFlow | PyTorch | Paper

- Recurrent Dropout: TensorFlow | PyTorch | Paper
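
Three of these variants can be sketched in NumPy to show how they differ from standard dropout. These are illustrations under stated assumptions, not the framework implementations, and the function names are hypothetical. Gaussian dropout multiplies each unit by noise with mean 1 and standard deviation √(rate / (1 − rate)), the value documented for Keras's `GaussianDropout`. Spatial dropout assumes channels-last `(batch, height, width, channels)` input and drops whole channels. Alpha dropout uses the SELU constants and the affine correction from the self-normalizing networks paper (Klambauer et al., 2017). State and recurrent dropout are not sketched.

```python
import numpy as np

# SELU constants; dropped units in alpha dropout are set to -scale * alpha.
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805
ALPHA_PRIME = -SELU_SCALE * SELU_ALPHA


def gaussian_dropout(x, rate, rng):
    """Multiply every unit by noise drawn from N(1, rate / (1 - rate)); rate in [0, 1)."""
    std = np.sqrt(rate / (1.0 - rate))
    return x * rng.normal(1.0, std, size=x.shape)


def spatial_dropout_2d(x, rate, rng):
    """Drop entire channels of a (batch, height, width, channels) array."""
    batch, _, _, channels = x.shape
    keep = rng.binomial(1, 1.0 - rate, size=(batch, 1, 1, channels))
    # The mask broadcasts over height and width, so a channel is kept or dropped as a whole.
    return x * keep / (1.0 - rate)


def alpha_dropout(x, rate, rng):
    """Replace dropped units with ALPHA_PRIME, then apply a * x + b so that an
    input with mean 0 and variance 1 keeps mean 0 and variance 1."""
    q = 1.0 - rate  # keep probability
    keep = rng.binomial(1, q, size=x.shape)
    x = np.where(keep == 1, x, ALPHA_PRIME)
    a = (q + ALPHA_PRIME ** 2 * q * (1.0 - q)) ** -0.5
    b = -a * (1.0 - q) * ALPHA_PRIME
    return a * x + b


rng = np.random.default_rng(0)

noisy = gaussian_dropout(np.ones(200_000), rate=0.3, rng=rng)
print(noisy.mean())  # close to 1: the noise has mean 1

maps = spatial_dropout_2d(np.ones((8, 4, 4, 16)), rate=0.5, rng=rng)
print(maps[0, :, :, 0])  # a 4x4 block that is all 0.0 or all 2.0

z = rng.normal(size=200_000)  # input with mean 0 and variance 1
selu_out = alpha_dropout(z, rate=0.1, rng=rng)
print(selu_out.mean(), selu_out.var())  # close to 0 and 1
```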

This Markdown file is continuously updated.