diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

index 1a8d18c..66427cd 100644

--- a/.github/workflows/ci.yml

+++ b/.github/workflows/ci.yml

@@ -48,9 +48,7 @@ jobs:

run: |

python -m unittest

- - name: Test scripts

- env:

- ROBOFLOW_TOKEN: ${{ secrets.ROBOFLOW_API_KEY }}

+ - name: Test yolov5 train

shell: bash # for Windows compatibility

run: |

pip install -e .

@@ -66,34 +64,57 @@ jobs:

python yolov5/train.py --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --epochs 1 --device cpu

yolov5 train --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --epochs 1 --device cpu --freeze 10

yolov5 train --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --epochs 1 --device cpu --evolve 2

- # detect

+

+ - name: Test yolov5 detect

+ shell: bash # for Windows compatibility

+ run: |

python yolov5/detect.py --weights yolov5/weights/yolov5n.pt --device cpu

yolov5 detect --weights yolov5/weights/yolov5n.pt --device cpu

python yolov5/detect.py --weights runs/train/exp/weights/last.pt --device cpu

yolov5 detect --weights runs/train/exp/weights/last.pt --device cpu

- # val

+

+ - name: Test yolov5 val

+ shell: bash # for Windows compatibility

+ run: |

python yolov5/val.py --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --device cpu

yolov5 val --data yolov5/data/coco128.yaml --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --device cpu

python yolov5/val.py --img 128 --batch 16 --weights runs/train/exp/weights/last.pt --device cpu

yolov5 val --data yolov5/data/coco128.yaml --img 128 --batch 16 --weights runs/train/exp/weights/last.pt --device cpu

- # export

+

+ - name: Test yolov5 export

+ shell: bash # for Windows compatibility

+ run: |

pip install onnx onnx-simplifier tensorflowjs

python yolov5/export.py --weights yolov5/weights/yolov5n.pt --device cpu --include torchscript,onnx,tflite

yolov5 export --weights yolov5/weights/yolov5n.pt --device cpu --simplify --include torchscript,onnx,saved_model,pb,tfjs

- # benckmarks

+

+ - name: Test yolov5 benchmarks

+ shell: bash # for Windows compatibility

+ run: |

yolov5 benchmarks --weights yolov5n.pt --img 128 --pt-only --device cpu

- # classify

+

+ - name: Test yolov5 classify

+ shell: bash # for Windows compatibility

+ run: |

yolov5 classify train --img 128 --data mnist2560 --model yolov5n-cls.pt --epochs 1 --device cpu

yolov5 classify train --img 128 --data mnist2560 --model fcakyon/yolov5n-cls-v7.0 --epochs 1 --device cpu

yolov5 classify val --img 128 --data datasets/mnist2560 --weights yolov5n-cls.pt --device cpu

yolov5 classify predict --img 128 --weights yolov5n-cls.pt --device cpu

- # segment

+

+ - name: Test yolov5 segment

+ shell: bash # for Windows compatibility

+ run: |

yolov5 segment train --img 128 --weights yolov5n-seg.pt --epochs 1 --device cpu

yolov5 segment train --img 128 --weights fcakyon/yolov5n-seg-v7.0 --epochs 1 --device cpu

# yolov5 segment val --img 128 --weights yolov5n-seg.pt --device cpu

yolov5 segment predict --img 128 --weights yolov5n-seg.pt --device cpu

- # roboflow

- yolov5 train --data https://universe.roboflow.com/jacob-solawetz/aerial-maritime/dataset/10 --weights yolov5/weights/yolov5n.pt --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

- yolov5 classify train --data https://universe.roboflow.com/bagas-etcfr/flowerdata1/dataset/1 --img 128 --model yolov5n-cls.pt --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

- yolov5 segment train --data https://universe.roboflow.com/scaffolding/scaffolding-distance/dataset/2 --img 128 --weights yolov5n-seg.pt --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

+

+ - name: Test roboflow train

+ shell: bash # for Windows compatibility

+ env:

+ ROBOFLOW_TOKEN: ${{ secrets.ROBOFLOW_API_KEY }}

+ run: |

+ yolov5 train --data https://universe.roboflow.com/gdit/aerial-airport/dataset/1 --weights yolov5/weights/yolov5n.pt --img 128 --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

+ # yolov5 classify train --data https://universe.roboflow.com/carlos-gabriel-da-silva-machado-siwvs/turtles-i1tlr/dataset/1 --img 128 --model yolov5n-cls.pt --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

+ yolov5 segment train --data https://universe.roboflow.com/aymane-outani-auooc/cable-fzjik/dataset/2 --img 128 --weights yolov5n-seg.pt --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

diff --git a/.github/workflows/package_testing.yml b/.github/workflows/package_testing.yml

index 3b0e345..bd6261a 100644

--- a/.github/workflows/package_testing.yml

+++ b/.github/workflows/package_testing.yml

@@ -46,9 +46,9 @@ jobs:

run: |

python -m unittest

- - name: Test scripts

+ - name: Test yolov5 train

+ shell: bash # for Windows compatibility

run: |

- pip install -e .

# donwload coco formatted testing data

python tests/download_test_coco_dataset.py

# train (dl base model from hf hub)

@@ -61,29 +61,49 @@ jobs:

python yolov5/train.py --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --epochs 1 --device cpu

yolov5 train --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --epochs 1 --device cpu --freeze 10

yolov5 train --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --epochs 1 --device cpu --evolve 2

- # detect

+ - name: Test yolov5 detect

+ shell: bash # for Windows compatibility

+ run: |

python yolov5/detect.py --weights yolov5/weights/yolov5n.pt --device cpu

yolov5 detect --weights yolov5/weights/yolov5n.pt --device cpu

python yolov5/detect.py --weights runs/train/exp/weights/last.pt --device cpu

yolov5 detect --weights runs/train/exp/weights/last.pt --device cpu

- # val

+ - name: Test yolov5 val

+ shell: bash # for Windows compatibility

+ run: |

python yolov5/val.py --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --device cpu

yolov5 val --data yolov5/data/coco128.yaml --img 128 --batch 16 --weights yolov5/weights/yolov5n.pt --device cpu

python yolov5/val.py --img 128 --batch 16 --weights runs/train/exp/weights/last.pt --device cpu

yolov5 val --data yolov5/data/coco128.yaml --img 128 --batch 16 --weights runs/train/exp/weights/last.pt --device cpu

- # export

+ - name: Test yolov5 export

+ shell: bash # for Windows compatibility

+ run: |

pip install onnx onnx-simplifier tensorflowjs

python yolov5/export.py --weights yolov5/weights/yolov5n.pt --device cpu --include torchscript,onnx,tflite

yolov5 export --weights yolov5/weights/yolov5n.pt --device cpu --simplify --include torchscript,onnx,saved_model,pb,tfjs

- # benckmarks

+ - name: Test yolov5 benchmarks

+ shell: bash # for Windows compatibility

+ run: |

yolov5 benchmarks --weights yolov5n.pt --img 128 --pt-only --device cpu

- # classify

+ - name: Test yolov5 classify

+ shell: bash # for Windows compatibility

+ run: |

yolov5 classify train --img 128 --data mnist2560 --model yolov5n-cls.pt --epochs 1 --device cpu

yolov5 classify train --img 128 --data mnist2560 --model fcakyon/yolov5n-cls-v7.0 --epochs 1 --device cpu

yolov5 classify val --img 128 --data datasets/mnist2560 --weights yolov5n-cls.pt --device cpu

yolov5 classify predict --img 128 --weights yolov5n-cls.pt --device cpu

- # segment

+ - name: Test yolov5 segment

+ shell: bash # for Windows compatibility

+ run: |

yolov5 segment train --img 128 --weights yolov5n-seg.pt --epochs 1 --device cpu

yolov5 segment train --img 128 --weights fcakyon/yolov5n-seg-v7.0 --epochs 1 --device cpu

# yolov5 segment val --img 128 --weights yolov5n-seg.pt --device cpu

yolov5 segment predict --img 128 --weights yolov5n-seg.pt --device cpu

+ - name: Test roboflow train

+ shell: bash # for Windows compatibility

+ env:

+ ROBOFLOW_TOKEN: ${{ secrets.ROBOFLOW_API_KEY }}

+ run: |

+ yolov5 train --data https://universe.roboflow.com/gdit/aerial-airport/dataset/1 --weights yolov5/weights/yolov5n.pt --img 128 --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

+ # yolov5 classify train --data https://universe.roboflow.com/carlos-gabriel-da-silva-machado-siwvs/turtles-i1tlr/dataset/1 --img 128 --model yolov5n-cls.pt --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

+ yolov5 segment train --data https://universe.roboflow.com/aymane-outani-auooc/cable-fzjik/dataset/2 --img 128 --weights yolov5n-seg.pt --epochs 1 --device cpu --roboflow_token ${{ env.ROBOFLOW_TOKEN }}

diff --git a/README.md b/README.md

index 866f615..e9b4070 100644

--- a/README.md

+++ b/README.md

@@ -25,7 +25,7 @@ You can finally install YOLOv5 o

-This yolov5 package contains everything from ultralytics/yolov5 at this commit plus:

+This yolov5 package contains everything from ultralytics/yolov5 at this commit plus:

1. Easy installation via pip: pip install yolov5

diff --git a/requirements.txt b/requirements.txt

index 4af966e..7d32a73 100644

--- a/requirements.txt

+++ b/requirements.txt

@@ -1,4 +1,4 @@

-# YOLOv5 🚀 requirements

+# YOLOv5 requirements

# Usage: pip install -r requirements.txt

# Base ------------------------------------------------------------------------

@@ -29,7 +29,7 @@ seaborn>=0.11.0

# Export ----------------------------------------------------------------------

# coremltools>=6.0 # CoreML export

-# onnx>=1.9.0 # ONNX export

+# onnx>=1.12.0 # ONNX export

# onnx-simplifier>=0.4.1 # ONNX simplifier

# nvidia-pyindex # TensorRT export

# nvidia-tensorrt # TensorRT export

@@ -44,7 +44,7 @@ seaborn>=0.11.0

# Extras ----------------------------------------------------------------------

# mss # screenshots

# albumentations>=1.0.3

-# pycocotools>=2.0 # COCO mAP

+# pycocotools>=2.0.6 # COCO mAP

# roboflow>=0.2.27

# ultralytics # HUB https://hub.ultralytics.com

@@ -55,4 +55,4 @@ boto3>=1.19.1

# coco to yolov5 conversion

sahi>=0.11.10

# huggingface

-huggingface-hub>=0.11.1

+huggingface-hub>=0.12.0

diff --git a/yolov5/__init__.py b/yolov5/__init__.py

index ce6190f..ccf565d 100644

--- a/yolov5/__init__.py

+++ b/yolov5/__init__.py

@@ -1,4 +1,4 @@

from yolov5.helpers import YOLOv5

from yolov5.helpers import load_model as load

-__version__ = "7.0.7"

+__version__ = "7.0.8"

diff --git a/yolov5/classify/predict.py b/yolov5/classify/predict.py

index 8feafe6..dc9dc7c 100644

--- a/yolov5/classify/predict.py

+++ b/yolov5/classify/predict.py

@@ -213,7 +213,7 @@ def parse_opt():

parser.add_argument('--augment', action='store_true', help='augmented inference')

parser.add_argument('--visualize', action='store_true', help='visualize features')

parser.add_argument('--update', action='store_true', help='update all models')

- parser.add_argument('--project', default=ROOT / 'runs/predict-cls', help='save results to project/name')

+ parser.add_argument('--project', default='runs/predict-cls', help='save results to project/name')

parser.add_argument('--name', default='exp', help='save results to project/name')

parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')

parser.add_argument('--half', action='store_true', help='use FP16 half-precision inference')

@@ -227,7 +227,7 @@ def parse_opt():

def main():

opt = parse_opt()

- #check_requirements(exclude=('tensorboard', 'thop'))

+ check_requirements(exclude=('tensorboard', 'thop'))

run(**vars(opt))

diff --git a/yolov5/classify/train.py b/yolov5/classify/train.py

index bd2c6ad..c011c1c 100644

--- a/yolov5/classify/train.py

+++ b/yolov5/classify/train.py

@@ -6,7 +6,7 @@

$ python classify/train.py --model yolov5s-cls.pt --data imagenette160 --epochs 5 --img 224

Usage - Multi-GPU DDP training:

- $ python -m torch.distributed.run --nproc_per_node 4 --master_port 1 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3

+ $ python -m torch.distributed.run --nproc_per_node 4 --master_port 2022 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3

Datasets: --data mnist, fashion-mnist, cifar10, cifar100, imagenette, imagewoof, imagenet, or 'path/to/data'

YOLOv5-cls models: --model yolov5n-cls.pt, yolov5s-cls.pt, yolov5m-cls.pt, yolov5l-cls.pt, yolov5x-cls.pt

@@ -30,7 +30,7 @@

from torch.cuda import amp

from tqdm import tqdm

-from yolov5.utils.downloads import attempt_donwload_from_hub

+from yolov5.utils.downloads import attempt_download_from_hub

from yolov5.utils.roboflow import check_dataset_roboflow

FILE = Path(__file__).resolve()

@@ -43,18 +43,12 @@

from yolov5.models.experimental import attempt_load

from yolov5.models.yolo import ClassificationModel, DetectionModel

from yolov5.utils.dataloaders import create_classification_dataloader

-from yolov5.utils.general import (DATASETS_DIR, LOGGER, TQDM_BAR_FORMAT,

- WorkingDirectory, check_git_info,

- check_git_status, check_requirements,

- colorstr, download, increment_path,

- init_seeds, print_args, yaml_save)

+from yolov5.utils.general import (DATASETS_DIR, LOGGER, TQDM_BAR_FORMAT, WorkingDirectory, check_git_info, check_git_status,

+ check_requirements, colorstr, download, increment_path, init_seeds, print_args, yaml_save)

from yolov5.utils.loggers import GenericLogger

from yolov5.utils.plots import imshow_cls

-from yolov5.utils.torch_utils import (ModelEMA, model_info,

- reshape_classifier_output, select_device,

- smart_DDP, smart_optimizer,

- smartCrossEntropyLoss,

- torch_distributed_zero_first)

+from yolov5.utils.torch_utils import (ModelEMA, model_info, reshape_classifier_output, select_device, smart_DDP,

+ smart_optimizer, smartCrossEntropyLoss, torch_distributed_zero_first)

LOCAL_RANK = int(os.getenv('LOCAL_RANK', -1)) # https://pytorch.org/docs/stable/elastic/run.html

RANK = int(os.getenv('RANK', -1))

@@ -116,7 +110,7 @@ def train(opt, device):

# Model

# try to download from hf hub

- result = attempt_donwload_from_hub(opt.model, hf_token=None)

+ result = attempt_download_from_hub(opt.model, hf_token=None)

if result is not None:

opt.model = result

with torch_distributed_zero_first(LOCAL_RANK), WorkingDirectory(ROOT):

@@ -266,7 +260,7 @@ def train(opt, device):

f"\nPredict: yolov5 classify predict --weights {best} --source im.jpg"

f"\nValidate: yolov5 classify val --weights {best} --data {data_dir}"

f"\nExport: yolov5 export --weights {best} --include onnx"

- f"\nPyPi: model = yolov5.load('custom.pt')"

+ f"\nPython: model = yolov5.load('{best}')"

f"\nVisualize: https://netron.app\n")

# Plot examples

diff --git a/yolov5/classify/val.py b/yolov5/classify/val.py

index b31309b..7a0a23e 100644

--- a/yolov5/classify/val.py

+++ b/yolov5/classify/val.py

@@ -43,7 +43,7 @@

@smart_inference_mode()

def run(

- data=ROOT / '../datasets/mnist', # dataset dir

+ data='../datasets/mnist', # dataset dir

weights='yolov5s-cls.pt', # model.pt path(s)

batch_size=None, # batch size

batch=None, # batch size

@@ -155,14 +155,14 @@ def run(

def parse_opt():

parser = argparse.ArgumentParser()

- parser.add_argument('--data', type=str, default=ROOT / '../datasets/mnist', help='dataset path')

- parser.add_argument('--weights', nargs='+', type=str, default=ROOT / 'yolov5s-cls.pt', help='model.pt path(s)')

+ parser.add_argument('--data', type=str, default='../datasets/mnist', help='dataset path')

+ parser.add_argument('--weights', nargs='+', type=str, default='yolov5s-cls.pt', help='model.pt path(s)')

parser.add_argument('--batch-size', type=int, default=128, help='batch size')

parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=224, help='inference size (pixels)')

parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

parser.add_argument('--workers', type=int, default=8, help='max dataloader workers (per RANK in DDP mode)')

parser.add_argument('--verbose', nargs='?', const=True, default=True, help='verbose output')

- parser.add_argument('--project', default=ROOT / 'runs/val-cls', help='save to project/name')

+ parser.add_argument('--project', default='runs/val-cls', help='save to project/name')

parser.add_argument('--name', default='exp', help='save to project/name')

parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')

parser.add_argument('--half', action='store_true', help='use FP16 half-precision inference')
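The classify `--data`, `--weights` and `--project` defaults above change from `ROOT / '...'` to bare relative strings. Here is a minimal sketch of the practical difference. It assumes `ROOT` points inside the installed package, and the `/usr/lib/python3/site-packages/yolov5` and `/home/user/project` paths are hypothetical. A `ROOT`-prefixed default is pinned to the package directory. A bare string is resolved against the current working directory when it is used.

```python
from pathlib import PurePosixPath

# Hypothetical install location; the real scripts derive ROOT from __file__.
ROOT = PurePosixPath("/usr/lib/python3/site-packages/yolov5")

old_default = ROOT / "runs/val-cls"          # fixed location inside the installed package
new_default = PurePosixPath("runs/val-cls")  # resolved against the working directory when used


def describe(p):
    """Say whether a path is fixed or depends on the current working directory."""
    return "absolute" if p.is_absolute() else "relative to cwd"


print(describe(old_default), "->", old_default)
# Joining with a (hypothetical) working directory shows where results would land.
print(describe(new_default), "->", PurePosixPath("/home/user/project") / new_default)
```

With the new defaults, results from a pip-installed package would land under the caller's `./runs/...` directory instead of inside `site-packages`.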

diff --git a/yolov5/data/hyps/hyp.no-augmentation.yaml b/yolov5/data/hyps/hyp.no-augmentation.yaml

new file mode 100644

index 0000000..8fbd5b2

--- /dev/null

+++ b/yolov5/data/hyps/hyp.no-augmentation.yaml

@@ -0,0 +1,35 @@

+# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

+# Hyperparameters when using the Albumentations framework

+# python train.py --hyp hyp.no-augmentation.yaml

+# See https://github.com/ultralytics/yolov5/pull/3882 for YOLOv5 + Albumentations Usage examples

+

+lr0: 0.01 # initial learning rate (SGD=1E-2, Adam=1E-3)

+lrf: 0.1 # final OneCycleLR learning rate (lr0 * lrf)

+momentum: 0.937 # SGD momentum/Adam beta1

+weight_decay: 0.0005 # optimizer weight decay 5e-4

+warmup_epochs: 3.0 # warmup epochs (fractions ok)

+warmup_momentum: 0.8 # warmup initial momentum

+warmup_bias_lr: 0.1 # warmup initial bias lr

+box: 0.05 # box loss gain

+cls: 0.3 # cls loss gain

+cls_pw: 1.0 # cls BCELoss positive_weight

+obj: 0.7 # obj loss gain (scale with pixels)

+obj_pw: 1.0 # obj BCELoss positive_weight

+iou_t: 0.20 # IoU training threshold

+anchor_t: 4.0 # anchor-multiple threshold

+# anchors: 3 # anchors per output layer (0 to ignore)

+# these parameters are all zero since we want to use the Albumentations framework

+fl_gamma: 0.0 # focal loss gamma (efficientDet default gamma=1.5)

+hsv_h: 0 # image HSV-Hue augmentation (fraction)

+hsv_s: 0 # image HSV-Saturation augmentation (fraction)

+hsv_v: 0 # image HSV-Value augmentation (fraction)

+degrees: 0.0 # image rotation (+/- deg)

+translate: 0 # image translation (+/- fraction)

+scale: 0 # image scale (+/- gain)

+shear: 0 # image shear (+/- deg)

+perspective: 0.0 # image perspective (+/- fraction), range 0-0.001

+flipud: 0.0 # image flip up-down (probability)

+fliplr: 0.0 # image flip left-right (probability)

+mosaic: 0.0 # image mosaic (probability)

+mixup: 0.0 # image mixup (probability)

+copy_paste: 0.0 # segment copy-paste (probability)

diff --git a/yolov5/detect.py b/yolov5/detect.py

index 771ce7b..153f2c2 100644

--- a/yolov5/detect.py

+++ b/yolov5/detect.py

@@ -262,7 +262,7 @@ def parse_opt():

def main():

opt = parse_opt()

- #check_requirements(exclude=('tensorboard', 'thop'))

+ check_requirements(exclude=('tensorboard', 'thop'))

run(**vars(opt))

diff --git a/yolov5/export.py b/yolov5/export.py

index e041399..d7cd89a 100644

--- a/yolov5/export.py

+++ b/yolov5/export.py

@@ -132,7 +132,7 @@ def export_torchscript(model, im, file, optimize, prefix=colorstr('TorchScript:'

@try_export

def export_onnx(model, im, file, opset, dynamic, simplify, prefix=colorstr('ONNX:')):

# YOLOv5 ONNX export

- check_requirements('onnx')

+ check_requirements('onnx>=1.12.0')

import onnx

LOGGER.info(f'\n{prefix} starting export with onnx {onnx.__version__}...')

@@ -615,13 +615,13 @@ def run(

LOGGER.info(f'\nExport complete ({time.time() - t:.1f}s)'

f"\nResults saved to {colorstr('bold', file.parent.resolve())}"

f"\nDetect: yolov5 {'detect' if det else 'predict'} --weights {f[-1]} {h}"

- f"\nValidate: yolov5 yolov5 val --weights {f[-1]} {h}"

- f"\nnPython: model = yolov5.load('{f[-1]}') {s}"

+ f"\nValidate: yolov5 val --weights {f[-1]} {h}"

+ f"\nPython: model = yolov5.load('{f[-1]}') {s}"

f"\nVisualize: https://netron.app")

return f # return list of exported files/dirs

-def parse_opt():

+def parse_opt(known=False):

parser = argparse.ArgumentParser()

parser.add_argument('--data', type=str, default=ROOT / 'data/coco128.yaml', help='dataset.yaml path')

parser.add_argument('--weights', nargs='+', type=str, default='yolov5s.pt', help='model.pt path(s)')

@@ -635,7 +635,7 @@ def parse_opt():

parser.add_argument('--int8', action='store_true', help='CoreML/TF INT8 quantization')

parser.add_argument('--dynamic', action='store_true', help='ONNX/TF/TensorRT: dynamic axes')

parser.add_argument('--simplify', action='store_true', help='ONNX: simplify model')

- parser.add_argument('--opset', type=int, default=12, help='ONNX: opset version')

+ parser.add_argument('--opset', type=int, default=17, help='ONNX: opset version')

parser.add_argument('--verbose', action='store_true', help='TensorRT: verbose log')

parser.add_argument('--workspace', type=int, default=4, help='TensorRT: workspace size (GB)')

parser.add_argument('--nms', action='store_true', help='TF: add NMS to model')

@@ -649,7 +649,7 @@ def parse_opt():

nargs='+',

default=['torchscript'],

help='torchscript, onnx, openvino, engine, coreml, saved_model, pb, tflite, edgetpu, tfjs, paddle')

- opt = parser.parse_args()

+ opt = parser.parse_known_args()[0] if known else parser.parse_args()

print_args(vars(opt))

return opt
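`export.parse_opt` now accepts `known=True` and switches to `argparse.parse_known_args`, which returns a `(namespace, leftovers)` pair instead of exiting on unrecognized flags. This is a minimal sketch of that behaviour. The stand-in below keeps only two of the real options and takes an explicit `argv` list so it can be exercised directly. The real function reads `sys.argv`, and the `--some-unknown-flag` argument is hypothetical.

```python
import argparse


def parse_opt(argv=None, known=False):
    # Trimmed-down stand-in for export.parse_opt (only two options reproduced).
    parser = argparse.ArgumentParser()
    parser.add_argument("--weights", nargs="+", type=str, default="yolov5s.pt")
    parser.add_argument("--opset", type=int, default=17)
    # parse_known_args() ignores unrecognized flags; parse_args() exits with an error.
    return parser.parse_known_args(argv)[0] if known else parser.parse_args(argv)


opt = parse_opt(["--weights", "best.pt", "--some-unknown-flag"], known=True)
print(opt.weights, opt.opset)
```

This is useful when the export entry point is driven by a wrapper that passes through extra arguments it does not understand. The default `known=False` keeps the strict behaviour for direct command-line use.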

diff --git a/yolov5/models/common.py b/yolov5/models/common.py

index 2497957..b2de4d0 100644

--- a/yolov5/models/common.py

+++ b/yolov5/models/common.py

@@ -27,7 +27,7 @@

from yolov5.utils import TryExcept

from yolov5.utils.dataloaders import exif_transpose, letterbox

-from yolov5.utils.downloads import attempt_donwload_from_hub

+from yolov5.utils.downloads import attempt_download_from_hub

from yolov5.utils.general import (LOGGER, ROOT, Profile, check_requirements, check_suffix, check_version, colorstr,

increment_path, is_notebook, make_divisible, non_max_suppression, scale_boxes, xywh2xyxy,

xyxy2xywh, yaml_load)

@@ -333,11 +333,10 @@ def __init__(self, weights='yolov5s.pt', device=torch.device('cpu'), dnn=False,

from yolov5.models.experimental import attempt_download, attempt_load # scoped to avoid circular import

super().__init__()

-

w = str(weights[0] if isinstance(weights, list) else weights)

# try to dowload from hf hub

- result = attempt_donwload_from_hub(w, hf_token=hf_token)

+ result = attempt_download_from_hub(w, hf_token=hf_token)

if result is not None:

w = result

diff --git a/yolov5/segment/train.py b/yolov5/segment/train.py

index d0a0b08..e8baca5 100644

--- a/yolov5/segment/train.py

+++ b/yolov5/segment/train.py

@@ -47,7 +47,7 @@

from yolov5.utils.autoanchor import check_anchors

from yolov5.utils.autobatch import check_train_batch_size

from yolov5.utils.callbacks import Callbacks

-from yolov5.utils.downloads import (attempt_donwload_from_hub,

+from yolov5.utils.downloads import (attempt_download_from_hub,

attempt_download, is_url)

from yolov5.utils.general import (LOGGER, TQDM_BAR_FORMAT, check_amp,

check_dataset, check_file, check_git_info,

@@ -119,7 +119,7 @@ def train(hyp, opt, device, callbacks): # hyp is path/to/hyp.yaml or hyp dictio

# Model

# try to download from hf hub

- result = attempt_donwload_from_hub(weights, hf_token=None)

+ result = attempt_download_from_hub(weights, hf_token=None)

if result is not None:

weights = result

check_suffix(weights, '.pt') # check weights

diff --git a/yolov5/train.py b/yolov5/train.py

index 7e8154d..1091aff 100644

--- a/yolov5/train.py

+++ b/yolov5/train.py

@@ -36,6 +36,7 @@

from yolov5.helpers import (convert_coco_dataset_to_yolo, push_to_hfhub,

upload_to_s3)

from yolov5.utils.roboflow import check_dataset_roboflow

+from yolov5 import __version__

FILE = Path(__file__).resolve()

ROOT = FILE.parents[0] # YOLOv5 root directory

@@ -50,25 +51,19 @@

from yolov5.utils.autobatch import check_train_batch_size

from yolov5.utils.callbacks import Callbacks

from yolov5.utils.dataloaders import create_dataloader

-from yolov5.utils.downloads import (attempt_donwload_from_hub,

- attempt_download, is_url)

-from yolov5.utils.general import (LOGGER, TQDM_BAR_FORMAT, check_amp,

- check_dataset, check_file, check_img_size,

- check_suffix, check_yaml, colorstr,

- get_latest_run, increment_path, init_seeds,

- intersect_dicts, labels_to_class_weights,

- labels_to_image_weights, methods, one_cycle,

- print_args, print_mutation, strip_optimizer,

- yaml_save)

+from yolov5.utils.downloads import attempt_download, is_url, attempt_download_from_hub

+from yolov5.utils.general import (LOGGER, TQDM_BAR_FORMAT, check_amp, check_dataset, check_file, check_git_info,

+ check_git_status, check_img_size, check_requirements, check_suffix, check_yaml, colorstr,

+ get_latest_run, increment_path, init_seeds, intersect_dicts, labels_to_class_weights,

+ labels_to_image_weights, methods, one_cycle, print_args, print_mutation, strip_optimizer,

+ yaml_save)

from yolov5.utils.loggers import Loggers

from yolov5.utils.loggers.comet.comet_utils import check_comet_resume

from yolov5.utils.loss import ComputeLoss

from yolov5.utils.metrics import fitness

from yolov5.utils.plots import plot_evolve

-from yolov5.utils.torch_utils import (EarlyStopping, ModelEMA, de_parallel,

- select_device, smart_DDP,

- smart_optimizer, smart_resume,

- torch_distributed_zero_first)

+from yolov5.utils.torch_utils import (EarlyStopping, ModelEMA, de_parallel, select_device, smart_DDP, smart_optimizer,

+ smart_resume, torch_distributed_zero_first)

LOCAL_RANK = int(os.getenv('LOCAL_RANK', -1)) # https://pytorch.org/docs/stable/elastic/run.html

RANK = int(os.getenv('RANK', -1))

@@ -134,7 +129,7 @@ def train(hyp, opt, device, callbacks): # hyp is path/to/hyp.yaml or hyp dictio

# Model

# try to download from hf hub

- result = attempt_donwload_from_hub(weights, hf_token=None)

+ result = attempt_download_from_hub(weights, hf_token=None)

if result is not None:

weights = result

check_suffix(weights, '.pt') # check weights

@@ -222,7 +217,8 @@ def train(hyp, opt, device, callbacks): # hyp is path/to/hyp.yaml or hyp dictio

image_weights=opt.image_weights,

quad=opt.quad,

prefix=colorstr('train: '),

- shuffle=True)

+ shuffle=True,

+ seed=opt.seed)

labels = np.concatenate(dataset.labels, 0)

mlc = int(labels[:, 0].max()) # max label class

assert mlc < nc, f'Label class {mlc} exceeds nc={nc} in {data}. Possible class labels are 0-{nc - 1}'

@@ -271,7 +267,6 @@ def train(hyp, opt, device, callbacks): # hyp is path/to/hyp.yaml or hyp dictio

# nw = min(nw, (epochs - start_epoch) / 2 * nb) # limit warmup to < 1/2 of training

last_opt_step = -1

maps = np.zeros(nc) # mAP per class

- map50s = np.zeros(nc) # mAP50 per class

results = (0, 0, 0, 0, 0, 0, 0) # P, R, mAP@.5, mAP@.5-.95, val_loss(box, obj, cls)

scheduler.last_epoch = start_epoch - 1 # do not move

scaler = torch.cuda.amp.GradScaler(enabled=amp)

@@ -402,7 +397,8 @@ def train(hyp, opt, device, callbacks): # hyp is path/to/hyp.yaml or hyp dictio

'updates': ema.updates,

'optimizer': optimizer.state_dict(),

'opt': vars(opt),

- 'date': datetime.now().isoformat()}

+ 'date': datetime.now().isoformat(),

+ 'yolov5pip_version': __version__}

# Save last, best and delete

torch.save(ckpt, last)
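The checkpoint dict now records `yolov5pip_version`. Checkpoints saved before this change will not contain that key, so code that reads it should tolerate its absence. The sketch below uses plain dicts as stand-ins for a loaded checkpoint, and `pip_version_of` is a hypothetical helper, not part of the package.

```python
def pip_version_of(ckpt):
    """Return the yolov5 pip version stored in a checkpoint dict, or None for older checkpoints."""
    # .get() avoids a KeyError on checkpoints written before the key was added.
    return ckpt.get("yolov5pip_version")


new_ckpt = {"epoch": 0, "date": "2023-01-01T00:00:00", "yolov5pip_version": "7.0.8"}
old_ckpt = {"epoch": 0, "date": "2023-01-01T00:00:00"}  # pre-7.0.8 layout

print(pip_version_of(new_ckpt), pip_version_of(old_ckpt))
```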

@@ -442,7 +438,7 @@ def train(hyp, opt, device, callbacks): # hyp is path/to/hyp.yaml or hyp dictio

strip_optimizer(f) # strip optimizers

if f is best:

LOGGER.info(f'\nValidating {f}...')

- results, _, _, _ = validate.run(

+ results, _, _, _ = validate.run(

data_dict,

batch_size=batch_size // WORLD_SIZE * 2,

imgsz=imgsz,

@@ -557,7 +553,7 @@ def main(opt, callbacks=Callbacks()):

if RANK in {-1, 0}:

print_args(vars(opt))

#check_git_status()

- #check_requirements()

+ check_requirements()

if "roboflow.com" in str(opt.data):

opt.data = check_dataset_roboflow(

@@ -711,6 +707,7 @@ def run(**kwargs):

main(opt)

return opt

+

def run_cli(**kwargs):

'''

To be called from yolov5.cli

@@ -720,6 +717,7 @@ def run_cli(**kwargs):

setattr(opt, k, v)

main(opt)

+

if __name__ == "__main__":

opt = parse_opt()

main(opt)

diff --git a/yolov5/utils/dataloaders.py b/yolov5/utils/dataloaders.py

index b35e35d..8e80535 100644

--- a/yolov5/utils/dataloaders.py

+++ b/yolov5/utils/dataloaders.py

@@ -115,7 +115,8 @@ def create_dataloader(path,

image_weights=False,

quad=False,

prefix='',

- shuffle=False):

+ shuffle=False,

+ seed=0):

if rect and shuffle:

LOGGER.warning('WARNING ⚠️ --rect is incompatible with DataLoader shuffle, setting shuffle=False')

shuffle = False

@@ -141,7 +142,7 @@ def create_dataloader(path,

sampler = None if rank == -1 else distributed.DistributedSampler(dataset, shuffle=shuffle)

loader = DataLoader if image_weights else InfiniteDataLoader # only DataLoader allows for attribute updates

generator = torch.Generator()

- generator.manual_seed(6148914691236517205 + RANK)

+ generator.manual_seed(6148914691236517205 + seed + RANK)

return loader(dataset,

batch_size=batch_size,

shuffle=shuffle and sampler is None,
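`create_dataloader` now takes a `seed` argument and adds it to the generator seed alongside `RANK`. Before this change the shuffle order appears to have depended only on `RANK`, so different `--seed` values produced the same order. This sketch uses Python's `random.Random` as a stand-in for `torch.Generator().manual_seed(...)` purely to show how the additive offset behaves. `shuffled_order` is a hypothetical helper.

```python
import random

BASE_SEED = 6148914691236517205  # constant from create_dataloader (0x5555555555555555)


def shuffled_order(n, seed=0, rank=-1):
    # Stand-in for torch.Generator().manual_seed(BASE_SEED + seed + RANK);
    # random.Random only illustrates how the seed offset behaves.
    rng = random.Random(BASE_SEED + seed + rank)
    order = list(range(n))
    rng.shuffle(order)
    return order


# Same seed and rank: identical order across runs. Different --seed: a new order.
print(shuffled_order(10, seed=0) == shuffled_order(10, seed=0))
```

Because the offsets are simply added, `seed=1` on rank 0 reproduces the stream of `seed=0` on rank 1. Within a single DDP run every rank shares the same `seed`, so ranks still get distinct streams.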

@@ -756,7 +757,7 @@ def load_image(self, i):

r = self.img_size / max(h0, w0) # ratio

if r != 1: # if sizes are not equal

interp = cv2.INTER_LINEAR if (self.augment or r > 1) else cv2.INTER_AREA

- im = cv2.resize(im, (int(w0 * r), int(h0 * r)), interpolation=interp)

+ im = cv2.resize(im, (math.ceil(w0 * r), math.ceil(h0 * r)), interpolation=interp)

return im, (h0, w0), im.shape[:2] # im, hw_original, hw_resized

return self.ims[i], self.im_hw0[i], self.im_hw[i] # im, hw_original, hw_resized
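The `load_image` change replaces `int()` with `math.ceil()` when computing the resized dimensions. `int()` truncates, so a non-integer product makes the short side one pixel smaller, while `math.ceil()` rounds it up. The sketch below mirrors only the size arithmetic. `resized_hw` is a hypothetical helper that returns `(h, w)`, whereas `cv2.resize` itself takes `(w, h)`.

```python
import math


def resized_hw(h0, w0, img_size, use_ceil=True):
    # Mirrors load_image: scale so the long side equals img_size.
    r = img_size / max(h0, w0)
    if r == 1:
        return h0, w0
    rnd = math.ceil if use_ceil else int  # new vs. old rounding
    return rnd(h0 * r), rnd(w0 * r)


print(resized_hw(853, 1280, 640, use_ceil=False))  # (426, 640)
print(resized_hw(853, 1280, 640))                  # (427, 640)
```

Here `r = 0.5` and `853 * 0.5 = 426.5`, which the old code truncated to 426.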

diff --git a/yolov5/utils/downloads.py b/yolov5/utils/downloads.py

index a4bdb9b..394d9a0 100644

--- a/yolov5/utils/downloads.py

+++ b/yolov5/utils/downloads.py

@@ -71,7 +71,7 @@ def github_assets(repository, version='latest'):

return response['tag_name'], [x['name'] for x in response['assets']] # tag, assets

# try to download from hf hub

- result = attempt_donwload_from_hub(file, hf_token=hf_token)

+ result = attempt_download_from_hub(file, hf_token=hf_token)

if result is not None:

file = result

@@ -110,8 +110,6 @@ def github_assets(repository, version='latest'):

min_bytes=1E5,

error_msg=f'{file} missing, try downloading from https://github.com/{repo}/releases/{tag} or {url3}')

-

-

return str(file)

@@ -128,7 +126,7 @@ def get_model_filename_from_hfhub(repo_id, hf_token=None):

return None

-def attempt_donwload_from_hub(repo_id, hf_token=None):

+def attempt_download_from_hub(repo_id, hf_token=None):

from huggingface_hub import hf_hub_download, list_repo_files

from huggingface_hub.utils._errors import RepositoryNotFoundError

from huggingface_hub.utils._validators import HFValidationError
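This diff renames `attempt_donwload_from_hub` to `attempt_download_from_hub` everywhere inside the package. External code that imported the old, misspelled name would then fail with `ImportError`. This change does not include a compatibility shim. The following is a hypothetical sketch of one, with a stub in place of the real Hub lookup.

```python
import warnings


def attempt_download_from_hub(repo_id, hf_token=None):
    # Hypothetical stub; the real function queries the Hugging Face Hub.
    return None


def attempt_donwload_from_hub(repo_id, hf_token=None):
    """Deprecated misspelled alias kept for backward compatibility (hypothetical)."""
    warnings.warn(
        "attempt_donwload_from_hub is deprecated; use attempt_download_from_hub",
        DeprecationWarning,
        stacklevel=2,
    )
    return attempt_download_from_hub(repo_id, hf_token=hf_token)
```

`stacklevel=2` attributes the warning to the caller's line, which makes the old call site easy to find.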

diff --git a/yolov5/utils/general.py b/yolov5/utils/general.py

index 0695da5..a29e893 100644

--- a/yolov5/utils/general.py

+++ b/yolov5/utils/general.py

@@ -750,30 +750,30 @@ def coco80_to_coco91_class(): # converts 80-index (val2014) to 91-index (paper)

def xyxy2xywh(x):

# Convert nx4 boxes from [x1, y1, x2, y2] to [x, y, w, h] where xy1=top-left, xy2=bottom-right

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

- y[:, 0] = (x[:, 0] + x[:, 2]) / 2 # x center

- y[:, 1] = (x[:, 1] + x[:, 3]) / 2 # y center

- y[:, 2] = x[:, 2] - x[:, 0] # width

- y[:, 3] = x[:, 3] - x[:, 1] # height

+ y[..., 0] = (x[..., 0] + x[..., 2]) / 2 # x center

+ y[..., 1] = (x[..., 1] + x[..., 3]) / 2 # y center

+ y[..., 2] = x[..., 2] - x[..., 0] # width

+ y[..., 3] = x[..., 3] - x[..., 1] # height

return y

def xywh2xyxy(x):

# Convert nx4 boxes from [x, y, w, h] to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

- y[:, 0] = x[:, 0] - x[:, 2] / 2 # top left x

- y[:, 1] = x[:, 1] - x[:, 3] / 2 # top left y

- y[:, 2] = x[:, 0] + x[:, 2] / 2 # bottom right x

- y[:, 3] = x[:, 1] + x[:, 3] / 2 # bottom right y

+ y[..., 0] = x[..., 0] - x[..., 2] / 2 # top left x

+ y[..., 1] = x[..., 1] - x[..., 3] / 2 # top left y

+ y[..., 2] = x[..., 0] + x[..., 2] / 2 # bottom right x

+ y[..., 3] = x[..., 1] + x[..., 3] / 2 # bottom right y

return y

def xywhn2xyxy(x, w=640, h=640, padw=0, padh=0):

# Convert nx4 boxes from [x, y, w, h] normalized to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

- y[:, 0] = w * (x[:, 0] - x[:, 2] / 2) + padw # top left x

- y[:, 1] = h * (x[:, 1] - x[:, 3] / 2) + padh # top left y

- y[:, 2] = w * (x[:, 0] + x[:, 2] / 2) + padw # bottom right x

- y[:, 3] = h * (x[:, 1] + x[:, 3] / 2) + padh # bottom right y

+ y[..., 0] = w * (x[..., 0] - x[..., 2] / 2) + padw # top left x

+ y[..., 1] = h * (x[..., 1] - x[..., 3] / 2) + padh # top left y

+ y[..., 2] = w * (x[..., 0] + x[..., 2] / 2) + padw # bottom right x

+ y[..., 3] = h * (x[..., 1] + x[..., 3] / 2) + padh # bottom right y

return y

@@ -782,18 +782,18 @@ def xyxy2xywhn(x, w=640, h=640, clip=False, eps=0.0):

if clip:

clip_boxes(x, (h - eps, w - eps)) # warning: inplace clip

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

- y[:, 0] = ((x[:, 0] + x[:, 2]) / 2) / w # x center

- y[:, 1] = ((x[:, 1] + x[:, 3]) / 2) / h # y center

- y[:, 2] = (x[:, 2] - x[:, 0]) / w # width

- y[:, 3] = (x[:, 3] - x[:, 1]) / h # height

+ y[..., 0] = ((x[..., 0] + x[..., 2]) / 2) / w # x center

+ y[..., 1] = ((x[..., 1] + x[..., 3]) / 2) / h # y center

+ y[..., 2] = (x[..., 2] - x[..., 0]) / w # width

+ y[..., 3] = (x[..., 3] - x[..., 1]) / h # height

return y

def xyn2xy(x, w=640, h=640, padw=0, padh=0):

# Convert normalized segments into pixel segments, shape (n,2)

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

- y[:, 0] = w * x[:, 0] + padw # top left x

- y[:, 1] = h * x[:, 1] + padh # top left y

+ y[..., 0] = w * x[..., 0] + padw # top left x

+ y[..., 1] = h * x[..., 1] + padh # top left y

return y
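The hunks above switch the box helpers from `[:, i]` to `[..., i]`. This makes them index the last (coordinate) axis regardless of the array's rank, so a batched `(b, n, 4)` array converts the same way as a flat `(n, 4)` one. Under the old indexing, `x[:, 0]` on a 3-D array would select the first box of every batch instead of the x1 column. The sketch below is a minimal NumPy-only version of two of the converters. The originals also accept `torch.Tensor` through `x.clone()`.

```python
import numpy as np


def xyxy2xywh(x):
    # [x1, y1, x2, y2] -> [x_center, y_center, w, h] along the last axis
    y = np.copy(x)
    y[..., 0] = (x[..., 0] + x[..., 2]) / 2  # x center
    y[..., 1] = (x[..., 1] + x[..., 3]) / 2  # y center
    y[..., 2] = x[..., 2] - x[..., 0]  # width
    y[..., 3] = x[..., 3] - x[..., 1]  # height
    return y


def xywh2xyxy(x):
    # [x_center, y_center, w, h] -> [x1, y1, x2, y2] along the last axis
    y = np.copy(x)
    y[..., 0] = x[..., 0] - x[..., 2] / 2  # top left x
    y[..., 1] = x[..., 1] - x[..., 3] / 2  # top left y
    y[..., 2] = x[..., 0] + x[..., 2] / 2  # bottom right x
    y[..., 3] = x[..., 1] + x[..., 3] / 2  # bottom right y
    return y


flat = np.array([[10.0, 20.0, 50.0, 60.0]])  # shape (1, 4)
batched = np.stack([flat, flat * 2])  # shape (2, 1, 4)

print(xyxy2xywh(flat))  # [[30. 40. 40. 40.]]
print(xyxy2xywh(batched).shape)  # (2, 1, 4)
```

A round trip through `xywh2xyxy` should return the original corners. This makes a cheap sanity check whenever these helpers change.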

@@ -833,9 +833,9 @@ def scale_boxes(img1_shape, boxes, img0_shape, ratio_pad=None):

gain = ratio_pad[0][0]

pad = ratio_pad[1]

- boxes[:, [0, 2]] -= pad[0] # x padding

- boxes[:, [1, 3]] -= pad[1] # y padding

- boxes[:, :4] /= gain

+ boxes[..., [0, 2]] -= pad[0] # x padding

+ boxes[..., [1, 3]] -= pad[1] # y padding

+ boxes[..., :4] /= gain

clip_boxes(boxes, img0_shape)

return boxes

@@ -862,13 +862,13 @@ def scale_segments(img1_shape, segments, img0_shape, ratio_pad=None, normalize=F

def clip_boxes(boxes, shape):

# Clip boxes (xyxy) to image shape (height, width)

if isinstance(boxes, torch.Tensor): # faster individually

- boxes[:, 0].clamp_(0, shape[1]) # x1

- boxes[:, 1].clamp_(0, shape[0]) # y1

- boxes[:, 2].clamp_(0, shape[1]) # x2

- boxes[:, 3].clamp_(0, shape[0]) # y2

+ boxes[..., 0].clamp_(0, shape[1]) # x1

+ boxes[..., 1].clamp_(0, shape[0]) # y1

+ boxes[..., 2].clamp_(0, shape[1]) # x2

+ boxes[..., 3].clamp_(0, shape[0]) # y2

else: # np.array (faster grouped)

- boxes[:, [0, 2]] = boxes[:, [0, 2]].clip(0, shape[1]) # x1, x2

- boxes[:, [1, 3]] = boxes[:, [1, 3]].clip(0, shape[0]) # y1, y2

+ boxes[..., [0, 2]] = boxes[..., [0, 2]].clip(0, shape[1]) # x1, x2

+ boxes[..., [1, 3]] = boxes[..., [1, 3]].clip(0, shape[0]) # y1, y2

def clip_segments(segments, shape):
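The NumPy branch of `clip_boxes` clips the x columns and the y columns as two groups. Combined with `...`, the same fancy index also works on batched arrays. The sketch below covers only that branch, under the hypothetical name `clip_boxes_np`. The tensor branch instead clamps each column in place with `clamp_`.

```python
import numpy as np


def clip_boxes_np(boxes, shape):
    # Clip xyxy boxes in place to an image of shape (height, width)
    boxes[..., [0, 2]] = boxes[..., [0, 2]].clip(0, shape[1])  # x1, x2
    boxes[..., [1, 3]] = boxes[..., [1, 3]].clip(0, shape[0])  # y1, y2
    return boxes


b = np.array([[[-5.0, 10.0, 700.0, 500.0]]])  # shape (1, 1, 4): one batch, one box
clip_boxes_np(b, (480, 640))  # height 480, width 640
print(b)
```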

@@ -898,6 +898,9 @@ def non_max_suppression(

list of detections, on (n,6) tensor per image [xyxy, conf, cls]

"""

+ # Checks

+ assert 0 <= conf_thres <= 1, f'Invalid Confidence threshold {conf_thres}, valid values are between 0.0 and 1.0'

+ assert 0 <= iou_thres <= 1, f'Invalid IoU {iou_thres}, valid values are between 0.0 and 1.0'

if isinstance(prediction, (list, tuple)): # YOLOv5 model in validation mode, output = (inference_out, loss_out)

prediction = prediction[0] # select only inference output

@@ -909,10 +912,6 @@ def non_max_suppression(

nc = prediction.shape[2] - nm - 5 # number of classes

xc = prediction[..., 4] > conf_thres # candidates

- # Checks

- assert 0 <= conf_thres <= 1, f'Invalid Confidence threshold {conf_thres}, valid values are between 0.0 and 1.0'

- assert 0 <= iou_thres <= 1, f'Invalid IoU {iou_thres}, valid values are between 0.0 and 1.0'

-

# Settings

# min_wh = 2 # (pixels) minimum box width and height

max_wh = 7680 # (pixels) maximum box width and height

@@ -970,17 +969,13 @@ def non_max_suppression(

n = x.shape[0] # number of boxes

if not n: # no boxes

continue

- elif n > max_nms: # excess boxes

- x = x[x[:, 4].argsort(descending=True)[:max_nms]] # sort by confidence

- else:

- x = x[x[:, 4].argsort(descending=True)] # sort by confidence

+ x = x[x[:, 4].argsort(descending=True)[:max_nms]] # sort by confidence and remove excess boxes

# Batched NMS

c = x[:, 5:6] * (0 if agnostic else max_wh) # classes

boxes, scores = x[:, :4] + c, x[:, 4] # boxes (offset by class), scores

i = torchvision.ops.nms(boxes, scores, iou_thres) # NMS

- if i.shape[0] > max_det: # limit detections

- i = i[:max_det]

+ i = i[:max_det] # limit detections

if merge and (1 < n < 3E3): # Merge NMS (boxes merged using weighted mean)

# update boxes as boxes(i,4) = weights(i,n) * boxes(n,4)

iou = box_iou(boxes[i], boxes) > iou_thres # iou matrix
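The simplified NMS block relies on slicing being a no-op when the sequence is shorter than the bound. As a result, `argsort(descending=True)[:max_nms]` and `i[:max_det]` behave exactly like the removed `if`/`else` branches. Adding `cls * max_wh` to every coordinate moves each class into its own region of the plane, so a single class-agnostic NMS call never suppresses boxes across classes.

The sketch below shows that flow in plain Python. A simple greedy NMS stands in for `torchvision.ops.nms`, which likewise discards boxes whose IoU exceeds the threshold. `iou`, `greedy_nms`, and `batched_nms` are hypothetical helpers, and the default `max_nms`/`max_det` values are illustrative.

```python
def iou(a, b):
    # Intersection over union of two xyxy boxes
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thres):
    # Return indices of kept boxes, highest score first
    order = sorted(range(len(boxes)), key=lambda k: scores[k], reverse=True)
    keep = []
    for k in order:
        if all(iou(boxes[k], boxes[j]) <= iou_thres for j in keep):
            keep.append(k)
    return keep


def batched_nms(dets, iou_thres=0.45, max_nms=30000, max_det=300, agnostic=False, max_wh=7680):
    # dets: list of (x1, y1, x2, y2, conf, cls) rows
    assert 0 <= iou_thres <= 1, f'Invalid IoU {iou_thres}'  # validate before any work
    # Sort by confidence and drop excess boxes; slicing past the end is a no-op
    dets = sorted(dets, key=lambda d: d[4], reverse=True)[:max_nms]
    # Offset boxes by class so boxes of different classes can never overlap
    offsets = [0 if agnostic else d[5] * max_wh for d in dets]
    boxes = [[v + off for v in d[:4]] for d, off in zip(dets, offsets)]
    keep = greedy_nms(boxes, [d[4] for d in dets], iou_thres)[:max_det]  # limit detections
    return [dets[k] for k in keep]


dets = [
    (0, 0, 10, 10, 0.9, 0),
    (1, 1, 10, 10, 0.8, 0),  # IoU 0.81 with the first box, same class: suppressed
    (1, 1, 10, 10, 0.7, 1),  # same location, different class: kept
]
print(batched_nms(dets))  # [(0, 0, 10, 10, 0.9, 0), (1, 1, 10, 10, 0.7, 1)]
print(batched_nms(dets, agnostic=True))  # [(0, 0, 10, 10, 0.9, 0)]
```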

diff --git a/yolov5/utils/loggers/__init__.py b/yolov5/utils/loggers/__init__.py

index ed1354e..b93d3b5 100644

--- a/yolov5/utils/loggers/__init__.py

+++ b/yolov5/utils/loggers/__init__.py

@@ -115,6 +115,17 @@ def __init__(self, save_dir=None, weights=None, opt=None, hyp=None, logger=None,

if not neptune:

prefix = colorstr('Neptune AI: ')

s = f"{prefix}run 'pip install neptune-client' to automatically track and visualize YOLOv5 🚀 runs"

+ # if not wandb:

+ # prefix = colorstr('Weights & Biases: ')

+ # s = f"{prefix}run 'pip install wandb' to automatically track and visualize YOLOv5 🚀 runs in Weights & Biases"

+ # self.logger.info(s)

+ # if not clearml:

+ # prefix = colorstr('ClearML: ')

+ # s = f"{prefix}run 'pip install clearml' to automatically track, visualize and remotely train YOLOv5 🚀 in ClearML"

+ # self.logger.info(s)

+ # if not comet_ml:

+ # prefix = colorstr('Comet: ')

+ # s = f"{prefix}run 'pip install comet_ml' to automatically track and visualize YOLOv5 🚀 runs in Comet"

self.logger.info(s)

# TensorBoard

s = self.save_dir

@@ -129,6 +140,10 @@ def __init__(self, save_dir=None, weights=None, opt=None, hyp=None, logger=None,

run_id = torch.load(self.weights).get('wandb_id') if self.opt.resume and not wandb_artifact_resume else None

self.opt.hyp = self.hyp # add hyperparameters

self.wandb = WandbLogger(self.opt, run_id)

+ # temporary warning: nested artifacts are not supported after wandb 0.12.10

+ # if pkg.parse_version(wandb.__version__) >= pkg.parse_version('0.12.11'):

+ # s = "YOLOv5 temporarily requires wandb version 0.12.10 or below. Some features may not work as expected."

+ # self.logger.warning(s)

else:

self.wandb = None
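The commented-out wandb check compares versions with `pkg.parse_version` instead of plain strings. Plain string comparison would sort `'0.12.9'` after `'0.12.11'`. The sketch below is a stdlib-only version of the same `>= 0.12.11` test. It assumes plain dotted numeric versions and does not handle pre-release tags such as `0.13.0rc1`, which `parse_version` does. `parse_simple_version` and `needs_wandb_warning` are hypothetical names.

```python
def parse_simple_version(v):
    # '0.12.11' -> (0, 12, 11); assumes purely numeric dot-separated parts
    return tuple(int(part) for part in v.split('.'))


def needs_wandb_warning(version, cutoff='0.12.11'):
    # True when nested artifacts are unsupported, i.e. version >= cutoff
    return parse_simple_version(version) >= parse_simple_version(cutoff)


print('0.12.9' >= '0.12.11')  # True: lexicographic comparison gets this wrong
print(needs_wandb_warning('0.12.9'))  # False
print(needs_wandb_warning('0.13.1'))  # True
```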

diff --git a/yolov5/utils/loggers/comet/README.md b/yolov5/utils/loggers/comet/README.md

index 3a51cb9..8a361e2 100644

--- a/yolov5/utils/loggers/comet/README.md

+++ b/yolov5/utils/loggers/comet/README.md

@@ -2,13 +2,13 @@

# YOLOv5 with Comet

-This guide will cover how to use YOLOv5 with [Comet](https://bit.ly/yolov5-readme-comet)

+This guide will cover how to use YOLOv5 with [Comet](https://bit.ly/yolov5-readme-comet2)

# About Comet

Comet builds tools that help data scientists, engineers, and team leaders accelerate and optimize machine learning and deep learning models.

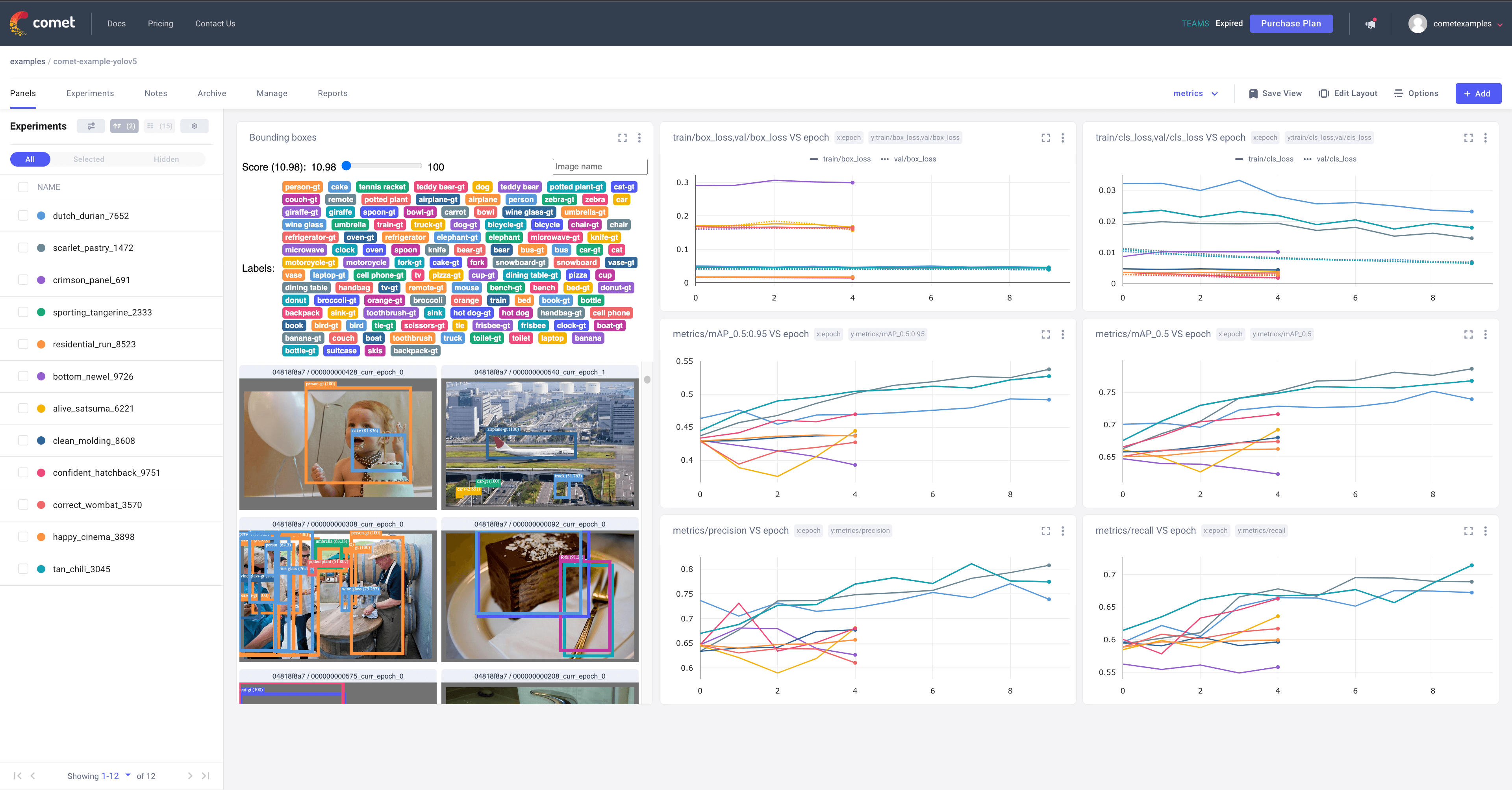

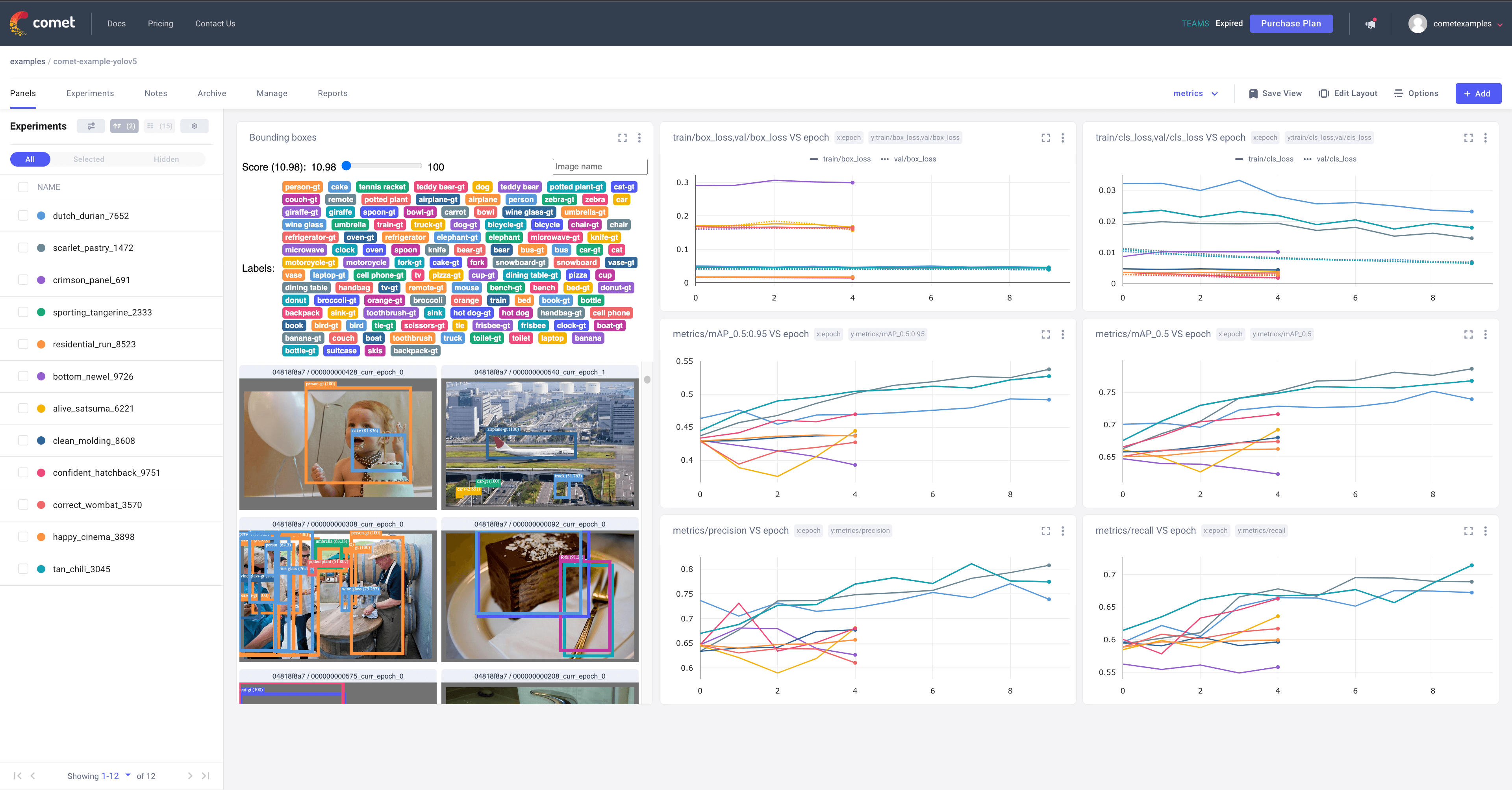

-Track and visualize model metrics in real time, save your hyperparameters, datasets, and model checkpoints, and visualize your model predictions with [Comet Custom Panels](https://bit.ly/yolov5-colab-comet-panels)!

+Track and visualize model metrics in real time, save your hyperparameters, datasets, and model checkpoints, and visualize your model predictions with [Comet Custom Panels](https://www.comet.com/docs/v2/guides/comet-dashboard/code-panels/about-panels/?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github)!

Comet makes sure you never lose track of your work and makes it easy to share results and collaborate across teams of all sizes!

# Getting Started

@@ -51,10 +51,10 @@ python train.py --img 640 --batch 16 --epochs 5 --data coco128.yaml --weights yo

That's it! Comet will automatically log your hyperparameters, command line arguments, and training and validation metrics. You can visualize and analyze your runs in the Comet UI.

- +

+ # Try out an Example!

-Check out an example of a [completed run here](https://www.comet.com/examples/comet-example-yolov5/a0e29e0e9b984e4a822db2a62d0cb357?experiment-tab=chart&showOutliers=true&smoothing=0&transformY=smoothing&xAxis=step&ref=yolov5&utm_source=yolov5&utm_medium=affilliate&utm_campaign=yolov5_comet_integration)

+Check out an example of a [completed run here](https://www.comet.com/examples/comet-example-yolov5/a0e29e0e9b984e4a822db2a62d0cb357?experiment-tab=chart&showOutliers=true&smoothing=0&transformY=smoothing&xAxis=step&utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github)

Or better yet, try it out yourself in this Colab Notebook

@@ -119,7 +119,7 @@ You can control the frequency of logged predictions and the associated images by

**Note:** The YOLOv5 validation dataloader will default to a batch size of 32, so you will have to set the logging frequency accordingly.

-Here is an [example project using the Panel](https://www.comet.com/examples/comet-example-yolov5?shareable=YcwMiJaZSXfcEXpGOHDD12vA1&ref=yolov5&utm_source=yolov5&utm_medium=affilliate&utm_campaign=yolov5_comet_integration)

+Here is an [example project using the Panel](https://www.comet.com/examples/comet-example-yolov5?shareable=YcwMiJaZSXfcEXpGOHDD12vA1&utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github)

```shell

@@ -161,7 +161,7 @@ env COMET_LOG_PER_CLASS_METRICS=true python train.py \

## Uploading a Dataset to Comet Artifacts

-If you would like to store your data using [Comet Artifacts](https://www.comet.com/docs/v2/guides/data-management/using-artifacts/#learn-more?ref=yolov5&utm_source=yolov5&utm_medium=affilliate&utm_campaign=yolov5_comet_integration), you can do so using the `upload_dataset` flag.

+If you would like to store your data using [Comet Artifacts](https://www.comet.com/docs/v2/guides/data-management/using-artifacts/#learn-more?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github), you can do so using the `upload_dataset` flag.

The dataset must be organized as described in the [YOLOv5 documentation](https://docs.ultralytics.com/tutorials/train-custom-datasets/#3-organize-directories). The dataset config `yaml` file must follow the same format as the `coco128.yaml` file.

@@ -251,6 +251,6 @@ comet optimizer -j utils/loggers/comet/hpo.py \

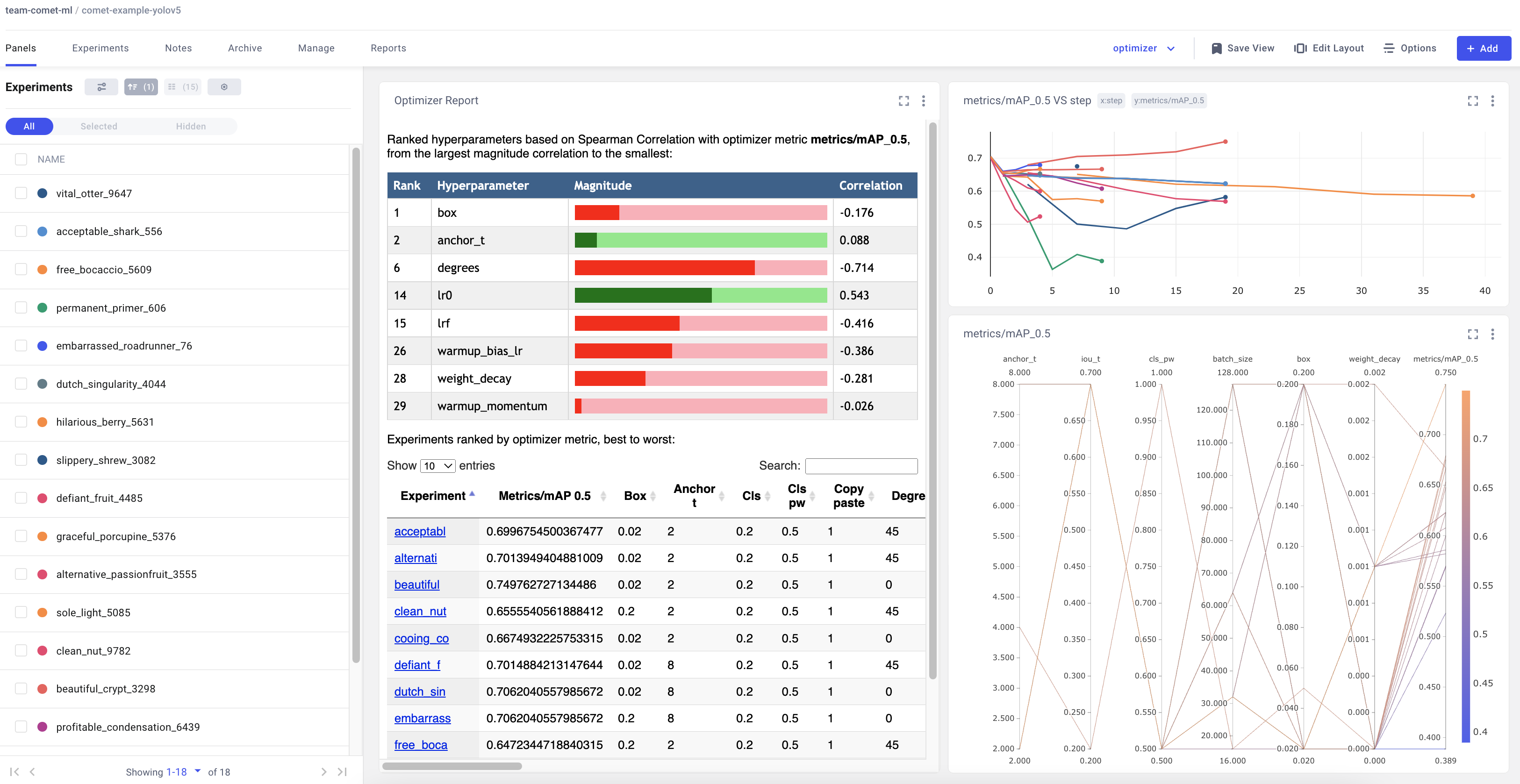

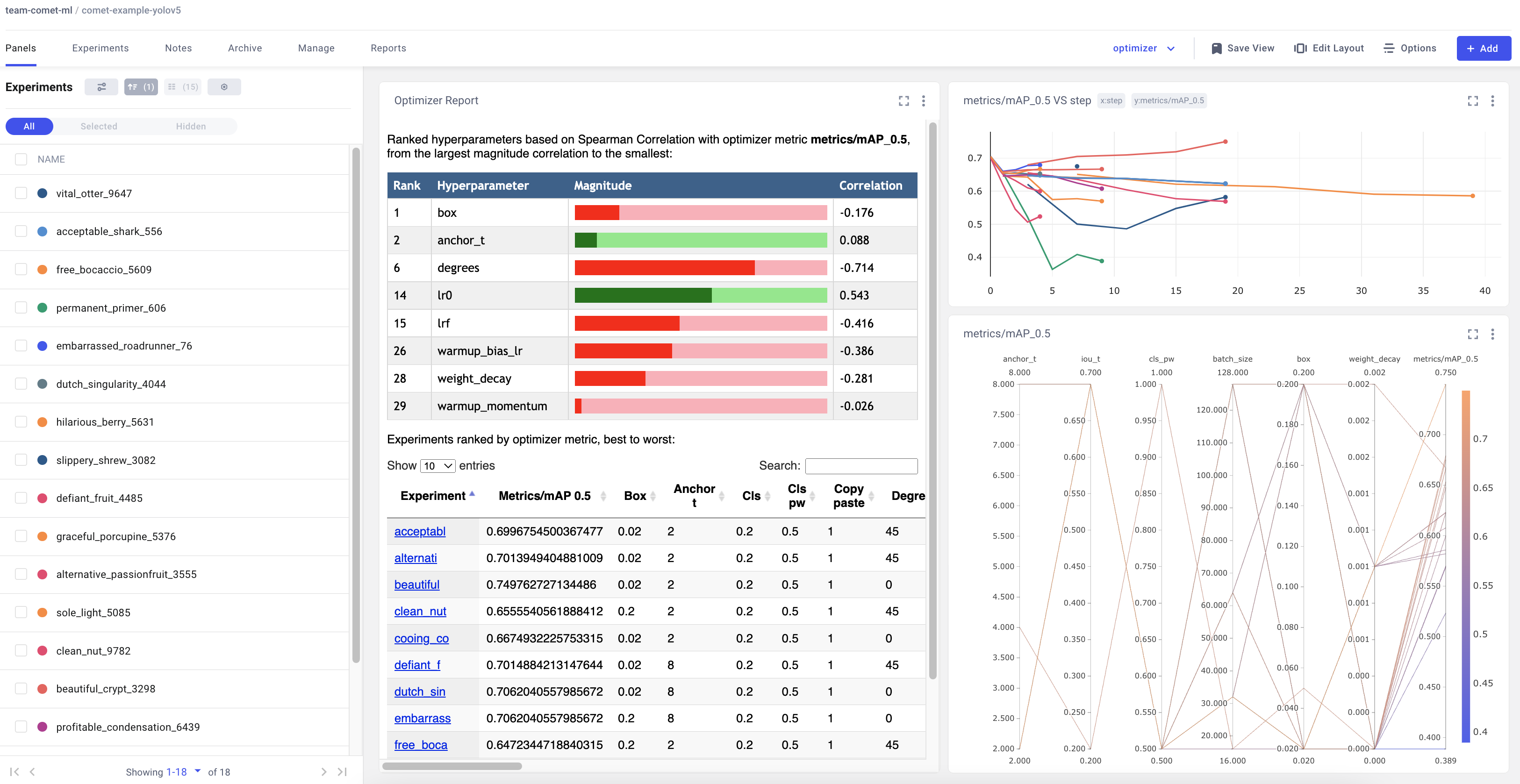

### Visualizing Results

-Comet provides a number of ways to visualize the results of your sweep. Take a look at a [project with a completed sweep here](https://www.comet.com/examples/comet-example-yolov5/view/PrlArHGuuhDTKC1UuBmTtOSXD/panels?ref=yolov5&utm_source=yolov5&utm_medium=affilliate&utm_campaign=yolov5_comet_integration)

+Comet provides a number of ways to visualize the results of your sweep. Take a look at a [project with a completed sweep here](https://www.comet.com/examples/comet-example-yolov5/view/PrlArHGuuhDTKC1UuBmTtOSXD/panels?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github)

diff --git a/yolov5/utils/loggers/wandb/wandb_utils.py b/yolov5/utils/loggers/wandb/wandb_utils.py

index 35485f4..17c4b00 100644

--- a/yolov5/utils/loggers/wandb/wandb_utils.py

+++ b/yolov5/utils/loggers/wandb/wandb_utils.py

@@ -17,7 +17,7 @@

from yolov5 import __version__

from yolov5.utils.dataloaders import LoadImagesAndLabels, img2label_paths

-from yolov5.utils.general import LOGGER, check_dataset, check_file

+from utils.general import LOGGER, check_dataset, check_file

try:

import wandb

diff --git a/yolov5/utils/metrics.py b/yolov5/utils/metrics.py

index 52c7919..ff6946a 100644

--- a/yolov5/utils/metrics.py

+++ b/yolov5/utils/metrics.py

@@ -208,7 +208,7 @@ def plot(self, normalize=True, save_dir='', names=()):

vmin=0.0,

xticklabels=ticklabels,

yticklabels=ticklabels).set_facecolor((1, 1, 1))

- ax.set_ylabel('True')

+ ax.set_xlabel('True')

ax.set_ylabel('Predicted')

ax.set_title('Confusion Matrix')

fig.savefig(Path(save_dir) / 'confusion_matrix.png', dpi=250)
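The axis-label swap matters because a heatmap draws matrix row i at the i-th y position and column j at the j-th x position. As we read the upstream code, YOLOv5's `ConfusionMatrix` increments `matrix[predicted_class, true_class]`. Rows are therefore predictions and columns are ground truth, which puts `'Predicted'` on the y-axis and `'True'` on the x-axis. The plain-Python sketch below shows that indexing. The `confusion_matrix` helper is hypothetical and omits YOLOv5's extra background row and column.

```python
def confusion_matrix(pred, true, nc):
    # matrix[p][t] counts samples predicted as class p whose true class is t
    # rows -> predicted (heatmap y-axis), columns -> true (heatmap x-axis)
    matrix = [[0] * nc for _ in range(nc)]
    for p, t in zip(pred, true):
        matrix[p][t] += 1
    return matrix


m = confusion_matrix(pred=[0, 0, 1, 1], true=[0, 1, 1, 1], nc=2)
for row_label, row in zip(('pred 0', 'pred 1'), m):
    print(row_label, row)
# pred 0 [1, 1]
# pred 1 [0, 2]
```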

diff --git a/yolov5/utils/plots.py b/yolov5/utils/plots.py

index fffa5bc..08a9c71 100644

--- a/yolov5/utils/plots.py

+++ b/yolov5/utils/plots.py

@@ -88,7 +88,8 @@ def box_label(self, box, label='', color=(128, 128, 128), txt_color=(255, 255, 2

if self.pil or not is_ascii(label):

self.draw.rectangle(box, width=self.lw, outline=color) # box

if label:

- w, h = self.font.getsize(label) # text width, height

+ w, h = self.font.getsize(label) # text width, height (WARNING: deprecated in Pillow 9.2.0)

+ # _, _, w, h = self.font.getbbox(label) # text width, height (New)

outside = box[1] - h >= 0 # label fits outside box

self.draw.rectangle(

(box[0], box[1] - h if outside else box[1], box[0] + w + 1,
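`FreeTypeFont.getsize` was deprecated in Pillow 9.2.0 and removed in Pillow 10, which explains the commented-out `getbbox` line. `getbbox` returns `(left, top, right, bottom)`. Keeping only `right` and `bottom`, as the commented line does, should reproduce what `getsize` reported for the default anchor with no stroke.

The duck-typed sketch below prefers `getbbox` and falls back to `getsize` on fonts that lack it. `text_size`, `StubFont`, and `LegacyFont` are hypothetical stand-ins, so the sketch runs without Pillow.

```python
def text_size(font, text):
    # Prefer getbbox (newer Pillow); keep right/bottom to match the old getsize result
    if hasattr(font, 'getbbox'):
        _, _, w, h = font.getbbox(text)
        return w, h
    return font.getsize(text)  # older font objects without getbbox


class StubFont:
    # Stand-in for a Pillow font: 6 px per character, 2 px top offset, bottom at 13 px
    def getbbox(self, text):
        return (0, 2, 6 * len(text), 13)


class LegacyFont:
    # Stand-in for an old font object that only offers getsize
    def getsize(self, text):
        return (5 * len(text), 12)


print(text_size(StubFont(), 'person'))  # (36, 13)
print(text_size(LegacyFont(), 'ab'))  # (10, 12)
```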

diff --git a/yolov5/utils/roboflow.py b/yolov5/utils/roboflow.py

index b243cd7..aa7d919 100644

--- a/yolov5/utils/roboflow.py

+++ b/yolov5/utils/roboflow.py

@@ -19,7 +19,7 @@ def extract_roboflow_metadata(url: str) -> tuple:

def resolve_roboflow_model_format(task: str) -> str:

task_format_mapping = {

- "detect": "yolov8",

+ "detect": "yolov5",

"segment": "yolov5",

"classify": "folder"

}

diff --git a/yolov5/utils/segment/dataloaders.py b/yolov5/utils/segment/dataloaders.py

index 9de6f0f..d66b361 100644

--- a/yolov5/utils/segment/dataloaders.py

+++ b/yolov5/utils/segment/dataloaders.py

@@ -37,7 +37,8 @@ def create_dataloader(path,

prefix='',

shuffle=False,

mask_downsample_ratio=1,

- overlap_mask=False):

+ overlap_mask=False,

+ seed=0):

if rect and shuffle:

LOGGER.warning('WARNING ⚠️ --rect is incompatible with DataLoader shuffle, setting shuffle=False')

shuffle = False

@@ -64,7 +65,7 @@ def create_dataloader(path,

sampler = None if rank == -1 else distributed.DistributedSampler(dataset, shuffle=shuffle)

loader = DataLoader if image_weights else InfiniteDataLoader # only DataLoader allows for attribute updates

generator = torch.Generator()

- generator.manual_seed(6148914691236517205 + RANK)

+ generator.manual_seed(6148914691236517205 + seed + RANK)

return loader(

dataset,

batch_size=batch_size,
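Adding `seed` to the generator's base value lets a caller change the DataLoader's random stream. Presumably this is so the training script's seed option can be forwarded, but the call site is not part of this hunk. `RANK` still gives each DDP process its own stream. The base constant 6148914691236517205 equals `0x5555555555555555`.

The stdlib sketch below shows the seeding arithmetic, with `random.Random` standing in for `torch.Generator`. The two produce different sequences; only the seeding pattern is shared. `loader_seed` and `shuffled_indices` are hypothetical helpers, and `rank=-1` mirrors the non-DDP default assumed for `RANK`.

```python
import random

BASE_SEED = 6148914691236517205  # == 0x5555555555555555, as in create_dataloader


def loader_seed(seed=0, rank=-1):
    # Mirrors generator.manual_seed(6148914691236517205 + seed + RANK)
    return BASE_SEED + seed + rank


def shuffled_indices(n, seed=0, rank=-1):
    # random.Random stands in for torch.Generator here
    rng = random.Random(loader_seed(seed, rank))
    order = list(range(n))
    rng.shuffle(order)
    return order


same_a = shuffled_indices(10, seed=0)
same_b = shuffled_indices(10, seed=0)
other = shuffled_indices(10, seed=1)
print(same_a == same_b)  # True: same seed, same order
print(same_a == other)  # different seed, so almost certainly a different order
```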

diff --git a/yolov5/val.py b/yolov5/val.py

index e8c6dd7..2b880f9 100644

--- a/yolov5/val.py

+++ b/yolov5/val.py

@@ -336,7 +336,7 @@ def run(

json.dump(jdict, f)

try: # https://github.com/cocodataset/cocoapi/blob/master/PythonAPI/pycocoEvalDemo.ipynb

- check_requirements('pycocotools')

+ check_requirements('pycocotools>=2.0.6')

from pycocotools.coco import COCO

from pycocotools.cocoeval import COCOeval

@@ -400,7 +400,7 @@ def parse_opt():

def main():

opt = parse_opt()

- #check_requirements(exclude=('tensorboard', 'thop'))

+ check_requirements(exclude=('tensorboard', 'thop'))

if opt.task in ('train', 'val', 'test'): # run normally

if opt.conf_thres > 0.001: # https://github.com/ultralytics/yolov5/issues/1466
