diff --git a/README.md b/README.md

index 75ad127..9161d6a 100644

--- a/README.md

+++ b/README.md

@@ -1,4 +1,3 @@

-

# [中文] | [[English]](https://github.com/guojianyang/cv-detect-robot/blob/main/README_EN.md)

# CDR (cv-detect-robot) Project Introduction: Industrial-Grade Vision Algorithm Deployment at the Edge

@@ -10,6 +9,19 @@

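> ( **Note (3)**): As the author and the team (MICRO_lab, Sun Yat-sen University) keep learning and growing, this project will be maintained and updated from time to time. Our abilities are limited, so if you find errors or shortcomings, please point them out or leave a message in an `issue`.

> ( **Note (4)**): To make learning and discussion easier, a WeChat group for the **CDR (cv-detect-robot) project** has been set up. Please add the group admin `小郭` on WeChat (ID `17370042325`) to be invited to the group.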

+***

+***

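+# CDR moving toward practical use: what's new in v3.0 🔥🔥🔥🔥🔥

+- Added sub-projects (6) and (7), the Python and C++ interfaces for yolox respectively.

+- In sub-project (5), camera detection and video-file detection are now integrated into a single Python program; see the README.md in the same folder for usage.

+- Fixed the issue in sub-projects (1), (2), (3) and (4) where the live camera detection window covered the entire screen.

+- Fixed the frequently occurring `mmap err:Bad file descriptor` error.

+- A DCF object tracker is now cascaded after both the yolov5 and yolox detection models.

+- All sub-projects now support detection and tracking restricted to specified target classes.

+- Published a README.md document on what to watch out for when running the CDR project on Nano and NX boards.

+- Published a README.md document on how to generate engine files.

+- Fixed the issue in sub-project (2), yolov5-deepstream-python, where the ROS node always read a constant 24 targets.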

+

***

***

# CDR Sub-project (1) (yolov5-ros-deepstream)

@@ -50,5 +62,24 @@

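> For the final video detection results, see [resnet10-ros-deepstream detection](https://www.bilibili.com/video/BV1Xg411w78P/)

+# CDR Sub-project (6) (yolox-deepstream-python)

+- yolox-deepstream-python sub-project overview

+> This sub-project combines the yolox detection algorithm with the TensorRT inference acceleration engine and runs under NVIDIA's DeepStream framework. It uses a different way of generating the engine file, converting from ONNX to engine; this approach is more flexible, is becoming increasingly stable, and follows the mainstream direction of the industry. In any software directory on the same hardware platform, create a `client.py` script that reads physical memory (it contains only a single memory-reading code segment) to read the data out of the designated physical memory. Once reading succeeds, that code segment can be inserted into any Python project that needs object detection data, so that the project can obtain the detection data.

+

+> For the detailed tutorial, see [yolox-deepstream-python](https://github.com/guojianyang/cv-detect-robot/tree/main/yolox-ros-deepstream)

+

+> For the final video detection results, see [yolox-deepstream-python detection](https://www.bilibili.com/video/BV1k34y1o7Ck/)

+

+# CDR Sub-project (7) (yolox-deepstream-cpp)

+- yolox-deepstream-cpp sub-project overview

+> This sub-project combines the yolox detection algorithm with the TensorRT inference acceleration engine and runs under NVIDIA's DeepStream framework. It uses a different way of generating the engine file, converting from ONNX to engine; this approach is more flexible, is becoming increasingly stable, and follows the mainstream direction of the industry. In any software directory on the same hardware platform, create a `yolox_tensor.cpp` file that reads physical memory (it contains only a single memory-reading code segment); once compiled, it reads the data out of the designated physical memory. Once reading succeeds, that code segment can be inserted into any C++ project that needs object detection data, so that the project can obtain the detection data.

+

+> For the detailed tutorial, see [yolox-deepstream-cpp](https://github.com/guojianyang/cv-detect-robot/tree/main/yolox-ros-deepstream)

+

+> For the final video detection results, see [yolox-deepstream-cpp detection](https://www.bilibili.com/video/BV1k34y1o7Ck/)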

+

# [CDR project: common problems and solutions](https://github.com/guojianyang/cv-detect-robot/wiki/CDR%E9%A1%B9%E7%9B%AE%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98%E5%8F%8A%E5%85%B6%E8%A7%A3%E5%86%B3%E6%96%B9%E6%A1%88(Common-problems-and-solutions))

+# [Notes on running the CDR project on Jetson Nano and NX](https://github.com/guojianyang/cv-detect-robot/wiki/Jetson-Nano%E5%92%8C-NX%E5%9C%A8%E8%BF%90%E8%A1%8CCDR%E9%A1%B9%E7%9B%AE%E6%97%B6%E6%B3%A8%E6%84%8F%E4%BA%8B%E9%A1%B9)

+# [How to generate an engine file from a wts file](https://github.com/guojianyang/cv-detect-robot/wiki/wts%E6%96%87%E4%BB%B6%E7%94%9F%E6%88%90engine%E6%96%87%E4%BB%B6%E7%9A%84%E6%96%B9%E6%B3%95)

+
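The README text for sub-project (6) describes a `client.py` that contains a single code segment for reading detection data out of a designated memory region. The following is a minimal sketch of that idea using Python's standard `mmap` module, not the project's actual `client.py`. The payload layout (`<frame>-- [n, n, ...]` followed by padding, six integers per target) is an assumption modelled loosely on the `internal_memory.txt` contents that appear elsewhere in this diff, and `read_detections` and `FIELDS_PER_TARGET` are hypothetical names.

```python
import mmap
import os
import tempfile

FIELDS_PER_TARGET = 6  # assumption: class, two coordinates, width, height, ID


def read_detections(path):
    """Map `path` read-only and parse a '<frame>-- [n, n, ...]' payload."""
    with open(path, "rb") as handle:
        size = os.fstat(handle.fileno()).st_size
        if size == 0:  # mmap refuses to map an empty file
            return []
        with mmap.mmap(handle.fileno(), size, access=mmap.ACCESS_READ) as mem:
            text = mem[:].decode("ascii", errors="ignore")
    # Only the first bracketed list is parsed; anything after it is padding.
    start, end = text.find("["), text.find("]")
    if start < 0 or end < start:
        return []
    numbers = [int(tok) for tok in text[start + 1:end].split(",") if tok.strip()]
    usable = len(numbers) - len(numbers) % FIELDS_PER_TARGET
    return [numbers[i:i + FIELDS_PER_TARGET]
            for i in range(0, usable, FIELDS_PER_TARGET)]


if __name__ == "__main__":
    # Hypothetical payload; the trailing 1s imitate padding after the list.
    payload = "2-- [0, 407, 570, 159, 149, 240, 2, 475, 54, 135, 320, 273]" + "1" * 32
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
        tmp.write(payload)
    try:
        for target in read_detections(tmp.name):
            print(target)
    finally:
        os.remove(tmp.name)
```

Opening the file and mapping it inside `with` blocks keeps the file descriptor valid for the whole lifetime of the mapping. That is one plausible way to avoid descriptor-related mmap failures, though the source does not say how the `mmap err:Bad file descriptor` error was actually fixed.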

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/README.md b/resnet10-ros-deepstream/deepstream_python_apps/README.md

deleted file mode 100644

index 7b97439..0000000

--- a/resnet10-ros-deepstream/deepstream_python_apps/README.md

+++ /dev/null

@@ -1,58 +0,0 @@

-# DeepStream Python Apps

-

-This repository contains Python bindings and sample applications for the [DeepStream SDK](https://developer.nvidia.com/deepstream-sdk).

-

-SDK version supported: 5.1

-

-Download the latest release package complete with bindings and sample applications from the [release section](../../releases).

-

-Please report any issues or bugs on the [Deepstream SDK Forums](https://devtalk.nvidia.com/default/board/209).

-

-* [Python Bindings](#metadata_bindings)

-* [Sample Applications](#sample_applications)

-

-

-## Python Bindings

-

-DeepStream pipelines can be constructed using Gst Python, the GStreamer framework's Python bindings. For accessing DeepStream MetaData,

-Python bindings are provided in the form of a compiled module which is included in the DeepStream SDK. This module is generated using [Pybind11](https://github.com/pybind/pybind11).

-

-

-

-

-

-These bindings support a Python interface to the MetaData structures and functions. Usage of this interface is documented in the [HOW-TO Guide](HOWTO.md) and demonstrated in the sample applications.

-This release adds bindings for decoded image buffers (NvBufSurface) as well as inference output tensors (NvDsInferTensorMeta).

-

-

-## Sample Applications

-

-Sample applications provided here demonstrate how to work with DeepStream pipelines using Python.

-The sample applications require [MetaData Bindings](#metadata_bindings) to work.

-

-To run the sample applications or write your own, please consult the [HOW-TO Guide](HOWTO.md)

-

-

-

-

-

-We currently provide the following sample applications:

-* [deepstream-test1](apps/deepstream-test1) -- 4-class object detection pipeline

-* [deepstream-test2](apps/deepstream-test2) -- 4-class object detection, tracking and attribute classification pipeline

-* [deepstream-test3](apps/deepstream-test3) -- multi-stream pipeline performing 4-class object detection

-* [deepstream-test4](apps/deepstream-test4) -- msgbroker for sending analytics results to the cloud

-* [deepstream-imagedata-multistream](apps/deepstream-imagedata-multistream) -- multi-stream pipeline with access to image buffers

-* [deepstream-ssd-parser](apps/deepstream-ssd-parser) -- SSD model inference via Triton server with output parsing in Python

-* [deepstream-test1-usbcam](apps/deepstream-test1-usbcam) -- deepstream-test1 pipeline with USB camera input

-* [deepstream-test1-rtsp-out](apps/deepstream-test1-rtsp-out) -- deepstream-test1 pipeline with RTSP output

-* [deepstream-opticalflow](apps/deepstream-opticalflow) -- optical flow and visualization pipeline with flow vectors returned in NumPy array

-* [deepstream-segmentation](apps/deepstream-segmentation) -- segmentation and visualization pipeline with segmentation mask returned in NumPy array

-* [deepstream-nvdsanalytics](apps/deepstream-nvdsanalytics) -- multistream pipeline with analytics plugin

-* [runtime_source_add_delete](apps/runtime_source_add_delete) -- add/delete source streams at runtime

-

-Of these applications, the following have been updated or added in this release:

-* runtime_source_add_delete -- add/delete source streams at runtime

-

-Detailed application information is provided in each application's subdirectory under [apps](apps).

-

-

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/README.md b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/README.md

old mode 100644

new mode 100755

index 561a7b3..1246a1d

--- a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/README.md

+++ b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/README.md

@@ -1,3 +1,13 @@

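-# test7: build a playback-only example that prints object detection data in real time (coordinates, tracking ID and class)

+# test7: build a playback-only example that prints object detection data in real time (coordinates, confidence and class)

+The first argument after the python3 command selects the input mode: "1" for video-file input, "2" for live camera input

+- video_file:

+python3 deepstream-test_7_usb_file.py 1 /opt/nvidia/deepstream/deepstream-5.1/samples/streams/sample_720p.h264

+(Note): On Jetson NX, the command above may raise an error. In that case, replace args[2] in `source_file.set_property('location', args[2])` on line 183 with the absolute path of the video file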

+

+

+- real_video:

+python3 deepstream-test_7_usb_file.py 2 /dev/video0

+

+

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/client_ros_7.py b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/client_ros_7.py

old mode 100644

new mode 100755

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/deepstream-test_7.py b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/deepstream-test_7_usb_file.py

old mode 100644

new mode 100755

similarity index 74%

rename from resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/deepstream-test_7.py

rename to resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/deepstream-test_7_usb_file.py

index 0ece48c..a1d1f25

--- a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/deepstream-test_7.py

+++ b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/deepstream-test_7_usb_file.py

@@ -17,6 +17,7 @@

fps_streams = {}

frame_count = {}

saved_count = {}

+Detect_Mode = 0 # default is 0; 1 = read from a video file, 2 = live USB camera detection

bounding_bboxes =[]

PGIE_CLASS_ID_VEHICLE = 0

PGIE_CLASS_ID_BICYCLE = 1

@@ -75,7 +76,7 @@ def osd_sink_pad_buffer_probe(pad, info, u_data):

bounding_bboxes.append(int(width))

bounding_bboxes.append(int(height))

bounding_bboxes.append(int(object_id))

- obj_meta.rect_params.border_color.set(0.0, 0.0, 0.0, 1.0)

+ obj_meta.rect_params.border_color.set(1.0, 0.0, 0.0, 0.0) #-------- red, green, blue, alpha

try:

l_obj = l_obj.next

@@ -133,15 +134,32 @@ def osd_sink_pad_buffer_probe(pad, info, u_data):

return Gst.PadProbeReturn.OK

-def main():

+def main(args):

+ if len(args)!=3:

+ sys.stderr.write(" \n\n\nSorry, no video source was given!\n\n\n")

+ sys.exit(1)

+ else:

+ if args[1]=="1":

+ Detect_Mode = 1

+ print("Video-file detection mode selected")

+ if args[1]=="2":

+ Detect_Mode = 2

+ print("Live video detection mode selected")

GObject.threads_init()

Gst.init(None)

-

print("Creating Pipeline \n")

pipeline = Gst.Pipeline()

- source = Gst.ElementFactory.make("filesrc", "file-source")

- h264parser = Gst.ElementFactory.make("h264parse", "h264-parper") # H.264 parsing

- decoder = Gst.ElementFactory.make("nvv4l2decoder"," nvv4l2-decoder") # H.264 decoding

+ if Detect_Mode ==1:

+ source_file = Gst.ElementFactory.make("filesrc", "file-source")

+ h264parser = Gst.ElementFactory.make("h264parse", "h264-parper") # H.264 parsing

+ decoder = Gst.ElementFactory.make("nvv4l2decoder"," nvv4l2-decoder") # H.264 decoding

+ if Detect_Mode == 2:

+ source_usb = Gst.ElementFactory.make("v4l2src", "usb-cam-source")

+ caps_v4l2src = Gst.ElementFactory.make("capsfilter", "v4l2src_caps")

+ vidconvsrc = Gst.ElementFactory.make("videoconvert", "convertor_src1")

+ nvvidconvsrc = Gst.ElementFactory.make("nvvideoconvert", "convertor_src2")

+ caps_vidconvsrc = Gst.ElementFactory.make("capsfilter", "nvmm_caps")

+

streammux = Gst.ElementFactory.make("nvstreammux", "Stream-muxer") # autobatch: batches frames automatically

pgie = Gst.ElementFactory.make("nvinfer", "primary-inference") # primary network (the main inference engine)

@@ -153,6 +171,7 @@ def main():

if not sink_real:

sys.stderr.write(" Unable to create egl sink \n")

+

tracker = Gst.ElementFactory.make("nvtracker", "tracker")

if not tracker:

sys.stderr.write(" Unable to create tracker \n")

@@ -160,15 +179,21 @@ def main():

sgie1= Gst.ElementFactory.make("nvinfer", "secondary1-inference") # secondary network

nvvidconv = Gst.ElementFactory.make("nvvideoconvert", "convertor") # converter (the results have to be drawn onto the frame)

nvosd = Gst.ElementFactory.make("nvdsosd"," onscreendisplay") # on-screen display element

+ if Detect_Mode==1:

+ source_file.set_property('location', args[2]) # local video file source

+ if Detect_Mode==2:

+ source_usb.set_property('device', args[2]) # live USB video source

+ caps_v4l2src.set_property('caps', Gst.Caps.from_string("video/x-raw, framerate=30/1"))

+ caps_vidconvsrc.set_property('caps', Gst.Caps.from_string("video/x-raw(memory:NVMM)"))

- source.set_property('location', "/opt/nvidia/deepstream/deepstream-5.1/samples/streams/sample_720p.h264") # input video source

streammux.set_property('width', 1920) # width of the input source

- streammux.set_property("height", 1080) # height of the input source

- streammux.set_property("batch-size", 1)

+ streammux.set_property('height', 1080) # height of the input source

+ streammux.set_property('batch-size', 1)

streammux.set_property("batched-push-timeout", 4000000)

pgie.set_property('config-file-path',"dstest7_pgie_config.txt")

sgie1.set_property('config-file-path',"dstest7_sgie1_config.txt")

#pgie.set_property('config-file-path', "dstest3_pgie_config.txt")

+ sink_real.set_property('sync', False) # whether live video is rendered in sync with the clock

config = configparser.ConfigParser()

config.read('dstest7_tracker_config.txt')

@@ -195,12 +220,21 @@ def main():

tracker.set_property('enable_batch_process', tracker_enable_batch_process)

print("Adding elements to Pipeline \n")

- pipeline.add(source )

- pipeline.add(h264parser)

- pipeline.add(decoder)

+ if Detect_Mode==1:

+ pipeline.add(source_file )

+ pipeline.add(h264parser)

+ pipeline.add(decoder)

+ if Detect_Mode==2:

+ pipeline.add(source_usb)

+ pipeline.add(caps_v4l2src)

+ pipeline.add(vidconvsrc)

+ pipeline.add(nvvidconvsrc)

+ pipeline.add(caps_vidconvsrc)

pipeline.add(streammux)

pipeline.add(pgie)

+

pipeline.add(tracker)

+

pipeline.add(sgie1)

pipeline.add(nvvidconv)

pipeline.add(nvosd)

@@ -208,12 +242,27 @@ def main():

pipeline.add(transform)

pipeline.add(sink_real)

- source.link(h264parser)

- h264parser.link(decoder)

- sinkpad = streammux.get_request_pad("sink_0")

- srcpad = decoder.get_static_pad("src")

- srcpad.link(sinkpad)

+ if Detect_Mode==1:

+ source_file.link(h264parser)

+ h264parser.link(decoder)

+ sinkpad_file = streammux.get_request_pad("sink_0")

+ srcpad_file = decoder.get_static_pad("src")

+ srcpad_file.link(sinkpad_file)

+ if Detect_Mode==2:

+ source_usb.link(caps_v4l2src)

+ caps_v4l2src.link(vidconvsrc)

+ vidconvsrc.link(nvvidconvsrc)

+ nvvidconvsrc.link(caps_vidconvsrc)

+ sinkpad_usb = streammux.get_request_pad("sink_0")

+ if not sinkpad_usb:

+ sys.stderr.write(" Unable to get the sink pad of streammux \n")

+ srcpad_usb = caps_vidconvsrc.get_static_pad("src")

+ if not srcpad_usb:

+ sys.stderr.write(" Unable to get source pad of caps_vidconvsrc \n")

+ srcpad_usb.link(sinkpad_usb)

streammux.link(pgie)

+

+

pgie.link(tracker)

tracker.link(sgie1)

sgie1.link(nvvidconv)

@@ -223,6 +272,7 @@ def main():

transform.link(sink_real)

else:

nvosd.link(sink_real)

+

loop = GObject.MainLoop()

bus = pipeline.get_bus()

bus.add_signal_watch()

@@ -245,4 +295,8 @@ def main():

pipeline.set_state(Gst.State.NULL)

if __name__ == "__main__":

- sys.exit(main())

\ No newline at end of file

+ sys.exit(main(sys.argv))

+

+

+

+
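The new `main(args)` picks the input mode from the first command-line argument ("1" for a video file, "2" for a live USB camera). A hedged sketch of the same selection, written as a small helper that rejects an unknown mode up front instead of leaving the mode unset, could look like the following. `parse_source`, `FILE_MODE` and `USB_MODE` are hypothetical names and are not part of the patch.

```python
FILE_MODE = 1  # "1": read from a video file
USB_MODE = 2   # "2": read from a live USB camera


def parse_source(args):
    """Return (mode, source) from an argv-style list, or raise ValueError."""
    if len(args) != 3:
        raise ValueError("usage: <script> <1|2> <video file or camera device>")
    modes = {"1": FILE_MODE, "2": USB_MODE}
    if args[1] not in modes:
        raise ValueError(f"unknown mode {args[1]!r}; expected '1' or '2'")
    return modes[args[1]], args[2]
```

A caller would pass `sys.argv`, for example `mode, source = parse_source(sys.argv)`, and use the returned `mode` directly, which avoids relying on a module-level variable that is reassigned inside a function.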

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_pgie_config.txt b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_pgie_config.txt

old mode 100644

new mode 100755

index 99f24b4..85f2198

--- a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_pgie_config.txt

+++ b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_pgie_config.txt

@@ -60,19 +60,16 @@

[property]

gpu-id=0

net-scale-factor=0.0039215697906911373

-

model-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel

proto-file=../../../../samples/models/Primary_Detector/resnet10.prototxt

-model-engine-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_fp16.engine

+model-engine-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_int8.engine

labelfile-path=../../../../samples/models/Primary_Detector/labels.txt

-

+#int8-calib-file=../../../../samples/models/Primary_Detector/cal_trt.bin

force-implicit-batch-dim=1

batch-size=1

-network-mode=2

-process-mode=1

-model-color-format=0

+network-mode=1

num-detected-classes=4

-interval=5

+interval=1

gie-unique-id=1

output-blob-names=conv2d_bbox;conv2d_cov/Sigmoid

@@ -80,6 +77,7 @@ output-blob-names=conv2d_bbox;conv2d_cov/Sigmoid

#scaling-compute-hw=0

[class-attrs-all]

-pre-cluster-threshold=0.2

+pre-cluster-threshold=0.4

eps=0.2

group-threshold=1

+
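This hunk switches the engine file from `..._fp16.engine` to `..._int8.engine` and `network-mode` from 2 to 1 together. In Gst-nvinfer configuration files, `network-mode` 0 means FP32, 1 means INT8 and 2 means FP16. A small sketch of a consistency check between the two settings follows; it assumes the engine file keeps a `_<precision>.engine` suffix as in this config, and `engine_matches_mode` is a hypothetical helper rather than anything provided by DeepStream.

```python
import configparser

PRECISION_BY_MODE = {0: "fp32", 1: "int8", 2: "fp16"}


def engine_matches_mode(config_text):
    """True if the engine file suffix agrees with network-mode."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    prop = parser["property"]
    precision = PRECISION_BY_MODE[prop.getint("network-mode")]
    engine = prop.get("model-engine-file", fallback="")
    # Assumption: the file name ends in _fp32.engine, _int8.engine or _fp16.engine.
    return engine.endswith(f"_{precision}.engine")


SAMPLE = """[property]
network-mode=1
model-engine-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_int8.engine
"""

if __name__ == "__main__":
    print(engine_matches_mode(SAMPLE))
```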

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_sgie1_config.txt b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_sgie1_config.txt

old mode 100644

new mode 100755

index 70c65b7..8c08ce7

--- a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_sgie1_config.txt

+++ b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_sgie1_config.txt

@@ -62,34 +62,24 @@ gpu-id=0

net-scale-factor=1

model-file=../../../../samples/models/Secondary_CarColor/resnet18.caffemodel

proto-file=../../../../samples/models/Secondary_CarColor/resnet18.prototxt

-model-engine-file=../../../../samples/models/Secondary_CarColor/resnet18.caffemodel_b16_gpu0_fp32.engine

+model-engine-file=../../../../samples/models/Secondary_CarColor/resnet18.caffemodel_b16_gpu0_int8.engine

mean-file=../../../../samples/models/Secondary_CarColor/mean.ppm

labelfile-path=../../../../samples/models/Secondary_CarColor/labels.txt

-int8-calib-file=../../../../samples/models/Secondary_CarColor/cal_trt.bin

-

+#int8-calib-file=../../../../samples/models/Secondary_CarColor/cal_trt.bin

force-implicit-batch-dim=1

batch-size=16

# 0=FP32 and 1=INT8 mode

-network-mode=0

+network-mode=1

input-object-min-width=64

input-object-min-height=64

process-mode=2

model-color-format=1

gpu-id=0

-

-# ID of this model

gie-unique-id=2

-

-# which inference model's output this model consumes

operate-on-gie-id=1

-

-# class ID to operate on (PGIE_CLASS_ID_VEHICLE = 0)

operate-on-class-ids=0

is-classifier=1

-

-# output layer

output-blob-names=predictions/Softmax

-

classifier-async-mode=1

classifier-threshold=0.51

process-mode=2

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_tracker_config.txt b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_tracker_config.txt

old mode 100644

new mode 100755

index 502c208..599cca5

--- a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_tracker_config.txt

+++ b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/dstest7_tracker_config.txt

@@ -28,11 +28,13 @@

# ll-config-file: required for NvDCF, optional for KLT and IOU

#

[tracker]

-tracker-width=640

-tracker-height=384

+#tracker-width=640

+#tracker-height=384

+tracker-width=960

+tracker-height=544

gpu-id=0

-ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_mot_klt.so

-#ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_nvdcf.so

+#ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_mot_klt.so

+ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_nvdcf.so

ll-config-file=tracker_config.yml

#enable-past-frame=1

enable-batch-process=1

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/internal_memory.txt b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/internal_memory.txt

old mode 100644

new mode 100755

index 1c1cdaa..b99a7ea

--- a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/internal_memory.txt

+++ b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/internal_memory.txt

@@ -1 +1 @@

-137-- [0, 407, 570, 159, 149, 240, 2, 475, 54, 135, 320, 273, 0, 483, 1278, 102, 33, 293, 0, 475, 771, 54, 30, 296, 0, 497, 1725, 171, 50, 297], 483, 1293, 105, 33, 293, 0, 497, 1749, 93, 59, 294, 2, 568, 30, 129, 213, 295]3], 475, 447, 21, 53, 280, 2, 475, 468, 24, 56, 281]8, 0, 483, 1221, 132, 36, 229]8, 783, 57, 47, 224, 0, 489, 1326, 144, 39, 225] 483, 1236, 87, 30, 219]215, 2, 452, 69, 123, 337, 216]9, 2, 480, 426, 24, 70, 190] 3],[ 381 407 423 457 0.25845 1],[ 241 319 276 401 0.27718 39]1111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111
11111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111
111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111

+2---- [], 642, 577, 513, 212, 446] 0, 627, 203, 717, 371, 430]], 619, 55, 271, 150, 196]2, 0, 487, 1586, 175, 63, 271]481, 1072, 65, 24, 265]] 0, 482, 1101, 72, 26, 265]63]476, 443, 29, 77, 169]2, 475, 401, 29, 77, 187]]187], 482, 1231, 64, 28, 232]9]9] 2, 484, 87, 128, 369, 178]2, 29, 79, 11209024405222981859]]69, 0, 484, 683, 41, 36, 11209024405222981867]] 475, 355, 40, 124, 11209024405222981801]37], 2, 487, 410, 65, 161, 11209024405222981809]]0, 472, 1107, 36, 22, 2633, 0, 472, 528, 27, 42, 2634, 0, 478, 777, 57, 42, 2635, 0, 480, 774, 57, 39, 2636]31]621, 0, 503, 1578, 147, 36, 2622, 0, 497, 1605, 150, 47, 2623, 0, 497, 1569, 150, 42, 2624]2, 2, 407, 54, 135, 390, 2557] 0, 497, 1629, 156, 47, 2616]00, 1725, 171, 50, 2589, 0, 497, 1743, 177, 53, 2590, 0, 497, 1674, 174, 53, 2591]90, 36, 2414]506, 1593, 273, 104, 2285, 0, 500, 1581, 276, 104, 2286, 0, 503, 1593, 264, 98, 2287], 75, 923, 2, 464, 411, 27, 75, 2018], 1239, 87, 30, 2122, 0, 486, 1335, 147, 45, 2123, 0, 489, 1335, 150, 36, 2124, 0, 483, 1341, 150, 45, 2125, 0, 492, 1341, 141, 39, 2126], 2, 447, 66, 123, 348, 1929]1, 156, 47, 2093, 0, 483, 657, 99, 75, 2094, 0, 492, 1416, 153, 33, 2095, 0, 489, 1401, 153, 42, 2096, 0, 483, 1407, 153, 47, 2097, 0, 483, 1317, 87, 28, 2098]16, 2, 475, 411, 24, 70, 1840]6, 2012, 0, 480, 1275, 96, 36, 2013, 0, 480, 1272, 90, 36, 2014] 2, 362, 3, 198, 660, 53, 2, 362, 3, 195, 649, 54, 2, 351, 3, 192, 652, 55, 2, 368, 6, 189, 660, 56, 2, 447, 291, 150, 413, 57, 2, 461, 306, 141, 396, 58, 2, 444, 297, 153, 416, 59, 2, 354, 3, 195, 652, 60, 2, 368, 3, 198, 644, 61, 2, 360, 3, 201, 652, 62, 2, 385, 12, 192, 627, 63, 2, 511, 315, 132, 348, 64, 2, 511, 324, 123, 345, 65, 2, 390, 0, 207, 624, 66, 2, 407, 3, 201, 607, 67, 2, 405, 3, 201, 613, 68, 2, 416, 15, 189, 585, 69, 2, 452, 0, 192, 556, 70, 2, 500, 6, 186, 517, 71, 2, 495, 6, 186, 520, 72, 2, 461, 12, 183, 545, 73, 2, 489, 21, 189, 531, 75] 480, 12, 189, 531, 
75]11111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111
11111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111

diff --git a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/tracker_config.yml b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/tracker_config.yml

old mode 100644

new mode 100755

index 6af8f06..f99f0af

--- a/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/tracker_config.yml

+++ b/resnet10-ros-deepstream/deepstream_python_apps/apps/deepstream-test7/tracker_config.yml

@@ -23,7 +23,7 @@

NvDCF:

# [General]

- useUniqueID: 1 # Use 64-bit long Unique ID when assigning tracker ID. Default is [true]

+ useUniqueID: 0 # Use 64-bit long Unique ID when assigning tracker ID. Default is [true]

maxTargetsPerStream: 99 # Max number of targets to track per stream. Recommended to set >10. Note: this value should account for the targets being tracked in shadow mode as well. Max value depends on the GPU memory capacity

# [Feature Extraction]

@@ -35,7 +35,7 @@ NvDCF:

filterLr: 0.15 # learning rate for DCF filter in exponential moving average. Valid Range: [0.0, 1.0]

filterChannelWeightsLr: 0.22 # learning rate for the channel weights among feature channels. Valid Range: [0.0, 1.0]

gaussianSigma: 0.75 # Standard deviation for Gaussian for desired response when creating DCF filter [pixels]

- featureImgSizeLevel: 3 # Size of a feature image. Valid range: {1, 2, 3, 4, 5}, from the smallest to the largest

+ featureImgSizeLevel: 5 # Size of a feature image. Valid range: {1, 2, 3, 4, 5}, from the smallest to the largest

SearchRegionPaddingScale: 1 # Search region size. Determines how large the search region should be scaled from the target bbox. Valid range: {1, 2, 3}, from the smallest to the largest

# [MOT] [False Alarm Handling]

diff --git a/yolov5-deepstream-cpp/README.md b/yolov5-deepstream-cpp/README.md

index 5b529b9..0f52e28 100644

--- a/yolov5-deepstream-cpp/README.md

+++ b/yolov5-deepstream-cpp/README.md

@@ -4,12 +4,12 @@

- NVIDIA TX2 on-board computer

- Mouse and keyboard (wired connection recommended)

## Software environment

-- Jetpack 4.5 (ubuntu 18.04)

+- Jetpack 4.5.1 (ubuntu 18.04)

- TensorRT 7.1

- CUDA 10.2

- cuDNN 8.0

- OpenCV 4.1.1

-- deepstream 5.0

+- deepstream 5.1

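## I. Install ROS

**Note**: (the following steps are best done through a proxy or after switching to a domestic mirror; otherwise downloads will be very slow)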

@@ -31,8 +31,8 @@

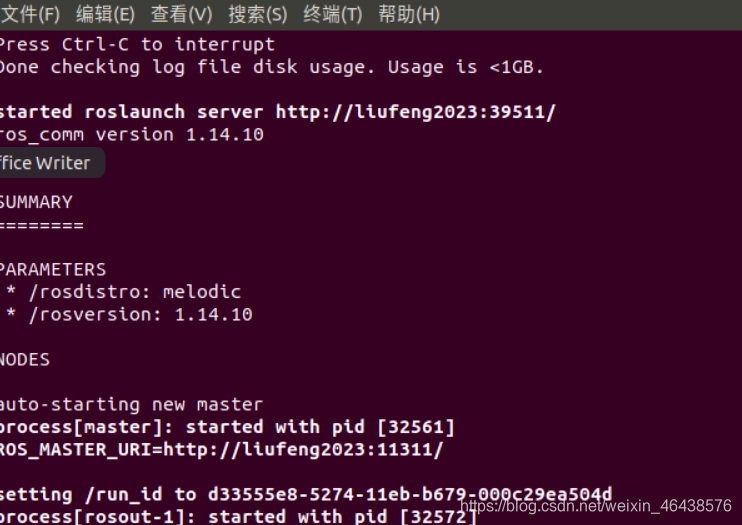

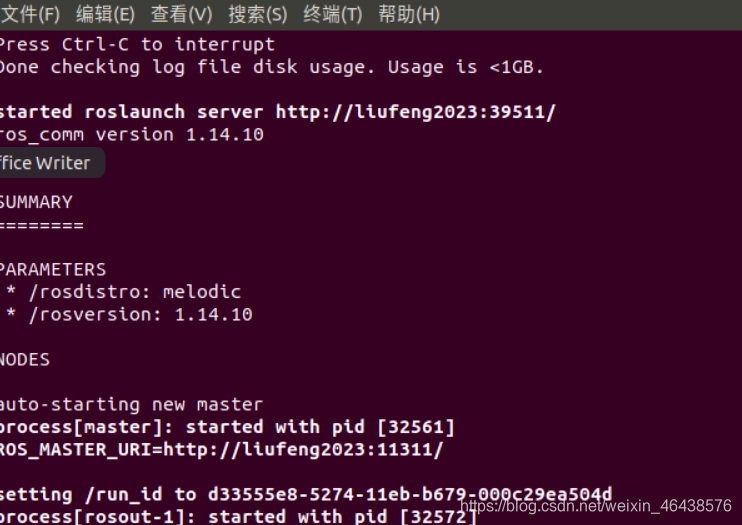

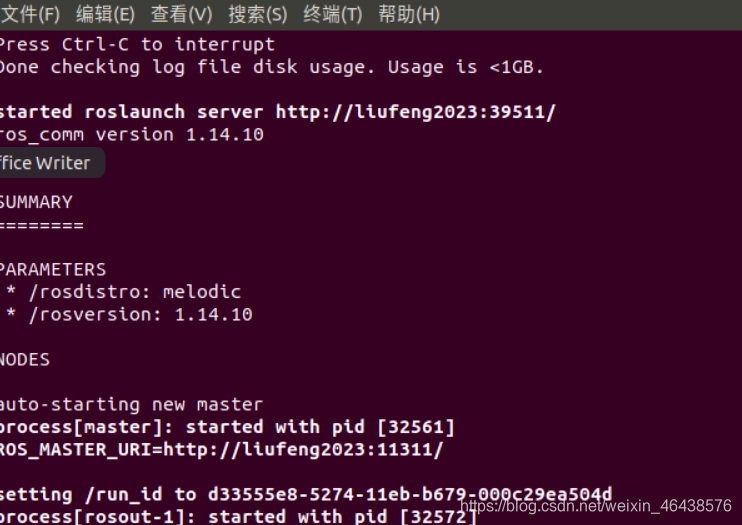

- 以上步骤执行完成后,可尝试在终端运行`roscore`命令,若出现下图所示,说明ros安装正常:

***

-## 二、安装DeepStream on TX2(Jetpack 4.5)

-(**备注**):若使用SDKManager软件对TX2进行刷机,且刷入系统时选择了DeepStream 5.0选项,便会自动安装 DeepStream,无需进行以下手动安装。

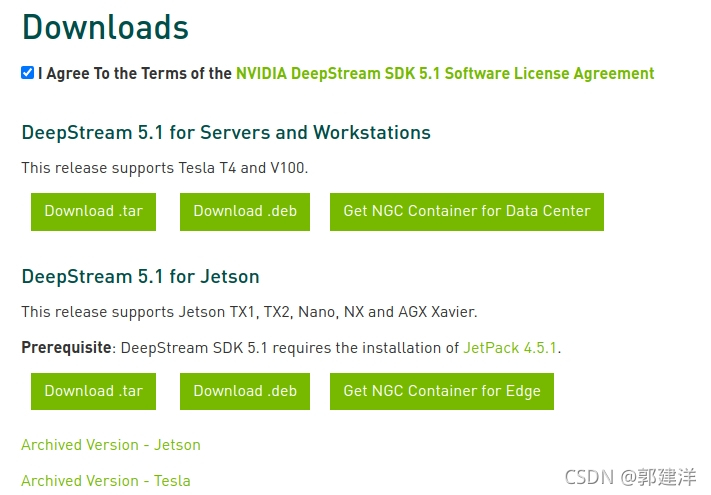

+## 二、安装DeepStream on TX2(Jetpack 4.5.1)

+(**备注**):若使用SDKManager软件对TX2进行刷机,且刷入系统时选择了DeepStream 5.1选项,便会自动安装 DeepStream,无需进行以下手动安装。

### 1.安装依赖

执行下面命令来安装需要的软件包:

@@ -48,12 +48,12 @@

libjansson4=2.11-1

### 2.安装 DeepStream SDK

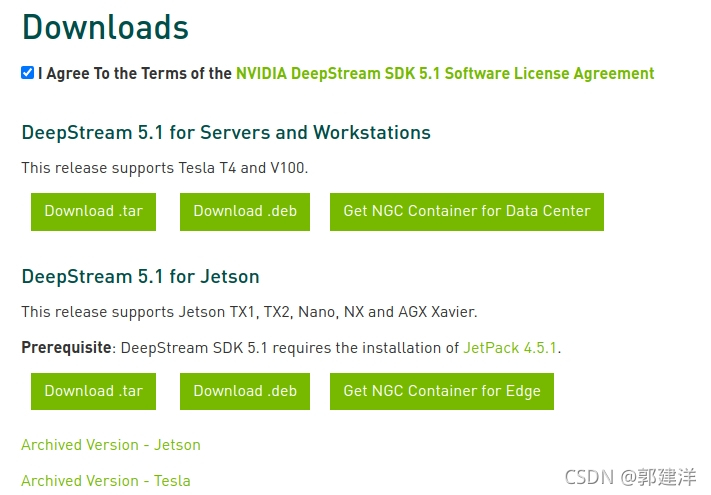

- (1)进入[官方DeepStream SDK](https://developer.nvidia.com/embedded/deepstream-on-jetson-downloads-archived)选择`DeepStream 5.0 for Jetson`并下载(Jetpack 4.5 向下兼容)

+ (1)进入[官方DeepStream SDK](https://developer.nvidia.com/embedded/deepstream-on-jetson-downloads-archived)选择`DeepStream 5.1 for Jetson`并下载(Jetpack 4.5.1 向下兼容)

- (2)下载后得到压缩文件`deepstream_sdk_5.0_jetson.tbz2`,输入以下命令以提取并安装DeepStream SDK:

+ (2)下载后得到压缩文件`deepstream_sdk_5.1_jetson.tbz2`,输入以下命令以提取并安装DeepStream SDK:

- sudo tar -xvf deepstream_sdk_5.0_jetson.tbz2 -C /

- cd /opt/nvidia/deepstream/deepstream-5.0

+ sudo tar -xvf deepstream_sdk_5.1_jetson.tbz2 -C /

+ cd /opt/nvidia/deepstream/deepstream-5.1

sudo ./install.sh

sudo ldconfig

@@ -61,7 +61,7 @@

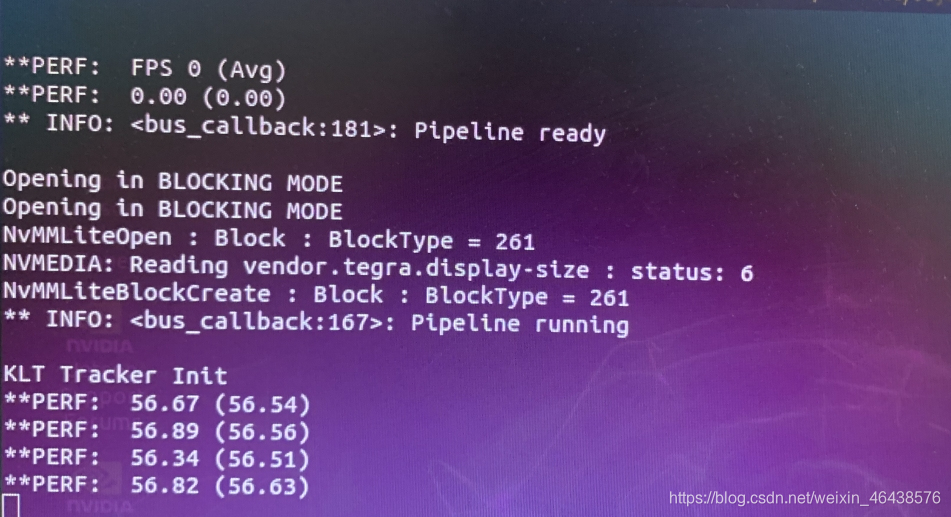

(3) Test DeepStream

- Run the following command:

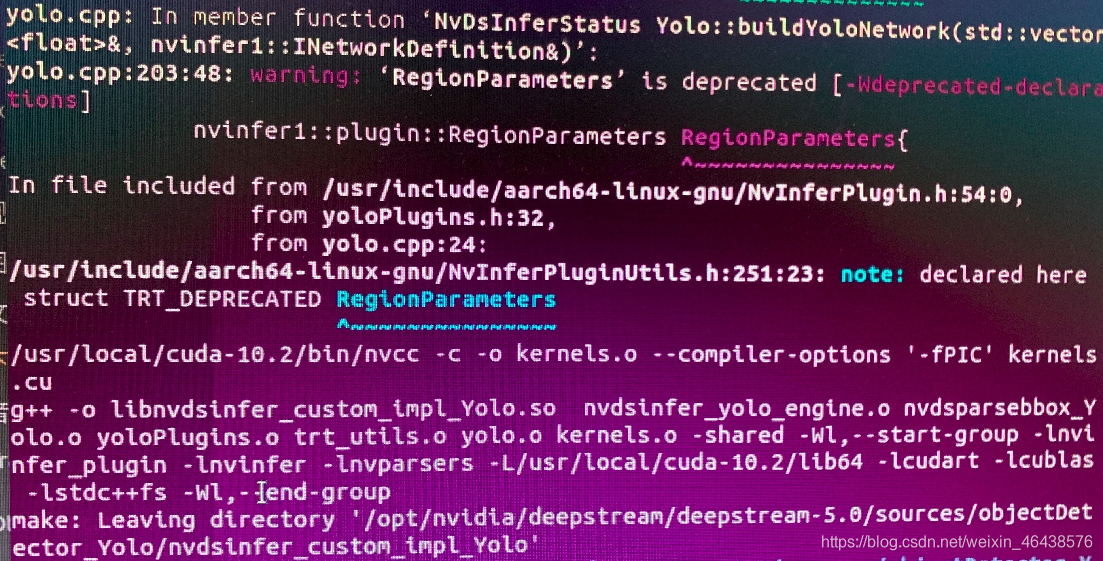

-> cd /opt/nvidia/deepstream/deepstream-5.0/sources/objectDetector_Yolo

+> cd /opt/nvidia/deepstream/deepstream-5.1/sources/objectDetector_Yolo

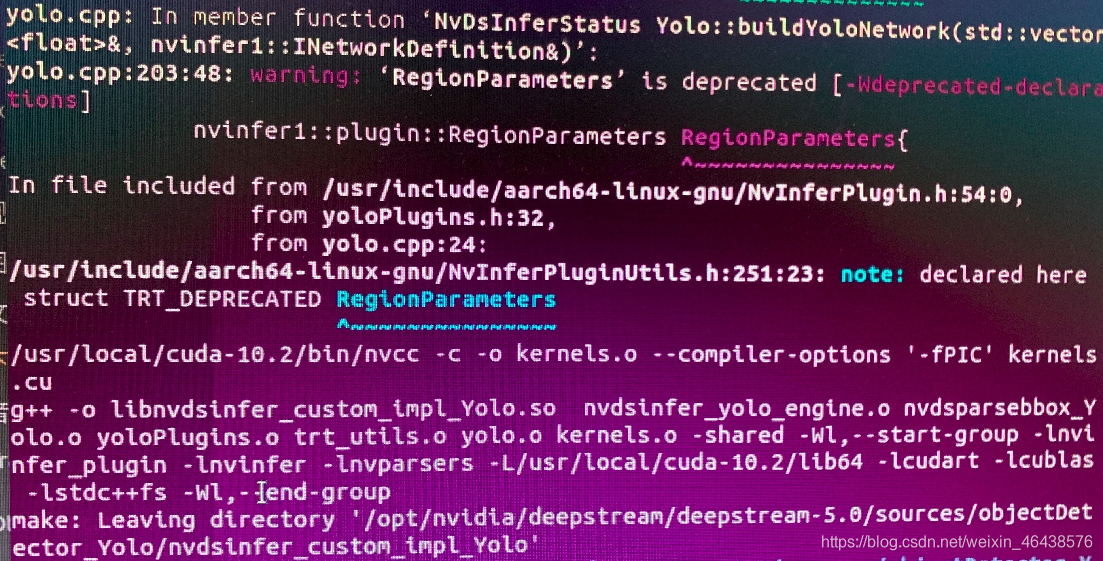

- Run the build command

>`sudo CUDA_VER=10.2 make -C nvdsinfer_custom_impl_Yolo`

If the result matches the figure below, the build succeeded:

@@ -82,20 +82,20 @@

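1. **Clone the `cv-detect-ros` project from GitHub** (running git clone through a proxy is recommended)

> First press `ctrl + alt + t` to open a terminal (by default the clone lands in the home directory)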

-> git clone https://github.com/guojianyang/cv-detect-ros.git

+> git clone -b v3.0 https://github.com/guojianyang/cv-detect-ros.git

2. **First grant permissions on the folder we will be working in**

-> sudo chmod -R 777 /opt/nvidia/deepstream/deepstream-5.0/sources/

- 3. **再拷贝cv-detect-ros/yolov5-deepstream-cpp/yolov5-ros文件夹到opt/nvidia/deepstream/deepstream-5.0/sources/**

->sudo cp ~/cv-detect-ros/yolov5-deepstream-cpp/yolov5-ros /opt/nvidia/deepstream/deepstream-5.0/sources/

- 4. **然后进入拷贝的目标文件夹 /opt/nvidia/deepstream/deepstream-5.0/sources/**

+> sudo chmod -R 777 /opt/nvidia/deepstream/deepstream-5.1/sources/

+ 3. **再拷贝cv-detect-ros/yolov5-deepstream-cpp/yolov5-io-cpp文件夹到opt/nvidia/deepstream/deepstream-5.1/sources/**

+>sudo cp ~/cv-detect-robot/yolov5-deepstream-cpp/yolov5-io-cpp /opt/nvidia/deepstream/deepstream-5.1/sources/

+ 4. **然后进入拷贝的目标文件夹 /opt/nvidia/deepstream/deepstream-5.1/sources/**

> cd /opt/nvidia/deepstream/deepstream-5.0/sources

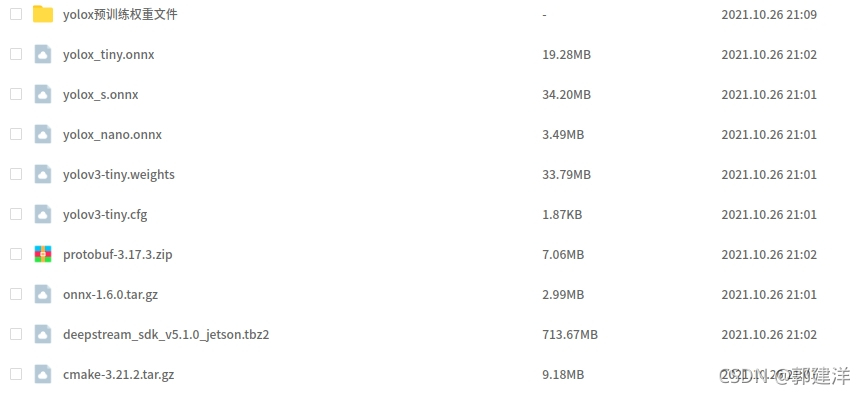

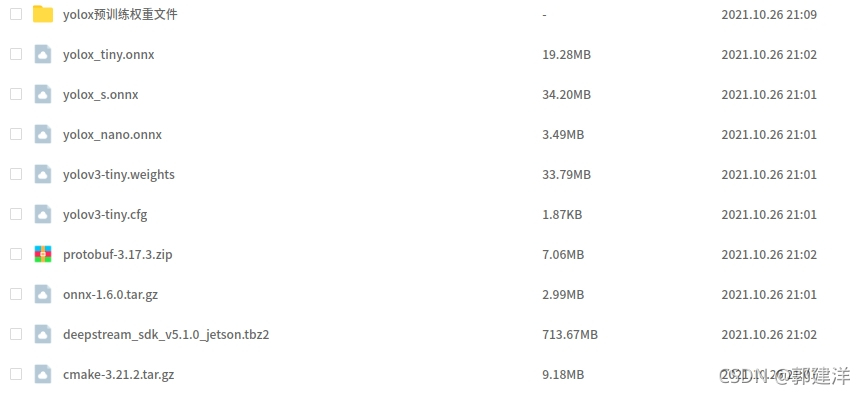

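-> This folder contains the yolov5-ros directory, but the `video` folder shown in the figure below is missing from it: `video` is too large for GitHub's upload size limit, so download the video files from the following Baidu Netdisk link:

+> This folder contains the yolov5-io-cpp directory, but the `video` folder shown in the figure below is missing from it: `video` is too large for GitHub's upload size limit, so download the video files from the following Baidu Netdisk link:

> Link: https://pan.baidu.com/s/1V_AftufqGdym4EEKJ0RnpQ Password: fr8u

- 5. **The contents of the yolov5-ros folder are shown in the figure below:**

+ 5. **The contents of the yolov5-io-cpp folder are shown in the figure below:**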

@@ -123,25 +123,21 @@

>source1_usb_dec_infer_yolov5.txt--------------------starts real-time detection with the USB camera

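- 6. **Download the “number_v30.engine” and “yolov5s.engine” engines via the download_engine.txt file**

+ 6. **Download the engine files**

> Because of GitHub's upload size limit

>

-> `Download the engine folder engine_file (containing “number_v30.engine” and “yolov5s.engine”) from the following Baidu Netdisk link:

->Link: https://pan.baidu.com/s/1xzR8UdZWM2dk3iqGWDG46Q Password: 4e4d`

->

->`or

->copy the engine files “number_v30.engine” and “yolov5s.engine” from the yolov5-ros-deepstream/yolo5-ros folder into this directory`

+> Download the engine folder Jetson_engine (containing Nano_engine and NX_engine) from the following Baidu Netdisk link:

-- **Note**: The original model file number_v30.pt used to generate number_v30.engine is available at the link below. Engine files may not work on a different hardware platform, so if the engine file bundled with the project fails at run time, generate a new number_v30.engine from number_v30.pt.

-Link: https://pan.baidu.com/s/1DlCddhAIzpLGPwzV_c8_-w Password: pk1b

- 7. **Build the yolov5-ros-deepstream/yolov5-ros source**

- > cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-ros

+- **Note**: The original pt or wts model files used to generate the engine files are available at the link below. Engine files may not work on a different hardware platform, so if the engine files downloaded above fail at run time, generate new engine files from the pt and wts files.

+Link: https://pan.baidu.com/s/11AadDRDod8zlmlye5w4Msg?pwd=6a76 Password: 6a76

+ 7. **Build the yolov5-deepstream-cpp/yolov5-io-cpp source**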

+ > cd /opt/nvidia/deepstream/deepstream-5.1/sources/yolov5-io-cpp

> CUDA_VER=10.2 make -C nvdsinfer_custom_impl_Yolo

## 四、运行测试

-### 1.运行number_v30.engine引擎测试视频文件夹video内的视频文件内的视频

-> cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-ros

+### 1.运行yolov5s.engine引擎测试视频文件夹video内的视频文件内的视频

+> cd /opt/nvidia/deepstream/deepstream-5./sources/yolov5-io-cpp/

> deepstream-app -c deepstream_app_number_sv30.txt

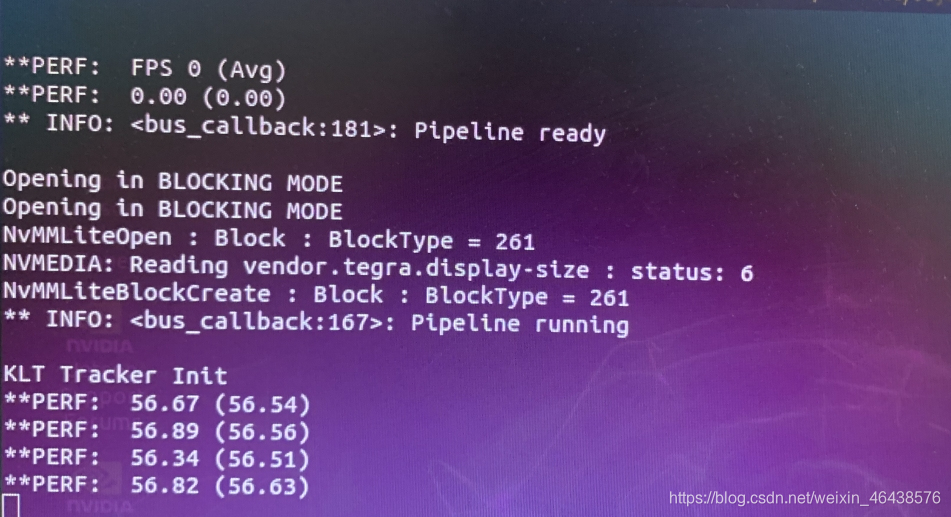

- 正常运行`number_v30.engine`引擎后,会出现实时检测数字的视频流,在命令框里可看到运行帧率(FPS)

@@ -154,10 +150,11 @@

### 2.运行yolov5s.engine引擎测试视频文件夹video内的视频文件内的视频

-> cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-ros

+> cd /opt/nvidia/deepstream/deepstream-5.1/sources/yolov5-io-cpp

> deepstream-app -c deepstream_app_config.txt

### 3. YOLOv5 USB camera test command

> deepstream-app -c source1_usb_dec_infer_yolov5.txt

### 4. YOLOv5 CSI camera test command

> deepstream-app -c source1_csi_dec_infer_yolov5.txt

+

diff --git a/yolov5-deepstream-cpp/yolov5-ros/config_infer_number_sv30.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/config_infer_number_sv30.txt

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/config_infer_number_sv30.txt

rename to yolov5-deepstream-cpp/yolov5-io-cpp/config_infer_number_sv30.txt

diff --git a/yolov5-deepstream-python/yolov5-ros/config_infer_primary.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/config_infer_primary.txt

similarity index 76%

rename from yolov5-deepstream-python/yolov5-ros/config_infer_primary.txt

rename to yolov5-deepstream-cpp/yolov5-io-cpp/config_infer_primary.txt

index ee008f0..d94cecf 100755

--- a/yolov5-deepstream-python/yolov5-ros/config_infer_primary.txt

+++ b/yolov5-deepstream-cpp/yolov5-io-cpp/config_infer_primary.txt

@@ -4,15 +4,17 @@ net-scale-factor=0.0039215697906911373

model-color-format=0

model-engine-file=yolov5s.engine

labelfile-path=labels.txt

+process-mode=1

+network-mode=0

num-detected-classes=80

-interval=0

gie-unique-id=1

-process-mode=1

network-type=0

+output-blob-names=prob

cluster-mode=4

-maintain-aspect-ratio=0

+maintain-aspect-ratio=1

parse-bbox-func-name=NvDsInferParseCustomYoloV5

custom-lib-path=nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so

[class-attrs-all]

-pre-cluster-threshold=0.25

+nms-iou-threshold=0.5

+pre-cluster-threshold=0.4
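As a rough illustration of what the two `[class-attrs-all]` values control, the sketch below applies a confidence cutoff and then greedy IoU-based non-maximum suppression in plain Python. It is a generic textbook version, not the code in `nvdsparsebbox_Yolo.cpp` or inside DeepStream; the function names, the `(x1, y1, x2, y2)` box layout, and the sample boxes are assumptions made for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(dets, conf_thresh=0.4, iou_thresh=0.5):
    """dets is a list of (box, confidence) pairs.

    Drops detections below conf_thresh, then keeps a box only if it does not
    overlap an already kept, higher-confidence box by more than iou_thresh.
    The defaults mirror the values in the config above (an assumption about
    how one might reuse them, not a statement about DeepStream internals).
    """
    candidates = sorted(
        (d for d in dets if d[1] >= conf_thresh),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, conf in candidates:
        if all(iou(box, k[0]) <= iou_thresh for k in kept):
            kept.append((box, conf))
    return kept


# Hypothetical sample: two overlapping boxes, one separate box, one weak box.
SAMPLE_DETS = [
    ((0, 0, 10, 10), 0.9),
    ((1, 1, 11, 11), 0.8),
    ((20, 20, 30, 30), 0.5),
    ((40, 40, 50, 50), 0.3),
]

if __name__ == "__main__":
    for box, conf in filter_and_nms(SAMPLE_DETS):
        print(box, conf)
```

Raising the confidence cutoff from 0.25 to 0.4, as this hunk does, removes more low-score candidates before any overlap test runs.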

diff --git a/yolov5-deepstream-cpp/c++_yolov5_tensor/yolov5_tensor b/yolov5-deepstream-cpp/yolov5-io-cpp/cpp_io/yolov5_tensor

similarity index 92%

rename from yolov5-deepstream-cpp/c++_yolov5_tensor/yolov5_tensor

rename to yolov5-deepstream-cpp/yolov5-io-cpp/cpp_io/yolov5_tensor

index 4622667..bf9af03 100755

Binary files a/yolov5-deepstream-cpp/c++_yolov5_tensor/yolov5_tensor and b/yolov5-deepstream-cpp/yolov5-io-cpp/cpp_io/yolov5_tensor differ

diff --git a/yolov5-deepstream-cpp/c++_yolov5_tensor/yolov5_tensor.cpp b/yolov5-deepstream-cpp/yolov5-io-cpp/cpp_io/yolov5_tensor.cpp

similarity index 88%

rename from yolov5-deepstream-cpp/c++_yolov5_tensor/yolov5_tensor.cpp

rename to yolov5-deepstream-cpp/yolov5-io-cpp/cpp_io/yolov5_tensor.cpp

index a452621..e5c59ac 100755

--- a/yolov5-deepstream-cpp/c++_yolov5_tensor/yolov5_tensor.cpp

+++ b/yolov5-deepstream-cpp/yolov5-io-cpp/cpp_io/yolov5_tensor.cpp

@@ -18,7 +18,7 @@ int main()

{

while(1)

{

- int fd = open("/opt/nvidia/deepstream/deepstream-5.0/sources/yolov5/nvdsinfer_custom_impl_Yolo/internal_memory.txt",O_RDONLY);

+ int fd = open("/opt/nvidia/deepstream/deepstream-5.1/sources/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/internal_memory.txt",O_RDONLY);

char *addr = (char *)mmap(NULL, 3008, PROT_READ,MAP_SHARED, fd, 0);

if (addr == MAP_FAILED)

{
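For readers consuming the detections from Python rather than C++, the read side shown in this hunk (open `internal_memory.txt`, map 3008 bytes read-only) can be sketched with the standard `mmap` module. This is a minimal sketch under assumptions: `read_shared_text` is a hypothetical helper name, the file is treated as ASCII text, and the demo uses a temporary file instead of the real DeepStream path.

```python
import mmap
import os
import tempfile


def read_shared_text(path, max_len=3008):
    """Map up to max_len bytes of `path` read-only and return them as text.

    3008 matches the length passed to mmap() in yolov5_tensor.cpp. Python
    refuses to map more bytes than the file holds, so the length is clamped
    to the file size.
    """
    size = os.path.getsize(path)
    if size == 0:
        return ""  # an empty file cannot be memory-mapped
    with open(path, "rb") as f:
        # Both context managers release their resource on exit, so calling
        # this function in a loop does not accumulate open descriptors.
        with mmap.mmap(f.fileno(), min(size, max_len), access=mmap.ACCESS_READ) as m:
            return m[:].decode("ascii", errors="replace")


if __name__ == "__main__":
    # Demo on a throwaway file; the content is made up for illustration.
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
        tmp.write("1 2 524 212 82 103 0.775 " + "-" * 40)
        demo_path = tmp.name
    print(read_shared_text(demo_path))
    os.remove(demo_path)
```

To read the real data, pass the `internal_memory.txt` path used in the `open()` call above, while `deepstream-app` is running.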

diff --git a/yolov5-deepstream-python/yolov5-ros/deepstream_app_config.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/deepstream_app_config.txt

similarity index 63%

rename from yolov5-deepstream-python/yolov5-ros/deepstream_app_config.txt

rename to yolov5-deepstream-cpp/yolov5-io-cpp/deepstream_app_config.txt

index fe526fc..a738c6f 100755

--- a/yolov5-deepstream-python/yolov5-ros/deepstream_app_config.txt

+++ b/yolov5-deepstream-cpp/yolov5-io-cpp/deepstream_app_config.txt

@@ -1,6 +1,6 @@

[application]

enable-perf-measurement=1

-perf-measurement-interval-sec=1

+perf-measurement-interval-sec=5

[tiled-display]

enable=1

@@ -14,7 +14,7 @@ nvbuf-memory-type=0

[source0]

enable=1

type=3

-uri=file://video/sample_1080p_h264.mp4

+uri=file://../../samples/streams/sample_1080p_h264.mp4

num-sources=1

gpu-id=0

cudadec-memtype=0

@@ -30,7 +30,7 @@ nvbuf-memory-type=0

[osd]

enable=1

gpu-id=0

-border-width=1

+border-width=4

text-size=15

text-color=1;1;1;1;

text-bg-color=0.3;0.3;0.3;1

@@ -59,5 +59,18 @@ gie-unique-id=1

nvbuf-memory-type=0

config-file=config_infer_primary.txt

+[tracker]

+enable=1

+#tracker-width=640

+#tracker-height=384

+tracker-width=960

+tracker-height=544

+gpu-id=0

+#ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_mot_klt.so

+ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_nvdcf.so

+ll-config-file=tracker_config.yml

+#enable-past-frame=1

+enable-batch-process=1

+

[tests]

file-loop=0

diff --git a/yolov5-deepstream-cpp/yolov5-ros/deepstream_app_number_sv30.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/deepstream_app_number_sv30.txt

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/deepstream_app_number_sv30.txt

rename to yolov5-deepstream-cpp/yolov5-io-cpp/deepstream_app_number_sv30.txt

diff --git a/yolov5-deepstream-cpp/yolov5-ros/labels.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/labels.txt

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/labels.txt

rename to yolov5-deepstream-cpp/yolov5-io-cpp/labels.txt

diff --git a/yolov5-deepstream-cpp/yolov5-ros/number_v30.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/number_v30.txt

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/number_v30.txt

rename to yolov5-deepstream-cpp/yolov5-io-cpp/number_v30.txt

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/Makefile b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/Makefile

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/Makefile

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/Makefile

diff --git a/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/internal_memory.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/internal_memory.txt

new file mode 100755

index 0000000..1218bae

--- /dev/null

+++ b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/internal_memory.txt

@@ -0,0 +1 @@

+2 2 524 212 82 103 0.775 2 339 234 68 39 0.539 2 402 234 31 14 0.439 2 403 233 28 14 0.526 9 588 145 11 19 0.457 9 107 38 25 20 0.409 9 216 163 11 13 0.443 9 91 84 20 28 0.418 9 174 181 11 22 0.448 9 487 161 9 21 0.434 9 487 161 9 21 0.427 2 387 149 30 14 0.513 13 0 231 47 108 0.440 36 26 221 21 32 0.405 24 84 154 23 24 0.456 28 27 221 19 34 0.415 2 567 158 40 17 0.420 24 99 165 28 35 0.695 2 181 258 12 10 0.263 2 226 269 20 17 0.265 9 565 183 27 44 0.266 2 317 267 16 12 0.252 9 566 183 26 43 0.348 24 93 290 28 65 0.575 ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
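The sample content of `internal_memory.txt` above appears to start with an object count, followed by six numbers per object and `-` padding. The parser below is a sketch built on that reading only: the field order (class id, x, y, width, height, confidence) and the meaning of the leading count are inferred from the sample data, not confirmed by the source, and `Detection` and `parse_detections` are hypothetical names.

```python
from typing import List, NamedTuple


class Detection(NamedTuple):
    class_id: int
    x: int
    y: int
    w: int
    h: int
    conf: float


def parse_detections(text: str) -> List[Detection]:
    """Parse '<count> <class x y w h conf>...' and ignore '-' padding.

    Only the first <count> records are returned; anything after them is
    treated as stale data left over from an earlier frame (an assumption).
    """
    # Drop tokens made purely of dashes, but keep values such as "-5".
    tokens = [t for t in text.split() if t.strip("-")]
    if not tokens:
        return []
    fields = tokens[1:]
    count = min(int(tokens[0]), len(fields) // 6)
    detections = []
    for i in range(count):
        c, x, y, w, h, conf = fields[6 * i : 6 * i + 6]
        detections.append(Detection(int(c), int(x), int(y), int(w), int(h), float(conf)))
    return detections


# First records of the file shown above, shortened for the example.
SAMPLE_TEXT = (
    "2 2 524 212 82 103 0.775 2 339 234 68 39 0.539 "
    "2 402 234 31 14 0.439 --------"
)

if __name__ == "__main__":
    for det in parse_detections(SAMPLE_TEXT):
        print(det)
```

With the sample string, the leading `2` limits the result to the first two records even though a third one is present in the buffer.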

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so

similarity index 89%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so

index 67f16b0..6a7fd5c 100755

Binary files a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so and b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so differ

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.cpp b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.cpp

similarity index 97%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.cpp

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.cpp

index b231861..9b6c1fe 100755

--- a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.cpp

+++ b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.cpp

@@ -71,7 +71,7 @@ bool cmp(Detection& a, Detection& b) {

return a.conf > b.conf;

}

-// Non-maximum suppression (NMS) algorithm

+

void nms(std::vector<Detection>& res, float *output, float conf_thresh, float nms_thresh) {

int det_size = sizeof(Detection) / sizeof(float);

std::map<float, std::vector<Detection>> m;

@@ -187,7 +187,7 @@ static bool NvDsInferParseYoloV5(

/*-----------------the code below writes the bounding boxes into the memory-mapped file 'internal_memory.txt'----------------*/

unsigned long int boundingboxes_len = objectlist_len*60 + 10;

- int fd = open("/opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-ros/nvdsinfer_custom_impl_Yolo/internal_memory.txt",O_RDWR|O_CREAT, 00777);

+ int fd = open("/opt/nvidia/deepstream/deepstream-5.1/sources/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/internal_memory.txt",O_RDWR|O_CREAT, 00777);

char* guo =(char*)(mmap(NULL, boundingboxes_len, PROT_READ|PROT_WRITE, MAP_SHARED, fd, 0));

//check whether the mapping succeeded by comparing the return value with 'MAP_FAILED'

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.o b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.o

old mode 100755

new mode 100644

similarity index 84%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.o

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.o

index c461fc1..ea5bd32

Binary files a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.o and b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.o differ

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/utils.h b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/utils.h

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/utils.h

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/utils.h

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/yololayer.cu b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/yololayer.cu

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/yololayer.cu

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/yololayer.cu

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/yololayer.h b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/yololayer.h

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/yololayer.h

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/yololayer.h

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/yololayer.o b/yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/yololayer.o

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/yololayer.o

rename to yolov5-deepstream-cpp/yolov5-io-cpp/nvdsinfer_custom_impl_Yolo/yololayer.o

diff --git a/yolov5-deepstream-cpp/yolov5-ros/source1_csi_dec_infer_yolov5.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/source1_csi_dec_infer_yolov5.txt

similarity index 100%

rename from yolov5-deepstream-cpp/yolov5-ros/source1_csi_dec_infer_yolov5.txt

rename to yolov5-deepstream-cpp/yolov5-io-cpp/source1_csi_dec_infer_yolov5.txt

diff --git a/yolov5-deepstream-cpp/yolov5-io-cpp/source1_usb_dec_infer_yolov5.txt b/yolov5-deepstream-cpp/yolov5-io-cpp/source1_usb_dec_infer_yolov5.txt

new file mode 100755

index 0000000..5c36a61

--- /dev/null

+++ b/yolov5-deepstream-cpp/yolov5-io-cpp/source1_usb_dec_infer_yolov5.txt

@@ -0,0 +1,89 @@

+

+[application]

+enable-perf-measurement=1

+perf-measurement-interval-sec=5

+#gie-kitti-output-dir=streamscl

+

+[tiled-display]

+enable=1

+rows=1

+columns=1

+width=1280

+height=720

+

+[source0]

+enable=1

+#Type - 1=CameraV4L2 2=URI 3=MultiURI

+type=1

+camera-width=640

+camera-height=480

+camera-fps-n=30

+camera-fps-d=1

+camera-v4l2-dev-node=0

+

+[sink0]

+enable=1

+#Type - 1=FakeSink 2=EglSink 3=File 4=RTSPStreaming 5=Overlay

+type=2

+sync=0

+display-id=0

+offset-x=100

+offset-y=120

+width=1280

+height=720

+overlay-id=1

+source-id=0

+

+[osd]

+enable=1

+border-width=4

+text-size=15

+text-color=1;1;1;1;

+text-bg-color=0.3;0.3;0.3;1

+font=Serif

+show-clock=0

+clock-x-offset=800

+clock-y-offset=820

+clock-text-size=12

+clock-color=1;0;0;0

+

+[streammux]

+##Boolean property to inform muxer that sources are live

+live-source=1

+batch-size=1

+##time out in usec, to wait after the first buffer is available

+##to push the batch even if the complete batch is not formed

+batched-push-timeout=40000

+## Set muxer output width and height

+width=1280

+height=720

+## If set to TRUE, system timestamp will be attached as ntp timestamp

+## If set to FALSE, ntp timestamp from rtspsrc, if available, will be attached

+# attach-sys-ts-as-ntp=1

+

+# config-file property is mandatory for any gie section.

+# Other properties are optional and if set will override the properties set in

+# the infer config file.

+[primary-gie]

+enable=1

+gpu-id=0

+gie-unique-id=1

+nvbuf-memory-type=0

+config-file=config_infer_primary.txt

+

+[tracker]

+enable=1

+#tracker-width=640

+#tracker-height=384

+tracker-width=960

+tracker-height=544

+gpu-id=0

+#ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_mot_klt.so

+ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_nvdcf.so

+ll-config-file=tracker_config.yml

+#enable-past-frame=1

+enable-batch-process=1

+

+

+[tests]

+file-loop=0
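The `deepstream-app` config files in this change use an INI-like `key=value` layout, so a quick sanity check of the `[tracker]` section can be sketched with Python's standard `configparser`. This is only a sketch: `tracker_summary` is a hypothetical helper, the sample text is a trimmed copy of the sections above, and `configparser` is not the parser DeepStream itself uses, so unusual files may parse differently.

```python
import configparser

# Trimmed copy of the [primary-gie] and [tracker] sections shown above.
SAMPLE_CONFIG = """
[primary-gie]
enable=1
gpu-id=0
gie-unique-id=1
config-file=config_infer_primary.txt

[tracker]
enable=1
#tracker-width=640
#tracker-height=384
tracker-width=960
tracker-height=544
gpu-id=0
#ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_mot_klt.so
ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_nvdcf.so
ll-config-file=tracker_config.yml
"""


def tracker_summary(config_text):
    """Return the active tracker settings, or None if there is no [tracker]."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    if not parser.has_section("tracker"):
        return None
    section = parser["tracker"]
    return {
        "enabled": section.get("enable", "0") == "1",
        "size": (section.getint("tracker-width"), section.getint("tracker-height")),
        "library": section.get("ll-lib-file"),
        "config": section.get("ll-config-file"),
    }


if __name__ == "__main__":
    print(tracker_summary(SAMPLE_CONFIG))
```

Lines starting with `#` are treated as comments, so the summary reports the NvDCF library and the 960x544 tracker size rather than the commented-out KLT alternative.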

diff --git a/yolov5-deepstream-cpp/yolov5-io-cpp/tracker_config.yml b/yolov5-deepstream-cpp/yolov5-io-cpp/tracker_config.yml

new file mode 100755

index 0000000..f99f0af

--- /dev/null

+++ b/yolov5-deepstream-cpp/yolov5-io-cpp/tracker_config.yml

@@ -0,0 +1,96 @@

+%YAML:1.0

+################################################################################

+# Copyright (c) 2020, NVIDIA CORPORATION. All rights reserved.

+#

+# Permission is hereby granted, free of charge, to any person obtaining a

+# copy of this software and associated documentation files (the "Software"),

+# to deal in the Software without restriction, including without limitation

+# the rights to use, copy, modify, merge, publish, distribute, sublicense,

+# and/or sell copies of the Software, and to permit persons to whom the

+# Software is furnished to do so, subject to the following conditions:

+#

+# The above copyright notice and this permission notice shall be included in

+# all copies or substantial portions of the Software.

+#

+# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL

+# THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

+# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

+# DEALINGS IN THE SOFTWARE.

+################################################################################

+

+NvDCF:

+ # [General]

+ useUniqueID: 0 # Use 64-bit long Unique ID when assigning tracker ID. Default is [true]

+ maxTargetsPerStream: 99 # Max number of targets to track per stream. Recommended to set >10. Note: this value should account for the targets being tracked in shadow mode as well. Max value depends on the GPU memory capacity

+

+ # [Feature Extraction]

+ useColorNames: 1 # Use ColorNames feature

+ useHog: 0 # Use Histogram-of-Oriented-Gradient (HOG) feature

+ useHighPrecisionFeature: 0 # Use high-precision in feature extraction. Default is [true]

+

+ # [DCF]

+ filterLr: 0.15 # learning rate for DCF filter in exponential moving average. Valid Range: [0.0, 1.0]

+ filterChannelWeightsLr: 0.22 # learning rate for the channel weights among feature channels. Valid Range: [0.0, 1.0]

+ gaussianSigma: 0.75 # Standard deviation for Gaussian for desired response when creating DCF filter [pixels]

+ featureImgSizeLevel: 5 # Size of a feature image. Valid range: {1, 2, 3, 4, 5}, from the smallest to the largest

+ SearchRegionPaddingScale: 1 # Search region size. Determines how large the search region should be scaled from the target bbox. Valid range: {1, 2, 3}, from the smallest to the largest

+

+ # [MOT] [False Alarm Handling]

+ maxShadowTrackingAge: 30 # Max length of shadow tracking (the shadow tracking age is incremented when (1) there's detector input yet no match or (2) tracker confidence is lower than minTrackerConfidence). Once reached, the tracker will be terminated.

+ probationAge: 3 # Once the tracker age (incremented at every frame) reaches this, the tracker is considered to be valid

+ earlyTerminationAge: 1 # Early termination age (in terms of shadow tracking age) during the probation period. If reached during the probation period, the tracker will be terminated prematurely.

+

+ # [Tracker Creation Policy] [Target Candidacy]

+ minDetectorConfidence: -1 # If the confidence of a detector bbox is lower than this, then it won't be considered for tracking

+ minTrackerConfidence: 0.7 # If the confidence of an object tracker is lower than this on the fly, then it will be tracked in shadow mode. Valid Range: [0.0, 1.0]

+ minTargetBboxSize: 10 # If the width or height of the bbox size gets smaller than this threshold, the target will be terminated.

+ minDetectorBboxVisibilityTobeTracked: 0.0 # If the detector-provided bbox's visibility (i.e., IOU with image) is lower than this, it won't be considered.

+ minVisibiilty4Tracking: 0.0 # If the visibility of the tracked object (i.e., IOU with image) is lower than this, it will be terminated immediately, assuming it is going out of scene.

+

+ # [Tracker Termination Policy]

+ targetDuplicateRunInterval: 5 # The interval in which the duplicate target detection removal is carried out. A Negative value indicates indefinite interval. Unit: [frames]

+ minIou4TargetDuplicate: 0.9 # If the IOU of two target bboxes are higher than this, the newer target tracker will be terminated.

+

+ # [Data Association] Matching method

+ useGlobalMatching: 0 # If true, enable a global matching algorithm (i.e., Hungarian method). Otherwise, a greedy algorithm will be used.

+

+ # [Data Association] Thresholds in matching scores to be considered as a valid candidate for matching

+ minMatchingScore4Overall: 0.0 # Min total score

+ minMatchingScore4SizeSimilarity: 0.5 # Min bbox size similarity score

+ minMatchingScore4Iou: 0.1 # Min IOU score

+ minMatchingScore4VisualSimilarity: 0.2 # Min visual similarity score

+ minTrackingConfidenceDuringInactive: 1.0 # Min tracking confidence during INACTIVE period. If tracking confidence is higher than this, then tracker will still output results until next detection

+

+ # [Data Association] Weights for each matching score term

+ matchingScoreWeight4VisualSimilarity: 0.8 # Weight for the visual similarity (in terms of correlation response ratio)

+ matchingScoreWeight4SizeSimilarity: 0.0 # Weight for the Size-similarity score

+ matchingScoreWeight4Iou: 0.1 # Weight for the IOU score

+ matchingScoreWeight4Age: 0.1 # Weight for the tracker age

+

+ # [State Estimator]

+ useTrackSmoothing: 1 # Use a state estimator

+ stateEstimatorType: 1 # The type of state estimator among { moving_avg:1, kalman_filter:2 }

+

+ # [State Estimator] [MovingAvgEstimator]

+ trackExponentialSmoothingLr_loc: 0.5 # Learning rate for new location

+ trackExponentialSmoothingLr_scale: 0.3 # Learning rate for new scale

+ trackExponentialSmoothingLr_velocity: 0.05 # Learning rate for new velocity

+

+ # [State Estimator] [Kalman Filter]

+ kfProcessNoiseVar4Loc: 0.1 # Process noise variance for location in Kalman filter

+ kfProcessNoiseVar4Scale: 0.04 # Process noise variance for scale in Kalman filter

+ kfProcessNoiseVar4Vel: 0.04 # Process noise variance for velocity in Kalman filter

+ kfMeasurementNoiseVar4Trk: 9 # Measurement noise variance for tracker's detection in Kalman filter

+ kfMeasurementNoiseVar4Det: 9 # Measurement noise variance for detector's detection in Kalman filter

+

+ # [Past-frame Data]

+ useBufferedOutput: 0 # Enable storing of past-frame data in a buffer and report it back

+

+ # [Instance-awareness]

+ useInstanceAwareness: 0 # Use instance-awareness for multi-object tracking

+ lambda_ia: 2 # Regularization factor for each instance

+ maxInstanceNum_ia: 4 # The number of nearby object instances to use for instance-awareness

+

diff --git a/yolov5-deepstream-cpp/yolov5-ros/download_engine.txt b/yolov5-deepstream-cpp/yolov5-ros/download_engine.txt

deleted file mode 100644

index 54627e6..0000000

--- a/yolov5-deepstream-cpp/yolov5-ros/download_engine.txt

+++ /dev/null

@@ -1,8 +0,0 @@

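-## Because of GitHub's upload size limit

-

-Download the engine folder engine_file (containing “number_v30.engine” and “yolov5s.engine”) from the following Baidu Netdisk link:

-Link: https://pan.baidu.com/s/1xzR8UdZWM2dk3iqGWDG46Q Password: 4e4d

-

-## or

-copy the engine files “number_v30.engine” and “yolov5s.engine” from the yolov5-ros-deepstream/yolo5-ros folder into this directory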

-

diff --git a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/internal_memory.txt b/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/internal_memory.txt

deleted file mode 100755

index ef396fb..0000000

--- a/yolov5-deepstream-cpp/yolov5-ros/nvdsinfer_custom_impl_Yolo/internal_memory.txt

+++ /dev/null

@@ -1 +0,0 @@

-15 0 18 257 48 178 0.738 0 0 327 46 123 0.474 0 128 269 11 42 0.285 2 433 299 173 195 0.885 2 375 274 76 56 0.795 2 229 272 29 32 0.745 2 260 270 22 22 0.731 2 416 274 37 22 0.614 2 355 270 21 16 0.602 2 193 270 27 37 0.519 2 579 293 28 28 0.464 2 324 271 25 12 0.379 2 580 294 27 60 0.325 2 182 272 13 17 0.300 9 564 183 28 44 0.297 2 172 274 19 12 0.281 2 172 275 19 11 0.274 10 437 401 170 206 0.354 2 181 258 12 10 0.263 2 226 269 20 17 0.265 9 565 183 27 44 0.266 2 317 267 16 12 0.252 9 566 183 26 43 0.348 24 93 290 28 65 0.575 ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
---------------------------------------------------------------------------------------------

diff --git a/yolov5-deepstream-python/README.md b/yolov5-deepstream-python/README.md

index e8dad36..6981672 100644

--- a/yolov5-deepstream-python/README.md

+++ b/yolov5-deepstream-python/README.md

@@ -77,25 +77,25 @@

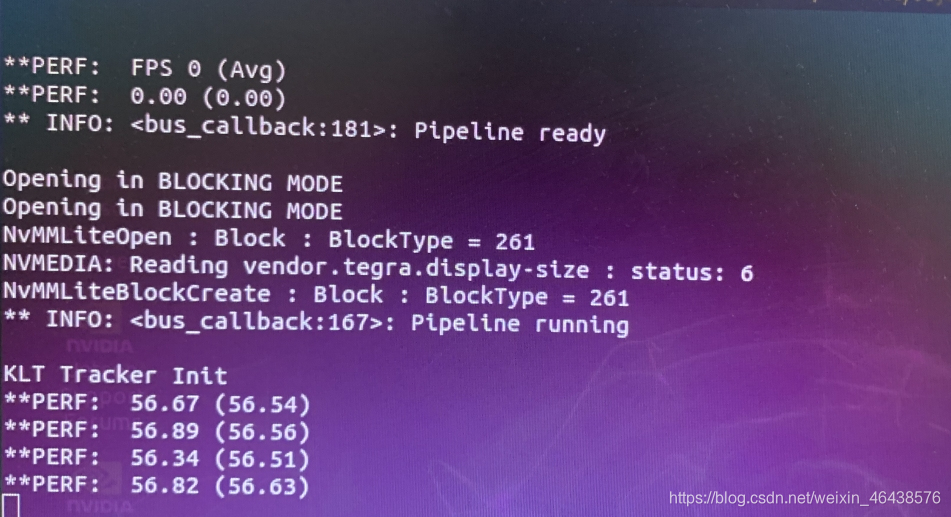

If the engine is generated and video stream detection starts, the DeepStream SDK is installed correctly, as shown in the figure below:

***

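-## 3. Clone the `cv-detect-ros` project from GitHub, then copy the relevant subfolders of the author's `yolov5-deepstream-python` subproject into the corresponding directory and build them

+## 3. Clone the `cv-detect-robot` project from GitHub, then copy the relevant subfolders of the author's `yolov5-deepstream-python` subproject into the corresponding directory and build them

- 1. **Clone the `cv-detect-ros` project from GitHub** (running git clone through a proxy is recommended)

+ 1. **Clone the `cv-detect-robot` project from GitHub** (running git clone through a proxy is recommended)

> First press `ctrl + alt + t` to open a terminal (by default the clone lands in the home directory)

-> git clone https://github.com/guojianyang/cv-detect-ros.git

+> git clone -b v3.0 https://github.com/guojianyang/cv-detect-ros.git

2. **First grant permissions on the folder we will work in**

> sudo chmod -R 777 /opt/nvidia/deepstream/deepstream-5.0/sources/

- 3. **Then copy the cv-detect-ros/yolov5-deepstream-python/yolov5-ros folder to /opt/nvidia/deepstream/deepstream-5.0/sources/**

->sudo cp ~/cv-detect-ros/yolov5-deepstream-python/yolov5-ros /opt/nvidia/deepstream/deepstream-5.0/sources/

+ 3. **Then copy the cv-detect-ros/yolov5-deepstream-python/yolov5-io-python folder to /opt/nvidia/deepstream/deepstream-5.0/sources/**

+>sudo cp -r ~/cv-detect-ros/yolov5-deepstream-python/yolov5-io-python /opt/nvidia/deepstream/deepstream-5.0/sources/

4. **Then enter the destination folder /opt/nvidia/deepstream/deepstream-5.0/sources/**

> cd /opt/nvidia/deepstream/deepstream-5.0/sources

-> This folder contains the yolov5-ros directory, but the `video` folder shown in the figure below is missing from it: `video` is too large for GitHub's upload size limit, so download the video files from the following Baidu Netdisk link:

+> This folder contains the yolov5-io-python directory, but the `video` folder shown in the figure below is missing from it: `video` is too large for GitHub's upload size limit, so download the video files from the following Baidu Netdisk link:

> Link: https://pan.baidu.com/s/1V_AftufqGdym4EEKJ0RnpQ Password: fr8u

- 5. **The contents of the yolov5-ros folder are shown in the figure below:**

+ 5. **The contents of the yolov5-io-python folder are shown in the figure below:**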

@@ -125,21 +125,18 @@

>source1_usb_dec_infer_yolov5.txt--------------------starts real-time detection with the CSI camera

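- 6. **Download the “number_v30.engnine” and “yolov5s.engine” engines via download_engine.txt**

+6. **Download the engine files**

+

> Because of GitHub's upload size limit

>

-> `Download the engine folder engine_file (containing “number_v30.engine” and “yolov5s.engine”) from the following Baidu Netdisk link:

->Link: https://pan.baidu.com/s/1xzR8UdZWM2dk3iqGWDG46Q Password: 4e4d`

->

->`Or

->copy the engine files “number_v30.engine” and “yolov5s.engine” from the yolov5-ros-deepstream/yolo5-ros folder into this directory`

+> `Download the engine folder Jetson_engine (containing Nano_engine and NX_engine) from the following Baidu Netdisk link:

-- **Note**: the source model number_v30.pt used to generate number_v30.engine is available at the link below. An engine file may fail on a hardware platform other than the one it was built on; if the engine file bundled with the project raises errors, generate a new number_v30.engine from number_v30.pt.

-Link: https://pan.baidu.com/s/1DlCddhAIzpLGPwzV_c8_-w Password: pk1b

+- **Note**: the source pt or wts model files used to generate the engine files are available at the link below. An engine file may fail on a hardware platform other than the one it was built on; if the engine files downloaded above raise errors, generate new engine files from the pt and wts files.

+Link: https://pan.baidu.com/s/11AadDRDod8zlmlye5w4Msg?pwd=6a76 Password: 6a76

- 7. **Build the yolov5-deepstream-python/yolov5-ros source**

- > cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-ros

+ 7. **Build the yolov5-deepstream-python/yolov5-io-python source**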

+ > cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-io-python/

> CUDA_VER=10.2 make -C nvdsinfer_custom_impl_Yolo

@@ -147,7 +144,7 @@

## IV. Run the tests

### 1. Run the number_v30.engine engine on the video files in the video folder

-> cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-ros

+> cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-io-python

> deepstream-app -c deepstream_app_number_sv30.txt

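- Once the `number_v30.engine` engine is running normally, a video stream with real-time digit detection appears, and the frame rate (FPS) is shown in the terminal

- Start the client.py script to read and print the object-detection data (running client.py with python2 is recommended)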

@@ -156,7 +153,7 @@

### 2. Run the yolov5s.engine engine on the video files in the video folder

-> cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-ros

+> cd /opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-io-python

> deepstream-app -c deepstream_app_config.txt

### 3. YOLOv5 USB camera test command

> deepstream-app -c source1_usb_dec_infer_yolov5.txt
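
Before running the three test commands above, it can help to confirm that the copied folder actually contains the files they rely on. The following is only a minimal sketch: the helper `missing_files` and the `EXPECTED` list are hypothetical, and the file names are simply the ones mentioned in this README and in `config_infer_primary.txt`.

```python
import os

# File names taken from the README text; the list itself is an assumption
# and may need adjusting to the actual folder contents.
EXPECTED = [
    "deepstream_app_config.txt",
    "deepstream_app_number_sv30.txt",
    "source1_usb_dec_infer_yolov5.txt",
    os.path.join("nvdsinfer_custom_impl_Yolo", "libnvdsinfer_custom_impl_Yolo.so"),
]


def missing_files(root, names=EXPECTED):
    """Return the entries of `names` that do not exist under `root`."""
    return [n for n in names if not os.path.exists(os.path.join(root, n))]


if __name__ == "__main__":
    # Path as written in the README; change it if DeepStream lives elsewhere.
    root = "/opt/nvidia/deepstream/deepstream-5.0/sources/yolov5-io-python"
    for name in missing_files(root):
        print("missing:", name)
```

An empty result only means the files exist; it does not prove that the engine files match the current hardware platform.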

diff --git a/yolov5-deepstream-python/client.py b/yolov5-deepstream-python/yolov5-io-python/client.py

similarity index 74%

rename from yolov5-deepstream-python/client.py

rename to yolov5-deepstream-python/yolov5-io-python/client.py

index e2dfe0e..d176f89 100755

--- a/yolov5-deepstream-python/client.py

+++ b/yolov5-deepstream-python/yolov5-io-python/client.py

@@ -7,11 +7,12 @@

global boundingboxes_list

if __name__ == "__main__":

while (1):

- with open('/opt/nvidia/deepstream/deepstream-5.0/sources/yolov5/nvdsinfer_custom_impl_Yolo/internal_memory.txt', 'r+b') as f:

+ with open('/opt/nvidia/deepstream/deepstream-5.1/sources/yolov5-io-python/nvdsinfer_custom_impl_Yolo/internal_memory.txt', 'r+b') as f:

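# mmap basically takes two arguments, (file descriptor, length); a length of 0 maps the whole file

mm = mmap.mmap(f.fileno(), 0)

mm.seek(0) # seek to the start of the file

- byte_numline = mm[0] # mm[] yields a byte, which must be converted to int

+ # byte_numline = mm[0] # mm[] yields a byte, which must be converted to int

+ byte_numline = int(mm.read(1))

str_numline = str(byte_numline) # convert the value to str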

boundingboxes_txt = str(mm[10:int(str_numline)*60 + 10])

#print("boundingboxes = \n",mm[6:int(str_numline_out)+6])

@@ -23,8 +24,8 @@

box_number = len(boundingboxes_list) // 6

print('box_number = ', box_number)

re_array_boundingbox = numpy.resize(array_boundingbox, (box_number, 6))

- #print('re_array_boundingbox:\n', re_array_boundingbox)

- print(re_array_boundingbox)

+ #print('re_array_boundingbox:\n', re_array_boundingbox)

+ print(re_array_boundingbox)

print("#---------------------------------------------------------------#")
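
The reading pattern in `client.py` can be tried without DeepStream by mapping a small sample file. The sketch below is not the project's parser: it assumes a hypothetical layout (a box count, then six whitespace-separated fields per box, then dash padding) inferred from the sample `internal_memory.txt` line, and the names `parse_boxes` and `read_boxes` are made up for illustration.

```python
import mmap
import os
import tempfile

FIELDS_PER_BOX = 6  # assumed order: class id, x, y, width, height, confidence


def parse_boxes(raw):
    """Parse b'<count> <6 fields per box> ... ----' into a list of rows."""
    tokens = raw.decode("ascii").rstrip("-\n ").split()
    if not tokens:
        return []
    count = int(tokens[0])
    values = [float(t) for t in tokens[1:1 + count * FIELDS_PER_BOX]]
    # Keep only complete rows in case the buffer was truncated.
    return [values[i:i + FIELDS_PER_BOX]
            for i in range(0, len(values) - FIELDS_PER_BOX + 1, FIELDS_PER_BOX)]


def read_boxes(path):
    """Map the file read-only and parse its whole content."""
    with open(path, "rb") as f:
        mm = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)
        try:
            return parse_boxes(mm[:])
        finally:
            mm.close()


if __name__ == "__main__":
    # Hypothetical sample: two boxes followed by dash padding.
    sample = b"2 0 18 257 48 178 0.738 2 433 299 173 195 0.885 " + b"-" * 40
    with tempfile.NamedTemporaryFile(suffix=".txt", delete=False) as tmp:
        tmp.write(sample)
    print(read_boxes(tmp.name))
    os.remove(tmp.name)
```

Mapping with `ACCESS_READ` avoids the `r+b` open mode, which is a design choice of this sketch rather than something the project requires.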

diff --git a/yolov5-deepstream-python/yolov5-ros/config_infer_number_sv30.txt b/yolov5-deepstream-python/yolov5-io-python/config_infer_number_sv30.txt

similarity index 100%

rename from yolov5-deepstream-python/yolov5-ros/config_infer_number_sv30.txt

rename to yolov5-deepstream-python/yolov5-io-python/config_infer_number_sv30.txt

diff --git a/yolov5-deepstream-cpp/yolov5-ros/config_infer_primary.txt b/yolov5-deepstream-python/yolov5-io-python/config_infer_primary.txt

similarity index 76%

rename from yolov5-deepstream-cpp/yolov5-ros/config_infer_primary.txt

rename to yolov5-deepstream-python/yolov5-io-python/config_infer_primary.txt

index ee008f0..d94cecf 100755

--- a/yolov5-deepstream-cpp/yolov5-ros/config_infer_primary.txt

+++ b/yolov5-deepstream-python/yolov5-io-python/config_infer_primary.txt

@@ -4,15 +4,17 @@ net-scale-factor=0.0039215697906911373

model-color-format=0

model-engine-file=yolov5s.engine

labelfile-path=labels.txt

+process-mode=1

+network-mode=0

num-detected-classes=80

-interval=0

gie-unique-id=1

-process-mode=1

network-type=0

+output-blob-names=prob

cluster-mode=4

-maintain-aspect-ratio=0

+maintain-aspect-ratio=1

parse-bbox-func-name=NvDsInferParseCustomYoloV5

custom-lib-path=nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so

[class-attrs-all]

-pre-cluster-threshold=0.25

+nms-iou-threshold=0.5

+pre-cluster-threshold=0.4
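
To give a feel for what the two thresholds in `[class-attrs-all]` conventionally control, here is a small sketch of confidence filtering followed by greedy per-class non-maximum suppression. It is a generic illustration, not DeepStream's or the custom parser's implementation; the box format `(class_id, x, y, w, h, conf)` with a top-left origin and the function names are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes, top-left origin assumed."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf_thresh=0.4, iou_thresh=0.5):
    """Drop low-confidence boxes, then suppress same-class overlaps greedily."""
    candidates = sorted((d for d in dets if d[5] >= conf_thresh),
                        key=lambda d: d[5], reverse=True)
    kept = []
    for det in candidates:
        # Keep the box unless a stronger box of the same class overlaps too much.
        if all(det[0] != k[0] or iou(det[1:5], k[1:5]) <= iou_thresh for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    dets = [
        (2, 433, 299, 173, 195, 0.885),
        (2, 435, 300, 170, 190, 0.60),   # overlaps the first box heavily
        (2, 100, 100, 20, 20, 0.30),     # below the confidence threshold
        (0, 433, 299, 173, 195, 0.50),   # same place, different class
    ]
    for det in filter_detections(dets):
        print(det)
```

Raising the confidence threshold from 0.25 to 0.4, as this change does, removes more weak boxes before any overlap handling takes place.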

diff --git a/yolov5-deepstream-cpp/yolov5-ros/deepstream_app_config.txt b/yolov5-deepstream-python/yolov5-io-python/deepstream_app_config.txt

similarity index 63%

rename from yolov5-deepstream-cpp/yolov5-ros/deepstream_app_config.txt

rename to yolov5-deepstream-python/yolov5-io-python/deepstream_app_config.txt

index fe526fc..a738c6f 100755

--- a/yolov5-deepstream-cpp/yolov5-ros/deepstream_app_config.txt

+++ b/yolov5-deepstream-python/yolov5-io-python/deepstream_app_config.txt

@@ -1,6 +1,6 @@

[application]

enable-perf-measurement=1

-perf-measurement-interval-sec=1

+perf-measurement-interval-sec=5

[tiled-display]

enable=1

@@ -14,7 +14,7 @@ nvbuf-memory-type=0

[source0]

enable=1

type=3

-uri=file://video/sample_1080p_h264.mp4

+uri=file://../../samples/streams/sample_1080p_h264.mp4

num-sources=1

gpu-id=0

cudadec-memtype=0

@@ -30,7 +30,7 @@ nvbuf-memory-type=0

[osd]

enable=1

gpu-id=0

-border-width=1

+border-width=4

text-size=15

text-color=1;1;1;1;

text-bg-color=0.3;0.3;0.3;1

@@ -59,5 +59,18 @@ gie-unique-id=1

nvbuf-memory-type=0

config-file=config_infer_primary.txt

+[tracker]

+enable=1

+#tracker-width=640

+#tracker-height=384

+tracker-width=960

+tracker-height=544

+gpu-id=0

+#ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_mot_klt.so

+ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_nvdcf.so

+ll-config-file=tracker_config.yml

+#enable-past-frame=1

+enable-batch-process=1

+

[tests]

file-loop=0
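
The new `[tracker]` group can be sanity-checked with Python's standard `configparser`, since the app config is INI-like. This is a rough sketch: the helper name `tracker_settings` is hypothetical, and the snippet only repeats the uncommented keys added in this change.

```python
import configparser

# Uncommented keys from the [tracker] group added above.
SNIPPET = """
[tracker]
enable=1
tracker-width=960
tracker-height=544
gpu-id=0
ll-lib-file=/opt/nvidia/deepstream/deepstream-5.1/lib/libnvds_nvdcf.so
ll-config-file=tracker_config.yml
enable-batch-process=1
"""


def tracker_settings(text):
    """Return a small summary of the [tracker] group, or None if it is absent."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    if not parser.has_section("tracker"):
        return None
    section = parser["tracker"]
    return {
        "enabled": section.get("enable", "0") == "1",
        "size": (section.getint("tracker-width"), section.getint("tracker-height")),
        "library": section.get("ll-lib-file"),
    }


if __name__ == "__main__":
    print(tracker_settings(SNIPPET))
```

Lines starting with `#`, such as the commented-out KLT library path, are treated as comments by `configparser` and are ignored.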

diff --git a/yolov5-deepstream-python/yolov5-ros/deepstream_app_number_sv30.txt b/yolov5-deepstream-python/yolov5-io-python/deepstream_app_number_sv30.txt

similarity index 100%

rename from yolov5-deepstream-python/yolov5-ros/deepstream_app_number_sv30.txt

rename to yolov5-deepstream-python/yolov5-io-python/deepstream_app_number_sv30.txt

diff --git a/yolov5-deepstream-python/yolov5-ros/labels.txt b/yolov5-deepstream-python/yolov5-io-python/labels.txt

similarity index 100%

rename from yolov5-deepstream-python/yolov5-ros/labels.txt

rename to yolov5-deepstream-python/yolov5-io-python/labels.txt

diff --git a/yolov5-deepstream-python/yolov5-ros/number_v30.txt b/yolov5-deepstream-python/yolov5-io-python/number_v30.txt

similarity index 100%

rename from yolov5-deepstream-python/yolov5-ros/number_v30.txt

rename to yolov5-deepstream-python/yolov5-io-python/number_v30.txt

diff --git a/yolov5-deepstream-python/yolov5-ros/nvdsinfer_custom_impl_Yolo/Makefile b/yolov5-deepstream-python/yolov5-io-python/nvdsinfer_custom_impl_Yolo/Makefile

similarity index 100%

rename from yolov5-deepstream-python/yolov5-ros/nvdsinfer_custom_impl_Yolo/Makefile

rename to yolov5-deepstream-python/yolov5-io-python/nvdsinfer_custom_impl_Yolo/Makefile

diff --git a/yolov5-deepstream-python/yolov5-io-python/nvdsinfer_custom_impl_Yolo/internal_memory.txt b/yolov5-deepstream-python/yolov5-io-python/nvdsinfer_custom_impl_Yolo/internal_memory.txt

new file mode 100755

index 0000000..0788c7f

--- /dev/null

+++ b/yolov5-deepstream-python/yolov5-io-python/nvdsinfer_custom_impl_Yolo/internal_memory.txt

@@ -0,0 +1 @@