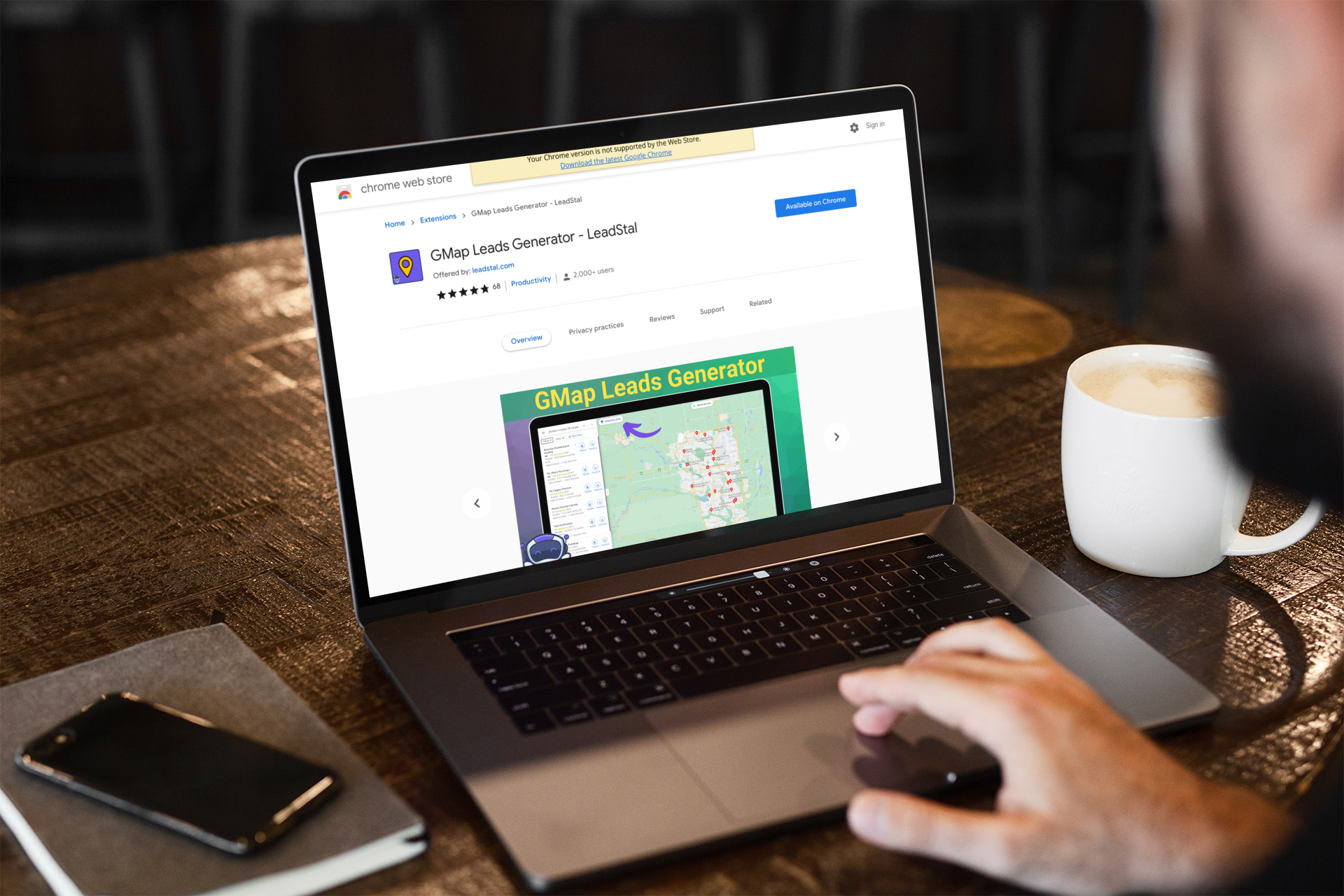

These extensions for scraping Google Maps can be used for a number of purposes, such as data collection or market research.

Have you ever had your scraper hit an error (a server error or a scraper block, say) and had to start all over again?
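
One common way to avoid starting over is to save progress after every page. The sketch below is only an illustration under stated assumptions: `scrape_with_checkpoint`, the `fetch` callback, and the JSON checkpoint file are hypothetical names, not taken from the linked article.

```python
import json
import os


def scrape_with_checkpoint(urls, fetch, checkpoint_path):
    """Scrape urls in order, saving each result so a rerun skips finished ones.

    `fetch` is any callable that takes a URL and returns JSON-serializable data.
    """
    done = {}
    if os.path.exists(checkpoint_path):
        # Resume: load whatever an earlier (possibly crashed) run finished.
        with open(checkpoint_path, encoding="utf-8") as f:
            done = json.load(f)

    for url in urls:
        if url in done:
            continue  # already scraped in an earlier run
        done[url] = fetch(url)  # may raise; earlier results are already on disk
        with open(checkpoint_path, "w", encoding="utf-8") as f:
            json.dump(done, f)
    return done
```

If `fetch` raises partway through, calling the function again with the same checkpoint path picks up at the first URL that was not saved.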

A brief comparison between Selenium and Playwright from a web scraping perspective. Which one is more convenient to use?

To extract data from websites, you can use data extraction tools such as Octoparse. These tools can extract data from a website automatically and save it in many formats, such as Excel, JSON, CSV, HTML, or to your own database via API. It takes only a few minutes to extract thousands of rows of data, and best of all, no coding is needed in the process.

Check out this step-by-step guide on how to build your own LinkedIn scraper for free!

Want to scrape data from Google Maps? This tutorial shows you how to do it.

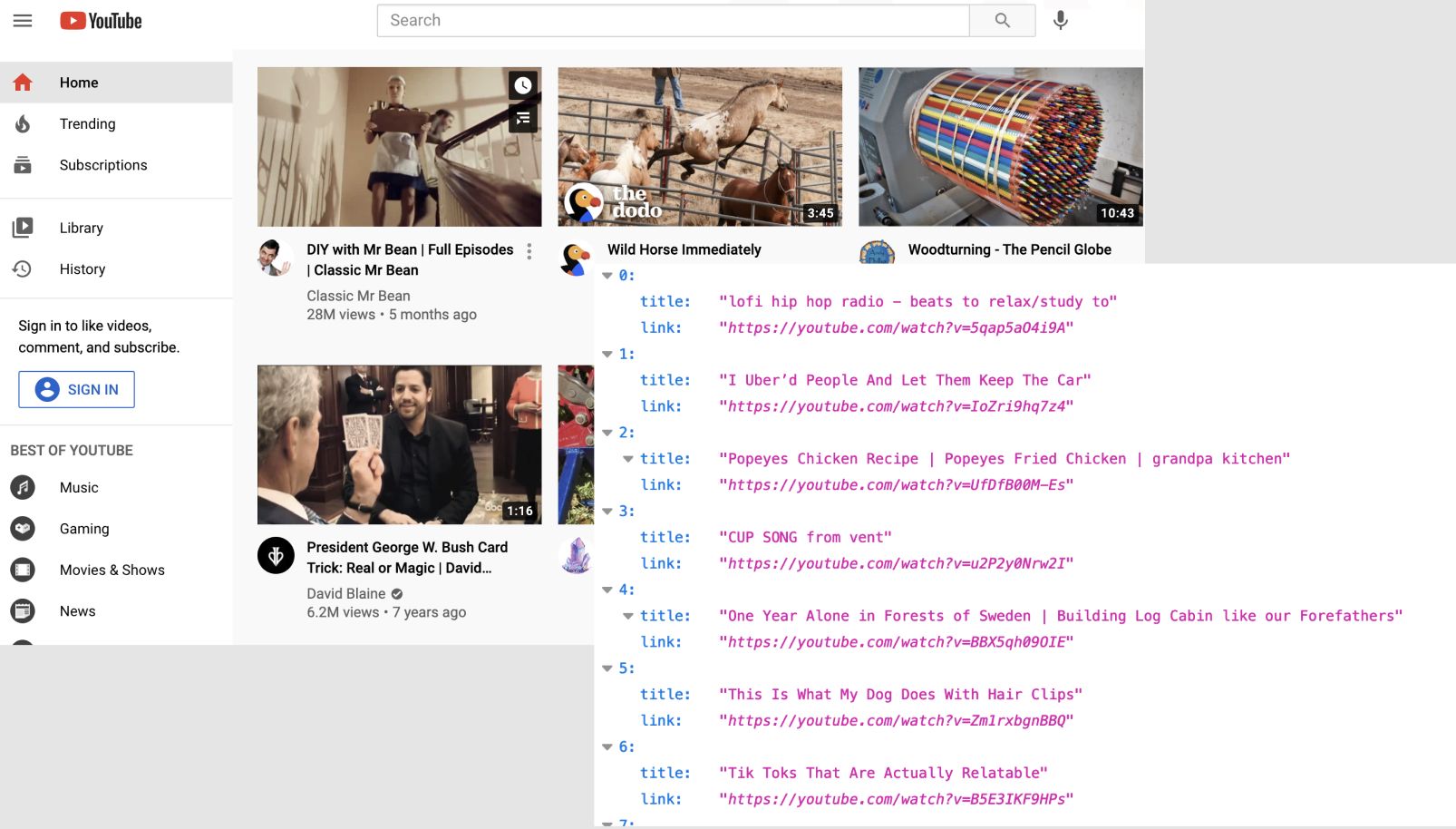

In the last few years, web scraping has been one of my most frequent day-to-day tasks. I wondered whether I could make it smart and automatic to save a lot of time. So I made AutoScraper!

Image: Goodreads.com

Scraping ChatGPT with Python

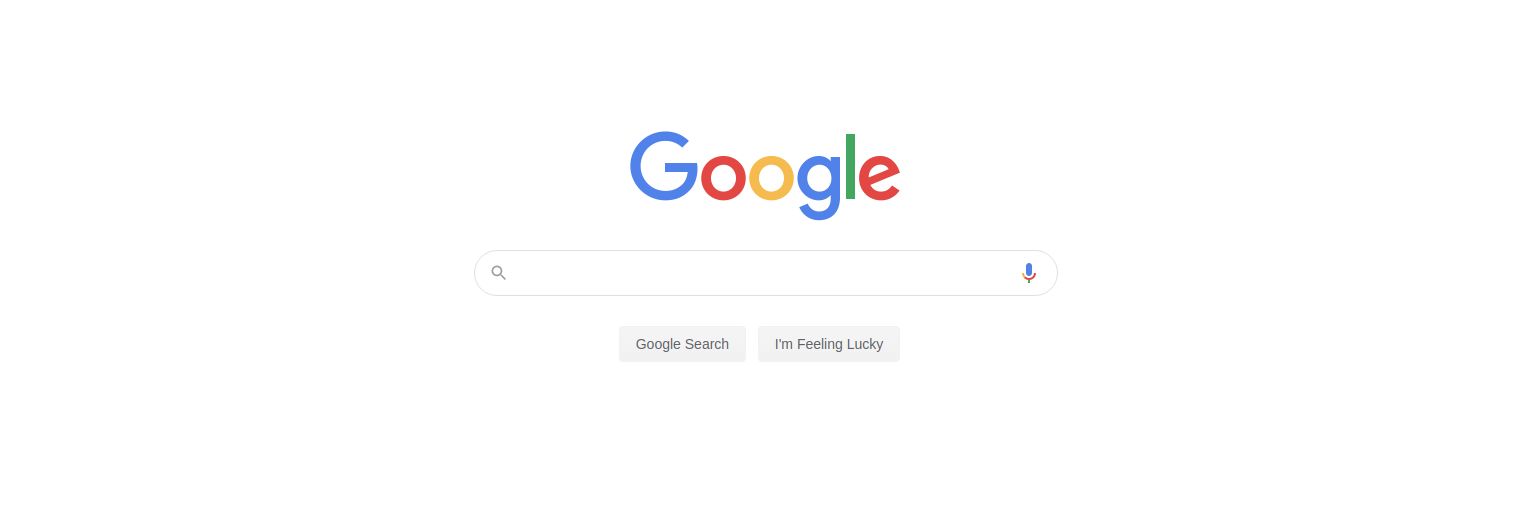

The goal of SEO is to get your website to the top of the search engine results. One excellent way of tracking SEO progress is by checking the search engine results pages (SERPs) for a website.

Learn how to scrape the web with scripts written in Node.js that automate pulling data off a website so you can use it for whatever purpose you need.

Ever since the Google Web Search API was deprecated in 2011, I've been searching for an alternative. I needed a way to get links from Google search into my Python script. So I made my own, and here is a quick guide on scraping Google searches with requests and Beautiful Soup.

Let's find out what email scraping is, how you can use it, and, more importantly, whether it's legal.

If you are looking for a way to automate browser website clicks, you came to the right place.

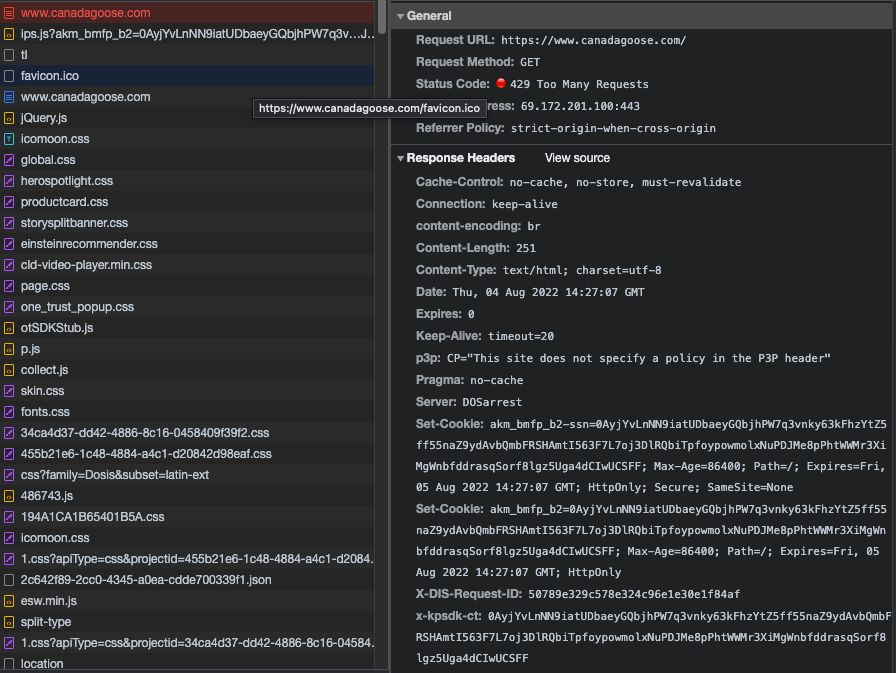

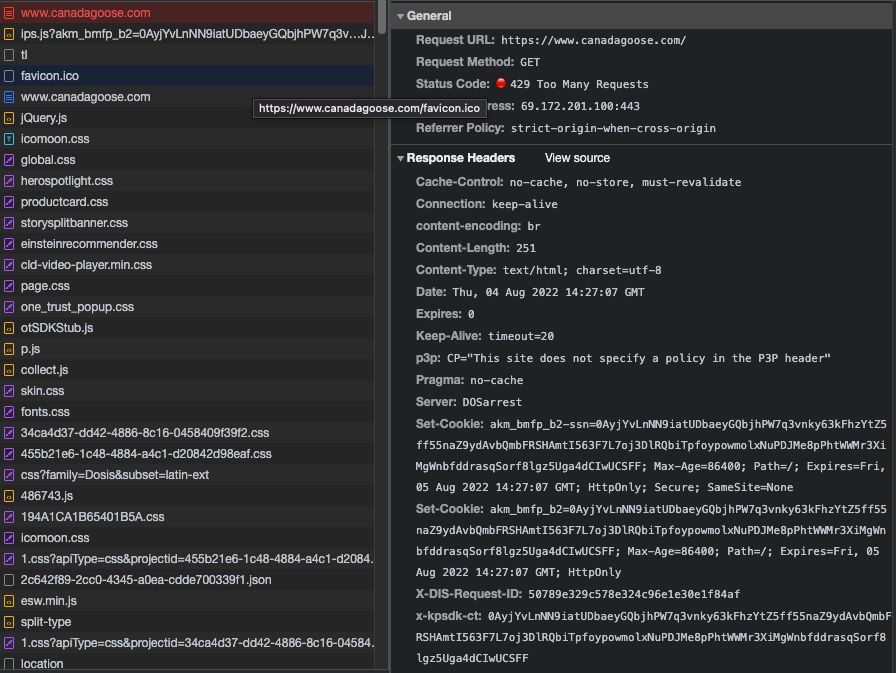

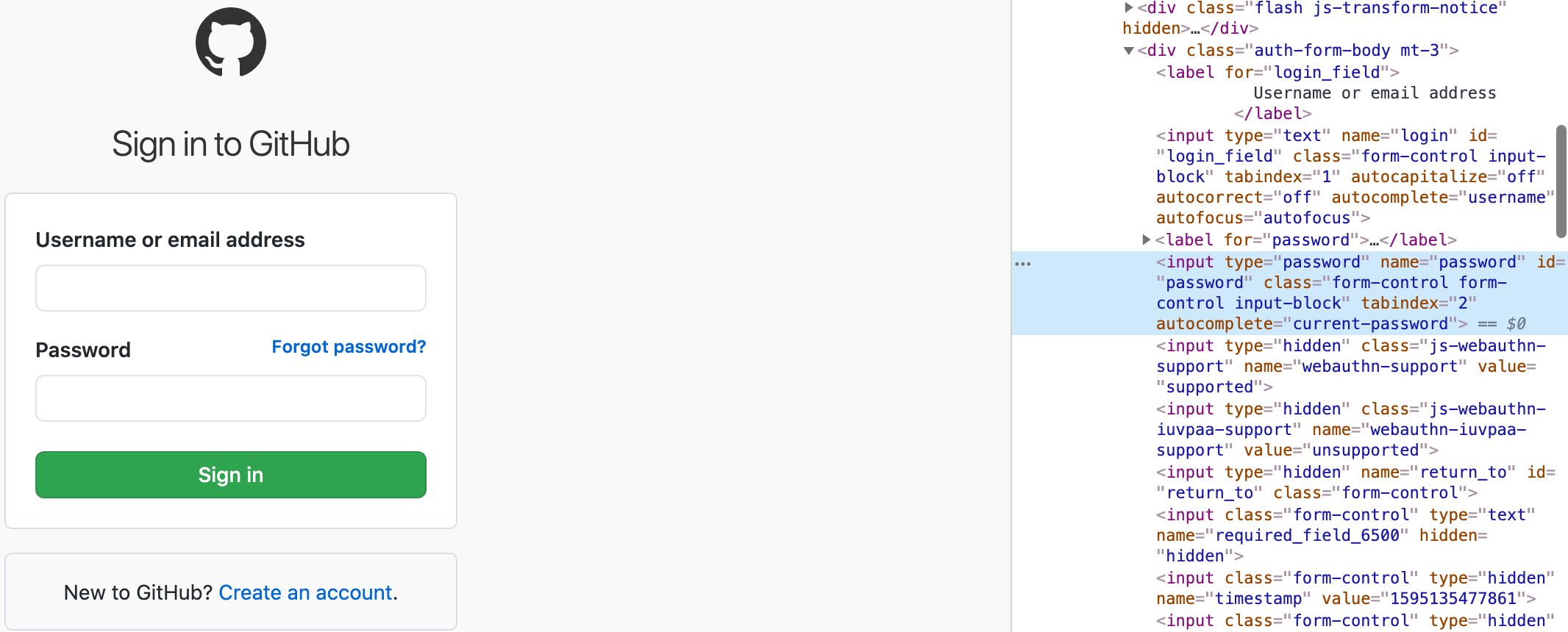

Anti-bot techniques are making life harder for web scrapers. In this post we'll see how Kasada protects a website and how a misconfiguration of it can be used

Learn how to emulate a normal user request and scrape Google Search Console data using Python and Beautiful Soup.

Learn the differences between web scraping and data mining and how to apply them.

Web scraping - A Complete Guide: In this blog, we will learn everything about web scraping, its methods and uses, and the correct way of doing it.

Scraping Wikipedia for data using Puppeteer and Node

A comparison of web scraping tools and frameworks for bypassing the most common anti-bot solutions, like Cloudflare, PerimeterX, DataDome, Kasada, and F5

Both large and small businesses rely more and more on web crawling to boost their marketing efforts.

The shutdowns brought an opportunity for my daughter to participate in virtual scouting events all over the United States. When the event registration form changed, I took the chance to try out some new web scraping skills while inspiring my daughter about the power of code for everyday tasks.

Pro Tips & Techniques to Scrape Any Website Reliably. Go beyond CSS selectors to get hidden content. Metadata is full of valuable information.

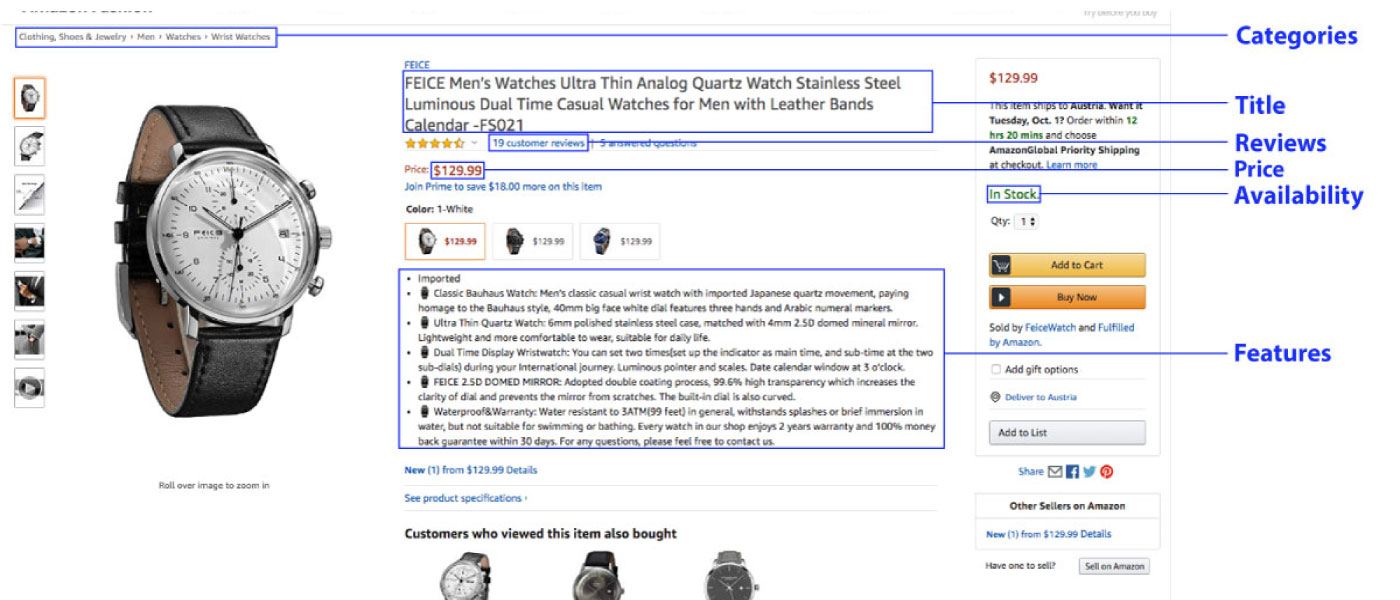

Web-scrape Amazon reviews with and without Python code.

While building ScrapingBee, I check different forums every day to help people with web scraping questions and engage with the community.

Block specific resources from downloading with Playwright. Save time and money by downloading only the essential resources while web scraping or testing.

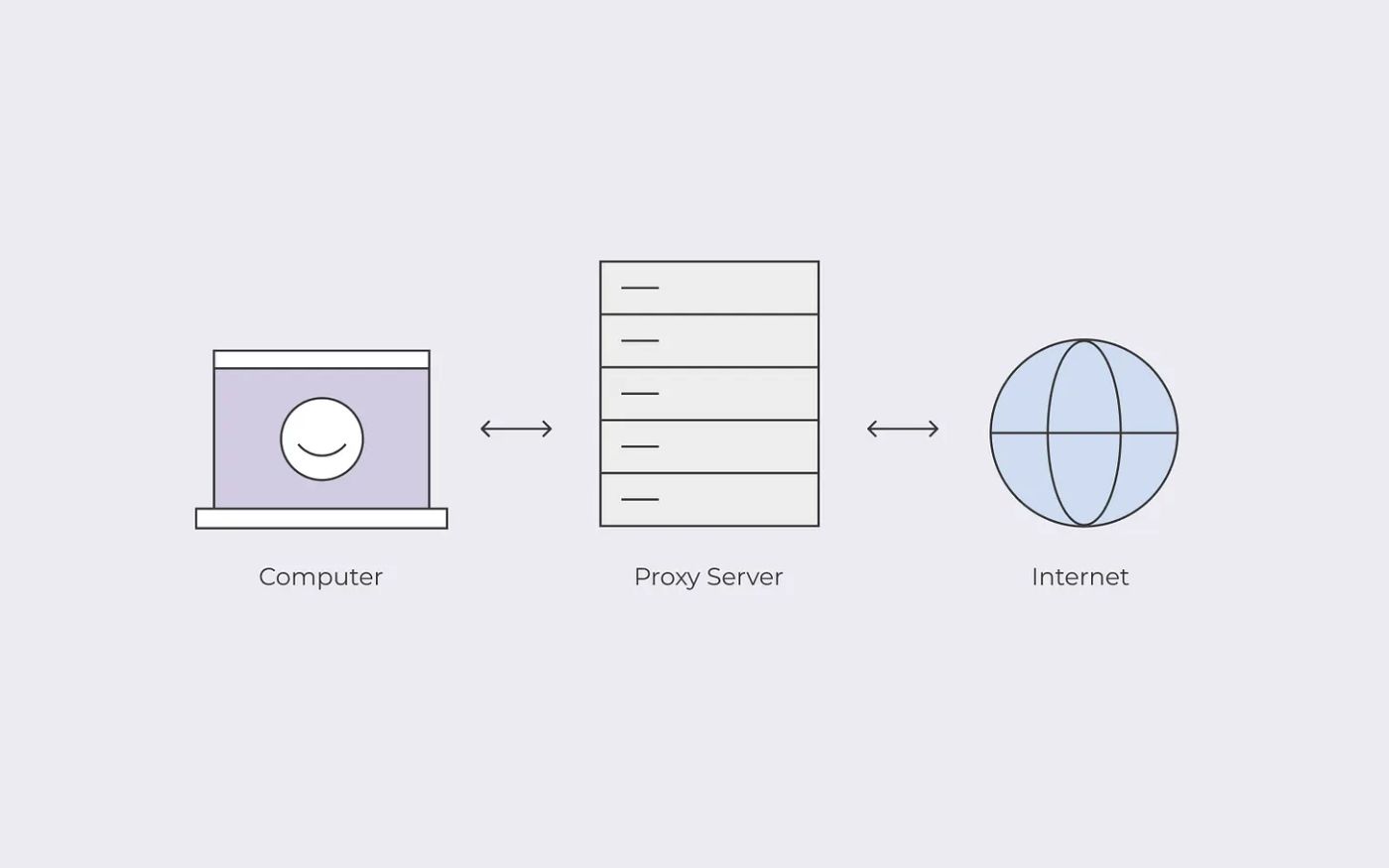

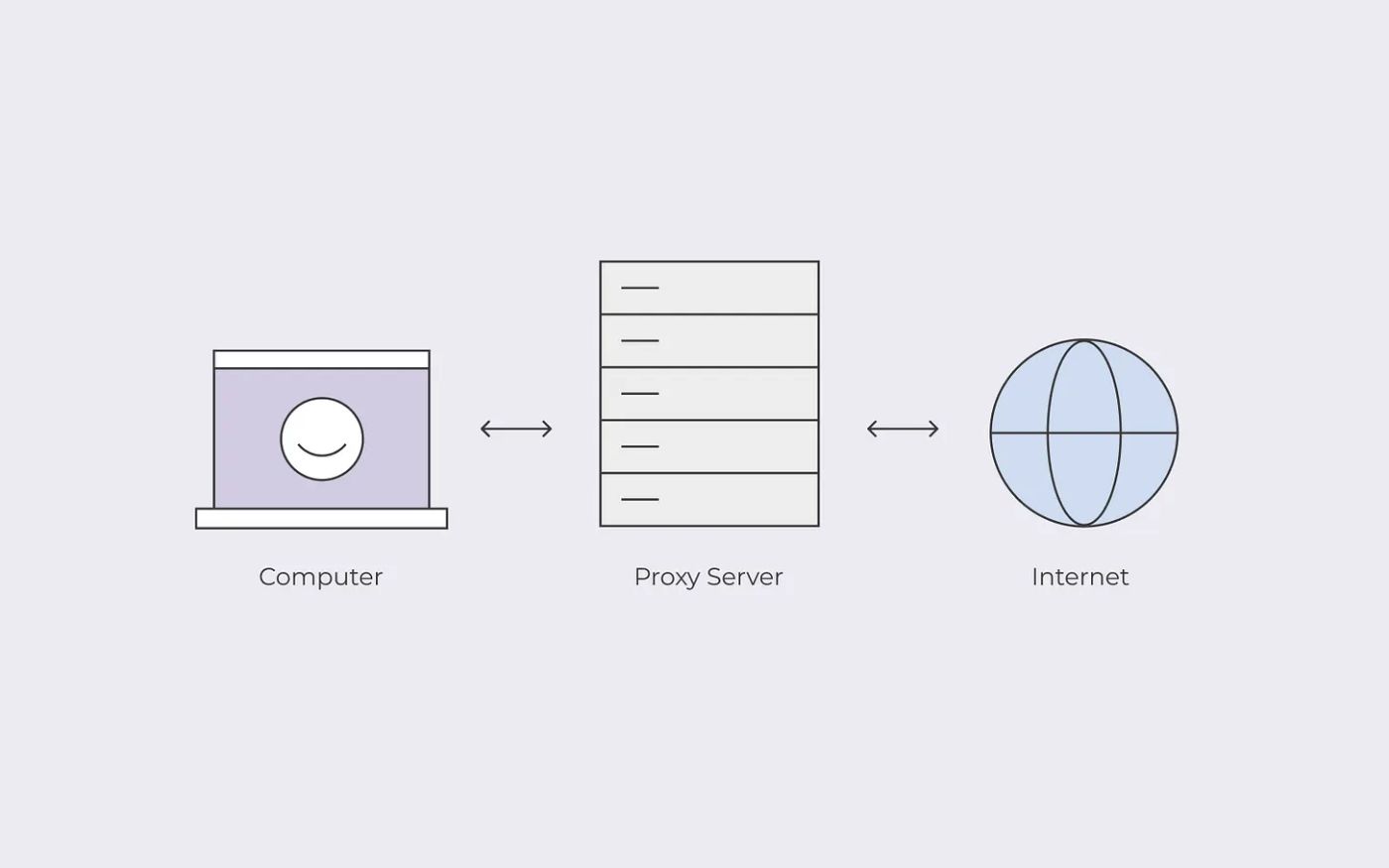

Web automation and web scraping are popular, mainly because people use scraping and similar automation technologies to grab the information they want from the internet. The internet is one of the biggest sources of information, and if we use it wisely, we can scrape lots of important facts. However, getting the most out of web scraping requires appropriate methods. That’s where proxies come into play.

A guide on how to do web scraping in .NET with C#, with examples. Topics: Selenium, HtmlAgilityPack, Puppeteer

Learn how to build a web scraper with JavaScript and Node.js. Add anti-blocking techniques, a headless browser, and parallelize requests with a queue.

The internet is a treasure trove of valuable information. Read this article to find out how web crawling, scraping, and parsing can help you.

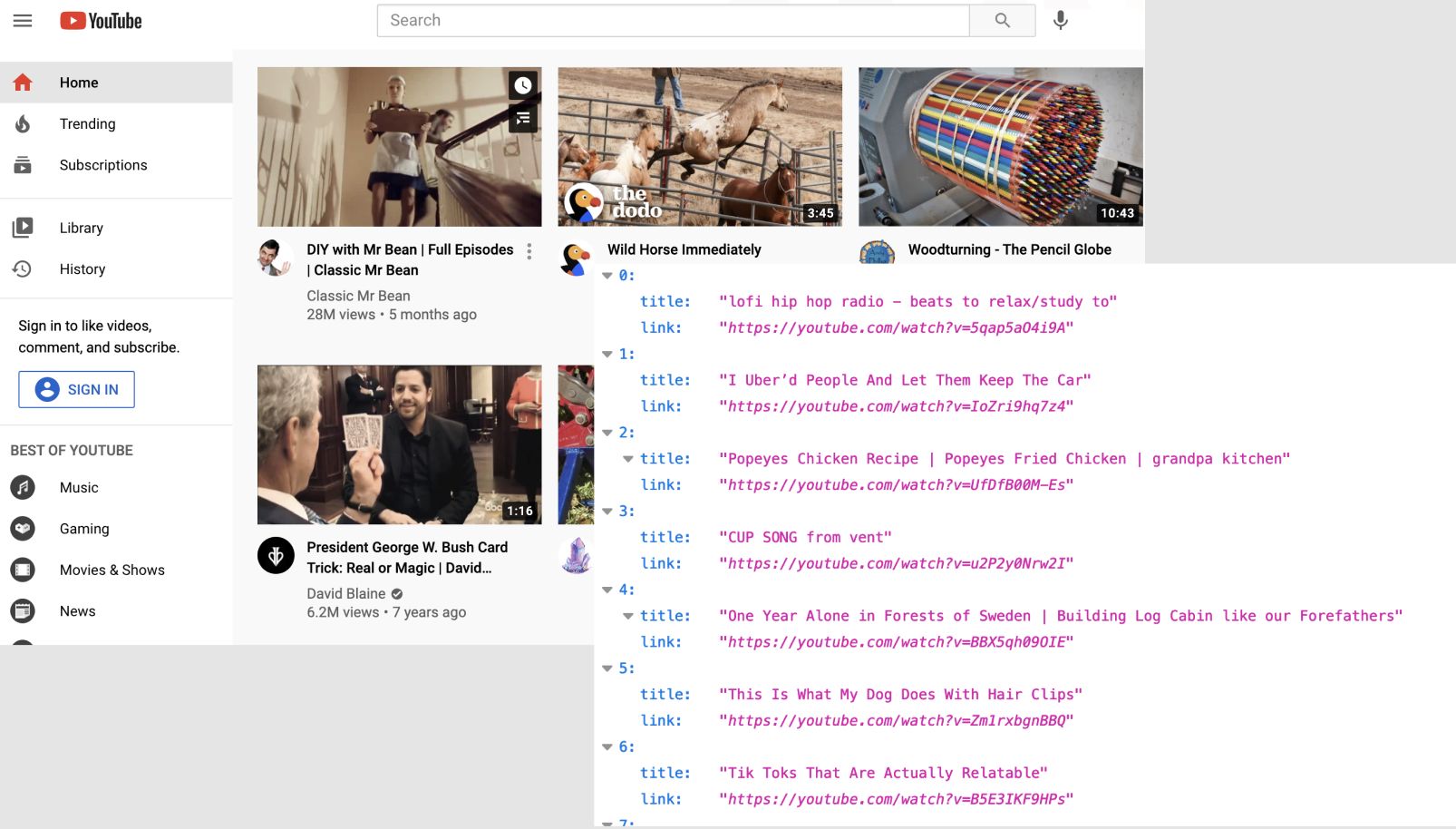

Scraping Google News Results can get you access to articles from thousands of sources, including CNN, BBC, Reuters, etc.

In the previous articles, I introduced you to two different tools for web scraping with Java: HtmlUnit in the first article, and PhantomJS in the article about handling JavaScript-heavy websites.

Scraping the IKEA website for every country to get insights into its pricing strategies (and a quick view of the difficulties of web scraping).

Scraping football data (soccer in the US) is a great way to build comprehensive datasets to help create stats dashboards. Check out our football data scraper!

Suppose you want to get large amounts of information from a website as quickly as possible. How can this be done?

Collecting data from the web can be the core of data science. In this article, we'll see how to start with scraping with or without having to write code.

Pagination is a widely used technique in web design that splits content across several pages, presenting large data sets in a way that is much easier for web users to digest.

In this tutorial, we will be scraping Google Finance, a data-rich website for traders and investors to get access to real-time financial data.

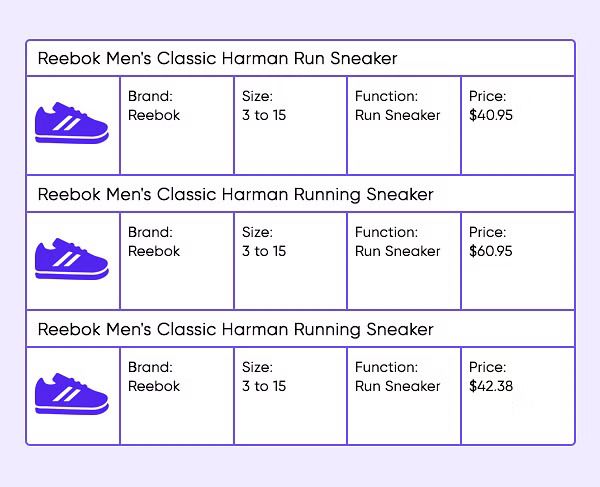

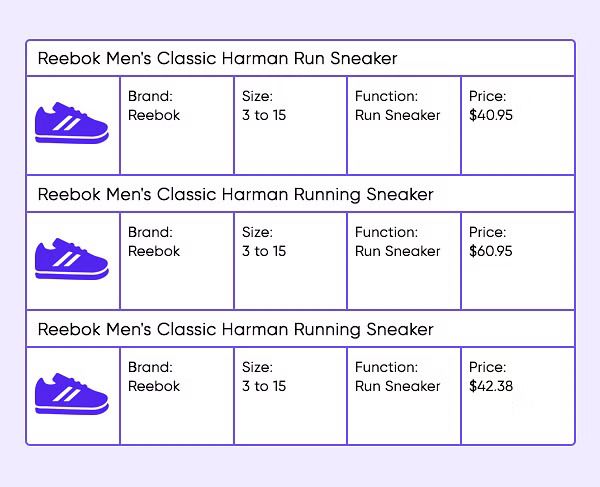

An easy tutorial showcasing the power of Puppeteer and Browserless. Scrape Google Shopping to gather prices of specific items automatically!

Why do large web scraping projects need proxy servers? Here is a brief explanation of what they are, how they work, and how they differ.

Previously published at https://www.octoparse.es/blog/15-preguntas-frecuentes-sobre-web-scraping

Of late, “Fintech” has been, and remains, a buzzword. It is reaching beyond traditional banking and financial services, encompassing online wallets, crypto, crowdfunding, asset management, and pretty much every other activity that includes a financial transaction, thereby competing directly and fiercely with traditional financial giants and their methods.

In this part of the ‘Alpha Capture in Digital Commerce’ series, we will explore the challenges of data acquisition in retail and discuss data science application

Has anyone ever asked you to write a separate API to integrate social media data and save the raw data in your on-site analytics database? Then you definitely want to know what an API is, how it is used in web scraping, and what you can achieve with it. Let's take a look.

It’s safe to say that the amount of data available on the internet nowadays is practically limitless, with much of it no more than a few clicks away. However, gaining access to the information you need sometimes involves a lot of time, money, and effort.

Follow along as I explore Germany’s largest travel forum, Vielfliegertref. For an aspiring data scientist, building interesting portfolio projects is key to showcasing your skills. When I learned coding and data science as a business student through online courses, I disliked that the datasets were made up of fake data or had been solved before, like Boston House Prices or the Titanic dataset on Kaggle.

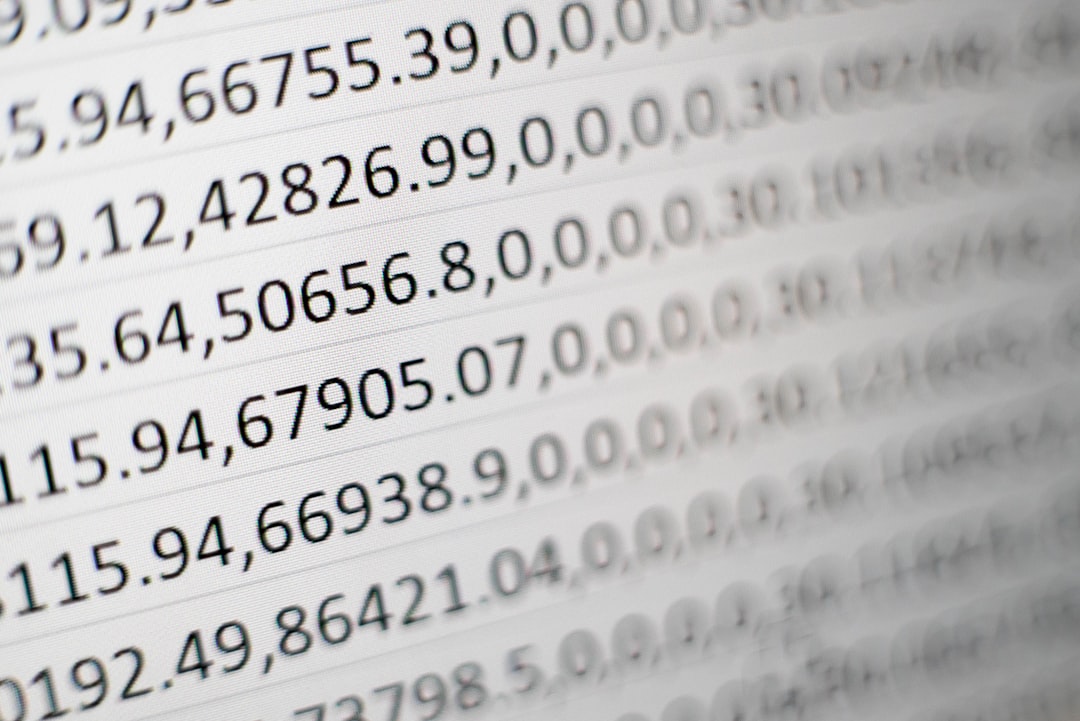

In this article, we’ll observe another test with 1.1M Hacker News curated comments with numeric fields

Broken Link Building – 29.9% New Users, A Higher DR, and a Revenue Boost of 42.3%

Learn how to leverage web scraping in marketing. In this article, we unpack use cases and tips for getting started.

A quick introduction to web scraping, what it is, how it works, some pros and cons, and a few tools you can use to approach it

Last week I finished my Ruby curriculum at Microverse, so I was ready to build my Capstone Project, a solo project at the end of each of the Microverse technical curriculum sections.

The rise of various technologies has brought data-driven businesses into the limelight.

Amazon is one of the largest e-commerce platforms in the world. It has one of the largest customer bases and one of the most versatile and adaptive product portfolios. As one of the largest retailers, it benefits from a large amount of data and better operational processes. That said, you too can use Amazon’s data to your advantage to design a better product and price portfolio.

The need to crawl web data has grown in recent years. Crawled data can be used for evaluation or prediction in different fields. Here, I would like to talk about 3 methods we can adopt to scrape data from a website.

While there are a few different libraries for scraping the web with Node.js, in this tutorial, I'll be using the Puppeteer library.

In this post, we will learn to scrape Google Shopping Results using Node JS with Unirest and Cheerio.

There’s no doubt that in order to make a decent profit on Amazon, it is essential to choose the best product to sell. To find out which product sells the best, we need to conduct product research to understand the market.

Please click through to the original article: http://www.octoparse.es/blog/70-fuentes-de-datos-gratuitas-en-2020

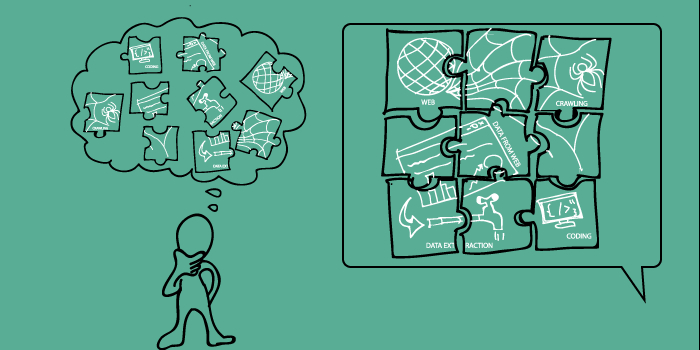

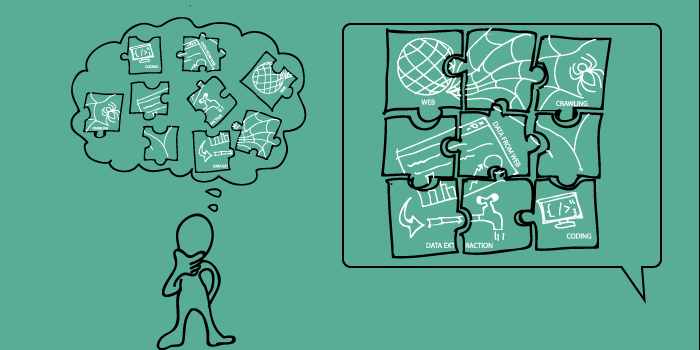

Learn the fundamental distinctions between web crawling and web scraping, and determine which one is right for you.

A guide to web scraping with Puppeteer, Node.js, and Autocode with tips and examples

The business world is a very cold and hard place where only the best find their way to succeed. The market — each market — has its own limits and even if it’s pretty easy to get into the market, the most difficult part comes when you have to find a way to stay in that market and grow your business when the competition is always growing.

A while ago I was trying to perform an analysis of a Medium publication for a personal project. But getting the data was a problem – scraping only the publication’s home page does not guarantee that you get all the data you want.

In this article, I will tell you what role the HTTP/HTTPS sniffer plays in data parsing and why it is very important.

Web scraping has broken the barriers of programming and can now be done in a much simpler and easier manner without using a single line of code.

From a technical marketer’s perspective, scraping and automation libraries are extremely important to learn. Here’s an introduction to two of the most widely used web scraping libraries in Node JS.

Web scraping, or crawling, means fetching data from a third-party website by downloading and parsing the HTML code to extract the data you want.
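
The download-then-parse flow described above can be sketched with Python's standard library alone. This is a minimal illustration, not the linked article's code: `download`, `LinkExtractor`, and `extract_links` are hypothetical names, and extracting link targets is just one example of "the data you want".

```python
import urllib.request
from html.parser import HTMLParser


def download(url):
    """Fetch a page and return its HTML as text (performs a network request)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag seen while parsing."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html):
    """Parse an HTML string and return the link targets in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links
```

In practice the two steps are chained, for example `extract_links(download(some_url))`, and many scrapers replace the standard-library parser with a library such as Beautiful Soup.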

In this post, we will learn how to scrape Google with Node JS using some of the in-demand web scraping and parsing libraries available for Node JS.

In this post, we are going to scrape data from LinkedIn using Python and a Web Scraping Tool. We are going to extract Company Name, Website, Industry, Company Size, Number of employees, Headquarters Address, and Specialties.

When you need tons of data quickly, a web scraper is the best option. Luckily, making your own scraper isn't as hard as it seems. Here's how to do it in NodeJS!

Web scraping is all about using Python, or any other programming language, to programmatically download, clean, and use the data from a web page.

Everything you need to know to automate, optimize and streamline the data collection process in your organization!

Is Python really the easiest and most efficient way to scrape a website? There are other options out there. Find out which one is best for you!

Web scraping as a product has low entry requirements, which attracts freelancers and development teams to it.

The need to extract data from websites is growing. When we work on data-related projects, such as price monitoring, business analysis, or a news aggregator, we always need to record data from websites. However, copying and pasting data line by line is outdated. In this article, we will teach you how to become an "expert" at extracting data from websites by web scraping with Python.

Intro

Financial market data is some of the most valuable data available today. Analyzed correctly, it has the potential to turn around an organisation’s economic problems. Yahoo Finance is one website that provides free access to this valuable data on stock and commodity prices. In this blog, we are going to implement a simple web crawler in Python to scrape the Yahoo Finance website. Applications of scraped Yahoo Finance data include forecasting stock prices, predicting market sentiment towards a stock, gaining an investing edge, and cryptocurrency trading. The process of generating investment plans can also make good use of this data!

Data extraction takes many forms and can be complicated. From preventing your IP from getting banned to bypassing CAPTCHAs, parsing the source correctly, using headless Chrome for JavaScript rendering, cleaning the data, and then generating it in a usable format, a lot of effort goes in. I have been scraping data from the web for over 8 years. We used web scraping to track the prices of other hotel booking vendors, so when a competitor lowered their prices, our cron web scrapers notified us to lower ours too.

With a Scriptable app, it’s possible to create a native iOS widget even with basic JavaScript knowledge.

As the CEO of a proxy service and data scraping solutions provider, I completely understand why the global data breaches that make news headlines from time to time have given web scraping a terrible reputation, and why so many people feel cynical about Big Data these days.

To scrape a website, it’s common to send GET requests, but it's useful to know how to send data. In this article, we'll see how to start with POST requests.
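
As a rough illustration of sending data instead of only fetching it, here is a minimal sketch using Python's standard library. The helper name `build_post_request`, the example URL, and the form fields are assumptions made for the example and do not come from the linked article.

```python
import urllib.parse
import urllib.request


def build_post_request(url, form_fields):
    """Build (but do not send) a form-encoded POST request."""
    # Encode the fields the way an HTML form would, e.g. "q=web+scraping".
    data = urllib.parse.urlencode(form_fields).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Hypothetical endpoint and fields, used only to show the shape of the call.
request = build_post_request(
    "https://example.com/search", {"q": "web scraping", "page": 2}
)
```

Passing the request to `urllib.request.urlopen(request)` would actually send it; the sketch stops short of that so it runs without network access.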

A few years ago, Cambridge Analytica made netizens concerned regarding the gathering of their online data. At that time, affected or interested users had little knowledge of how big the big-data industry actually was.

An easy tutorial showcasing the power of Puppeteer and Browserless. Scrape Amazon.com to gather prices of specific items automatically!

Learn how you can easily scrape the latest stock prices using Node.js and Puppeteer!

How to gather data without those pesky databases.

With the advent of big data, people have started obtaining data from the internet for data analysis with the help of web crawlers. There are several ways to build your own crawler: browser extensions, Python coding with Beautiful Soup or Scrapy, and data extraction tools such as Octoparse.

TL;DR: We’ve released the Apify SDK — an open-source Node.js library for scraping and web crawling. There was one for Python, but until now, there was no such library for JavaScript, THE language of the web.

Mastering Web-Scraping like a boss. Data Extraction Tips & Insights, Use Cases, Challenges... Everything you need to know🔥

How to not get stuck when collecting tabular data from the internet.

Are you looking for a way to scrape Amazon reviews but do not know where to begin? If so, you may find this post useful. We will discuss scraping Amazon reviews using Scrapy in Python. Web scraping is a simple means of collecting data from different websites, and Scrapy is a web crawling framework for Python.

Usually forgotten in Data Science master's programs and courses, web scraping is, in my honest opinion, a basic tool in the data scientist's toolset, as it is the tool for getting, and therefore using, data from outside your organization when public databases are not available.

Use a Ruby script to get the jab in India

In this post, we will learn to scrape Google Maps Reviews using the Google Maps hidden API.

These days we are all scared of the new airborne contagious coronavirus (2019-nCoV). Even a slight cough or a low fever might be a sign of something serious. However, what is the real truth?

I’m sure almost everyone reading this has been affected by the emergence of the novel coronavirus disease (COVID-19), in addition to noticing some serious disruptive economic changes across most industries. Our data research department here at Oxylabs has confirmed these movements, especially in the e-commerce, human resources (HR), travel, accommodation and cybersecurity segments.

Welcome to the new way of scraping the web. In the following guide, we will scrape BestBuy product pages, without writing any parsers, using one simple library: Scrapezone SDK.

From the most popular seats to the most popular viewing times, we wanted to find out more about the movie trends in Singapore. So we created PopcornData, a website that offers a glimpse of Singapore’s movie trends, by scraping data, finding interesting insights, and visualizing them.

Ever since Google Web Search API deprecation in 2011, I’ve been searching for an alternative. I need a way to get links from Google search into my Python script. So I made my own, and here is a quick guide on scraping Google searches with requests and Beautiful Soup.

LinkedIn is a great place to find leads and engage with prospects. In order to engage with potential leads, you’ll need a list of users to contact. However, getting that list might be difficult because LinkedIn has made it difficult for web scraping tools. That is why I made a script to search Google for potential LinkedIn user and company profiles.

Web scraping is practiced by businesses that base their marketing and development strategies on the vast amount of data available on the web.

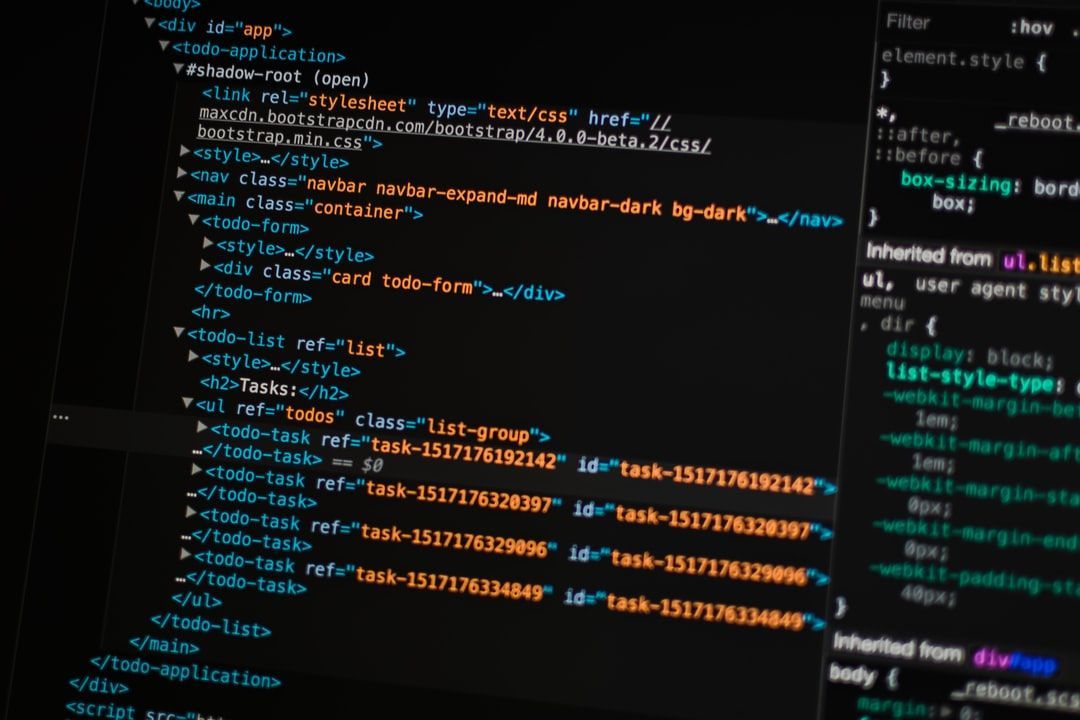

With the massive increase in the volume of data on the Internet, this technique is becoming increasingly useful for retrieving information from websites and applying it to various use cases. Typically, web data extraction involves making a request to a given web page, accessing its HTML code, and parsing that code to harvest some information. Since JavaScript is excellent at manipulating the DOM (Document Object Model) inside a web browser, creating data extraction scripts in Node.js can be extremely versatile. Hence, this tutorial focuses on JavaScript web scraping.

In this post, we are going to learn web scraping with Python. We will scrape websites like Walmart, eBay, and Amazon for the price of the Microsoft Xbox One X 1TB Black Console. With that scraper, you will be able to scrape pricing for any product from these websites. As you know, I like to keep things simple, so I will also be using a web scraper that will increase your scraping efficiency.

When you talk about web scraping, PHP is the last thing most people think about.

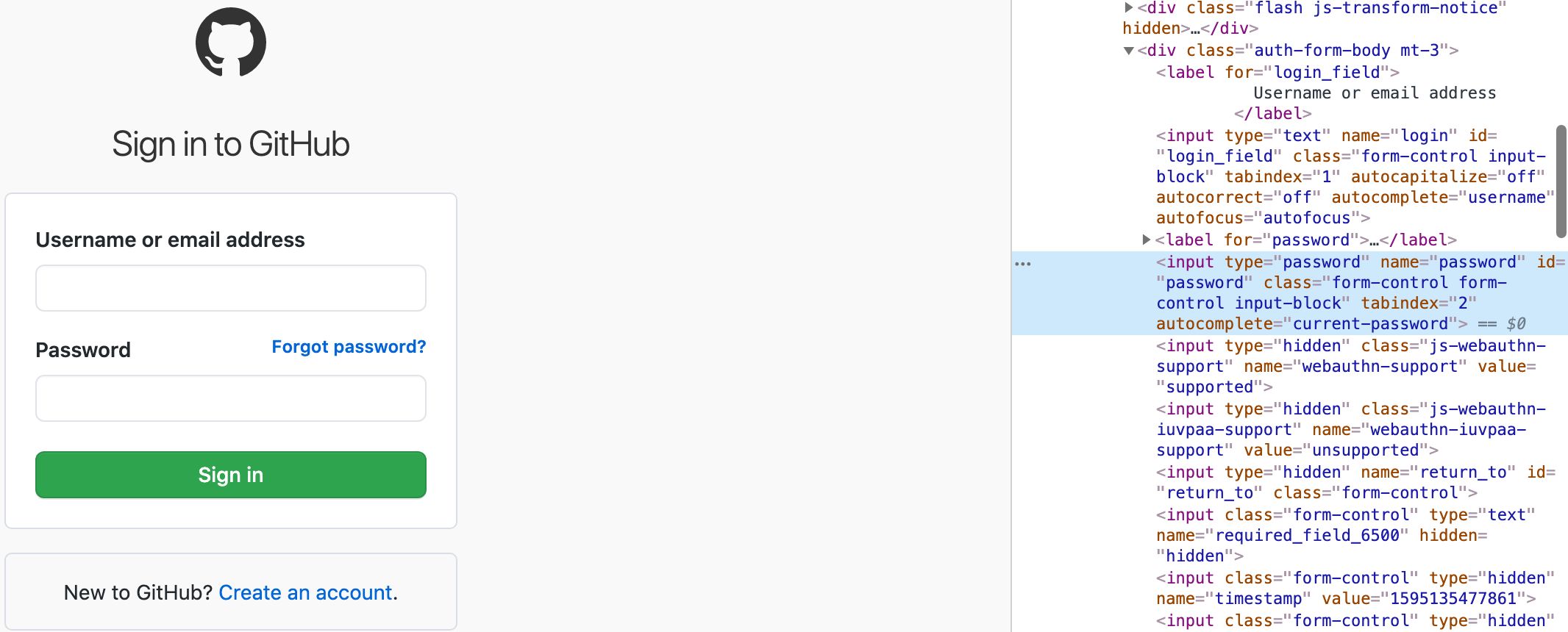

Hi everyone. In this article, we are going to talk about how you can write a simple web scraper and a small search application using well-known existing technologies that you perhaps didn’t know could do that.

For a while, nobody in my circle of friends was talking about crypto.

In this post, we are going to scrape Yahoo Finance using Python. It is a great source of stock-market data. We will code a scraper with which you will be able to scrape stock data for any company from Yahoo Finance. As you know, I like to keep things simple, so I will also be using a web scraper that will increase your scraping efficiency.

As the world is facing the worst pandemic ever, I was looking at how much countries spend on their healthcare infrastructure. So, I thought of doing a data visualization of the medical expenses of several countries. My search led to this article, which has data from many countries for the year 2016. I did not find any authentic source for a more recent year. So, we’ll continue with 2016.

Scraping Google SERPs (search engine result pages) is as straightforward or as complicated as the tools we use.

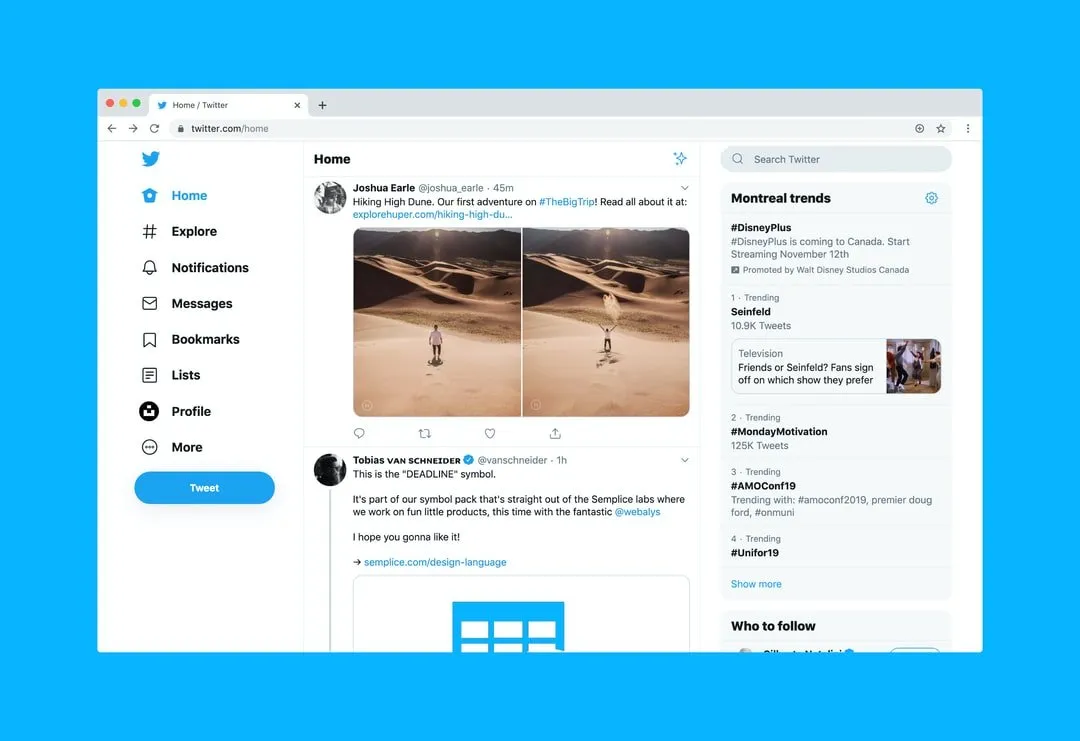

A Quick Method To Extract Tweets and Replies For Free

In 2020, web data extraction, or web scraping, is the only way to get the desired data if the owners of a website don't grant their users access through an API.

Web development has moved at a tremendous pace in the last decade with a lot of frameworks coming in for both backend and frontend development. Websites have become smarter and so have the underlying frameworks used in developing them. All these advancements in web development have led to the development of the browsers themselves too.

Some time ago, a few friends and I decided to build an app. We duct-taped our code together, launched our first version, then attracted a few users with a small marketing budget.

Coronavirus cases are increasing day by day, and it’s very important to get vaccinated. So I tried to create an automated notifier to tell me when a slot opened up.

Web scraping with Python is a popular subject among data science enthusiasts. Here is a piece of content aimed at beginners who want to learn web scraping with the Python lxml library.

Business Intelligence (BI) is a data-driven business practice, a decision-making process based on collected data. It is often used by managers and executives to generate actionable insights. As a result, BI is commonly referred to interchangeably as "Business Analytics" or "Data Analytics".

In this post, we are going to scrape websites to gather data on API World's top 300 APIs of the year. The main reason for web scraping is that it saves time, avoids manual data gathering, and gives you all the data in a structured form.

In this post, we are going to scrape Yahoo Finance using Python. It is a great source of stock-market data. We will code a scraper with which you will be able to scrape stock data for any company from Yahoo Finance. As you know, I like to keep things simple, so I will also be using a web scraper that will increase your scraping efficiency.
