diff --git a/README.md b/README.md

index 2673e60..bc81403 100644

--- a/README.md

+++ b/README.md

@@ -12,69 +12,55 @@ want to turn the massive amounts of data produced by their apps, tools and servi

### Getting Started

-Kamon datadog module is currently available for Scala 2.10, 2.11 and 2.12.

-

Supported releases and dependencies are shown below.

-| kamon-datadog | status | jdk | scala | akka |

-|:------:|:------:|:----:|------------------|:------:|

-| 0.6.7 | stable | 1.7+, 1.8+ | 2.10, 2.11, 2.12 | 2.3.x, 2.4.x |

+| kamon-datadog | status | jdk | scala |

+|:--------------:|:------:|:----:|------------------|

+| 1.0.0 | stable | 1.8+ | 2.10, 2.11, 2.12 |

-To get started with SBT, simply add the following to your `build.sbt`

-file:

+To get started with SBT, simply add the following to your `build.sbt` file:

```scala

-libraryDependencies += "io.kamon" %% "kamon-datadog" % "0.6.7"

+libraryDependencies += "io.kamon" %% "kamon-datadog" % "1.0.0"

```

-Configuration

--------------

-

-By default, this module assumes that you have an instance of the Datadog Agent running in localhost and listening on

-port 8125. If that is not the case the you can use the `kamon.datadog.hostname` and `kamon.datadog.port` configuration

-keys to point the module at your Datadog Agent installation.

-

-The Datadog module subscribes itself to the entities included in the `kamon.datadog.subscriptions` key. By default, the

-following subscriptions are included:

+And add the Agent or API reporter to Kamon:

```

-kamon.datadog {

- subscriptions {

- histogram = [ "**" ]

- min-max-counter = [ "**" ]

- gauge = [ "**" ]

- counter = [ "**" ]

- trace = [ "**" ]

- trace-segment = [ "**" ]

- akka-actor = [ "**" ]

- akka-dispatcher = [ "**" ]

- akka-router = [ "**" ]

- system-metric = [ "**" ]

- http-server = [ "**" ]

- }

-}

+Kamon.addReporter(new DatadogAgentReporter())

+// OR

+Kamon.addReporter(new DatadogAPIReporter())

```

-If you are interested in reporting additional entities to Datadog please ensure that you include the categories and name

-patterns accordingly.

+Configuration

+-------------

+#### Agent Reporter

-### Metric Naming Conventions ###

+By default, the Agent reporter assumes that you have an instance of the Datadog Agent running in localhost and listening on

+port 8125. If that is not the case, you can use the `kamon.datadog.agent.hostname` and `kamon.datadog.agent.port` configuration

+keys to point the module at your Datadog Agent installation.

-For all single instrument entities (those tracking counters, histograms, gaugues and min-max-counters) the generated

-metric key will follow the `application.instrument-type.entity-name` pattern. Additionaly all tags supplied when

-creating the instrument will also be reported.

+#### API Reporter

-For all other entities the pattern is a little different: `application.entity-category.entity-name` and a identification

-tag using the category and entity name will be used. For example, all mailbox size measurements for Akka actors are

-reported under the `application.akka-actor.mailbox-size` metric and a identification tag similar to

-`actor:/user/example-actor` is included as well.

+When using the API reporter you must configure your API key using the `kamon.datadog.http.api-key` configuration setting.

+Since Kamon has access to the entire distribution of values for a given period, the API reporter can directly post the

+data that would otherwise be summarized and sent by the Datadog Agent. All histogram-backed metrics will be reported as

+follows:

+ - metric.avg

+ - metric.count

+ - metric.median

+ - metric.95percentile

+ - metric.max

+ - metric.min

-Finally, the application name can be changed by setting the `kamon.datadog.application-name` configuration key.

+You can refer to the [Datadog documentation](https://docs.datadoghq.com/developers/metrics/#histograms) for more details.

### Metric Units ###

-Kamon keeps all timing measurements in nanoseconds and memory measurements in bytes. In order to scale those to other units before sending to datadog, set `time-units` and `memory-units` config keys to desired units. Supported units are:

+Kamon keeps all timing measurements in nanoseconds and memory measurements in bytes. In order to scale those to other

+units before sending to Datadog, set the `time-units` and `memory-units` config keys to the desired units. Supported units are:

+

```

n - nanoseconds

µs - microseconds

@@ -86,30 +72,31 @@ kb - kilobytes

mb - megabytes

gb - gigabytes

```

+

For example,

+

```

kamon.datadog.time-units = "ms"

```

+

will scale all timing measurements to milliseconds right before sending to datadog.

+

Integration Notes

-----------------

* Contrary to other Datadog client implementations, we don't flush the metrics data as soon as the measurements are

taken but instead, all metrics data is buffered by the `kamon-datadog` module and flushed periodically using the

- configured `kamon.datadog.flush-interval` and `kamon.datadog.max-packet-size` settings.

-* It is advisable to experiment with the `kamon.datadog.flush-interval` and `kamon.datadog.max-packet-size` settings to

+ configured `kamon.metric.tick-interval` and `kamon.datadog.agent.max-packet-size` settings.

+* It is advisable to experiment with the `kamon.metric.tick-interval` and `kamon.datadog.agent.max-packet-size` settings to

find the right balance between network bandwidth utilisation and granularity on your metrics data.

- -

Visualization and Fun

---------------------

-Creating a dashboard in the Datadog user interface is really simple, just start typing the application name ("kamon" by

-default) in the metric selector and all metric names will start to show up. You can also break it down based on the entity

-names. Here is a very simple example of a dashboard created with metrics reported by Kamon:

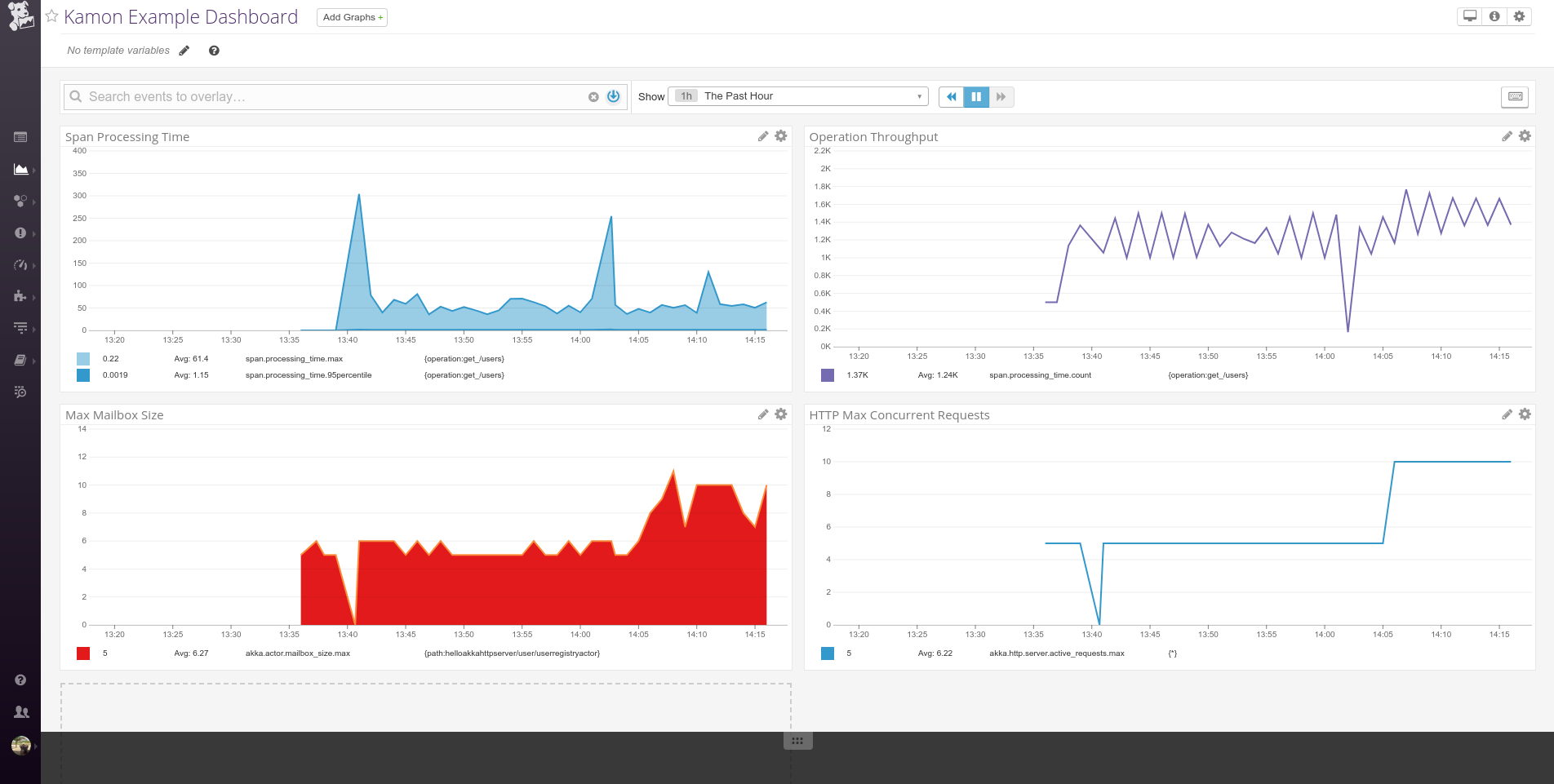

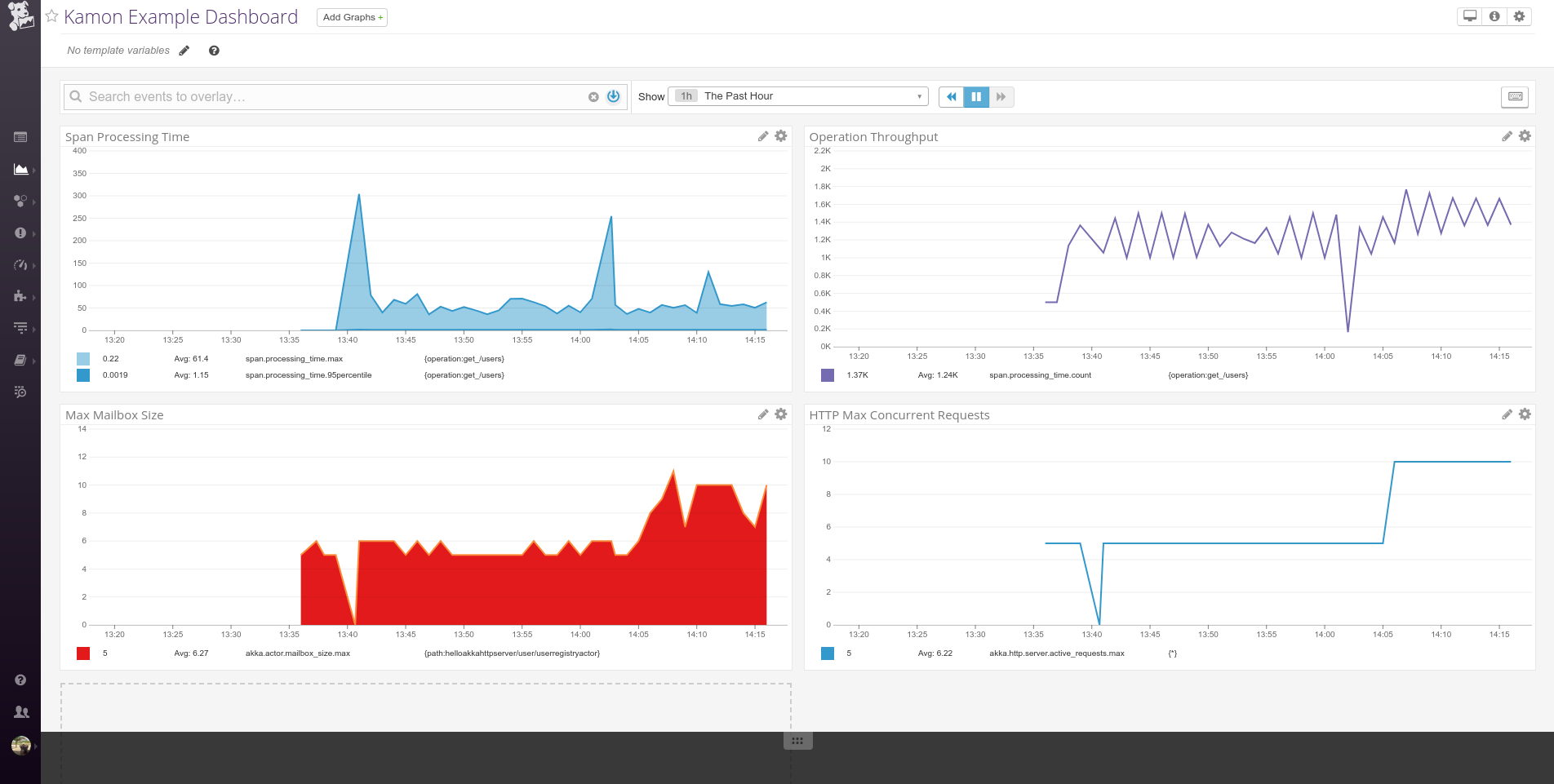

+Creating a dashboard in the Datadog user interface is really simple: all metric names will match the Kamon metric names

+with the additional "qualifier" suffix. Here is a very simple example of a dashboard created with metrics reported by Kamon:
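
Reviewer note: the README change above says that histogram-backed metrics are posted as six suffixed series. The following is only a minimal sketch of that naming scheme, assuming a plain list of recorded values and a nearest-rank percentile. `DistributionSeries` and `expand` are hypothetical names for illustration and are not part of kamon-datadog, which works on Kamon's own distribution snapshots.

```scala
// Hypothetical helper, not part of kamon-datadog: expands one set of recorded
// values into the six suffixed series listed in the README.
object DistributionSeries {

  // Nearest-rank percentile over an already sorted, non-empty sequence.
  private def percentile(sorted: Vector[Long], p: Double): Long = {
    val rank = math.ceil(p / 100.0 * sorted.size).toInt
    sorted(math.max(rank, 1) - 1)
  }

  // Returns series name -> value; an empty input yields no series at all.
  def expand(name: String, values: Seq[Long]): Map[String, Double] =
    if (values.isEmpty) Map.empty
    else {
      val sorted = values.sorted.toVector
      Map(
        s"$name.avg"          -> sorted.sum.toDouble / sorted.size,
        s"$name.count"        -> sorted.size.toDouble,
        s"$name.median"       -> percentile(sorted, 50).toDouble,
        s"$name.95percentile" -> percentile(sorted, 95).toDouble,
        s"$name.max"          -> sorted.last.toDouble,
        s"$name.min"          -> sorted.head.toDouble
      )
    }
}
```

In the reporter itself only `.count` is sent with the `count` type; the other five series are sent as gauges.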


diff --git a/src/main/scala/kamon/datadog/DatadogAPIReporter.scala b/src/main/scala/kamon/datadog/DatadogAPIReporter.scala

index 7310d97..8723951 100644

--- a/src/main/scala/kamon/datadog/DatadogAPIReporter.scala

+++ b/src/main/scala/kamon/datadog/DatadogAPIReporter.scala

@@ -37,15 +37,11 @@ class DatadogAPIReporter extends MetricReporter {

private val logger = LoggerFactory.getLogger(classOf[DatadogAPIReporter])

private val symbols = DecimalFormatSymbols.getInstance(Locale.US)

-

- private val jsonType = MediaType.parse("application/json; charset=utf-8")

-

symbols.setDecimalSeparator('.') // Just in case there is some weird locale config we are not aware of.

+ private val jsonType = MediaType.parse("application/json; charset=utf-8")

private val valueFormat = new DecimalFormat("#0.#########", symbols)

-

private var configuration = readConfiguration(Kamon.config())

-

private var httpClient: OkHttpClient = createHttpClient(configuration)

override def start(): Unit = {

@@ -78,6 +74,7 @@ class DatadogAPIReporter extends MetricReporter {

val timestamp = snapshot.from.getEpochSecond.toString

val host = Kamon.environment.host

+ val interval = Math.round(Duration.between(snapshot.from, snapshot.to).toMillis() / 1000D)

val seriesBuilder = new StringBuilder()

def addDistribution(metric: MetricDistribution): Unit = {

@@ -86,7 +83,7 @@ class DatadogAPIReporter extends MetricReporter {

val average = if (distribution.count > 0L) (distribution.sum / distribution.count) else 0L

addMetric(name + ".avg", valueFormat.format(scale(average, unit)), gauge, metric.tags)

addMetric(name + ".count", valueFormat.format(distribution.count), count, metric.tags)

- addMetric(name + ".median", valueFormat.format(distribution.percentile(50D).value), gauge, metric.tags)

+ addMetric(name + ".median", valueFormat.format(scale(distribution.percentile(50D).value, unit)), gauge, metric.tags)

addMetric(name + ".95percentile", valueFormat.format(scale(distribution.percentile(95D).value, unit)), gauge, metric.tags)

addMetric(name + ".max", valueFormat.format(scale(distribution.max, unit)), gauge, metric.tags)

addMetric(name + ".min", valueFormat.format(scale(distribution.min, unit)), gauge, metric.tags)

@@ -99,7 +96,7 @@ class DatadogAPIReporter extends MetricReporter {

if (seriesBuilder.length() > 0) seriesBuilder.append(",")

seriesBuilder

- .append(s"""{"metric":"$metricName","points":[[$timestamp,$value]],"type":"$metricType","host":"$host","tags":$allTagsString}""")

+ .append(s"""{"metric":"$metricName","interval":$interval,"points":[[$timestamp,$value]],"type":"$metricType","host":"$host","tags":$allTagsString}""")

}

def add(metric: MetricValue, metricType: String): Unit =

@@ -117,9 +114,13 @@ class DatadogAPIReporter extends MetricReporter {

}

private def scale(value: Long, unit: MeasurementUnit): Double = unit.dimension match {

- case Time if unit.magnitude != time.seconds.magnitude => MeasurementUnit.scale(value, unit, time.seconds)

- case Information if unit.magnitude != information.bytes.magnitude => MeasurementUnit.scale(value, unit, information.bytes)

- case _ => value.toDouble

+ case Time if unit.magnitude != configuration.timeUnit.magnitude =>

+ MeasurementUnit.scale(value, unit, configuration.timeUnit)

+

+ case Information if unit.magnitude != configuration.informationUnit.magnitude =>

+ MeasurementUnit.scale(value, unit, configuration.informationUnit)

+

+ case _ => value.toDouble

}

// Apparently okhttp doesn't require explicit closing of the connection

@@ -149,7 +150,6 @@ class DatadogAPIReporter extends MetricReporter {

private object DatadogAPIReporter {

val apiUrl = "https://app.datadoghq.com/api/v1/series?api_key="

-

val count = "count"

val gauge = "gauge"
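
Reviewer note: two behaviours change in this file. Values are now scaled to the configured time and information units instead of always to seconds and bytes, and each series carries an `interval` derived from the snapshot period. The sketch below illustrates both under simplifying assumptions: `SimpleUnit` is a hypothetical stand-in for Kamon's `MeasurementUnit`, the factors (including 1024 bytes per kilobyte) are assumed for the example, and the target unit is passed in explicitly rather than read from the reporter configuration.

```scala
import java.time.{Duration, Instant}

object ScalingSketch {
  // Hypothetical stand-in for Kamon's MeasurementUnit: a unit is described by
  // its dimension and its size relative to the base unit (seconds or bytes).
  final case class SimpleUnit(dimension: String, factor: Double)

  val nanoseconds  = SimpleUnit("time", 1e-9)
  val milliseconds = SimpleUnit("time", 1e-3)
  val bytes        = SimpleUnit("information", 1.0)
  val kilobytes    = SimpleUnit("information", 1024.0) // assumed binary kilobyte

  // Converts only when both units share a dimension and differ in size;
  // otherwise the raw value is passed through, as in the `case _` branch above.
  def scale(value: Long, from: SimpleUnit, to: SimpleUnit): Double =
    if (from.dimension != to.dimension || from.factor == to.factor) value.toDouble
    else value * (from.factor / to.factor)

  // Same rounding as the new `interval` field: snapshot length in whole seconds.
  def intervalSeconds(from: Instant, to: Instant): Long =
    Math.round(Duration.between(from, to).toMillis / 1000D)
}
```

For example, `ScalingSketch.scale(2048L, ScalingSketch.bytes, ScalingSketch.kilobytes)` converts a byte count to kilobytes, while a value whose dimension does not match the target is returned unchanged.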
