This work has been published in ICRA 2022 and can be found here. Please contact Xingtong Liu (xliu89jhu@gmail.com) or Mathias Unberath (unberath@jhu.edu) if you have any questions.

If you find our work relevant, please consider citing it as

    @INPROCEEDINGS{liu2022sage,
      author={Liu, Xingtong and Li, Zhaoshuo and Ishii, Masaru and Hager, Gregory D. and Taylor, Russell H. and Unberath, Mathias},
      booktitle={2022 International Conference on Robotics and Automation (ICRA)},
      title={SAGE: SLAM with Appearance and Geometry Prior for Endoscopy},
      year={2022},
      volume={},
      number={},
      pages={5587-5593},
      doi={10.1109/ICRA46639.2022.9812257}}

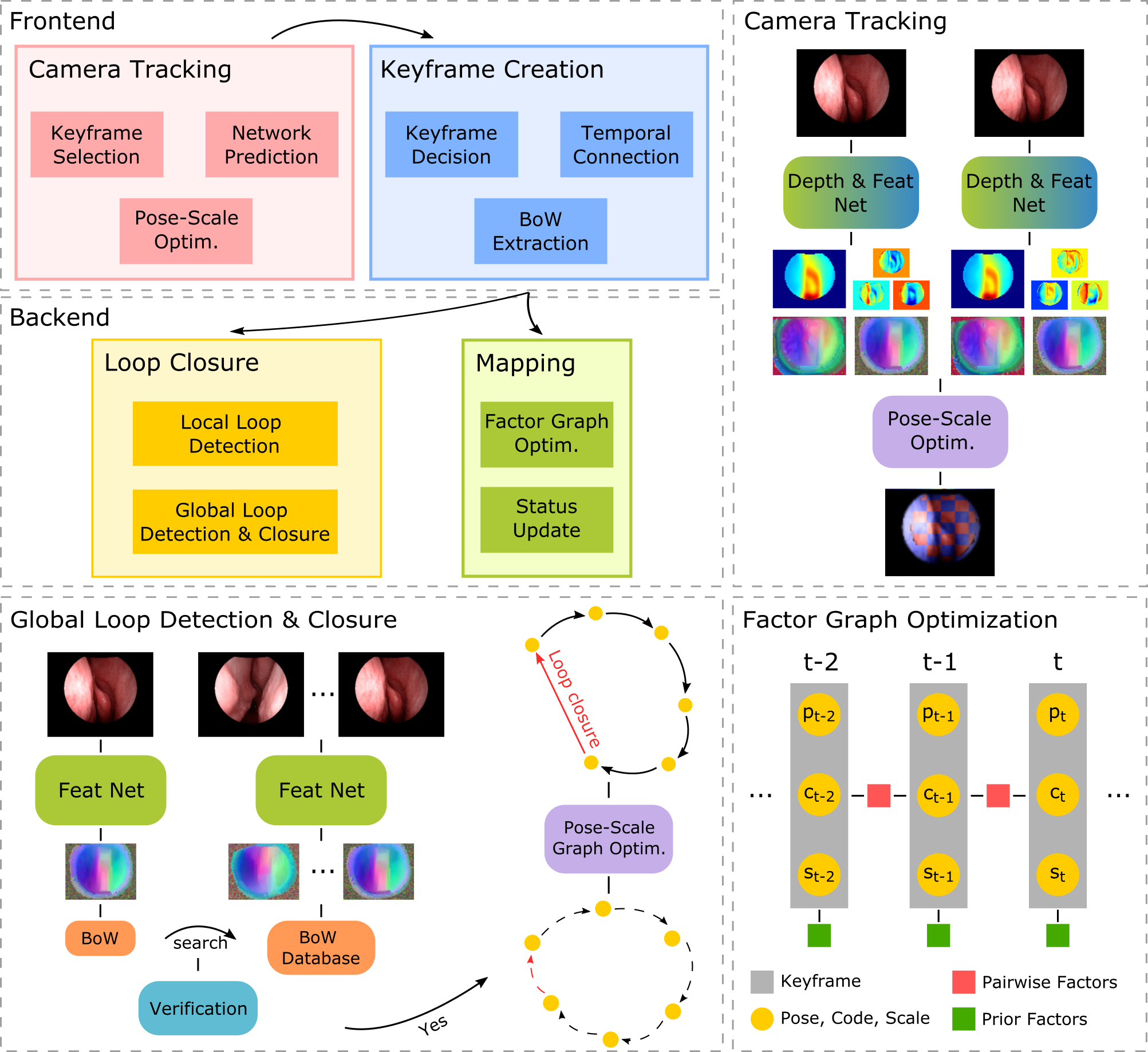

SAGE-SLAM system diagram:

ICRA 2022 supplementary video (YouTube video):

Fly-through of surface reconstruction:

For each GIF above, from left to right are the original endoscopic video, the textured rendering of the surface reconstruction through the camera trajectory from the SLAM system, the depth rendering of the reconstruction, and the dense depth map estimated from the SLAM system. For each sequence, the surface reconstruction is generated using volumetric TSDF with the dense depth maps and camera poses of all keyframes from the SLAM system as input. Note that all sequences above were unseen during the representation learning.
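To make the fusion step concrete, here is a minimal NumPy sketch of volumetric TSDF integration for a single keyframe. It is not the code used to produce the reconstructions above; it only illustrates the general technique. The pinhole intrinsics `K`, the 4x4 camera-to-world pose, the dense voxel grid, and every function and parameter name are assumptions made for this example.

```python
import numpy as np


def integrate_depth(tsdf, weight, origin, voxel_size, depth, K, cam_to_world, trunc):
    """Fuse one depth map into a dense TSDF grid (arrays are updated in place).

    tsdf, weight : C-contiguous (X, Y, Z) float arrays (running average and its weight)
    origin       : world coordinates of the centre of voxel (0, 0, 0)
    depth        : (H, W) depth map in the same unit as voxel_size; 0 marks invalid pixels
    K            : 3x3 pinhole intrinsics (assumed camera model)
    cam_to_world : 4x4 camera pose of the keyframe
    trunc        : truncation distance of the signed distance
    """
    h, w = depth.shape
    # World coordinates of all voxel centres, in the same order as tsdf.reshape(-1).
    grid = np.meshgrid(*[np.arange(n) for n in tsdf.shape], indexing="ij")
    idx = np.stack(grid, axis=-1).reshape(-1, 3)
    pts_world = np.asarray(origin, dtype=float) + voxel_size * idx
    # Move the voxel centres into the camera frame and project them.
    world_to_cam = np.linalg.inv(cam_to_world)
    pts_cam = pts_world @ world_to_cam[:3, :3].T + world_to_cam[:3, 3]
    z = pts_cam[:, 2]
    in_front = z > 1e-6
    z_safe = np.where(in_front, z, 1.0)  # avoids division by zero behind the camera
    u = np.round(K[0, 0] * pts_cam[:, 0] / z_safe + K[0, 2]).astype(int)
    v = np.round(K[1, 1] * pts_cam[:, 1] / z_safe + K[1, 2]).astype(int)
    visible = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    d = np.zeros_like(z)
    d[visible] = depth[v[visible], u[visible]]
    # Signed distance along the optical axis: positive in front of the surface.
    sdf = d - z
    update = visible & (d > 0) & (sdf >= -trunc)
    new_val = np.clip(sdf / trunc, -1.0, 1.0)
    # reshape(-1) returns a view for C-contiguous arrays, so the grid is modified.
    flat_tsdf, flat_w = tsdf.reshape(-1), weight.reshape(-1)
    flat_tsdf[update] = (flat_tsdf[update] * flat_w[update] + new_val[update]) / (flat_w[update] + 1.0)
    flat_w[update] += 1.0
```

Calling this function once per keyframe, with that keyframe's depth map and pose, accumulates a weighted average of truncated signed distances. A surface mesh can then be extracted from the zero level set of the grid, for example with marching cubes.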

1. Clone this repository with

       git clone git@github.com:lppllppl920/SAGE-SLAM.git

2. Download an example dataset from this link. (To generate an HDF5 file from your own dataset for training, you can follow this repo. Note that you will need to store the `depth_image` and `mask_image` rendered in this code block under the `render_depth` and `render_mask` keys of the HDF5 file created.)

3. Create a `data` folder inside the cloned repository and put the downloaded folder `bag_1` inside the `data` folder.

4. After the steps above, the command `tree -d -L 2 <path of the cloned repository>` should show the following folder structure:

       ├── data
       │   └── bag_1
       ├── pretrained
       ├── representation
       │   ├── configs
       │   ├── datasets
       │   ├── losses
       │   ├── models
       │   ├── scripts
       │   └── utils
       └── system
           ├── configs
           ├── sources
           └── thirdparty

5. Install the Docker Engine with the instructions here and here, build a Docker image, and start a Docker container created from the built Docker image. Note that `PW` in the `docker build` command can be set to any string; it becomes the password for `sudo` privilege inside the Docker container. Note that steps 6, 7, and 8 below are optional if you only want to test run the SAGE-SLAM system, because we have pre-generated all required data.

       cd <path of the cloned repository> && \
       docker build \
           --build-arg UID=$(id -u) \
           --build-arg GID=$(id -g) \
           --build-arg UNAME=$(whoami) \
           --build-arg PW=<password of your choice> \
           -f Dockerfile \
           -t sage-slam \
           . && \
       docker run \
           -it \
           --privileged \
           --env DISPLAY=$DISPLAY \
           -v /tmp/.X11-unix:/tmp/.X11-unix:rw \
           -v $HOME/.Xauthority:$HOME/.Xauthority:rw \
           --gpus=all \
           --ipc=host \
           --net=host \
           --mount type=bind,source=<path of the cloned repository>,target=$HOME \
           --mount type=bind,source=/tmp,target=/tmp \
           --name sage-slam \
           sage-slam

   Note that some of the options in the `docker run` command are there to enable X11 display inside the Docker container. Run `sudo apt install -y firefox` and then `firefox` within the container to install the Firefox browser and open it, which tests whether the X11 display is working. Recent versions of macOS seem to have problems supporting the X11 display used by the third-party library `Pangolin` of this repository. In this case, the GUI can be disabled when the SLAM system is run, as described later.

6. Now the current working directory should be the home directory of the Docker container. To start the representation learning process, run the following command:

       cd $HOME && \
       /opt/conda/bin/python $HOME/representation/training.py \
           --config_path "$HOME/representation/configs/training.json"

   Note that a set of pre-trained network models is provided inside the `$HOME/pretrained` folder. With the settings specified in `$HOME/representation/configs/training.json`, these pre-trained models are loaded. Set `net_load_weights` inside `training.json` to `false` if you want to train the networks from scratch.

7. To visualize the TensorBoard outputs during the training process, open a new terminal outside the Docker container and run the following command:

       tensorboard --logdir="/tmp/SAGE-SLAM_<time of the experiment>" \
           --host=127.0.0.1 \
           --port=6006

   Then open a compatible browser (such as Google Chrome) and go to `http://localhost:6006/` to open the TensorBoard dashboard. Note that the value of the option `logdir` should be the path of the experiment whose results you want to inspect.

8. Inside the Docker container, to generate the PyTorch JIT ScriptModules that will be used in the SAGE-SLAM system, change `net_depth_model_path`, `net_feat_model_path`, `net_ba_model_path`, and `net_disc_model_path` inside `$HOME/representation/configs/export.json` to the corresponding model paths and run the following command:

       cd $HOME && \
       /opt/conda/bin/python $HOME/representation/training.py \
           --config_path "$HOME/representation/configs/export.json"

9. To build the SAGE-SLAM system implemented in C++, run the following command:

       SLAM_BUILD_TYPE=Release && \
       $HOME/system/thirdparty/makedeps_with_argument.sh $SLAM_BUILD_TYPE && \
       mkdir -p $HOME/build/$SLAM_BUILD_TYPE && \
       cd $HOME/build/$SLAM_BUILD_TYPE && \
       cmake -DCMAKE_BUILD_TYPE=$SLAM_BUILD_TYPE $HOME/system/ && \
       make -j6 && \
       cd $HOME

   Note that `SLAM_BUILD_TYPE` can be changed to `Debug` to enable debugging if you want to further develop the SLAM system. After this command has run, `tree -d -L 3 $HOME` inside the Docker container should show the following folder structure:

       ├── build
       │   └── Release
       │       ├── bin
       │       ├── CMakeFiles
       │       ├── sources
       │       └── thirdparty
       ├── data
       │   └── bag_1
       │       ├── _start_002603_end_002984_stride_1000_segment_00
       │       ├── _start_003213_end_003527_stride_1000_segment_00
       │       └── _start_004259_end_004629_stride_1000_segment_00
       ├── pretrained
       ├── representation
       │   ├── configs
       │   ├── datasets
       │   ├── losses
       │   ├── models
       │   ├── scripts
       │   └── utils
       └── system
           ├── configs
           ├── sources
           │   ├── common
           │   ├── core
           │   ├── cuda
           │   ├── demo
           │   ├── drivers
           │   ├── gui
           │   └── tools
           └── thirdparty
               ├── build_Release
               ├── camera_drivers
               ├── DBoW2
               ├── eigen
               ├── gtsam
               ├── install_Release
               ├── opengv
               ├── Pangolin
               ├── Sophus
               ├── TEASER-plusplus
               └── vision_core

10. Run the SAGE-SLAM system with the following command:

        SLAM_BUILD_TYPE=Release && \
        cd $HOME && \
        LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HOME/system/thirdparty/install_$SLAM_BUILD_TYPE/lib \
        MESA_GL_VERSION_OVERRIDE=3.3 \
        $HOME/build/$SLAM_BUILD_TYPE/bin/df_demo \
            --flagfile $HOME/system/configs/slam_run.flags \
            --enable_gui=false

    Note that the X11 display should work normally when the host operating system is Linux. In this case, the option `enable_gui` can be set to `true` to bring up the GUI of the SLAM system. If `enable_gui` is set to `true`, appending `--v=1` to the command above will show, besides the common SLAM GUI, more output messages and an image of the most recent loop pair whenever a global loop is detected. Changing the verbosity option to `--v=3` will display even more messages and images for debugging purposes.

11. If you would like to run the system outside the Docker container, you will need to manually set up the environment (libraries, packages, etc.) in the same way as specified in the `Dockerfile`.
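The configuration changes in steps 6 and 8 (switching `net_load_weights` off, or pointing the four `net_*_model_path` entries at trained models) can also be scripted instead of edited by hand. The helper below is a minimal sketch, assuming that `training.json` and `export.json` are plain JSON objects with these options as top-level keys. The function name `update_config` is hypothetical and not part of this repository.

```python
import json
from pathlib import Path


def update_config(config_path, updates):
    """Overwrite selected top-level keys of a JSON config file and return the new dict.

    Assumes a flat JSON object. Keys that are not already present are rejected,
    so a misspelled option name does not silently create a new entry.
    """
    path = Path(config_path)
    config = json.loads(path.read_text())
    unknown = [key for key in updates if key not in config]
    if unknown:
        raise KeyError(f"keys not present in {path}: {unknown}")
    config.update(updates)
    path.write_text(json.dumps(config, indent=4) + "\n")
    return config
```

For example, `update_config("representation/configs/training.json", {"net_load_weights": False})` would correspond to training from scratch. Passing the four model-path keys with `export.json` would prepare the export in step 8.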

As mentioned in the paper related to this repository, more details of the method are provided here.
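For step 7, the `logdir` value has to name one specific experiment folder. A small helper can pick the most recently modified one. This is only a sketch, assuming that each training run writes to a directory named `/tmp/SAGE-SLAM_<time of the experiment>` as in the `tensorboard` command of that step. The function name is hypothetical.

```python
from pathlib import Path


def latest_experiment_dir(root="/tmp", prefix="SAGE-SLAM_"):
    """Return the most recently modified directory under root whose name starts with prefix.

    Returns None when no such directory exists.
    """
    candidates = [p for p in Path(root).glob(prefix + "*") if p.is_dir()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

The returned path can be passed directly as the `--logdir` value.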