diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index a9fdf310a..2969aa6d7 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -23,7 +23,7 @@ Help and contributions are very welcome in the form of code contributions but al

1. If working on an issue, signal other contributors that you are actively working on it by commenting on it. Wait for approval in form of someone assigning you to the issue.

2. Fork the desired repo, develop and test your code changes.

- 1. See the [Development Guide](docs/developers/development.md) for more instructions on setting up your environment and testing changes locally.

+ 1. See the [Development Guide](/docs/caph/04-developers/01-development-guide.md) for more instructions on setting up your environment and testing changes locally.

3. Submit a pull request.

1. All code PR must be created in "draft mode". This helps other contributors by not blocking E2E tests, which cannot run in parallel. After your PR is approved, you can mark it "ready for review".

1. All code PR must be have a title starting with one of

@@ -41,4 +41,4 @@ Help and contributions are very welcome in the form of code contributions but al

All changes must be code reviewed. Coding conventions and standards are explained in the official [developer docs](https://github.com/kubernetes/community/tree/master/contributors/devel). Expect reviewers to request that you avoid common [go style mistakes](https://github.com/golang/go/wiki/CodeReviewComments) in your PRs.

-In case you want to run our E2E tests locally, please refer to [Testing](docs/developers/development.md#submitting-prs-and-testing) guide.

+In case you want to run our E2E tests locally, please refer to [Testing](/docs/caph/04-developers/01-development-guide.md#submitting-prs-and-testing) guide.

diff --git a/README.md b/README.md

index 6bee07253..ae785bd71 100644

--- a/README.md

+++ b/README.md

@@ -3,9 +3,9 @@

@@ -77,11 +77,11 @@ If you don't have a dedicated team for managing Kubernetes, you can use [Syself

Ready to dive in? Here are some resources to get you started:

-- [**Cluster API Provider Hetzner 15 Minute Tutorial**](docs/topics/quickstart.md): Set up a bootstrap cluster using Kind and deploy a Kubernetes cluster on Hetzner.

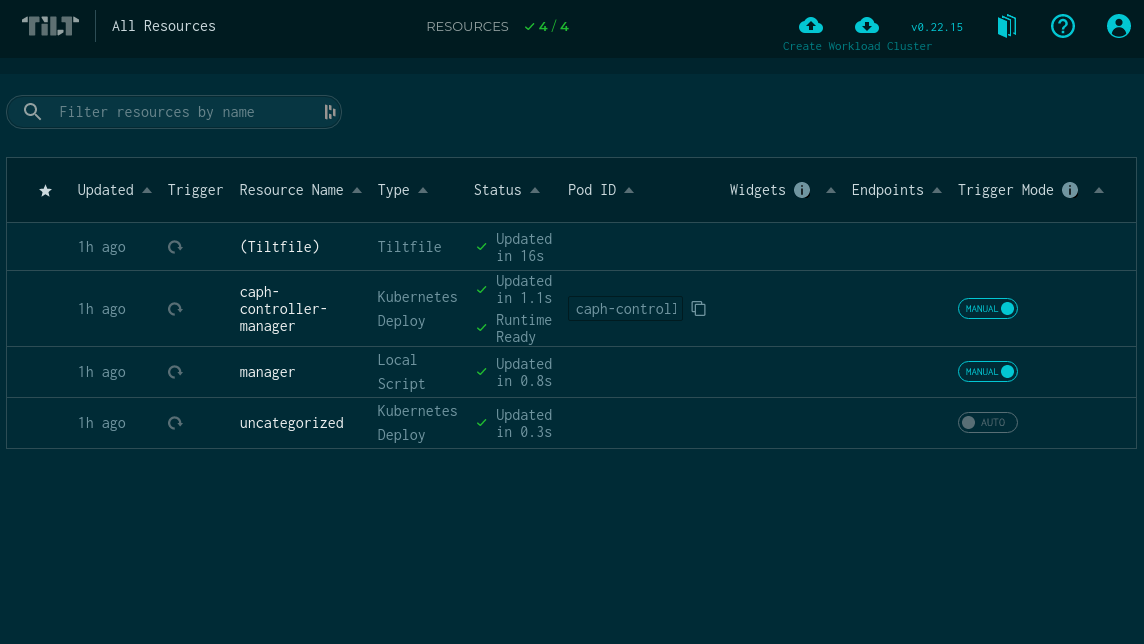

-- [**Develop and test Kubernetes clusters with Tilt**](docs/developers/development.md): Start using Tilt for rapid testing of various cluster flavors, like with/without a private network or bare metal.

-- [**Develop and test your own node-images**](docs/topics/node-image.md): Learn how to use your own machine images for production systems.

+- [**Cluster API Provider Hetzner 15 Minute Tutorial**](/docs/caph/01-getting-started/03-quickstart.md): Set up a bootstrap cluster using Kind and deploy a Kubernetes cluster on Hetzner.

+- [**Develop and test Kubernetes clusters with Tilt**](/docs/caph/04-developers/01-development-guide.md): Start using Tilt for rapid testing of various cluster flavors, like with/without a private network or bare metal.

+- [**Develop and test your own node-images**](/docs/caph/02-topics/02-node-image.md): Learn how to use your own machine images for production systems.

-In addition to the pure creation and operation of Kubernetes clusters, this provider can also validate and approve certificate signing requests. This increases security as the kubelets of the nodes can be operated with signed certificates, and enables the metrics-server to run securely. [Click here](docs/topics/advanced-caph.md#csr-controller) to read more about the CSR controller.

+In addition to the pure creation and operation of Kubernetes clusters, this provider can also validate and approve certificate signing requests. This increases security because the kubelets of the nodes can be operated with signed certificates, and it enables the metrics-server to run securely. [Click here](/docs/caph/02-topics/04-advanced-caph.md#csr-controller) to read more about the CSR controller.

## 🖇️ Compatibility with Cluster API and Kubernetes Versions

@@ -121,21 +121,21 @@ Each version of Cluster API for Hetzner will attempt to support at least two Kub

> [!NOTE]

> Cluster API Provider Hetzner relies on a few prerequisites that must be already installed in the operating system images, such as a container runtime, kubelet, and Kubeadm.

>

-> Reference images are available in kubernetes-sigs/image-builder and [templates/node-image](templates/node-image).

+> Reference images are available in kubernetes-sigs/image-builder and [templates/node-image](/templates/node-image).

>

-> If pre-installation of these prerequisites isn't possible, [custom scripts can be deployed](docs/topics/node-image through the Kubeadm config.md).

+> If pre-installation of these prerequisites isn't possible, [custom scripts can be deployed](/docs/caph/02-topics/02-node-image) through the Kubeadm config.

---

## 📖 Documentation

-Documentation can be found in the `/docs` directory. [Here](docs/README.md) is an overview of our documentation.

+Documentation can be found in the `/docs` directory. [Here](/docs/README.md) is an overview of our documentation.

## 👥 Getting Involved and Contributing

We, maintainers and the community, welcome any contributions to Cluster API Provider Hetzner. For suggestions, contributions, and assistance, contact the maintainers anytime.

-To set up your environment, refer to the [development guide](docs/developers/development.md).

+To set up your environment, refer to the [development guide](/docs/caph/04-developers/01-development-guide.md).

For new contributors, check out issues tagged as [`good first issue`][good_first_issue]. These are typically smaller in scope and great for getting familiar with the codebase.

@@ -148,7 +148,7 @@ do!

## ⚖️ Code of Conduct

-Participation in the Kubernetes community is governed by the [Kubernetes Code of Conduct](code-of-conduct.md).

+Participation in the Kubernetes community is governed by the [Kubernetes Code of Conduct](/code-of-conduct.md).

## :shipit: GitHub Issues

diff --git a/docs/README.md b/docs/README.md

index c1448c2d1..6aa21df9b 100644

--- a/docs/README.md

+++ b/docs/README.md

@@ -2,27 +2,34 @@

This is the official documentation of Cluster API Provider Hetzner. Before starting with this documentation, you should have a basic understanding of Cluster API. Cluster API is a Kubernetes sub-project focused on providing a declarative API to manage Kubernetes clusters. Refer to the Cluster API quick start guide from their [official documentation](https://cluster-api.sigs.k8s.io/user/quick-start.html).

-## Quick start

+## Getting Started

-- [Preparation](topics/preparation.md)

-- [Getting started](topics/quickstart.md)

+- [Introduction](/docs/caph/01-getting-started/01-introduction.md)

+- [Preparation](/docs/caph/01-getting-started/02-preparation)

+- [Quickstart](/docs/caph/01-getting-started/03-quickstart)

## Topics

-- [Managing SSH Keys](topics/managing-ssh-keys.md)

-- [Node Images](topics/node-image.md)

-- [Production Environment](topics/production-environment.md)

-- [Advanced CAPH](topics/advanced-caph.md)

-- [Upgrading CAPH](topics/upgrade.md)

+- [Managing SSH Keys](/docs/caph/02-topics/01-managing-ssh-keys)

+- [Node Images](/docs/caph/02-topics/02-node-image)

+- [Production Environment](/docs/caph/02-topics/03-production-environment)

+- [Advanced CAPH](/docs/caph/02-topics/04-advanced-caph)

+- [Upgrading CAPH](/docs/caph/02-topics/05-upgrading-caph)

+- [Hetzner Baremetal](/docs/caph/02-topics/06-hetzner-baremetal)

## Reference

-- [General](reference/README.md)

-- [HetznerCluster](reference/hetzner-cluster.md)

-- [HCloudMachineTemplate](reference/hcloud-machine-template.md)

-- [HetznerBareMetalHost](reference/hetzner-bare-metal-host.md)

-- [HetznerBareMetalMachineTemplate](reference/hetzner-bare-metal-machine-template.md)

-- [HetznerBareMetalRemediationTemplate](reference/hetzner-bare-metal-remediation-template.md)

+

+- [General](/docs/caph/03-reference/01-introduction)

+- [HetznerCluster](/docs/caph/03-reference/02-hetzner-cluster)

+- [HCloudMachineTemplate](/docs/caph/03-reference/03-hcloud-machine-template)

+- [HCloudRemediationTemplate](/docs/caph/03-reference/04-hcloud-remediation-template)

+- [HetznerBareMetalHost](/docs/caph/03-reference/05-hetzner-bare-metal-host)

+- [HetznerBareMetalMachineTemplate](/docs/caph/03-reference/06-hetzner-bare-metal-machine-template)

+- [HetznerBareMetalRemediationTemplate](/docs/caph/03-reference/07-hetzner-bare-metal-remediation-template)

+

## Development

-- [Development guide](developers/development.md)

-- [Tilt](developers/tilt.md)

-- [Releasing](developers/releasing.md)

+

+- [Development guide](/docs/caph/04-developers/01-development-guide)

+- [Tilt](/docs/caph/04-developers/02-tilt)

+- [Releasing](/docs/caph/04-developers/03-releasing)

+- [Updating Kubernetes version](/docs/caph/04-developers/04-updating-kubernetes-version)

diff --git a/docs/caph/01-getting-started/01-introduction.md b/docs/caph/01-getting-started/01-introduction.md

new file mode 100644

index 000000000..b656fb014

--- /dev/null

+++ b/docs/caph/01-getting-started/01-introduction.md

@@ -0,0 +1,46 @@

+---

+title: Introduction

+---

+

+Welcome to the official documentation for the Cluster API Provider Hetzner (CAPH).

+

+## What is the Cluster API Provider Hetzner?

+

+CAPH is a Kubernetes Cluster API provider that facilitates the deployment and management of self-managed Kubernetes clusters on Hetzner infrastructure. The provider supports both cloud and bare-metal instances for consistent, scalable, and production-ready cluster operations.

+

+A basic understanding of Cluster API is recommended before getting started with CAPH. Refer to the Cluster API Quick Start Guide in the [official documentation](https://cluster-api.sigs.k8s.io/user/quick-start.html).

+

+## Compatibility with Cluster API and Kubernetes Versions

+

+This provider's versions are compatible with the following versions of Cluster API:

+

+| | Cluster API `v1beta1` (`v1.6.x`) | Cluster API `v1beta1` (`v1.7.x`) |

+| --------------------------------- | -------------------------------- | -------------------------------- |

+| Hetzner Provider `v1.0.0-beta.33` | ✅ | ❌ |

+| Hetzner Provider `v1.0.0-beta.34-35` | ❌ | ✅ |

+

+This provider's versions can install and manage the following versions of Kubernetes:

+

+| | Hetzner Provider `v1.0.x` |

+| ----------------- | ------------------------- |

+| Kubernetes 1.23.x | ✅ |

+| Kubernetes 1.24.x | ✅ |

+| Kubernetes 1.25.x | ✅ |

+| Kubernetes 1.26.x | ✅ |

+| Kubernetes 1.27.x | ✅ |

+| Kubernetes 1.28.x | ✅ |

+| Kubernetes 1.29.x | ✅ |

+| Kubernetes 1.30.x | ✅ |

+

+Test status:

+

+- ✅ tested

+- ❔ should work, but we weren't able to test it

+

+Each version of Cluster API for Hetzner will attempt to support at least two Kubernetes versions.

+

+{% callout %}

+

+As the versioning for this project is tied to the versioning of Cluster API, future modifications to this policy may be made to more closely align with other providers in the Cluster API ecosystem.

+

+{% /callout %}
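Before passing a version to `--kubernetes-version`, a small pre-flight check can catch versions outside the tested range. The following is a minimal POSIX shell sketch. The function name `is_tested_k8s_version` is hypothetical. The 1.23 to 1.30 bounds are copied from the table above, so they are an assumption that has to be updated whenever the table changes.

```shell
# Hypothetical helper: succeeds if a Kubernetes version such as "v1.29.4"
# falls in the 1.23.x to 1.30.x range marked as tested in the table above.
# The bounds are copied from that table and must be kept in sync with it.
is_tested_k8s_version() {
  version="${1#v}"          # "v1.29.4" -> "1.29.4"
  major="${version%%.*}"    # "1.29.4"  -> "1"
  rest="${version#*.}"      # "1.29.4"  -> "29.4"
  minor="${rest%%.*}"       # "29.4"    -> "29"

  # Reject anything that is not a plain non-negative integer.
  case "$major" in ''|*[!0-9]*) return 1 ;; esac
  case "$minor" in ''|*[!0-9]*) return 1 ;; esac

  [ "$major" -eq 1 ] && [ "$minor" -ge 23 ] && [ "$minor" -le 30 ]
}

# Example: check the version used in the quickstart guide.
if is_tested_k8s_version v1.29.4; then
  echo "v1.29.4 is in the tested range"
fi
```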

diff --git a/docs/topics/preparation.md b/docs/caph/01-getting-started/02-preparation.md

similarity index 89%

rename from docs/topics/preparation.md

rename to docs/caph/01-getting-started/02-preparation.md

index e99f95a82..091522bad 100644

--- a/docs/topics/preparation.md

+++ b/docs/caph/01-getting-started/02-preparation.md

@@ -1,4 +1,6 @@

-# Preparation

+---

+title: Preparation

+---

## Preparation of the Hetzner Project and Credentials

@@ -8,7 +10,7 @@ There are several tasks that have to be completed before a workload cluster can

1. Create a new [HCloud project](https://console.hetzner.cloud/projects).

2. Generate an API token with read and write access. You'll find this if you click on the project and go to "security".

-3. If you want to use it, generate an SSH key, upload the public key to HCloud (also via "security"), and give it a name. Read more about [Managing SSH Keys](managing-ssh-keys.md).

+3. Optionally, generate an SSH key, upload the public key to HCloud (also via "security"), and give it a name. Read more about [Managing SSH Keys](/docs/caph/02-topics/01-managing-ssh-keys).

### Preparing Hetzner Robot

@@ -16,6 +18,7 @@ There are several tasks that have to be completed before a workload cluster can

2. Generate an SSH key. You can either upload it via Hetzner Robot UI or just rely on the controller to upload a key that it does not find in the robot API. This is possible, as you have to store the public and private key together with the SSH key's name in a secret that the controller reads.

---

+

## Bootstrap or Management Cluster Installation

### Common Prerequisites

@@ -33,20 +36,23 @@ It is a common practice to create a temporary, local bootstrap cluster, which is

#### 1. Existing Management Cluster.

-For production use, a “real” Kubernetes cluster should be used with appropriate backup and Disaster Recovery policies and procedures in place. The Kubernetes cluster must be at least a [supported version](../../README.md#fire-compatibility-with-cluster-api-and-kubernetes-versions).

+For production use, a “real” Kubernetes cluster should be used with appropriate backup and Disaster Recovery policies and procedures in place. The Kubernetes cluster must be at least a [supported version](https://github.com/syself/cluster-api-provider-hetzner/blob/main/README.md#%EF%B8%8F-compatibility-with-cluster-api-and-kubernetes-versions).

#### 2. Kind.

[kind](https://kind.sigs.k8s.io/) can be used for creating a local Kubernetes cluster for development environments or for the creation of a temporary bootstrap cluster used to provision a target management cluster on the selected infrastructure provider.

---

+

## Install Clusterctl and initialize Management Cluster

### Install Clusterctl

+

Please use the instructions here: https://cluster-api.sigs.k8s.io/user/quick-start.html#install-clusterctl

or use: `make clusterctl`

### Initialize the management cluster

+

Now that we’ve got clusterctl installed and all the prerequisites are in place, we can transform the Kubernetes cluster into a management cluster by using the `clusterctl init` command. More information about clusterctl can be found [here](https://cluster-api.sigs.k8s.io/clusterctl/commands/commands.html).

For the latest version:

@@ -59,6 +65,7 @@ clusterctl init --core cluster-api --bootstrap kubeadm --control-plane kubeadm -

or for a specific [version](https://github.com/syself/cluster-api-provider-hetzner/releases): `--infrastructure hetzner:vX.X.X`

---

+

## Variable Preparation to generate a cluster-template.

```shell

@@ -72,14 +79,14 @@ export HCLOUD_CONTROL_PLANE_MACHINE_TYPE=cpx31 \

export HCLOUD_WORKER_MACHINE_TYPE=cpx31

```

-* HCLOUD_SSH_KEY: The SSH Key name you loaded in HCloud.

-* HCLOUD_REGION: https://docs.hetzner.com/cloud/general/locations/

-* HCLOUD_IMAGE_NAME: The Image name of your operating system.

-* HCLOUD_X_MACHINE_TYPE: https://www.hetzner.com/cloud#pricing

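+- `HCLOUD_SSH_KEY`: The name of the SSH key you uploaded to HCloud.
+- `HCLOUD_REGION`: The HCloud region (location) of the cluster; see https://docs.hetzner.com/cloud/general/locations/
+- `HCLOUD_IMAGE_NAME`: The name of the operating system image.
+- `HCLOUD_X_MACHINE_TYPE`: The server type of the control plane (`HCLOUD_CONTROL_PLANE_MACHINE_TYPE`) or worker (`HCLOUD_WORKER_MACHINE_TYPE`) machines; see https://www.hetzner.com/cloud#pricing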

-For a list of all variables needed for generating a cluster manifest (from the cluster-template.yaml), use `clusterctl generate cluster --infrastructure hetzner: --list-variables hetzner-cluster`:

+For a list of all variables needed for generating a cluster manifest (from the cluster-template.yaml), use `clusterctl generate cluster --infrastructure hetzner: --list-variables hetzner-cluster`:

-```

+```shell

$ clusterctl generate cluster --infrastructure hetzner: --list-variables hetzner-cluster

Required Variables:

- HCLOUD_CONTROL_PLANE_MACHINE_TYPE

@@ -96,9 +103,10 @@ Optional Variables:

### Create a secret for hcloud only

-In order for the provider integration hetzner to communicate with the Hetzner API ([HCloud API](https://docs.hetzner.cloud/), we need to create a secret with the access data. The secret must be in the same namespace as the other CRs.

+For the Hetzner provider integration to communicate with the Hetzner API ([HCloud API](https://docs.hetzner.cloud/)), we need to create a secret containing the access credentials. The secret must be in the same namespace as the other custom resources (CRs).

`export HCLOUD_TOKEN="" `

+

- HCLOUD_TOKEN: The project where your cluster will be placed. You have to get a token from your HCloud Project.

```shell

@@ -140,4 +148,4 @@ kubectl patch secret robot-ssh -p '{"metadata":{"labels":{"clusterctl.cluster.x-

The secret name and the tokens can also be customized in the cluster template.

-See [node-image](./node-image.md) for more information.

+See [node-image](/docs/caph/02-topics/02-node-image) for more information.

diff --git a/docs/topics/quickstart.md b/docs/caph/01-getting-started/03-quickstart.md

similarity index 83%

rename from docs/topics/quickstart.md

rename to docs/caph/01-getting-started/03-quickstart.md

index d367b2755..97f146de3 100644

--- a/docs/topics/quickstart.md

+++ b/docs/caph/01-getting-started/03-quickstart.md

@@ -1,8 +1,14 @@

-# Quickstart Guide

+---

+title: Quickstart Guide

+---

This guide goes through all the necessary steps to create a cluster on Hetzner infrastructure (on HCloud).

->Note: The cluster templates used in the repository and in this guide for creating clusters are for development purposes only. These templates are not advised to be used in the production environment. However, the software is production-ready and users use it in their production environment. Make your clusters production-ready with the help of Syself Autopilot. For more information, contact .

+{% callout %}

+

+The cluster templates used in this repository and in this guide are intended for development purposes only and are not recommended for production environments. The software itself, however, is production-ready and is already used in production. Make your clusters production-ready with the help of Syself Autopilot. For more information, contact .

+

+{% /callout %}

## Prerequisites

@@ -37,7 +43,7 @@ There are several tasks that have to be completed before a workload cluster can

1. Create a new [HCloud project](https://console.hetzner.cloud/projects).

1. Generate an API token with read and write access. You'll find this if you click on the project and go to "security".

-1. If you want to use it, generate an SSH key, upload the public key to HCloud (also via "security"), and give it a name. Read more about [Managing SSH Keys](managing-ssh-keys.md).

+1. Optionally, generate an SSH key, upload the public key to HCloud (also via "security"), and give it a name. Read more about [Managing SSH Keys](/docs/caph/02-topics/01-managing-ssh-keys).

### Bootstrap or Management Cluster Installation

@@ -56,20 +62,23 @@ It is a common practice to create a temporary, local bootstrap cluster, which is

#### 1. Existing Management Cluster.

-For production use, a “real” Kubernetes cluster should be used with appropriate backup and DR policies and procedures in place. The Kubernetes cluster must be at least a [supported version](../../README.md#fire-compatibility-with-cluster-api-and-kubernetes-versions).

+For production use, a “real” Kubernetes cluster should be used with appropriate backup and DR policies and procedures in place. The Kubernetes cluster must be at least a [supported version](https://github.com/syself/cluster-api-provider-hetzner/blob/main/README.md#%EF%B8%8F-compatibility-with-cluster-api-and-kubernetes-versions).

#### 2. Kind.

[kind](https://kind.sigs.k8s.io/) can be used for creating a local Kubernetes cluster for development environments or for the creation of a temporary bootstrap cluster used to provision a target management cluster on the selected infrastructure provider.

---

+

### Install Clusterctl and initialize Management Cluster

#### Install Clusterctl

+

To install Clusterctl, refer to the instructions available in the official ClusterAPI documentation [here](https://cluster-api.sigs.k8s.io/user/quick-start.html#install-clusterctl).

Alternatively, use the `make install-clusterctl` command to do the same.

#### Initialize the management cluster

+

Now that we’ve got clusterctl installed and all the prerequisites are in place, we can transform the Kubernetes cluster into a management cluster by using the `clusterctl init` command. More information about clusterctl can be found [here](https://cluster-api.sigs.k8s.io/clusterctl/commands/commands.html).

For the latest version:

@@ -78,9 +87,14 @@ For the latest version:

clusterctl init --core cluster-api --bootstrap kubeadm --control-plane kubeadm --infrastructure hetzner

```

->Note: For a specific version, use the `--infrastructure hetzner:vX.X.X` flag with the above command.

+{% callout %}

+

+For a specific version, use the `--infrastructure hetzner:vX.X.X` flag with the above command.

+

+{% /callout %}

---

+

### Variable Preparation to generate a cluster-template

```shell

@@ -94,16 +108,17 @@ export HCLOUD_CONTROL_PLANE_MACHINE_TYPE=cpx31 \

export HCLOUD_WORKER_MACHINE_TYPE=cpx31

```

-* **HCLOUD_SSH_KEY**: The SSH Key name you loaded in HCloud.

-* **HCLOUD_REGION**: The region of the Hcloud cluster. Find the full list of regions [here](https://docs.hetzner.com/cloud/general/locations/).

-* **HCLOUD_IMAGE_NAME**: The Image name of the operating system.

-* **HCLOUD_X_MACHINE_TYPE**: The type of the Hetzner cloud server. Find more information [here](https://www.hetzner.com/cloud#pricing).

+- **HCLOUD_SSH_KEY**: The name of the SSH key you uploaded to HCloud.
+- **HCLOUD_REGION**: The region of the HCloud cluster. Find the full list of regions [here](https://docs.hetzner.com/cloud/general/locations/).
+- **HCLOUD_IMAGE_NAME**: The name of the operating system image.
+- **HCLOUD_X_MACHINE_TYPE**: The type of the Hetzner Cloud server for control plane or worker machines. Find more information [here](https://www.hetzner.com/cloud#pricing).
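`clusterctl generate cluster` refuses to render a template while a required variable is unset. For that reason, it can help to check the exports before running it. The following is a minimal POSIX shell sketch. The helper name `check_required_vars` is hypothetical. The variable list mirrors the exports above, so compare it with the `--list-variables` output for your template version.

```shell
# Hypothetical helper: prints "missing: NAME" for every listed variable that is
# unset or empty, and returns 1 if at least one is missing.
check_required_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup that also works in POSIX sh. The names come from the
    # fixed list passed below, not from user input, so eval is safe here.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

check_required_vars HCLOUD_SSH_KEY HCLOUD_REGION HCLOUD_IMAGE_NAME \
  HCLOUD_CONTROL_PLANE_MACHINE_TYPE HCLOUD_WORKER_MACHINE_TYPE \
  || echo "Export the missing variables before running clusterctl generate cluster."
```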

For a list of all variables needed for generating a cluster manifest (from the cluster-template.yaml), use the following command:

-````shell

+```shell

clusterctl generate cluster my-cluster --list-variables

-````

+```

+

Running the above command will give you an output in the following manner:

```shell

@@ -144,15 +159,25 @@ The secret name and the tokens can also be customized in the cluster template.

## Generating the cluster.yaml

The `clusterctl generate cluster` command returns a YAML template for creating a workload cluster.

-It generates a YAML file named `my-cluster.yaml` with a predefined list of Cluster API objects (`Cluster`, `Machines`, `MachineDeployments`, etc.) to be deployed in the current namespace.

+The following command redirects this template into a file named `my-cluster.yaml`, which contains a predefined list of Cluster API objects (`Cluster`, `Machines`, `MachineDeployments`, etc.) to be deployed in the current namespace.

```shell

clusterctl generate cluster my-cluster --kubernetes-version v1.29.4 --control-plane-machine-count=3 --worker-machine-count=3 > my-cluster.yaml

```

->Note: With the `--target-namespace` flag, you can specify a different target namespace.

+

+{% callout %}

+

+With the `--target-namespace` flag, you can specify a different target namespace.

+

Run the `clusterctl generate cluster --help` command for more information.

->**Note**: Please note that ready-to-use Kubernetes configurations, production-ready node images, kubeadm configuration, cluster add-ons like CNI, and similar services need to be separately prepared or acquired to ensure a comprehensive and secure Kubernetes deployment. This is where **Syself Autopilot** comes into play, taking on these challenges to offer you a seamless, worry-free Kubernetes experience. Feel free to contact us via e-mail: .

+{% /callout %}
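The sketch below wraps the generate command shown above to illustrate `--target-namespace`. The function name `generate_cluster_manifest` and its namespace argument are hypothetical. The target namespace has to exist on the management cluster before the manifest is applied. It must also hold the HCloud secret, since that secret has to share a namespace with the other CRs.

```shell
# Hypothetical wrapper: render the workload cluster manifest for namespace $2
# and write it to "<cluster-name>.yaml". Flags match the command shown above.
generate_cluster_manifest() {
  cluster_name="$1"
  namespace="$2"
  clusterctl generate cluster "$cluster_name" \
    --kubernetes-version v1.29.4 \
    --control-plane-machine-count=3 \
    --worker-machine-count=3 \
    --target-namespace "$namespace" > "${cluster_name}.yaml"
}

# Example usage (the namespace must exist before the manifest is applied):
#   kubectl create namespace my-namespace
#   generate_cluster_manifest my-cluster my-namespace
```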

+

+{% callout %}

+

+Ready-to-use Kubernetes configurations, production-ready node images, kubeadm configuration, cluster add-ons such as a CNI, and similar services must be prepared or acquired separately to achieve a complete and secure Kubernetes deployment. This is where **Syself Autopilot** comes into play, taking on these challenges to offer you a seamless, worry-free Kubernetes experience. Feel free to contact us via e-mail: .

+

+{% /callout %}

## Applying the workload cluster

@@ -182,7 +207,11 @@ To verify the first control plane is up, use the following command:

kubectl get kubeadmcontrolplane

```

->Note: The control plane won’t be `ready` until we install a CNI in the next step.

+{% callout %}

+

+The control plane won’t be `ready` until we install a CNI in the next step.

+

+{% /callout %}

After the first control plane node is up and running, we can retrieve the kubeconfig of the workload cluster with:

@@ -205,7 +234,11 @@ KUBECONFIG=$CAPH_WORKER_CLUSTER_KUBECONFIG helm upgrade --install cilium cilium/

You can, of course, also install an alternative CNI, e.g., calico.

->Note: There is a bug in Ubuntu that requires the older version of Cilium for this quickstart guide.

+{% callout %}

+

+Due to a bug in Ubuntu, this quickstart guide requires an older version of Cilium.

+

+{% /callout %}

## Deploy the CCM

@@ -215,7 +248,6 @@ The following `make` command will install the CCM in your workload cluster:

`make install-ccm-in-wl-cluster PRIVATE_NETWORK=false`

-

For a cluster without a private network, use the following command:

```shell

@@ -277,7 +309,11 @@ To move the Cluster API objects from your bootstrap cluster to the new managemen

clusterctl init --core cluster-api --bootstrap kubeadm --control-plane kubeadm --infrastructure hetzner

```

->Note: For a specific version, use the flag `--infrastructure hetzner:vX.X.X` with the above command.

+{% callout %}

+

+For a specific version, use the flag `--infrastructure hetzner:vX.X.X` with the above command.

+

+{% /callout %}

You can switch back to the management cluster with the following command:

@@ -293,10 +329,10 @@ clusterctl move --to-kubeconfig $CAPH_WORKER_CLUSTER_KUBECONFIG

Clusterctl Flags:

-| Flag | Description |

-| ------------------------- | ----------------------------------------------------------------------------------------------------------------------------- |

-| _--namespace_ | The namespace where the workload cluster is hosted. If unspecified, the current context's namespace is used. |

-| _--kubeconfig_ | Path to the kubeconfig file for the source management cluster. If unspecified, default discovery rules apply. |

+| Flag | Description |

+| ------------------------- | --------------------------------------------------------------------------------------------------------------------------------- |

+| _--namespace_ | The namespace where the workload cluster is hosted. If unspecified, the current context's namespace is used. |

+| _--kubeconfig_ | Path to the kubeconfig file for the source management cluster. If unspecified, default discovery rules apply. |

| _--kubeconfig-context_ | Context to be used within the kubeconfig file for the source management cluster. If empty, the current context will be used. |

-| _--to-kubeconfig_ | Path to the kubeconfig file to use for the destination management cluster. |

+| _--to-kubeconfig_ | Path to the kubeconfig file to use for the destination management cluster. |

| _--to-kubeconfig-context_ | Context to be used within the kubeconfig file for the destination management cluster. If empty, the current context will be used. |
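The flags above can be combined in a small wrapper, sketched here under stated assumptions. The function name `move_to_management_cluster` is hypothetical. It assumes the source cluster is found through the default kubeconfig discovery rules, and that `CAPH_WORKER_CLUSTER_KUBECONFIG` holds the path of the new management cluster's kubeconfig, as in the steps above.

```shell
# Hypothetical wrapper around "clusterctl move". $1 (optional) = namespace.
# The source cluster comes from default kubeconfig discovery; the destination
# kubeconfig path is read from CAPH_WORKER_CLUSTER_KUBECONFIG.
move_to_management_cluster() {
  if [ -z "${CAPH_WORKER_CLUSTER_KUBECONFIG:-}" ]; then
    echo "CAPH_WORKER_CLUSTER_KUBECONFIG is not set" >&2
    return 1
  fi
  if [ -n "${1:-}" ]; then
    # Namespace given: only move the objects in that namespace.
    clusterctl move --namespace "$1" --to-kubeconfig "$CAPH_WORKER_CLUSTER_KUBECONFIG"
  else
    # No namespace: clusterctl falls back to the current context's namespace.
    clusterctl move --to-kubeconfig "$CAPH_WORKER_CLUSTER_KUBECONFIG"
  fi
}

# Example usage:
#   move_to_management_cluster my-namespace
```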

diff --git a/docs/topics/managing-ssh-keys.md b/docs/caph/02-topics/01-managing-ssh-keys.md

similarity index 89%

rename from docs/topics/managing-ssh-keys.md

rename to docs/caph/02-topics/01-managing-ssh-keys.md

index 1458ad74f..bcbe347c2 100644

--- a/docs/topics/managing-ssh-keys.md

+++ b/docs/caph/02-topics/01-managing-ssh-keys.md

@@ -1,28 +1,38 @@

-## Managing SSH keys

+---

+title: Managing SSH keys

+---

This section provides details about SSH keys and its importance with regards to CAPH.

-### What are SSH keys?

+## What are SSH keys?

SSH keys are a crucial component of secured network communication. They provide a secure and convenient method for authenticating to and communicating with remote servers over unsecured networks. They are used as an access credential in the SSH (Secure Shell) protocol, which is used for logging in remotely from one system to another. SSH keys come in pairs with a public and a private key and its strong encryption is used for executing remote commands and remotely managing vital system components.

-### SSH keys in CAPH

+## SSH keys in CAPH

-In CAPH, SSH keys help in establishing secure communication remotely with Kubernetes nodes running on Hetzner cloud. They help you get complete access to the underlying Kubernetes nodes that are machines provisioned in Hetzner cloud and retrieve required information related to the system. With the help of these keys, you can SSH into the nodes in case of troubleshooting.

+In CAPH, SSH keys establish secure remote communication with the Kubernetes nodes running on Hetzner Cloud. They give you full access to the underlying machines provisioned in Hetzner Cloud, so you can retrieve system information and SSH into the nodes for troubleshooting.

-### In Hetzner Cloud

-NOTE: You are responsible for uploading your public ssh key to hetzner cloud. This can be done using `hcloud` CLI or hetznercloud console.

-All keys that exist in Hetzner Cloud and are specified in `HetznerCluster` spec are included when provisioning machines. Therefore, they can be used to access those machines via SSH.

+## In Hetzner Cloud

-```bash

+{% callout %}

+

+You are responsible for uploading your public SSH key to Hetzner Cloud. You can do this with the `hcloud` CLI or the Hetzner Cloud Console.

+

+{% /callout %}

+

+All keys that exist in Hetzner Cloud and are specified in the `HetznerCluster` spec are included when provisioning machines, so they can be used to access those machines via SSH.

+

+```shell

hcloud ssh-key create --name caph --public-key-from-file ~/.ssh/hetzner-cluster.pub

```

+

Once this is done, you'll have to reference it while creating your cluster.

For example, if you've specified four keys in your hetzner cloud project and you reference all of them while creating your cluster in `HetznerCluster.spec.sshKeys.hcloud` then you can access the machines with all the four keys.

+

```yaml

- sshKeys:

- hcloud:

+sshKeys:

+ hcloud:

- name: testing

- name: test

- name: hello

@@ -33,7 +43,8 @@ The SSH keys can be either specified cluster-wide in the `HetznerCluster.spec.ss

If one SSH key is changed in the specs of the cluster, then keep in mind that the SSH key is still valid to access all servers that have been created with it. If it is a potential security vulnerability, then all of these servers should be removed and re-created with the new SSH keys.

-### In Hetzner Robot

+## In Hetzner Robot

+

For bare metal servers, two SSH keys are required. One of them is used for the rescue system, and the other for the actual system. The two can, under the hood, of course, be the same. These SSH keys do not have to be uploaded into Robot API but have to be stored in two secrets (again, the same secret is also possible if the same reference is given twice). Not only the name of the SSH key but also the public and private key. The private key is necessary for provisioning the server with SSH. The SSH key for the actual system is specified in `HetznerBareMetalMachineTemplate` - there are no cluster-wide alternatives. The SSH key for the rescue system is defined in a cluster-wide manner in the specs of `HetznerCluster`.

The secret reference to an SSH key cannot be changed - the secret data, i.e., the SSH key, can. The host that is consumed by the `HetznerBareMetalMachine` object reacts in different ways to the change of the secret data of the secret referenced in its specs, depending on its provisioning state. If the host is already provisioned, it will emit an event warning that provisioned hosts can't change SSH keys. The corresponding machine object should instead be deleted and recreated. When the host is provisioning, it restarts this process again if a change of the SSH key makes it necessary. This depends on whether it is the SSH key for the rescue or the actual system and the exact provisioning state.
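The controller reads the SSH key name together with the public and private key from a secret. Rotating a key therefore means updating the secret data rather than the reference. The following is a minimal create-or-update sketch. The function name is hypothetical. The data keys `sshkey-name`, `ssh-privatekey`, and `ssh-publickey` are assumptions that must match the keys referenced in your `HetznerCluster` and `HetznerBareMetalMachineTemplate` specs. As described above, hosts that are already provisioned will not pick up the new key.

```shell
# Hypothetical helper: create the secret, or update its data if it already exists.
# $1 = secret name, $2 = SSH key name, $3 = private key path (public key at "$3.pub").
# The data keys below are assumptions; they must match the keys referenced in your specs.
apply_robot_ssh_secret() {
  secret_name="$1"
  key_name="$2"
  private_key="$3"
  # Render the secret client-side, then let "kubectl apply" create or update it.
  kubectl create secret generic "$secret_name" \
    --from-literal=sshkey-name="$key_name" \
    --from-file=ssh-privatekey="$private_key" \
    --from-file=ssh-publickey="${private_key}.pub" \
    --dry-run=client -o yaml | kubectl apply -f -
}

# Example usage:
#   apply_robot_ssh_secret robot-ssh my-robot-key ~/.ssh/hetzner-robot
```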

diff --git a/docs/topics/node-image.md b/docs/caph/02-topics/02-node-image.md

similarity index 93%

rename from docs/topics/node-image.md

rename to docs/caph/02-topics/02-node-image.md

index 13385c19b..56db9afaf 100644

--- a/docs/topics/node-image.md

+++ b/docs/caph/02-topics/02-node-image.md

@@ -1,4 +1,6 @@

-# Node Images

+---

+title: Node Images

+---

## What are node-images?

@@ -15,7 +17,7 @@ There are several ways to achieve this. In the quick-start guide, we use `pre-ku

For Hcloud, there is an alternative way of doing this using Packer. It creates a snapshot to boot from. This makes it easier to version the images, and creating new nodes using this image is faster. The same is possible for Hetzner BareMetal, as we could use installimage and a prepared tarball, which then gets installed as the OS for your nodes.

-To use CAPH in production, it needs a node image. In Hetzner Cloud, it is not possible to upload your own images directly. However, a server can be created, configured, and then snapshotted.

+To use CAPH in production, you need a node image. In Hetzner Cloud, it is not possible to upload your own images directly. However, a server can be created, configured, and then snapshotted.

For this, you can use Packer, which already supports Hetzner Cloud.

In this repository, there is also an example `Packer node-image`. To use it, do the following:

@@ -30,9 +32,9 @@ packer build --debug --on-error=abort templates/node-image/1.28.9-ubuntu-22-04-c

```

The first command is necessary so that Packer is able to create a server in hcloud.

-The second one creates the server with Packer. If you are developing your own packer image, the third command could be helpful to check what's going wrong.

+The second one creates the server with Packer. If you are developing your own Packer image, the third command can help you find out what is going wrong.

If you create your own Packer image, you must set a label so that CAPH can find the specified image name. The key of this label is `caph-image-name`.

-Please have a look at the image.json of the [example node-image](/templates/node-image/1.28.9-ubuntu-22-04-containerd/image.json).

+Please have a look at the image.json of the [example node-image](https://github.com/syself/cluster-api-provider-hetzner/blob/main/templates/node-image/1.28.9-ubuntu-22-04-containerd/image.json).

If you use your own node image, make sure to also use a cluster flavor that has `packer` in its name. The default flavor uses preKubeadm commands to install everything that is necessary. This is very helpful for testing but is not recommended for a production system.

diff --git a/docs/topics/production-environment.md b/docs/caph/02-topics/03-production-environment.md

similarity index 69%

rename from docs/topics/production-environment.md

rename to docs/caph/02-topics/03-production-environment.md

index 57cd71ef7..734c544f9 100644

--- a/docs/topics/production-environment.md

+++ b/docs/caph/02-topics/03-production-environment.md

@@ -1,10 +1,14 @@

-# Production Environment Best Practices

+---

+title: Production Environment Best Practices

+---

## HA Cluster API Components

+

The clusterctl CLI creates all four required components, namely Cluster API (CAPI), cluster-api-bootstrap-provider-kubeadm (CAPBK), cluster-api-control-plane-kubeadm (KCP), and cluster-api-provider-hetzner (CAPH).

-It uses the respective *-components.yaml from the releases. However, these are not highly available. By scaling the components, we can at least reduce the probability of failure. If this is not enough, add anti-affinity rules and PDBs.

+It uses the respective `*-components.yaml` files from the releases. However, these are not highly available. By scaling the components, we can at least reduce the probability of failure. If this is not enough, add anti-affinity rules and PodDisruptionBudgets (PDBs).

Scale up the deployments:

+

```shell

kubectl -n capi-system scale deployment capi-controller-manager --replicas=2

diff --git a/docs/topics/advanced-caph.md b/docs/caph/02-topics/04-advanced-caph.md

similarity index 85%

rename from docs/topics/advanced-caph.md

rename to docs/caph/02-topics/04-advanced-caph.md

index 78a9e66b7..084268a00 100644

--- a/docs/topics/advanced-caph.md

+++ b/docs/caph/02-topics/04-advanced-caph.md

@@ -1,20 +1,24 @@

-# Advanced CAPH

+---

+title: Advanced CAPH

+---

## CSR Controller

-For the secure operation of Kubernetes, it is necessary to sign the kubelet serving certificates. By default, these are self-signed by kubeadm. By using the kubelet flag `rotate-server-certificates: "true"`, which can be found in initConfiguration/joinConfiguration.nodeRegistration.kubeletExtraArgs, the kubelet will do a certificate signing request (CSR) to the certificates API of Kubernetes.

+For the secure operation of Kubernetes, the kubelet serving certificates need to be signed. By default, these are self-signed by kubeadm. If you set the kubelet flag `rotate-server-certificates: "true"`, which can be found in `initConfiguration/joinConfiguration.nodeRegistration.kubeletExtraArgs`, the kubelet sends a certificate signing request (CSR) to the Kubernetes certificates API.

These CSRs are not approved by default for security reasons. As described in the Kubernetes docs, they have to be approved manually, by the cloud provider, or by a custom approval controller. Since the provider integration acts, in a way, as the responsible cloud provider, it makes sense to implement such a controller directly here. Our CSR controller checks the DNS name and the IP address and thus ensures that only the intended nodes receive a signed certificate.

-For error-free operation, the following kubelet flags should not be set:

-```

+For error-free operation, the following kubelet flags should not be set:

+

+```yaml

tls-cert-file: "/var/lib/kubelet/pki/kubelet-client-current.pem"

-tls-private-key-file: "/var/lib/kubelet/pki/kubelet-client-current.pem"

+tls-private-key-file: "/var/lib/kubelet/pki/kubelet-client-current.pem"

```

-For more information, see:

-* https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-certs/

-* https://kubernetes.io/docs/reference/access-authn-authz/kubelet-tls-bootstrapping/#client-and-serving-certificates

+For more information, see:

+

+- [https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-certs/](https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-certs/)

+- [https://kubernetes.io/docs/reference/access-authn-authz/kubelet-tls-bootstrapping/#client-and-serving-certificates](https://kubernetes.io/docs/reference/access-authn-authz/kubelet-tls-bootstrapping/#client-and-serving-certificates)
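To catch the two kubelet flags listed above before you apply a manifest, you could run a quick text check. The helper below, `check_kubelet_tls_flags`, is a hypothetical sketch and not part of CAPH: it assumes the map-style `kubeletExtraArgs` shown above (`key: value` lines) and only searches for the keys; it does not parse YAML.

```shell
#!/bin/sh
# Hypothetical helper (not part of CAPH): fail if a manifest sets kubelet TLS
# flags that should stay unset when the CSR controller signs serving certs.
# Plain text search on "key:" lines; it does not parse YAML.
check_kubelet_tls_flags() {
  manifest="$1"
  if [ ! -r "$manifest" ]; then
    echo "cannot read $manifest" >&2
    return 2
  fi
  # grep -n prints each offending line together with its line number.
  if grep -nE '^[[:space:]]*(tls-cert-file|tls-private-key-file):' "$manifest"; then
    echo "remove the kubelet flags listed above from $manifest" >&2
    return 1
  fi
  return 0
}
```

For example, `check_kubelet_tls_flags my-cluster.yaml` returns a non-zero status if either flag appears in `my-cluster.yaml` (the file name is only an example).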

## Rate Limits

@@ -28,7 +32,7 @@ We support multi-tenancy. You can start multiple clusters in one Hetzner project

Cluster API allows you to [configure Machine Health Checks](https://cluster-api.sigs.k8s.io/tasks/automated-machine-management/healthchecking.html) with custom remediation strategies. This is helpful for our bare metal servers. If the health checks find that a server cannot be reached, the default strategy is to delete it. In that case, it needs to be provisioned again, which takes considerably longer for bare metal servers than for virtual cloud servers. Therefore, we try to avoid this with the help of our `HetznerBareMetalRemediationController` and `HCloudRemediationController`. Instead of deleting and deprovisioning the machine, we first try to reboot it and see whether this helps. If it solves the problem, we save the time that re-provisioning would require.

-If the MHC is configured to be used with the `HetznerBareMetalRemediationTemplate` (also see the [reference of the object](/docs/reference/hetzner-bare-metal-remediation-template.md)) and `HCloudRemediationTemplate` (also see the [reference of the object](/docs/reference/hcloud-remediation-template.md)), then such an object is created every time the MHC finds an unhealthy machine.

+If the MHC is configured to use the `HetznerBareMetalRemediationTemplate` (also see the [reference of the object](/docs/caph/03-reference/07-hetzner-bare-metal-remediation-template)) or the `HCloudRemediationTemplate` (also see the [reference of the object](/docs/caph/03-reference/04-hcloud-remediation-template)), then a corresponding remediation object is created every time the MHC finds an unhealthy machine.

The `HetznerBareMetalRemediationController` reconciles this object and then sets an annotation in the relevant `HetznerBareMetalHost` object specifying the desired remediation strategy. At the moment, only "reboot" is supported.

The `HCloudRemediationController` reboots the HCloudMachine directly via the HCloud API. For HCloud servers, there is no other strategy than "reboot" either.

@@ -70,5 +74,4 @@ spec:

type: "Reboot"

retryLimit: 2

timeout: 300s

-

```

diff --git a/docs/topics/upgrade.md b/docs/caph/02-topics/05-upgrading-caph.md

similarity index 76%

rename from docs/topics/upgrade.md

rename to docs/caph/02-topics/05-upgrading-caph.md

index ef45fedec..07f121e47 100644

--- a/docs/topics/upgrade.md

+++ b/docs/caph/02-topics/05-upgrading-caph.md

@@ -1,4 +1,6 @@

-# Upgrading the Kubernetes Cluster API Provider Hetzner

+---

+title: Upgrading the Kubernetes Cluster API Provider Hetzner

+---

This guide explains how to upgrade Cluster API and Cluster API Provider Hetzner (aka CAPH). It also references [upgrading your Kubernetes version](#external-cluster-api-reference) as part of this guide.

@@ -8,8 +10,8 @@ Connect `kubectl` to the management cluster.

Check that you are connected to the correct cluster:

-```

-❯ k config current-context

+```shell

+$ kubectl config current-context

mgm-cluster-admin@mgm-cluster

```

@@ -19,7 +21,7 @@ OK, looks good.

Is clusterctl still up to date?

-```

+```shell

$ clusterctl version -oyaml

clusterctl:

buildDate: "2024-04-09T17:23:12Z"

@@ -35,7 +37,7 @@ clusterctl:

You can see the current version here:

-https://cluster-api.sigs.k8s.io/user/quick-start.html#install-clusterctl

+[https://cluster-api.sigs.k8s.io/user/quick-start.html#install-clusterctl](https://cluster-api.sigs.k8s.io/user/quick-start.html#install-clusterctl)

If your clusterctl is outdated, then upgrade it. See the above URL for details.

@@ -43,7 +45,7 @@ If your clusterctl is outdated, then upgrade it. See the above URL for details.

Have a look at what could get upgraded:

-```

+```shell

$ clusterctl upgrade plan

Checking cert-manager version...

Cert-Manager is already up to date

@@ -67,14 +69,18 @@ Docs: [clusterctl upgrade plan](https://cluster-api.sigs.k8s.io/clusterctl/comma

You might be surprised that for `infrastructure-hetzner`, you see the "Already up to date" message below "NEXT VERSION".

-NOTE: `clusterctl upgrade plan` does not display pre-release versions by default.

+{% callout %}

+

+`clusterctl upgrade plan` does not display pre-release versions by default.

+

+{% /callout %}

## Upgrade cluster-API

We will upgrade the Cluster API core components to version v1.6.3.

Use the command that you saw in the plan:

-```bash

+```shell

$ clusterctl upgrade apply --contract v1beta1

Checking cert-manager version...

Cert-manager is already up to date

@@ -89,9 +95,14 @@ Installing Provider="bootstrap-kubeadm" Version="v1.6.3" TargetNamespace="capi-k

Deleting Provider="control-plane-kubeadm" Version="v1.6.0" Namespace="capi-kubeadm-control-plane-system"

Installing Provider="control-plane-kubeadm" Version="v1.6.3" TargetNamespace="capi-kubeadm-control-plane-system"

```

-Great, cluster-API was upgraded.

-NOTE: If you want to update only one components or update components one by one then there are flags for that under `clusterctl upgrade apply` subcommand like `--bootstrap`, `--control-plane` and `--core`.

+Great, Cluster API was upgraded.

+

+{% callout %}

+

+If you want to update only one component, or update the components one by one, use the corresponding flags of the `clusterctl upgrade apply` subcommand, such as `--core`, `--bootstrap`, and `--control-plane`.

+

+{% /callout %}
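If you prefer to upgrade provider by provider, the sketch below shows one way to sequence it. It assumes the provider names `cluster-api` (core) and `kubeadm` (bootstrap and control plane), following the same `<provider>:<version>` pattern as the `--infrastructure=hetzner:v1.0.0-beta.33` command later in this guide; check them against your own `clusterctl upgrade plan` output. `run` and `DRY_RUN` are hypothetical helpers: by default, the commands are only printed.

```shell
#!/bin/sh
# Upgrade the Cluster API providers one at a time instead of all at once.
# DRY_RUN defaults to 1, so the commands are only printed; set DRY_RUN=0 to
# run them against the management cluster your kubectl context points to.
DRY_RUN="${DRY_RUN:-1}"
CAPI_VERSION="v1.6.3"  # version taken from the upgrade plan above; adjust it

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

run clusterctl upgrade apply --core "cluster-api:${CAPI_VERSION}"
run clusterctl upgrade apply --bootstrap "kubeadm:${CAPI_VERSION}"
run clusterctl upgrade apply --control-plane "kubeadm:${CAPI_VERSION}"
```

Printing the commands first lets you compare them with the plan before running them with `DRY_RUN=0`.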

## Upgrade CAPH

@@ -99,7 +110,7 @@ You can find the latest version of CAPH here:

[https://github.com/syself/cluster-api-provider-hetzner/tags](https://github.com/syself/cluster-api-provider-hetzner/tags)

-```bash

+```shell

$ clusterctl upgrade apply --infrastructure=hetzner:v1.0.0-beta.33

Checking cert-manager version...

Cert-manager is already up to date

@@ -110,13 +121,18 @@ Installing Provider="infrastructure-hetzner" Version="v1.0.0-beta.33" TargetName

```

After the upgrade, you'll notice the new pod spinning up in the `caph-system` namespace.

-```bash

+

+```shell

$ kubectl get pods -n caph-system

NAME                                       READY   STATUS    RESTARTS   AGE

caph-controller-manager-85fcb6ffcb-4sj6d   1/1     Running   0          79s

```

-NOTE: Please note that `clusterctl` doesn't support pre-release of GitHub by default so if you want to use a pre-release, you'll have to specify the version such as `hetzner:v1.0.0-beta.33`

+{% callout %}

+

+By default, `clusterctl` does not consider GitHub pre-releases. If you want to use a pre-release, you have to specify the version explicitly, for example `hetzner:v1.0.0-beta.33`.

+

+{% /callout %}

## Check your cluster

@@ -124,14 +140,15 @@ Check the health of your workload cluster with your preferred tools and ensure t

## External Cluster API Reference

-After upgrading cluster API, you may want to update the Kubernetes version of your controlplane and worker nodes. Those details can be found in the [Cluster API documentation](https://cluster-api.sigs.k8s.io/tasks/upgrading-clusters).

+After upgrading Cluster API, you may want to update the Kubernetes version of your control plane and worker nodes. The details can be found in the [Cluster API documentation](https://cluster-api.sigs.k8s.io/tasks/upgrading-clusters).

+

+{% callout %}

+

+The update can also be done separately on either the management cluster or the workload cluster.

-NOTE: The update can be done on either management cluster or workload cluster separately as well.

+{% /callout %}

-You should upgrade your kubernetes version after considering the following:

+Before you upgrade your Kubernetes version, consider that a Cluster API minor release supports (when it is initially created):

-````markdown

-A Cluster API minor release supports (when it’s initially created):

- - 4 Kubernetes minor releases for the management cluster (N - N-3)

- - 6 Kubernetes minor releases for the workload cluster (N - N-5)

-````

+- 4 Kubernetes minor releases for the management cluster (N - N-3)

+- 6 Kubernetes minor releases for the workload cluster (N - N-5)
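To make the N - N-3 and N - N-5 windows concrete, the sketch below computes them for a given N. N is the newest Kubernetes minor version that your Cluster API minor release supported when it was created; you have to look it up yourself, and `30` is only an example input, not a statement about any specific release. `capi_k8s_window` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: given N, the newest Kubernetes minor version supported
# by a Cluster API minor release, print the supported windows from the list
# above. Look N up in the Cluster API docs; 30 below is only an example input.
capi_k8s_window() {
  newest_minor="$1"
  mgmt_oldest=$((newest_minor - 3))      # management cluster: N - N-3
  workload_oldest=$((newest_minor - 5))  # workload cluster: N - N-5
  echo "management: 1.${mgmt_oldest} - 1.${newest_minor}"
  echo "workload: 1.${workload_oldest} - 1.${newest_minor}"
}

capi_k8s_window 30
# prints:
# management: 1.27 - 1.30
# workload: 1.25 - 1.30
```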

diff --git a/docs/topics/hetzner-baremetal.md b/docs/caph/02-topics/06-hetzner-baremetal.md

similarity index 76%

rename from docs/topics/hetzner-baremetal.md

rename to docs/caph/02-topics/06-hetzner-baremetal.md

index ae39652b9..2b8cfc291 100644

--- a/docs/topics/hetzner-baremetal.md

+++ b/docs/caph/02-topics/06-hetzner-baremetal.md

@@ -1,41 +1,49 @@

+---

+title: Hetzner Baremetal

+---

-### Hetzner Baremetal

Hetzner has two primary offerings:

-1. Hetzner Cloud/ Hcloud -> for virtualized servers

-2. Hetzner Dedicated/ Robot -> for bare metal servers

-In this guide, we will focus on creating a cluster from baremetal servers.

+1. `Hetzner Cloud`/`Hcloud` for virtualized servers

+2. `Hetzner Dedicated`/`Robot` for bare metal servers

-### Flavors of Hetzner Baremetal

-Now, there are different ways you can use baremetal servers, you can use them as controlplanes or as worker nodes or both. Based on that we have created some templates and those templates are released as flavors in GitHub releases.

+In this guide, we will focus on creating a cluster from bare metal servers.

+

+## Flavors of Hetzner Baremetal

+

+There are different ways to use bare metal servers: as control plane nodes, as worker nodes, or both. Based on that, we have created several templates, which are released as flavors in the GitHub releases.

These flavors can be consumed with the [clusterctl](https://main.cluster-api.sigs.k8s.io/user/quick-start.html#install-clusterctl) tool.

- To use bare metal servers for your deployment, you can choose one of the following flavors:

+To use bare metal servers for your deployment, you can choose one of the following flavors:

+

+| Flavor | What it does |

+| ---------------------------------------------- | ------------------------------------------------------------------------------------------------------------- |

+| `hetzner-baremetal-control-planes-remediation` | Uses bare metal servers for the control plane nodes - with custom remediation (try to reboot machines first) |

+| `hetzner-baremetal-control-planes` | Uses bare metal servers for the control plane nodes - with normal remediation (unprovision/recreate machines) |

+| `hetzner-hcloud-control-planes` | Uses the hcloud servers for the control plane nodes and the bare metal servers for the worker nodes |

+

+{% callout %}

-| Flavor | What it does |

-| -------------------------------------------- | -------------------------------------------------------------------------------------------------------------------------------------------- |

-| hetzner-baremetal-control-planes-remediation | Uses bare metal servers for the control plane nodes - with custom remediation (try to reboot machines first) |

-| hetzner-baremetal-control-planes | Uses bare metal servers for the control plane nodes - with normal remediation (unprovision/recreate machines) |

-| hetzner-hcloud-control-planes | Uses the hcloud servers for the control plane nodes and the bare metal servers for the worker nodes |

+These flavors are only for demonstration purposes and should not be used in production.

+{% /callout %}

-NOTE: These flavors are only for demonstration purposes and should not be used in production.

+## Purchasing Bare Metal Servers

-### Purchasing Bare Metal Servers

+If you want to create a cluster with bare metal servers, you also need to set up the Robot credentials. To set up the Robot credentials, as described in the [reference](/docs/caph/03-reference/06-hetzner-bare-metal-machine-template), you need to manually purchase the bare metal servers beforehand.

-If you want to create a cluster with bare metal servers, you will also need to set up the robot credentials. For setting robot credentials, as described in the [reference](/docs/reference/hetzner-bare-metal-machine-template.md), you need to purchase bare metal servers beforehand manually.

+## Creating a bootstrap cluster

-### Creating a bootstrap cluster

In this guide, we create a bootstrap cluster, which is a local management cluster created with [kind](https://kind.sigs.k8s.io).

-To create a bootstrap cluster, you can use the following command:

+To create a bootstrap cluster, you can use the following command:

-```bash

-kind create cluster

+```shell

+kind create cluster

```

-```bash

+```shell

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.29.2) 🖼

✓ Preparing nodes 📦

@@ -51,9 +59,9 @@ kubectl cluster-info --context kind-kind

Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂

```

-After creating the bootstrap cluster, it is also required to have some variables exported and the name of the variables that needs to be exported can be known by running the following command:

+After creating the bootstrap cluster, you also need to export some variables. You can list the names of the variables that need to be exported by running the following command:

-```bash

+```shell

$ clusterctl generate cluster my-cluster --list-variables --flavor hetzner-hcloud-control-planes

Required Variables:

- HCLOUD_CONTROL_PLANE_MACHINE_TYPE

@@ -70,12 +78,13 @@ Optional Variables:

These variables are used during the deployment of the Hetzner infrastructure provider in the cluster.

-Installing the Hetzner provider can be done using the following command:

-```bash

+Installing the Hetzner provider can be done using the following command:

+

+```shell

clusterctl init --infrastructure hetzner

-````

+```

-```bash

+```shell

Fetching providers

Installing cert-manager Version="v1.14.2"

Waiting for cert-manager to be available...

@@ -91,55 +100,68 @@ You can now create your first workload cluster by running the following:

clusterctl generate cluster [name] --kubernetes-version [version] | kubectl apply -f -

```

-### Generating Workload Cluster Manifest

+## Generating Workload Cluster Manifest

Once the infrastructure provider is ready, we can create a workload cluster manifest using `clusterctl generate`:

-```bash

+```shell

clusterctl generate cluster my-cluster --flavor hetzner-hcloud-control-planes > my-cluster.yaml

```

Our cluster manifest now lives in the `my-cluster.yaml` file. We will apply it at a later stage, after preparing the secrets and SSH keys.

-### Preparing Hetzner Robot

+## Preparing Hetzner Robot

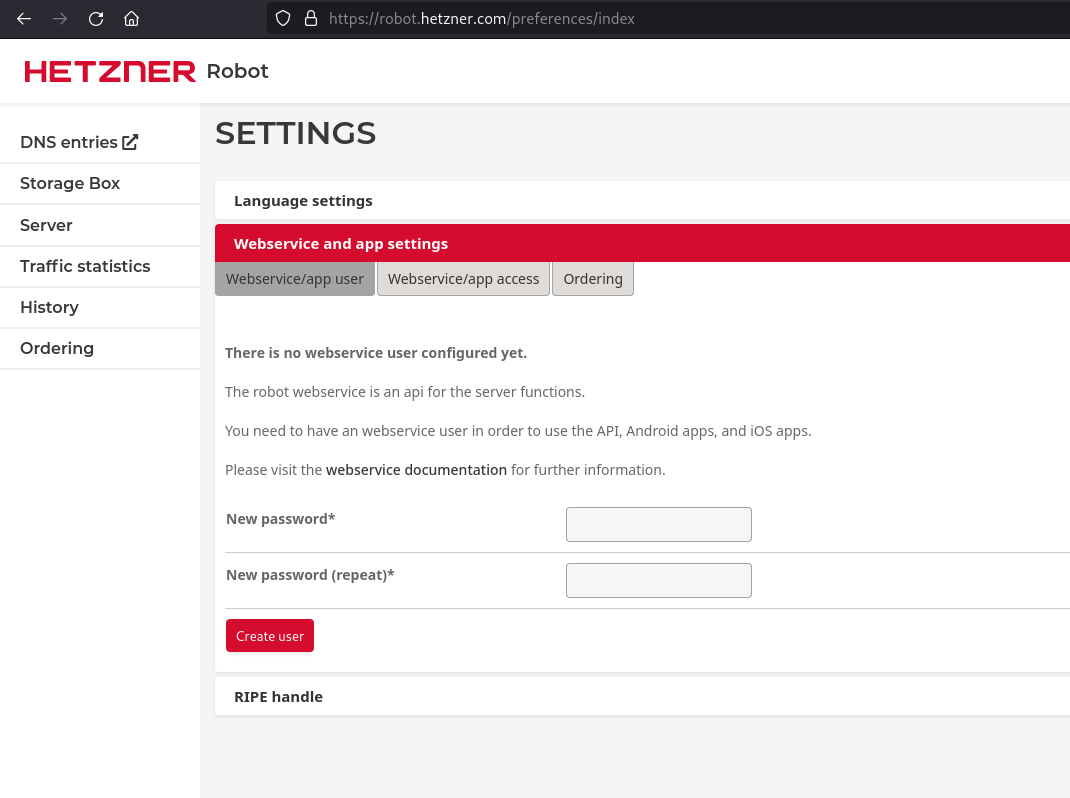

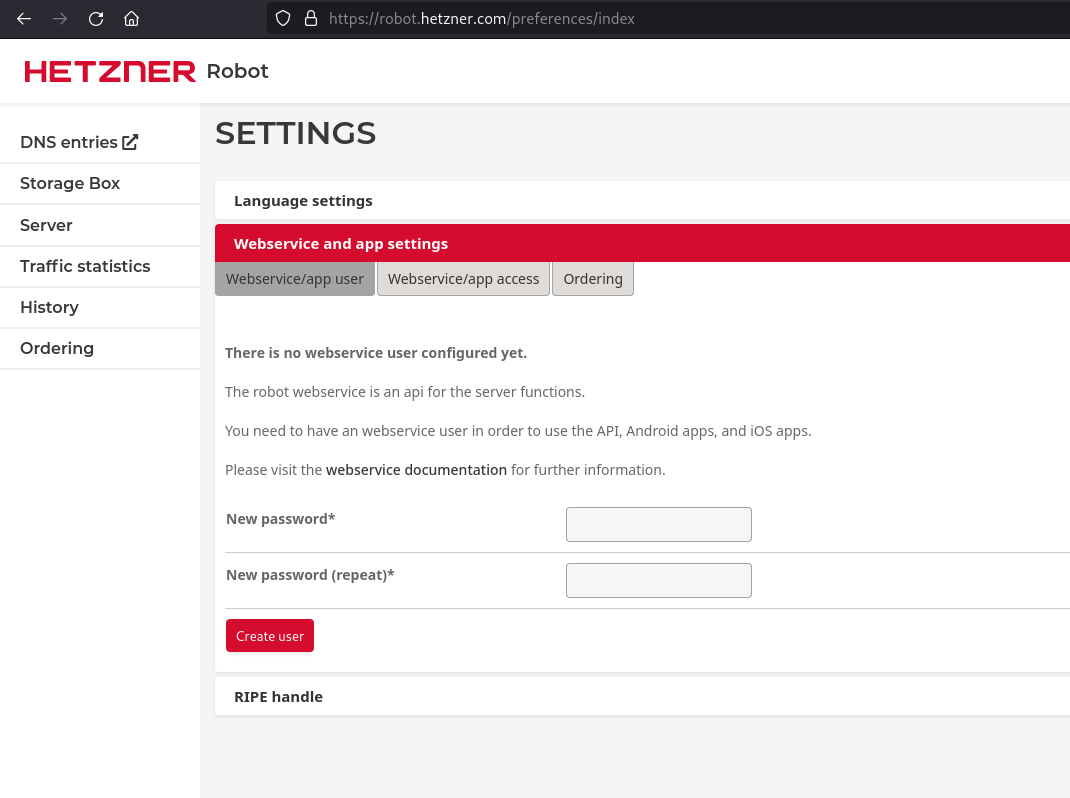

1. Create a new web service user. [Here](https://robot.your-server.de/preferences/index), you can define a password and copy your user name.

2. Generate an SSH key. You can either upload it via the Hetzner Robot UI or rely on the controller to upload a key that it does not find in the Robot API. You have to store the public and private key together with the SSH key's name in a secret that the controller reads.

For this tutorial, we will let the controller upload keys to Hetzner Robot.

-#### Creating new user in Robot

+### Creating new user in Robot

+

To create a new user in Robot, click the `Create User` button in the Hetzner Robot console. Once you have created the new user, Hetzner Robot sends you the user ID by email. The password is the one you set while creating the user.

-

+

This is required for the next step.

-### creating and verify ssh-key in hcloud

-First you need to create a ssh-key locally and you can `ssh-keygen` command for creation.

-```bash

+## Creating and verifying an SSH key in HCloud

+

+First, create an SSH key locally. You can use the `ssh-keygen` command for this:

+

+```shell

ssh-keygen -t ed25519 -f ~/.ssh/caph

```

+

The above command creates a public and a private key in your `~/.ssh` directory.

Upload the public key `~/.ssh/caph.pub` to your hcloud project: go to your project, open `Security` -> `SSH Keys`, click `Add SSH key`, add your public key, and use `test` as the `Name` of the SSH key.

-NOTE: There is also a helper CLI called [hcloud](https://github.com/hetznercloud/cli) that can be used for the purpose of uploading the SSH key.

+{% callout %}

+

+There is also a helper CLI called [hcloud](https://github.com/hetznercloud/cli) that can be used to upload the SSH key.

+

+{% /callout %}

-In the above step, the name of the ssh-key that is recognized by hcloud is `test`. This is important because we will reference the name of the ssh-key later.

+In the above step, the name of the SSH key that is recognized by hcloud is `test`. This is important because we will reference this name later.

This is an important step because the same SSH key is used to access the servers. Make sure you are using the correct SSH key name.

-The `test` is the name of the ssh key that we have created above. It is because the generated manifest references `test` as the ssh key name.

+`test` is the name of the SSH key that we created above. We use this name because the generated manifest references `test` as the SSH key name:

+

```yaml

- sshKeys:

- hcloud:

+sshKeys:

+ hcloud:

- name: test

```

-NOTE: If you want to use some other name then you can modify it accordingly.

+{% callout %}

+

+If you want to use a different name, modify the manifest accordingly.

+

+{% /callout %}

-### Create Secrets In Management Cluster (Hcloud + Robot)

+## Create Secrets In Management Cluster (Hcloud + Robot)

For the Hetzner provider integration to communicate with the Hetzner API ([HCloud API](https://docs.hetzner.cloud/) + [Robot API](https://robot.your-server.de/doc/webservice/en.html#preface)), we need to create secrets with the access data. The secrets must be in the same namespace as the other CRs.

@@ -154,11 +176,11 @@ export HETZNER_SSH_PUB_PATH="" \

export HETZNER_SSH_PRIV_PATH=""

```

-- HCLOUD_TOKEN: The project where your cluster will be placed. You have to get a token from your HCloud Project.

-- HETZNER_ROBOT_USER: The User you have defined in Robot under settings/web.

-- HETZNER_ROBOT_PASSWORD: The Robot Password you have set in Robot under settings/web.

-- HETZNER_SSH_PUB_PATH: The Path to your generated Public SSH Key.

-- HETZNER_SSH_PRIV_PATH: The Path to your generated Private SSH Key. This is needed because CAPH uses this key to provision the node in Hetzner Dedicated.

+- `HCLOUD_TOKEN`: The API token of the HCloud project where your cluster will be placed. You have to generate this token in your HCloud project.

+- `HETZNER_ROBOT_USER`: The user you have defined in Robot under settings/web.

+- `HETZNER_ROBOT_PASSWORD`: The Robot password you have set in Robot under settings/web.

+- `HETZNER_SSH_PUB_PATH`: The path to your generated public SSH key.

+- `HETZNER_SSH_PRIV_PATH`: The path to your generated private SSH key. This is needed because CAPH uses this key to provision the node in Hetzner Dedicated.
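Before running the `kubectl create secret` commands, you can check that all five variables are set and that both key paths point to readable files. `check_caph_env` is a hypothetical helper for this guide, not part of CAPH or clusterctl; it only inspects the variables listed above.

```shell
#!/bin/sh
# Hypothetical helper: report unset/empty variables and unreadable key files.
# Returns 0 if everything looks fine, 1 otherwise.
check_caph_env() {
  missing=0
  for var in HCLOUD_TOKEN HETZNER_ROBOT_USER HETZNER_ROBOT_PASSWORD \
             HETZNER_SSH_PUB_PATH HETZNER_SSH_PRIV_PATH; do
    # Indirect lookup of the variable named in $var (POSIX-compatible).
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  # --from-file needs both key files to exist and be readable.
  for var in HETZNER_SSH_PUB_PATH HETZNER_SSH_PRIV_PATH; do
    eval "value=\${$var:-}"
    if [ -n "$value" ] && [ ! -r "$value" ]; then
      echo "not readable: $var=$value" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_caph_env && kubectl create secret generic hetzner ...` only creates the secret if the check passes.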

```shell

kubectl create secret generic hetzner --from-literal=hcloud=$HCLOUD_TOKEN --from-literal=robot-user=$HETZNER_ROBOT_USER --from-literal=robot-password=$HETZNER_ROBOT_PASSWORD

@@ -166,7 +188,11 @@ kubectl create secret generic hetzner --from-literal=hcloud=$HCLOUD_TOKEN --from

kubectl create secret generic robot-ssh --from-literal=sshkey-name=test --from-file=ssh-privatekey=$HETZNER_SSH_PRIV_PATH --from-file=ssh-publickey=$HETZNER_SSH_PUB_PATH

```

-> NOTE: sshkey-name should must match the name that is present in hetzner otherwise the controller will not know how to reach the machine.

+{% callout %}

+

+`sshkey-name` must match the name of the SSH key in Hetzner; otherwise, the controller will not know how to reach the machine.

+

+{% /callout %}

Patch the created secrets so that they get automatically moved to the target cluster later. The following command helps you do that:

@@ -177,9 +203,7 @@ kubectl patch secret robot-ssh -p '{"metadata":{"labels":{"clusterctl.cluster.x-

The secret name and the tokens can also be customized in the cluster template.

-

-### Creating Host Object In Management Cluster

-

+## Creating Host Object In Management Cluster

To use bare metal servers as nodes, you need to create a `HetznerBareMetalHost` object for each bare metal server that you bought and specify its server ID in the specs. Below is a sample manifest for a `HetznerBareMetalHost` object.

@@ -197,7 +221,7 @@ spec:

maintenanceMode: false

```

-If you already know the WWN of the storage device you want to choose for booting, specify it in the `rootDeviceHints` of the object. If not, you can proceed. During the provisioning process, the controller will fetch information about all available storage devices and store it in the status of the object.

+If you already know the WWN of the storage device you want to choose for booting, specify it in the `rootDeviceHints` of the object. If not, you can proceed. During the provisioning process, the controller will fetch information about all available storage devices and store it in the status of the object.

For example, let's consider a `HetznerBareMetalHost` object without a specified WWN.

@@ -212,10 +236,12 @@ spec:

serverID: # please check robot console

maintenanceMode: false

```

+

In the above manifest, we have not specified the WWN of the server, and we have applied it to the cluster.

-After a while, you will see that there is an error in provisioning of `HetznerBareMetalHost` object that you just applied above. The error will look the following:

-```bash

+After a while, you will see a provisioning error on the `HetznerBareMetalHost` object that you just applied. The error looks like the following:

+

+```shell

$ kubectl get hetznerbaremetalhost -A

default my-cluster-md-1-tgvl5 my-cluster default/test-bm-gpu my-cluster-md-1-t9znj-694hs Provisioning 23m ValidationFailed no root device hints specified

```

@@ -224,26 +250,27 @@ After you see the error, get the YAML output of the `HetznerBareMetalHost` objec

```yaml

storage:

-- hctl: "2:0:0:0"

- model: Micron_1100_MTFDDAK512TBN

- name: sda

- serialNumber: 18081BB48B25

- sizeBytes: 512110190592

- sizeGB: 512

- vendor: 'ATA '

- wwn: "0x500a07511bb48b25"

-- hctl: "1:0:0:0"

- model: Micron_1100_MTFDDAK512TBN

- name: sdb

- serialNumber: 18081BB48992

- sizeBytes: 512110190592

- sizeGB: 512

- vendor: 'ATA '

- wwn: "0x500a07511bb48992"

+ - hctl: "2:0:0:0"

+ model: Micron_1100_MTFDDAK512TBN

+ name: sda

+ serialNumber: 18081BB48B25

+ sizeBytes: 512110190592

+ sizeGB: 512

+ vendor: "ATA "

+ wwn: "0x500a07511bb48b25"

+ - hctl: "1:0:0:0"

+ model: Micron_1100_MTFDDAK512TBN

+ name: sdb

+ serialNumber: 18081BB48992

+ sizeBytes: 512110190592

+ sizeGB: 512

+ vendor: "ATA "

+ wwn: "0x500a07511bb48992"

```

-In the output above, we can see that on this baremetal servers we have two disk with their respective `Wwn`. We can also verify it by making an ssh connection to the rescue system and executing the following command:

-```bash

+In the output above, we can see that this bare metal server has two disks, each with its own `wwn`. We can also verify this by connecting to the rescue system via SSH and executing the following command:

+

+```shell

# lsblk --nodeps --output name,type,wwn

NAME TYPE WWN

sda disk 0x500a07511bb48992

@@ -252,23 +279,37 @@ sdb disk 0x500a07511bb48b25

Now that we have confirmed the WWNs of the two disks, we can use either of them. We use `kubectl edit` to add the following information to the `HetznerBareMetalHost` object.

-NOTE: Defining `rootDeviceHints` on your baremetal server is important otherwise the baremetal server will not be able join the cluster.

+{% callout %}

+

+Defining `rootDeviceHints` on your bare metal server is important; otherwise, the server will not be able to join the cluster.

+

+{% /callout %}

```yaml

rootDeviceHints:

wwn: "0x500a07511bb48992"

```

-NOTE: If you've more than one disk then it's recommended to use smaller disk for OS installation so that we can retain the data in between provisioning of machine.

+{% callout %}

+

+If you have more than one disk, it is recommended to use the smaller disk for the OS installation so that the data on the other disks can be retained between provisionings of the machine.

+

+{% /callout %}

Once this change is applied to the cluster, the provisioning of the machine will succeed.

-To summarize, if you don't know the WWN of your server then there are two ways to find it out:

+To summarize, if you don't know the WWN of your server, there are two ways to find it out:

+

1. Create the `HetznerBareMetalHost` without a WWN and wait for the controller to fetch all information about the available storage devices. Afterwards, look at the status of the `HetznerBareMetalHost` by running `kubectl get hetznerbaremetalhost -o yaml` in your management cluster. There you will find the `hardwareDetails` of all of your bare metal hosts, which list all the relevant storage devices and their properties. Copy the WWN of your desired storage device into the `rootDeviceHints` of your `HetznerBareMetalHost` object.

2. SSH into the rescue system of the server and use `lsblk --nodeps --output name,type,wwn`

-NOTE: There might be cases where you've more than one disk.

-```bash

+{% callout %}

+

+There might be cases where you have more than one disk.

+

+{% /callout %}

+

+```shell

lsblk -d -o name,type,wwn,size

NAME TYPE WWN SIZE

sda disk 238.5G

@@ -277,19 +318,23 @@ sdc disk 1.8T

sdd disk 1.8T

```
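Following the recommendation to install the OS on the smaller disk, a small helper can pick the smallest disk that reports a WWN from `lsblk` output in the rescue system. This is a sketch: the function name `smallest_disk_with_wwn` is hypothetical, and it assumes `lsblk` is run with `-b` (sizes in bytes) and `-n` (no header line) so that sizes can be compared numerically.

```shell
# Hypothetical helper: print "NAME WWN" of the smallest disk that has a WWN.
# Expects `lsblk -d -b -n -o name,type,wwn,size` output on stdin.
# Disks without a WWN have only 3 fields (empty column) and are skipped.
smallest_disk_with_wwn() {
  awk '$2 == "disk" && NF == 4 {
         if (best == "" || $4 + 0 < min + 0) { min = $4; best = $1 " " $3 }
       }
       END { if (best == "") exit 1; print best }'
}

# Usage in the rescue system:
#   lsblk -d -b -n -o name,type,wwn,size | smallest_disk_with_wwn
```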

-In the above case, you can use any of the four disks available to you on a baremetal server.

+In the above case, you can use any of the four disks available to you on the bare metal server.

+## Creating Workload Cluster

-### Creating Workload Cluster

+{% callout %}

-NOTE: Secrets as of now are hardcoded given we are using a flavor which is essentially a template. If you want to use your own naming convention for secrets then you'll have to update the templates. Please make sure that you pay attention to the sshkey name.

+As of now, secret names are hardcoded because we are using a flavor, which is essentially a template. If you want to use your own naming convention for secrets, you will have to update the templates. Please pay particular attention to the SSH key name.

+

+{% /callout %}

Since we have already created the Hetzner Robot, HCloud, and SSH key secrets in the management cluster, we can now apply the cluster manifest.

-```bash

+

+```shell

kubectl apply -f my-cluster.yaml

```

-```bash

+```shell

$ kubectl apply -f my-cluster.yaml

kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io/my-cluster-md-0 created

kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io/my-cluster-md-1 created

@@ -308,23 +353,32 @@ hetznerbaremetalmachinetemplate.infrastructure.cluster.x-k8s.io/my-cluster-md-1

hetznercluster.infrastructure.cluster.x-k8s.io/my-cluster created

```

-### Getting the kubeconfig of workload cluster

-After a while, our first controlplane should be up and running. You can verify it using the output of `kubectl get kcp` followed by `kubectl get machines`

+## Getting the kubeconfig of workload cluster

+

+After a while, our first control plane should be up and running. You can verify this with `kubectl get kcp` followed by `kubectl get machines`.

-Once it's up and running, you can get the kubeconfig of the workload cluster using the following command:

+Once it's up and running, you can get the kubeconfig of the workload cluster using the following command:

-```bash

+```shell

clusterctl get kubeconfig my-cluster > workload-kubeconfig

chmod go-r workload-kubeconfig # required to avoid helm warning

```

-### Deploy Cluster Addons

+## Deploy Cluster Addons

+

+{% callout %}

+

+Deploying the cluster addons is essential; without them, the cluster will not work.

+

+{% /callout %}

+

+### Deploying the Hetzner Cloud Controller Manager

-NOTE: This is important for the functioning of the cluster otherwise the cluster won't work.

+{% callout %}

-#### Deploying the Hetzner Cloud Controller Manager

+This requires a secret containing access credentials to both Hetzner Robot and HCloud.

-> This requires a secret containing access credentials to both Hetzner Robot and HCloud.

+{% /callout %}

If you configured your secret correctly in the previous step, you already have the secret in your cluster.

Let's deploy the Hetzner CCM Helm chart.

@@ -346,9 +400,11 @@ REVISION: 1

TEST SUITE: None

```

-#### Installing CNI

+### Installing CNI

+

For the CNI, let's deploy Cilium in the workload cluster to handle networking within the cluster.

-```bash

+

+```shell

$ helm install cilium cilium/cilium --version 1.15.3 --kubeconfig workload-kubeconfig

NAME: cilium

LAST DEPLOYED: Thu Apr 4 21:11:13 2024

@@ -364,9 +420,11 @@ Your release version is 1.15.3.

For any further help, visit https://docs.cilium.io/en/v1.15/gettinghelp

```
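Once Cilium is running, the nodes of the workload cluster should move from `NotReady` to `Ready`, since Kubernetes nodes report `NotReady` until a CNI plugin is available. The sketch below checks this from `kubectl get nodes --no-headers` output; the helper name `all_nodes_ready` is hypothetical, and it assumes the default column layout, where STATUS is the second column.

```shell
# Hypothetical helper: succeed only if every node line on stdin has STATUS "Ready".
# Expects `kubectl get nodes --no-headers` output (NAME STATUS ROLES AGE VERSION).
# Note: a cordoned node shows "Ready,SchedulingDisabled" and is counted as not Ready.
all_nodes_ready() {
  awk 'NF { total++; if ($2 == "Ready") ready++ }
       END {
         printf "%d/%d nodes Ready\n", ready, total
         if (total > 0 && ready == total) exit 0
         exit 1
       }'
}

# Usage:
#   kubectl get nodes --no-headers --kubeconfig workload-kubeconfig | all_nodes_ready
```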

-### verifying the cluster

+### Verifying the cluster

+

Now, the cluster should be up and you can verify it by running the following commands:

-```bash

+

+```shell

$ kubectl get clusters -A

NAMESPACE NAME CLUSTERCLASS PHASE AGE VERSION

default my-cluster Provisioned 10h

@@ -381,16 +439,17 @@ default my-cluster-md-0-2xgj5-tl2jr my-cluster my-cluster-md-0-59cgw

default my-cluster-md-1-cp2fd-7nld7 my-cluster bm-my-cluster-md-1-d7526 hcloud://bm-2317525 Running 9h v1.29.4

default my-cluster-md-1-cp2fd-n74sm my-cluster bm-my-cluster-md-1-l5dnr hcloud://bm-2105469 Running 10h v1.29.4

```

+

Please note that the provider IDs of HCloud servers are prefixed with `hcloud://`, and those of bare metal servers with `hcloud://bm-`.
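To tell the two kinds of machines apart in scripts, a small helper can classify a provider ID by these prefixes. This is a sketch: `provider_kind` is a hypothetical name, and the bare metal prefix must be matched before the generic `hcloud://` prefix because every bare metal ID also starts with `hcloud://`. The usage comment reads `.spec.providerID` from CAPI `Machine` objects.

```shell
# Hypothetical helper: classify a provider ID by its prefix.
# Order matters: "hcloud://bm-" must be checked before the generic "hcloud://".
provider_kind() {
  case "$1" in
    hcloud://bm-*) echo "bare-metal" ;;
    hcloud://*)    echo "hcloud" ;;
    *)             echo "unknown" ;;
  esac
}

# Usage against the management cluster:
#   kubectl get machines -A -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.providerID}{"\n"}{end}' |
#     while read -r name id; do printf '%s %s\n' "$name" "$(provider_kind "$id")"; done
```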

## Advanced

### Constant hostnames for bare metal servers

-In some cases it has advantages to fix the hostname and with it the names of nodes in your clusters. For cloud servers not so much as for bare metal servers, where there are storage integrations that allow you to use the storage of the bare metal servers and that work with fixed node names.

+In some cases, it is advantageous to fix the hostname, and with it the names of the nodes in your cluster. This matters less for cloud servers than for bare metal servers, where storage integrations that let you use the local storage of the bare metal servers rely on fixed node names.

Therefore, you can create a cluster that uses fixed node names for bare metal servers. Please note: this only applies to bare metal servers, not to Hetzner Cloud servers.

-You can trigger this feature by creating a `Cluster` or `HetznerBareMetalMachine` (you can choose) with the annotation `"capi.syself.com/constant-bare-metal-hostname": "true"`. Of course, `HetznerBareMetalMachines` are not created by the user. However, if you use the `ClusterClass`, then you can add the annotation to a `MachineDeployment`, so that all machines are created with this annotation.

+You can enable this feature by creating either a `Cluster` or a `HetznerBareMetalMachine` with the annotation `"capi.syself.com/constant-bare-metal-hostname": "true"`. Of course, `HetznerBareMetalMachines` are not created by the user directly. However, if you use a `ClusterClass`, you can add the annotation to a `MachineDeployment` so that all machines are created with this annotation.

-This is still an experimental feature but it should be safe to use and to also update existing clusters with this annotation. All new machines will be created with this constant hostname.

\ No newline at end of file

+This is still an experimental feature, but it should be safe to use, including when adding this annotation to existing clusters. All new machines will be created with a constant hostname.

diff --git a/docs/caph/03-reference/01-introduction.md b/docs/caph/03-reference/01-introduction.md

new file mode 100644

index 000000000..ffffe6de5

--- /dev/null

+++ b/docs/caph/03-reference/01-introduction.md

@@ -0,0 +1,18 @@

+---

+title: Object Reference

+---

+

+In this object reference, we introduce all objects that are specific to this provider integration. The naming of objects, servers, machines, etc. can be confusing. Without claiming to be consistent throughout these docs, we would like to give an overview of how we name things here.

+

+First, some of our objects have important counterparts among the CAPI objects.

+

+- `HetznerCluster` is the counterpart of CAPI's `Cluster` object.

+- CAPI's `Machine` object is the counterpart of both `HCloudMachine` and `HetznerBareMetalMachine`.

+

+These two are objects of the provider integration that are reconciled by the `HCloudMachineController` and the `HetznerBareMetalMachineController` respectively.

+

+The `HCloudMachineController` checks whether a server corresponding to an `HCloudMachine` object already exists in the HCloud API and, if not, buys/creates one.

+

+The `HetznerBareMetalMachineController` does not buy new bare metal machines, but instead consumes a host from the inventory of `HetznerBareMetalHosts`, which have a one-to-one relationship to Hetzner dedicated/root/bare metal servers that have been bought manually by the user.

+

+Therefore, there is an important difference between the `HCloudMachine` object and a server in the HCloud API. For bare metal, there are even three terms: the `HetznerBareMetalMachine` object, the `HetznerBareMetalHost` object, and the actual bare metal server that can be accessed through Hetzner's Robot API.

diff --git a/docs/caph/03-reference/02-hetzner-cluster.md b/docs/caph/03-reference/02-hetzner-cluster.md

new file mode 100644

index 000000000..b1e19a80d

--- /dev/null

+++ b/docs/caph/03-reference/02-hetzner-cluster.md

@@ -0,0 +1,70 @@

+---

+title: HetznerCluster

+---

+

+In `HetznerCluster`, you can define everything related to the general components of the cluster as well as properties that apply cluster-wide.

+

+There are two different modes for the cluster: a pure HCloud cluster and a cluster that uses Hetzner dedicated (bare metal) servers, either as control planes or as workers.

+

+The HCloud cluster works with Kubeadm and supports private networks.

+

+In a cluster that includes bare metal servers, there are no private networks, as this feature has not yet been integrated into cluster-api-provider-hetzner. Apart from SSH, the node image has to support cloud-init, which we use to provision the bare metal machines.

+

+{% callout %}

+

+In clusters with bare metal servers, you need to use [this CCM](https://github.com/syself/hetzner-cloud-controller-manager), as the official one does not support bare metal.

+

+{% /callout %}

+

+[Here](/docs/caph/02-topics/01-managing-ssh-keys) you can find more information regarding the handling of SSH keys. Some of them are specified in `HetznerCluster` to have them cluster-wide, others are machine-scoped.

+

+## Usage without HCloud Load Balancer

+

+It is also possible not to use the Hetzner Cloud load balancer. This is useful for setups with only one control plane, or if you have your own load balancer.

+