Table of Contents:

- Overview

- Linear Systems

- Stability and Eigenvalues

- Linearizing Around a Fixed Point

- Controllability

- Inverted Pendulum on a Cart

- Pole Placement for the Inverted Pendulum on a Cart

- LQR Control for the Inverted Pendulum on a Cart

- Full State Estimation

- Writing a description of the control system in terms of linear differential equations of its inputs and outputs

- Simulating the system

- Designing controllers to manipulate the behavior of the system

- Passive: a one-time solution with no energy expenditure.

- Active: uses energy.

- Open loop: finding the optimal constant input for a desired output, without measuring the result.

- Closed-loop feedback control: using a controller to adjust the input depending on the current state of the system. It uses less energy and is more precise.

| | Open loop | Closed-loop feedback control |
|---|---|---|
| Uncertainty (internal changes in the system) | Uncertainty makes the input suboptimal. | Uncertainty is not an issue: any change in the system variables is detected and the input is varied accordingly. |
| Instability | Cannot stabilize an unstable system. | Very stable. |
| Disturbance (external forces) | Cannot account for disturbances. | Disturbances are also detected by the sensors and the input is varied accordingly. |
| Energy use | Inefficient, as energy is used continuously. | Efficient, as energy is used only when required. |
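The comparison in the table can be illustrated with a small simulation. This is a minimal sketch, not part of the original notes: the scalar plant $\dot{x} = a x + u + d$, the numbers, and the function names are all assumptions chosen for illustration. The designer believes $a = -1$, but the true value is $a = -1.5$ (uncertainty) and a constant disturbance $d = 0.2$ acts on the plant.

```python
X_REF = 1.0        # desired output (hypothetical)
A_NOMINAL = -1.0   # plant parameter the designer assumes (hypothetical)

def simulate(controller, a_true=-1.5, disturbance=0.2, dt=0.001, t_end=10.0):
    """Euler-integrate dx/dt = a_true*x + u + disturbance from x(0) = 0."""
    x = 0.0
    for _ in range(int(t_end / dt)):
        u = controller(x)
        x += dt * (a_true * x + u + disturbance)
    return x

def open_loop(x):
    # Constant input computed once from the nominal model; ignores x.
    return -A_NOMINAL * X_REF

def closed_loop(x, gain=20.0):
    # Same constant input plus a correction proportional to the measured error.
    return -A_NOMINAL * X_REF + gain * (X_REF - x)

x_open = simulate(open_loop)
x_closed = simulate(closed_loop)
print(f"open loop settles at {x_open:.3f}, closed loop at {x_closed:.3f}")
```

With these assumed numbers the open-loop response settles well away from the target, while the feedback controller ends up much closer, because the measured error corrects for both the wrong model and the disturbance.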

A system can be represented by a linear system of differential equations:

$$\dot{x} = Ax$$

Here $x$ is a vector of the system coordinates and $A$ is a matrix that represents how these variables interact with each other.

The basic solution of this equation is

$$x(t) = e^{At} x(0)$$

By using the Taylor series we can write

$$e^{At} = I + At + \frac{A^2 t^2}{2!} + \frac{A^3 t^3}{3!} + \cdots$$

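As this series is very difficult to compute directly, we will use the eigenvectors of $A$ to express $x$ in eigenvector coordinates.

Consider the matrix containing all the eigenvectors, $T = [E_1 \; E_2 \; \dots \; E_n]$,

and the diagonal matrix containing all the eigenvalues:

$$D = \begin{bmatrix} \lambda_1 & & \\ & \ddots & \\ & & \lambda_n \end{bmatrix}$$

So we can write

$$AT = TD$$

Let $z$ be the vector of system coordinates expressed in eigenvector coordinates, so that

$$x = Tz, \qquad \dot{z} = T^{-1} A T z = Dz, \qquad z(t) = e^{Dt} z(0)$$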

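Even though the above equation is easier to compute, we want it in terms of $x$ and not $z$.

We know $AT = TD$, so we can write

$$A = TDT^{-1}$$

Substituting this into the Taylor series for $e^{At}$ and simplifying (every inner product $T^{-1}T$ cancels, so $A^k = T D^k T^{-1}$), we get

$$e^{At} = T\left(I + Dt + \frac{D^2 t^2}{2!} + \cdots\right)T^{-1} = T e^{Dt} T^{-1}$$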

Watch this video from 15:28 for calculations

Now our final equation becomes

$$x(t) = T e^{Dt} T^{-1} x(0)$$

We can use this equation to find the state of the system at any future time.
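As a numerical check, the sketch below evaluates $x(t) = T e^{Dt} T^{-1} x(0)$ with NumPy and compares it against a truncated Taylor series for $e^{At}$. The matrix $A$, the initial state, and the time $t$ are arbitrary illustrative choices, not values from the notes, and the approach assumes $A$ is diagonalizable.

```python
import numpy as np

# Illustrative system (assumed values): eigenvalues of A are -1 and -2.
A = np.array([[0.0, 1.0],
              [-2.0, -3.0]])
x0 = np.array([1.0, 0.0])
t = 0.5

# Eigen-coordinates route: x(t) = T exp(Dt) T^{-1} x(0)
eigvals, T = np.linalg.eig(A)
exp_Dt = np.diag(np.exp(eigvals * t))
x_eig = (T @ exp_Dt @ np.linalg.inv(T) @ x0).real

# Direct route: sum the Taylor series I + At + (At)^2/2! + ... term by term
exp_At = np.zeros_like(A)
term = np.eye(2)
for k in range(1, 30):
    exp_At += term
    term = term @ (A * t) / k
x_taylor = exp_At @ x0

print(x_eig, x_taylor)
```

Both routes should agree to numerical precision; the eigenvector route needs only scalar exponentials once $T$ and $D$ are known.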

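If $x(t)$ tends to infinity, the system is unstable. Since $x(t) = T e^{Dt} T^{-1} x(0)$, this happens when any entry of $e^{Dt}$ tends to infinity. The matrix $e^{Dt}$ is diagonal and is given in terms of the eigenvalues:

$$e^{Dt} = \begin{bmatrix} e^{\lambda_1 t} & & \\ & \ddots & \\ & & e^{\lambda_n t} \end{bmatrix}, \qquad e^{\lambda t} = e^{at}\left(\cos bt + i \sin bt\right) \text{ for } \lambda = a + ib$$

The factor $\cos bt + i \sin bt$ has magnitude 1, so growth or decay is decided by $e^{at}$ alone. If the real part of every eigenvalue is negative, every entry decays, $x(t)$ tends to zero, and the system is stable.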
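The eigenvalue test is easy to automate. The following is a minimal sketch with a hypothetical helper name, `is_stable_continuous`, and made-up example matrices:

```python
import numpy as np

def is_stable_continuous(A):
    """True if every eigenvalue of A has a strictly negative real part."""
    return bool(np.all(np.linalg.eigvals(A).real < 0))

# Illustrative matrices (assumed values)
A_stable = np.array([[0.0, 1.0], [-2.0, -3.0]])     # eigenvalues -1, -2
A_unstable = np.array([[0.0, 1.0], [2.0, -1.0]])    # eigenvalues 1, -2
A_damped_osc = np.array([[0.0, 1.0], [-1.0, -0.2]]) # complex pair, real part -0.1

for name, A in [("stable", A_stable), ("unstable", A_unstable), ("damped", A_damped_osc)]:
    print(name, np.linalg.eigvals(A), is_stable_continuous(A))
```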

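So far we have treated the system as a continuous-time system. But sensors cannot measure a physical quantity continuously; they give readings at discrete time intervals $\Delta t$.

We can represent this discrete-time system as

$$x_{k+1} = \tilde{A} x_k, \qquad x_k = x(k \Delta t)$$

where

$$\tilde{A} = e^{A \Delta t}$$

If we have initial coordinates $x_0$, we can write

$$x_1 = \tilde{A} x_0, \quad x_2 = \tilde{A}^2 x_0, \quad \dots, \quad x_k = \tilde{A}^k x_0$$

Here $x_k$ depends on powers of $\tilde{A}$, and therefore on powers of its eigenvalues. Writing an eigenvalue as $\lambda = R e^{i\theta}$ gives $\lambda^k = R^k e^{ik\theta}$, which tends to infinity if $R > 1$. Hence every eigenvalue of $\tilde{A}$ must have magnitude less than 1 for a discrete-time system to be stable.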
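The sketch below builds $\tilde{A} = e^{A \Delta t}$ for an illustrative matrix, steps the discrete system forward, and applies the magnitude test. The helper names and numbers are assumptions, and the matrix exponential is computed through the eigendecomposition, which presumes $A$ is diagonalizable.

```python
import numpy as np

def discretize(A, dt):
    """A_tilde = exp(A*dt) via eigendecomposition (assumes A is diagonalizable)."""
    eigvals, T = np.linalg.eig(A)
    return (T @ np.diag(np.exp(eigvals * dt)) @ np.linalg.inv(T)).real

def is_stable_discrete(A_tilde):
    """True if every eigenvalue of A_tilde lies strictly inside the unit circle."""
    return bool(np.all(np.abs(np.linalg.eigvals(A_tilde)) < 1))

A = np.array([[0.0, 1.0], [-2.0, -3.0]])   # continuous-time eigenvalues -1, -2
dt = 0.1
A_tilde = discretize(A, dt)

x = np.array([1.0, 0.0])
for _ in range(5):
    x = A_tilde @ x    # after the loop, x = A_tilde^5 x_0 = x(0.5)

print(np.abs(np.linalg.eigvals(A_tilde)), is_stable_discrete(A_tilde), x)
```

A continuous-time eigenvalue $\lambda$ maps to $e^{\lambda \Delta t}$, so a negative real part corresponds to a magnitude below 1, which is why the two stability tests agree.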

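So far we have looked at purely linear systems. We can also derive a linear system from a non-linear dynamical system $\dot{x} = f(x)$ if it has fixed points, i.e. points $\bar{x}$ where $f(\bar{x}) = 0$. Physically, a system placed exactly at a fixed point stays there as long as there are no disturbances.

Let $\bar{x}$ be the system coordinates at a fixed point. Using the Taylor series expansion about $\bar{x}$ we get

$$\dot{x} = f(\bar{x}) + \left.\frac{Df}{Dx}\right|_{\bar{x}} (x - \bar{x}) + \frac{1}{2} \left.\frac{D^2 f}{Dx^2}\right|_{\bar{x}} (x - \bar{x})^2 + \cdots$$

where $f(\bar{x}) = 0$ and $\frac{Df}{Dx}$ is the Jacobian matrix of partial derivatives:

$$\frac{Df}{Dx} = \begin{bmatrix} \frac{\partial f_1}{\partial x_1} & \cdots & \frac{\partial f_1}{\partial x_n} \\ \vdots & \ddots & \vdots \\ \frac{\partial f_n}{\partial x_1} & \cdots & \frac{\partial f_n}{\partial x_n} \end{bmatrix}$$

If $x - \bar{x}$ is very small, the higher-order terms are negligible. With $\Delta x = x - \bar{x}$ and $A = \left.\frac{Df}{Dx}\right|_{\bar{x}}$,

$$\Delta\dot{x} = A \, \Delta x$$

If every eigenvalue of $A$ has a non-zero real part, the linearization describes the behavior of the non-linear system near the fixed point. If some eigenvalues are purely imaginary, the linearization alone does not tell us how the non-linear system behaves there.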
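To make the linearization step concrete, the sketch below approximates the Jacobian by central finite differences for a damped pendulum without a cart, $\ddot{\theta} = -(g/L)\sin\theta - d\,\dot{\theta}$. This simplified model, its parameter values, and the helper `jacobian` are assumptions for illustration; $\theta = 0$ is taken as hanging down and $\theta = \pi$ as inverted.

```python
import numpy as np

G_OVER_L = 9.81   # assumed g/L
DAMPING = 0.5     # assumed damping coefficient

def f(x):
    """Pendulum dynamics with state x = [theta, omega]."""
    theta, omega = x
    return np.array([omega, -G_OVER_L * np.sin(theta) - DAMPING * omega])

def jacobian(f, x_bar, eps=1e-6):
    """Central-difference approximation of Df/Dx evaluated at x_bar."""
    x_bar = np.asarray(x_bar, dtype=float)
    n = x_bar.size
    J = np.zeros((n, n))
    for j in range(n):
        step = np.zeros(n)
        step[j] = eps
        J[:, j] = (f(x_bar + step) - f(x_bar - step)) / (2 * eps)
    return J

A_down = jacobian(f, [0.0, 0.0])    # fixed point: hanging down
A_up = jacobian(f, [np.pi, 0.0])    # fixed point: inverted

print("down:", np.linalg.eigvals(A_down))
print("up:  ", np.linalg.eigvals(A_up))
```

In this model the hanging-down point has eigenvalues with negative real parts, while the inverted point has one positive eigenvalue, which matches the physical intuition.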

Consider a system of dimension $n$ that we want to control. It can be represented by the equation

$$\dot{x} = Ax + Bu$$

where $A$ is an $n \times n$ matrix relating the system coordinates, $B$ is a matrix representing the actuators used to control the system, and $u = -Kx$ is the manipulated input to the actuators. For most systems $A$ and $B$ are fixed. To check whether the system is controllable, consider the controllability matrix

$$\mathcal{C} = \begin{bmatrix} B & AB & A^2 B & \cdots & A^{n-1} B \end{bmatrix}$$

The system is controllable if and only if $\mathcal{C}$ has full row rank, i.e. $\operatorname{rank}(\mathcal{C}) = n$.

The singular value decomposition of $\mathcal{C}$ gives information about which states of the system are easier to control and which are harder.
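The rank test can be sketched in a few lines of NumPy. The helper `controllability_matrix` and both example systems are illustrative assumptions: the first is a linearized inverted pendulum without a cart, the second is a deliberately uncontrollable pair.

```python
import numpy as np

def controllability_matrix(A, B):
    """Stack [B, AB, A^2 B, ..., A^(n-1) B] side by side."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return np.hstack(blocks)

# Assumed example 1: torque input acts on the angular acceleration.
A = np.array([[0.0, 1.0], [9.81, 0.0]])
B = np.array([[0.0], [1.0]])
ctrb = controllability_matrix(A, B)
rank = np.linalg.matrix_rank(ctrb)
singular_values = np.linalg.svd(ctrb, compute_uv=False)

# Assumed example 2: two decoupled states, actuator only touches the second.
A_bad = np.diag([1.0, 2.0])
B_bad = np.array([[0.0], [1.0]])
rank_bad = np.linalg.matrix_rank(controllability_matrix(A_bad, B_bad))

print(rank, singular_values, rank_bad)
```

A small singular value relative to the others would indicate a direction in state space that is controllable in principle but expensive to reach.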

The following statements are equivalent:

- The system is controllable.

- Arbitrary eigenvalue (pole) placement is possible.

- There is some control input $u$ that will steer the system to any state $x$. The reachable set is the collection of all states for which there is a control input $u(t)$ such that $x(t)$ reaches that state.

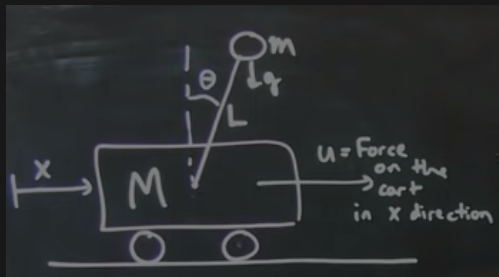

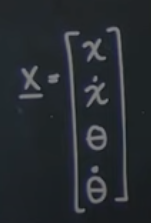

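Consider an inverted pendulum on a cart with state vector

$$\mathbf{x} = \begin{bmatrix} x & \dot{x} & \theta & \dot{\theta} \end{bmatrix}^T$$

where $x$ is the cart position and $\theta$ is the pendulum angle.

This system can be represented by the non-linear equation $\dot{\mathbf{x}} = f(\mathbf{x})$. It can be linearized about the fixed points

$$\theta = 0 \text{ or } \pi, \qquad \dot{\theta} = 0, \qquad \dot{x} = 0, \qquad x \text{ free}$$

We can find the Jacobian matrix at these fixed points. In the case where the pendulum is inverted ($\theta = \pi$), at least one eigenvalue of the matrix $A$ has a positive real part, so this fixed point is unstable. To stabilize the pendulum in this position we can use pole placement.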

After linearizing and including the control term $u = -Kx$, we get

$$\dot{x} = (A - BK)x$$

Here $K$ is the controller gain vector, which sets the eigenvalues of $A - BK$ to achieve stability.

In Matlab we can specify the desired eigenvalues and compute the vector $K$ (for example with the `place` command), and then simulate the system.

Eigenvalues with negative real parts stabilize the system. Increasing their magnitude makes the system settle faster, but pushing them beyond a certain value makes the controller so aggressive that the non-linear system dynamics become unstable.
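For readers without Matlab, the sketch below computes a pole-placement gain with Ackermann's formula, $K = \begin{bmatrix} 0 & \cdots & 0 & 1 \end{bmatrix} \mathcal{C}^{-1} p(A)$, where $p$ is the desired characteristic polynomial. This is a swapped-in technique, not necessarily what Matlab uses internally, and it only applies to single-input systems. The two-state inverted pendulum model (no cart) and the chosen poles are assumptions for illustration, not the cart system from the notes.

```python
import numpy as np

def ackermann(A, B, poles):
    """Single-input pole placement via Ackermann's formula (illustrative helper)."""
    n = A.shape[0]
    ctrb = np.hstack([np.linalg.matrix_power(A, i) @ B for i in range(n)])
    coeffs = np.poly(poles)   # monic polynomial coefficients, highest power first
    p_of_A = sum(c * np.linalg.matrix_power(A, n - i) for i, c in enumerate(coeffs))
    last_row = np.zeros((1, n))
    last_row[0, -1] = 1.0
    return last_row @ np.linalg.solve(ctrb, p_of_A)

# Assumed model: pendulum linearized about the inverted position, g/L = 9.81.
A = np.array([[0.0, 1.0], [9.81, 0.0]])
B = np.array([[0.0], [1.0]])

K = ackermann(A, B, poles=[-2.0, -3.0])
closed_loop_eigs = np.linalg.eigvals(A - B @ K)

print("K =", K)
print("open-loop eigenvalues:  ", np.linalg.eigvals(A))
print("closed-loop eigenvalues:", closed_loop_eigs)
```

The open-loop matrix has one positive eigenvalue, and the closed-loop matrix $A - BK$ should have exactly the requested eigenvalues.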

LQR stands for linear quadratic regulator. It gives the optimal gain matrix $K$ by penalizing deviation of the system coordinates and power consumption according to our needs, through the cost function

$$J = \int_0^\infty \left( x^T Q x + u^T R u \right) dt$$

Here $Q$ is a matrix that represents how much penalty is given when the system deviates from the desired state, and $R$ represents the penalty for using power. The optimal $K$ is the one that minimizes this quadratic cost function.

In Matlab we can find $K$ using the command `K = lqr(A, B, Q, R)`.
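A NumPy-only sketch of what an LQR solver computes is given below. It solves the continuous algebraic Riccati equation $A^T P + PA - PBR^{-1}B^T P + Q = 0$ using the stable eigenvectors of the Hamiltonian matrix, then sets $K = R^{-1} B^T P$. This is a textbook method chosen for self-containment, not a claim about how Matlab's `lqr` is implemented; in practice a dedicated solver such as `scipy.linalg.solve_continuous_are` is the more robust choice. The model and weights are assumed values.

```python
import numpy as np

def lqr_gain(A, B, Q, R):
    """Return (K, P) for the continuous-time LQR problem (illustrative helper).

    Assumes the Hamiltonian has exactly n eigenvalues with negative real part.
    """
    n = A.shape[0]
    R_inv = np.linalg.inv(R)
    H = np.block([[A, -B @ R_inv @ B.T],
                  [-Q, -A.T]])
    eigvals, eigvecs = np.linalg.eig(H)
    stable = eigvecs[:, eigvals.real < 0]     # basis of the stable subspace
    X1, X2 = stable[:n, :], stable[n:, :]
    P = np.real(X2 @ np.linalg.inv(X1))       # Riccati solution
    K = R_inv @ B.T @ P
    return K, P

# Assumed model: pendulum linearized about the inverted position.
A = np.array([[0.0, 1.0], [9.81, 0.0]])
B = np.array([[0.0], [1.0]])
Q = np.eye(2)             # penalty on state deviation (assumed)
R = np.array([[1.0]])     # penalty on control effort (assumed)

K, P = lqr_gain(A, B, Q, R)
print("K =", K)
print("closed-loop eigenvalues:", np.linalg.eigvals(A - B @ K))
```

Increasing the entries of `Q` relative to `R` should give a more aggressive controller, and increasing `R` a gentler one.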

We would like to have full-state measurements of the system, but in practice we usually have only limited measurements $y = Cx$.

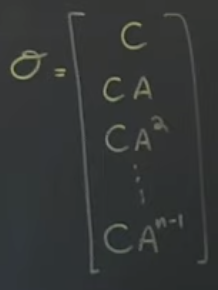

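Observability is whether we can estimate the full state $x$ from the measurements $y(t) = Cx(t)$. Consider the observability matrix

$$\mathcal{O} = \begin{bmatrix} C \\ CA \\ CA^2 \\ \vdots \\ CA^{n-1} \end{bmatrix}$$

If $\operatorname{rank}(\mathcal{O}) = n$, the system is observable.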
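The observability test mirrors the controllability test. In the sketch below, the helper `observability_matrix` and the double-integrator model of a cart (position and velocity) are illustrative assumptions: measuring position makes the state observable, while measuring only velocity does not, since the position can never be recovered from velocity readings alone.

```python
import numpy as np

def observability_matrix(A, C):
    """Stack [C; CA; CA^2; ...; CA^(n-1)] on top of each other."""
    n = A.shape[0]
    rows = [C]
    for _ in range(n - 1):
        rows.append(rows[-1] @ A)
    return np.vstack(rows)

# Assumed model: cart as a double integrator, state = [position, velocity].
A = np.array([[0.0, 1.0], [0.0, 0.0]])
C_position = np.array([[1.0, 0.0]])
C_velocity = np.array([[0.0, 1.0]])

rank_position = np.linalg.matrix_rank(observability_matrix(A, C_position))
rank_velocity = np.linalg.matrix_rank(observability_matrix(A, C_velocity))

print(rank_position, rank_velocity)
```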

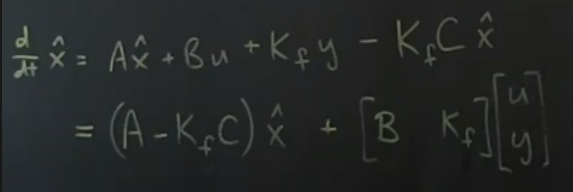

To estimate the full state we need to design an estimator that accepts $u$ and $y$ as inputs and outputs $\hat{x}$, the estimated full state. This estimator is itself a linear dynamical system. It can be represented as

$$\dot{\hat{x}} = A\hat{x} + Bu + K_f (y - \hat{y}), \qquad \hat{y} = C\hat{x}$$

Here $K_f$ is the Kalman filter gain matrix. The term

$$K_f (y - \hat{y})$$

updates the estimate $\hat{x}$ based on new values of $y$.
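The estimator equation can be simulated directly. The sketch below is a noise-free illustration under stated assumptions: the plant is a double-integrator cart measured by position only, the input is an arbitrary sine wave, and the gain `Kf` is picked by hand so that $A - K_f C$ has eigenvalues $-2$ and $-3$. It is not an optimal Kalman gain, which would be computed from noise covariances.

```python
import numpy as np

# Assumed model: state = [position, velocity], measurement = position.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])
Kf = np.array([[5.0], [6.0]])   # hand-picked gain, NOT a Kalman-optimal one

dt, steps = 0.001, 10000
x = np.array([[1.0], [-0.5]])   # true state, unknown to the estimator
x_hat = np.zeros((2, 1))        # estimator starts from a wrong guess
initial_error = np.linalg.norm(x - x_hat)

for k in range(steps):
    u = np.array([[np.sin(k * dt)]])   # arbitrary known input
    y = C @ x                          # measurement of the true system
    # Estimator: d(x_hat)/dt = A x_hat + B u + Kf (y - C x_hat)
    x_hat = x_hat + dt * (A @ x_hat + B @ u + Kf @ (y - C @ x_hat))
    # True system: dx/dt = A x + B u
    x = x + dt * (A @ x + B @ u)

final_error = np.linalg.norm(x - x_hat)
print(f"estimation error: {initial_error:.3f} -> {final_error:.2e}")
```

The estimation error $e = x - \hat{x}$ obeys $\dot{e} = (A - K_f C)e$, so it decays whenever $A - K_f C$ is stable, regardless of the input $u$.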