
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Updated landing page, copied over workshop instructions


Showing 58 changed files with 1,249 additions and 16 deletions.


This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,64 @@ | ||

| # 01 | The Paradigm Shift | ||

|

|

||

| Streamlining the end-to-end development workflow for modern "AI apps" requires a paradigm shift from **MLOps** to **LLMOps** that acknowledges the common roots while being mindful of the growing differences. We can view this shift in terms of how it causes us to rethink three things (_mindset_, _workflows_, _tools_) to be more effective in developing generative AI applications. | ||

|

|

||

|

|

||

| --- | ||

|

|

||

| ## Rethink Mindset | ||

|

|

||

| Traditional "AI apps" can be viewed as "ML apps". They took data inputs and used custom-trained models to return relevant predictions as output. Modern "AI apps" tend to refer to generative AI apps that take natural language inputs (prompts), use pre-trained large language models (LLM), and return original content to users as the response. This shift is reflected in many ways. | ||

|

|

||

| - **The target audience is different** ➡ App developers, not data scientists. | ||

| - **The generated assets are different** ➡ Emphasize integrations, not predictions. | ||

| - **The evaluation metrics are different** ➡ Focus on fairness, groundedness, token usage. | ||

| - **The underlying ML models are different** ➡ Pre-trained "Models-as-a-Service", not custom-built models. | ||
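To make the evaluation bullet a little more concrete, here is a minimal sketch of two crude measurements: a token-overlap proxy for groundedness and a rough token count. Both functions (`groundedness_proxy`, `approx_token_count`) are hypothetical illustrations and are not how Azure AI Studio computes its metrics. Real groundedness evaluation is typically model-graded, and real token counts depend on the model's tokenizer.

```python
import re
from typing import Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness_proxy(answer: str, context: str) -> float:
    """Fraction of distinct answer tokens that also appear in the context.

    Assumption: word overlap is only a rough stand-in for groundedness.
    """
    answer_tokens = tokenize(answer)
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & tokenize(context)) / len(answer_tokens)


def approx_token_count(text: str) -> int:
    """Whitespace-based token estimate; real model tokenizers will differ."""
    return len(text.split())


if __name__ == "__main__":
    context = "The example tent is waterproof and sleeps two people."
    answer = "The example tent is waterproof."
    print(groundedness_proxy(answer, context))
    print(approx_token_count(answer))
```

A score near 1.0 suggests the answer reuses vocabulary from the supplied context, while a score near 0.0 suggests it may be drawing on something else.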

|

|

||

|  | ||

|

|

||

| --- | ||

|

|

||

| ## Rethink Workflow | ||

|

|

||

| **With MLOps**, end-to-end application development involved a [complex data science lifecycle](https://learn.microsoft.com/azure/architecture/ai-ml/guide/_images/data-science-lifecycle-diag.png). To "fit" into software development processes, this was mapped to [a higher-level workflow](https://learn.microsoft.com/azure/architecture/ai-ml/guide/mlops-technical-paper#machine-learning-model-solution) visualized as shown below. The complex data science lifecycle steps (data preparation, model engineering & model evaluation) are now encapsulated into the _experimentation_ phase. | ||

|

|

||

|  | ||

|

|

||

| **With LLMOps**, those steps need to be rethought in the context of new requirements like using natural language inputs (prompts), new techniques for improving quality (RAG, Fine-Tuning), new metrics for evaluation (groundedness, coherence, fluency) and responsible AI (assessment). This leads us to a revised version of the 3-phase application development lifecycle as shown: | ||

|

|

||

|  | ||

|

|

||

| We can unpack each phase to get a sense of individual steps in workflows that are now designed around prompt-based inputs, token-based pricing, and region-based availability of large language models and Azure AI services for provisioning. | ||

|

|

||

|  | ||

|

|

||

| !!!example "Building LLM Apps: From Prompt Engineering to LLM Ops" | ||

|

|

||

| In the accompanying workshop, we'll walk through the end-to-end development process for our RAG-based LLM App from _prompt engineering_ (ideation, augmentation) to _LLM Ops_ (operationalization). We hope that helps make some of these abstract concepts feel more concrete when viewed in action. | ||

|

|

||

| --- | ||

|

|

||

| ## Rethink Tools | ||

|

|

||

| We can immediately see how this new application development lifecycle requires corresponding _innovation in tooling_ to streamline the end-to-end development process from ideation to operationalization. The [Azure AI platform](https://learn.microsoft.com/ai) has been retooled with exactly these requirements in mind. It is centered around [Azure AI Studio](https://ai.azure.com), a unified web portal that: | ||

|

|

||

| - lets you "Explore" models, capabilities, samples & responsible AI tools | ||

| - gives you _single pane of glass_ visibility to "Manage" Azure AI resources | ||

| - provides UI-based development flows to "Build" your Azure AI projects | ||

| - has Azure AI SDK and Azure AI CLI options for "Code-first" development | ||

|

|

||

|  | ||

|

|

||

| The Azure AI platform is enhanced by other developer tools and resources including [PromptFlow](https://github.com/microsoft/promptflow), [Visual Studio Code Extensions](https://marketplace.visualstudio.com/VSCode) and [Responsible AI guidance](https://learn.microsoft.com/azure/ai-services/responsible-use-of-ai-overview) with built-in support for [content-filtering](https://learn.microsoft.com/azure/ai-studio/concepts/content-filtering). We'll cover some of these in the Concepts and Tooling sections of this guide. | ||

|

|

||

| !!!abstract "Using The Azure AI Platform" | ||

| The abstract workflow will feel more concrete when we apply the concepts to a real use case. In the Workshop section, you'll get hands-on experience with these tools to give you a sense of their roles in streamlining your end-to-end developer experience. | ||

|

|

||

| - **Azure AI Studio**: Build & Manage Azure AI projects and resources. | ||

| - **Prompt Flow**: Build, Evaluate & Deploy a RAG-based LLM App. | ||

| - **Visual Studio Code**: Use Azure, PromptFlow, GitHub Copilot, Jupyter Notebook extensions. | ||

| - **Responsible AI**: Content filtering, guidance for responsible prompt usage. | ||

|

|

||

|

|

||

|

|

||

|

|


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,92 @@ | ||

| # 02 | The App Scenario | ||

| !!!example "Consider this familiar enterprise scenario!" | ||

|

|

||

| You're a new hire in the Contoso Outdoors organization. They are an e-commerce company with a successful product catalog and loyal customer base focused on outdoor activities like camping and hiking. Their website is hugely popular but their customer service agents are being overwhelmed by calls that could be answered by information currently on the site. | ||

|

|

||

| For every call they fail to answer, they are potentially losing not just revenue, but customer loyalty. **You are part of the developer team tasked with building a Customer Support AI into the website** to meet that demand. The objective is to build and deploy a customer service agent that is _friendly, helpful, responsible, and relevant_ in its support interactions. | ||

|

|

||

| Let's walk through how you can make this happen in your organization, using the Azure AI Platform. We'll start with the _ideation_ phase, which involves identifying the business case, connecting to your data, building the basic prompt flow (LLM App), then iterating locally to extend it for your app requirements. Let's understand how this maps to our workshop. | ||

|

|

||

| --- | ||

|

|

||

| ## Contoso Outdoors (Website) | ||

|

|

||

| The Contoso Chat (LLM App) is being designed for integration into the Contoso Outdoors site (Web App) via the _chat icon_ seen at the bottom right. The website landing page features the Contoso Outdoors _product catalog_ organized neatly into categories like _Tents_ and _Backpacks_ to simplify discovery by customers. | ||

|

|

||

|  | ||

|

|

||

| When a customer clicks an item, they are taken to the _product details_ page with extensive information that they can use to guide their decisions towards a purchase. | ||

|

|

||

|  | ||

|

|

||

| !!!info "Step 1: Identify Business Case" | ||

|

|

||

| The Contoso Chat AI should meet two business objectives. It should _reduce customer support calls_ (to manual operator) by proactively answering customer questions onsite. It should _increase customer product purchases_ by providing timely and contextual information to help them finalize the purchase decision. | ||

|

|

||

| --- | ||

|

|

||

| ## Chat Completion (Basic) | ||

|

|

||

| Let's move to the next step - designing our Contoso Chat AI using the relevant Large Language Model (LLM). Based on our manual customer service calls, we know questions can broadly fall into two categories: | ||

|

|

||

| - **Product** focus ➡ _"What should I buy for my hiking trip to Andalusia?"_ | ||

| - **Customer** focus ➡ _"What backpack should I buy given my previous purchases here?"_ | ||

|

|

||

| We know that Azure OpenAI provides a number of pre-trained models for _chat completion_ so let's see how the baseline model works for our requirements, by using the [Azure AI Studio](https://ai.azure.com) **Playground** capability with a [`gpt-3.5-turbo`](https://learn.microsoft.com/azure/ai-services/openai/concepts/models) model deployment. This model can understand inputs (and generate responses) using natural language. | ||
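Before trying the Playground, it can help to see the shape of the input that a chat completion model receives. The sketch below builds the role-tagged message list (a system message followed by the user question) without calling any service. The system prompt text and the `build_chat_messages` helper are assumptions made for illustration and are not taken from the workshop code.

```python
from typing import Dict, List

# Hypothetical system prompt; the workshop's actual prompt is not shown in this guide.
SYSTEM_PROMPT = (
    "You are a friendly, helpful customer support agent for Contoso Outdoors."
)


def build_chat_messages(
    question: str, system_prompt: str = SYSTEM_PROMPT
) -> List[Dict[str, str]]:
    """Return a system + user message list in the chat completion format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question.strip()},
    ]


if __name__ == "__main__":
    questions = [
        "What should I buy for my hiking trip to Andalusia?",
        "What backpack should I buy given my previous purchases here?",
    ]
    for q in questions:
        for message in build_chat_messages(q):
            print(f"{message['role']}: {message['content']}")
```

Note that nothing in these messages carries product or customer data, so a baseline model has only its pre-trained knowledge to draw on.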

|

|

||

| Let's see how it responds to the two questions above. | ||

|

|

||

| 1. **No Product Context**. The pre-trained model provides a perfectly valid response to the question, _but it lacks the product catalog context for Contoso Outdoors_. We need to refine this model to use our data! | ||

|  | ||

|

|

||

| 2. **No Customer History**. The pre-trained model makes it clear that it has no access to customer history _and consequently makes general recommendations that may be irrelevant to the customer's query_. We need to refine this model to understand customer identity and access their purchase history. | ||

|  | ||

|

|

||

| !!!info "Step 2: Connect To Your Data" | ||

|

|

||

| We need a way to _ground_ the model so that it takes our product catalog and customer history into account as relevant context for the query. The first step is to make the data sources available to our workflow. [Azure AI Studio](https://ai.azure.com) makes this easy by helping you set up and manage _connections_ to relevant Azure search and database resources. | ||

|

|

||

| --- | ||

|

|

||

| ## Chat Augmentation (RAG) | ||

|

|

||

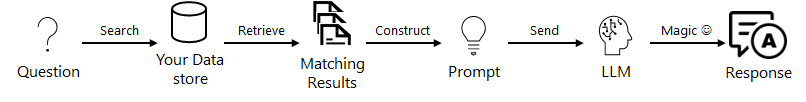

| That brings us to the next step - _prompt engineering_. We need to **augment** the user question (default prompt) with additional query context that ensures Contoso Outdoors product data is prioritized in responses. We use a popular technique known as [Retrieval Augmented Generation (RAG)](https://learn.microsoft.com/azure/ai-studio/concepts/retrieval-augmented-generation) that works as shown below, to _generate responses that are specific to your data_. | ||
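The retrieve-then-augment idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the in-memory `CATALOG`, its product names, and the keyword-overlap `retrieve` function are all hypothetical stand-ins for a real search index such as Azure AI Search, and the prompt wording is invented for the example.

```python
import re
from typing import Dict, List, Set

# Hypothetical in-memory catalog standing in for a real search index.
CATALOG: List[Dict[str, str]] = [
    {"name": "Example Tent",
     "description": "A lightweight two-person tent for hiking and camping."},
    {"name": "Example Backpack",
     "description": "A 40-liter backpack for day hiking trips."},
    {"name": "Example Stove",
     "description": "A compact gas stove for cooking outdoors."},
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, catalog: List[Dict[str, str]],
             top_k: int = 2) -> List[Dict[str, str]]:
    """Rank catalog items by keyword overlap with the question (retrieval step)."""
    query = tokenize(question)
    scored = []
    for item in catalog:
        overlap = len(query & tokenize(item["name"] + " " + item["description"]))
        if overlap > 0:
            scored.append((overlap, item))
    # Stable sort on the score only, so ties keep catalog order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:top_k]]


def build_augmented_prompt(question: str, documents: List[Dict[str, str]]) -> str:
    """Prepend the retrieved product context to the question (augmentation step)."""
    context = "\n".join(f"- {d['name']}: {d['description']}" for d in documents)
    return (
        "Answer using only the product context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    question = "What tent should I take hiking?"
    print(build_augmented_prompt(question, retrieve(question, CATALOG)))
```

The augmented prompt, rather than the bare question, is what gets sent to the chat completion model for the generation step. A production system would replace the keyword overlap with vector or semantic search.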

|

|

||

|  | ||

|

|

||

| We can now get a more _grounded response_ in our Contoso Chat AI, as shown. | ||

|

|

||

|  | ||

|

|

||

| !!!info "Step 3: Build Basic PromptFlow" | ||

|

|

||

| We now need to add in a step that also takes _customer history_ into account. To implement this, we need a tool that helps us _orchestrate_ these various steps in a more intuitive way, allowing user query and data to "flow" through the processing pipeline to generate the final response. | ||

|

|

||

| --- | ||

|

|

||

| ## Chat Orchestration (Flow) | ||

|

|

||

| The previous step gives us a basic flow that augments predefined model behaviors to add product context. Now, we want to add _another tool_ (or processing function) that looks up customer details for additional prompt engineering. The end result should be a user experience that looks something like this, helping move the user closer to a purchase decision. | ||

|

|

||

|  | ||

|

|

||

| !!!info "Step 4: Develop & Extend Flow" | ||

|

|

||

| Flow orchestration is hard. This is where [PromptFlow](https://aka.ms/promptflow) helps, allowing us to insert a _customer lookup function_ seamlessly into the flow graph, to extend it. With the Azure AI platform, you get PromptFlow capabilities integrated seamlessly into both development (VS Code) and deployment (Azure AI Studio) environments for a streamlined end-to-end developer experience. | ||
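To give a feel for what "inserting a customer lookup function into the flow" means, here is a minimal sketch of a flow as an ordered list of plain Python steps that pass a shared state dictionary along. This is not the Prompt Flow API. The step names, the `CUSTOMERS` data, and the `run_flow` helper are hypothetical and exist only to illustrate the orchestration idea.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]

# Hypothetical customer records standing in for a real database lookup.
CUSTOMERS: Dict[str, Dict[str, Any]] = {
    "c1": {"name": "Sample Customer", "orders": ["Example Tent"]},
}


def lookup_customer(state: State) -> State:
    """Add customer details to the state, falling back to a guest profile."""
    customer = CUSTOMERS.get(state["customer_id"], {"name": "Guest", "orders": []})
    return {**state, "customer": customer}


def retrieve_products(state: State) -> State:
    """Placeholder retrieval step; a real flow would query a search index."""
    return {**state, "products": ["Example Backpack"]}


def build_prompt(state: State) -> State:
    """Combine customer history, product context, and the question."""
    customer = state["customer"]
    orders = ", ".join(customer["orders"]) or "none"
    products = ", ".join(state["products"])
    prompt = (
        f"Customer: {customer['name']}\n"
        f"Previous purchases: {orders}\n"
        f"Relevant products: {products}\n"
        f"Question: {state['question']}"
    )
    return {**state, "prompt": prompt}


def run_flow(steps: List[Step], state: State) -> State:
    """Run each step in order, feeding its output state into the next step."""
    for step in steps:
        state = step(state)
    return state


if __name__ == "__main__":
    flow = [lookup_customer, retrieve_products, build_prompt]
    result = run_flow(flow, {"customer_id": "c1",
                             "question": "What backpack should I buy?"})
    print(result["prompt"])
```

Extending the flow is then a matter of adding another step to the list, which is the kind of change a flow graph editor makes visual.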

|

|

||

| --- | ||

|

|

||

| ## Evaluate & Deploy (E2E) | ||

|

|

||

| This ends the _ideation_ phase of the application lifecycle we saw earlier. PromptFlow works seamlessly with Azure AI Studio to streamline the next two steps of the lifecycle (_evaluate_ and _deploy_), helping deliver the final Contoso Chat Support Agent AI experience on the Contoso Outdoors website. Here is what a multi-turn conversation with customers might look like now: | ||

|

|

||

|  | ||

|

|

||

| Your customer support AI is a hit! | ||

|

|

||

| Not only can it answer questions grounded in your product catalog, but it can refine or recommend responses based on the customer's purchase history. The conversational experience feels more natural to your customers and reduces their effort in finding relevant products in information-dense websites. | ||

|

|

||

| You find customers are spending more time in chat conversations with your support agent AI, and finding new reasons to purchase your products. | ||

|

|

||

| !!!example "Workshop: Build a production RAG with PromptFlow & Azure AI Studio" | ||

| In the next section, we'll look at how we can bring this story to life, step-by-step, using Visual Studio Code, Azure AI Studio and Prompt Flow. You'll learn how to provision Azure AI Services, engineer prompts with Retrieval-Augmented Generation to use your product data, then extend the PromptFlow to include customer lookup before evaluating and deploying the Chat AI application to Azure for real-world use. |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,35 @@ | ||

| # 03 | The Dev Environment | ||

|

|

||

| The repository is instrumented with a [dev container](https://containers.dev) configuration that provides a consistent pre-built development environment deployed in a Docker container. Launch this in the cloud with [GitHub Codespaces](https://docs.github.com/codespaces), or on your local device with [Docker Desktop](https://www.docker.com/products/docker-desktop/). | ||

|

|

||

| --- | ||

|

|

||

| ## Dev Tools | ||

|

|

||

| In addition, we make use of these tools: | ||

|

|

||

| - **[Visual Studio Code](https://code.visualstudio.com/) as the default editor** | Works seamlessly with dev containers. Extensions streamline development with Azure and Prompt Flow. | ||

| - **[Azure Portal](https://portal.azure.com) for Azure subscription management** | Single pane of glass view into all Azure resources, activities, billing and more. | ||

| - **[Azure AI Studio (Preview)](https://ai.azure.com)** | Single pane of glass view into all resources and assets for your Azure AI projects. Currently in preview (expect it to evolve rapidly). | ||

| - **[Azure ML Studio](https://ml.azure.com)** | Enterprise-grade AI service for managing end-to-end ML lifecycle for operationalizing AI models. Used for some configuration operations in our workshop (expect support to move to Azure AI Studio). | ||

| - **[Prompt Flow](https://github.com/microsoft/promptflow)** | Open-source tooling for orchestrating end-to-end development workflow (design, implementation, execution, evaluation, deployment) for modern LLM applications. | ||

|

|

||

| --- | ||

|

|

||

| ## Required Resources | ||

|

|

||

| We make use of the following resources in this lab: | ||

|

|

||

| !!!info "Azure Samples Used | **Give them a ⭐️ on GitHub**" | ||

|

|

||

| - [Contoso Chat](https://github.com/Azure-Samples/contoso-chat) - as the RAG-based AI app _we will build_. | ||

| - [Contoso Outdoors](https://github.com/Azure-Samples/contoso-web) - as the web-based app _using our AI_. | ||

|

|

||

| !!!info "Azure Resources Used | **Check out the Documentation**" | ||

|

|

||

| - [Azure AI Resource](https://learn.microsoft.com/azure/ai-studio/concepts/ai-resources) - Top-level Azure resource for AI Studio, establishes working environment. | ||

| - [Azure AI Project](https://learn.microsoft.com/azure/ai-studio/how-to/create-projects) - saves state and organizes work for AI app development. | ||

| - [Azure AI Search](https://learn.microsoft.com/azure/search/search-what-is-azure-search) - get secure information retrieval at scale over user-owned content | ||

| - [Azure OpenAI](https://learn.microsoft.com/azure/ai-services/openai/overview) - provides REST API access to OpenAI's powerful language models. | ||

| - [Azure Cosmos DB](https://learn.microsoft.com/azure/cosmos-db/) - Fully managed, distributed NoSQL & relational database for modern app development. | ||

| - [Deployment Models](https://learn.microsoft.com/azure/ai-studio/how-to/model-catalog) - Deployment from model catalog by various criteria. |

66 changes: 66 additions & 0 deletions


contoso-chat/docs/02 | Workshop/01 | Lab Overview/README.md


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,66 @@ | ||

| # 1.1 | What You'll Learn | ||

|

|

||

| This is a 60-75 minute workshop that consists of a series of lab exercises that teach you how to build a production RAG (Retrieval Augmented Generation) based LLM application using Promptflow and Azure AI Studio. | ||

|

|

||

| You'll gain hands-on experience with the various steps involved in the _end-to-end application development lifecycle_ from prompt engineering to LLM Ops. | ||

|

|

||

| --- | ||

|

|

||

| ## Learning Objectives | ||

|

|

||

| !!!info "By the end of this lab, you should be able to:" | ||

|

|

||

| 1. Explain **LLMOps** - concepts & differentiation from MLOps. | ||

| 1. Explain **Prompt Flow** - concepts & tools for building LLM Apps. | ||

| 1. Explain **Azure AI Studio** - features & functionality for streamlining E2E app development. | ||

| 1. **Design, run & evaluate** RAG apps - using the Promptflow Extension on VS Code | ||

| 1. **Deploy, test & use** RAG apps - from Azure AI Studio UI (no code experience) | ||

|

|

||

| --- | ||

|

|

||

| ## Pre-Requisites | ||

|

|

||

| !!!info "We assume you have familiarity with the following:" | ||

|

|

||

| 1. Machine Learning & Generative AI _concepts_ | ||

| 1. Python & Jupyter Notebook _programming_ | ||

| 1. Azure, GitHub & Visual Studio Code _tooling_ | ||

|

|

||

| !!!info "You will need the following to complete the lab:" | ||

|

|

||

| 1. Your own laptop (charged) with a modern browser | ||

| 1. A GitHub account with GitHub Codespaces quota. | ||

| 1. An Azure subscription with Azure OpenAI access. | ||

| 1. An Azure AI Search resource with Semantic Ranker enabled. | ||

|

|

||

| --- | ||

|

|

||

| ## Dev Environment | ||

|

|

||

| You'll make use of the following resources in this workshop: | ||

|

|

||

| !!!info "Code Samples (GitHub Repositories)" | ||

|

|

||

| - [Contoso Chat](https://github.com/Azure-Samples/contoso-chat) - source code for the RAG-based LLM app. | ||

| - [Contoso Web](https://github.com/Azure-Samples/contoso-web) - source code for the Next.js-based Web app. | ||

|

|

||

|

|

||

| !!!info "Developer Tools (local and cloud)" | ||

|

|

||

| - [Visual Studio Code](https://code.visualstudio.com/) - as the default editor | ||

| - [GitHub Codespaces](https://github.com/codespaces) - as the dev container | ||

| - [Azure AI Studio (Preview)](https://ai.azure.com) - for AI projects | ||

| - [Azure ML Studio](https://ml.azure.com) - for minor configuration | ||

| - [Azure Portal](https://portal.azure.com) - for managing Azure resources | ||

| - [Prompt Flow](https://github.com/microsoft/promptflow) - for streamlining end-to-end LLM app dev | ||

|

|

||

| !!!info "Azure Resources (Provisioned in Subscription)" | ||

|

|

||

| - [Azure AI Resource](https://learn.microsoft.com/azure/ai-studio/concepts/ai-resources) - top-level AI resource, provides hosting environment for apps | ||

| - [Azure AI Project](https://learn.microsoft.com/azure/ai-studio/how-to/create-projects) - organize work & save state for AI apps. | ||

| - [Azure AI Search](https://learn.microsoft.com/azure/search/search-create-service-portal) - full-text search, indexing & information retrieval. (product data) | ||

| - [Azure OpenAI Service](https://learn.microsoft.com/azure/ai-services/openai/overview) - chat completion & text embedding models. (chat UI, RAG) | ||

| - [Azure Cosmos DB](https://learn.microsoft.com/azure/cosmos-db/nosql/quickstart-portal) - globally-distributed multi-model database. (customer data) | ||

| - [Azure Static Web Apps](https://learn.microsoft.com/azure/static-web-apps/overview) - optional, deploy Contoso Web application. (chat integration) | ||

|

|

||

| === |
