The fastest and most memory efficient lattice Boltzmann CFD software, running on all GPUs via OpenCL. Free for non-commercial use.

Update History

- v1.0 (04.08.2022) changes (public release)

- public release

- v1.1 (29.09.2022) changes (GPU voxelization)

- added solid voxelization on GPU (slow algorithm)

- added tool to print current camera position (key G)

- minor bug fix (workaround for Intel iGPU driver bug with triangle rendering)

- v1.2 (24.10.2022) changes (force/torque computation)

- added functions to compute force/torque on objects

- added function to translate Mesh

- added Stokes drag validation setup

- v1.3 (10.11.2022) changes (minor bug fixes)

- added unit conversion functions for torque

- `FORCE_FIELD` and `VOLUME_FORCE` can now be used independently

- minor bug fix (workaround for AMD legacy driver bug with binary number literals)

- v1.4 (14.12.2022) changes (Linux graphics)

- complete rewrite of C++ graphics library to minimize API dependencies

- added interactive graphics mode on Linux with X11

- fixed streamline visualization bug in 2D

- v2.0 (09.01.2023) changes (multi-GPU upgrade)

- added (cross-vendor) multi-GPU support on a single node (PC/laptop/server)

- v2.1 (15.01.2023) changes (fast voxelization)

- made solid voxelization on GPU lightning fast (new algorithm, from minutes to milliseconds)

- v2.2 (20.01.2023) changes (velocity voxelization)

- added option to voxelize moving/rotating geometry on GPU, with automatic velocity initialization for each grid point based on center of rotation, linear velocity and rotational velocity

- cells that are converted from solid->fluid during re-voxelization now have their DDFs properly initialized

- added option to not auto-scale mesh during `read_stl(...)`, with negative `size` parameter

- added kernel for solid boundary rendering with marching-cubes

- v2.3 (30.01.2023) changes (particles)

- added particles with immersed-boundary method (either passive or 2-way-coupled, only supported with single-GPU)

- minor optimization to GPU voxelization algorithm (workgroup threads outside mesh bounding-box return after ray-mesh intersections have been found)

- displayed GPU memory allocation size is now fully accurate

- fixed bug in `write_line()` function in `src/utilities.hpp`

- removed `.exe` file extension for Linux/macOS

- v2.4 (11.03.2023) changes (UI improvements)

- added a help menu with key H that shows keyboard/mouse controls, visualization settings and simulation stats

- improvements to keyboard/mouse control (+/- for zoom, mouseclick frees/locks cursor)

- added suggestion of largest possible grid resolution if resolution is set larger than memory allows

- minor optimizations in multi-GPU communication (insignificant performance difference)

- fixed bug in temperature equilibrium function for temperature extension

- fixed erroneous double literal for Intel iGPUs in skybox color functions

- fixed bug in make.sh where multi-GPU device IDs would not get forwarded to the executable

- minor bug fixes in graphics engine (free cursor not centered during rotation, labels in VR mode)

- fixed bug in `LBM::voxelize_stl()` size parameter standard initialization

- v2.5 (11.04.2023) changes (raytracing overhaul)

- implemented light absorption in fluid for raytracing graphics (no performance impact)

- improved raytracing framerate when camera is inside fluid

- fixed skybox pole flickering artifacts

- fixed bug where moving objects during re-voxelization would leave an erroneous trail of solid grid cells behind

- v2.6 (16.04.2023) changes (Intel Arc patch)

- patched OpenCL issues of Intel Arc GPUs: now VRAM allocations >4GB are possible and correct VRAM capacity is reported

- v2.7 (29.05.2023) changes (visualization upgrade)

- added slice visualization (key 2 / key 3 modes, then switch through slice modes with key T, move slice with keys Q/E)

- made flag wireframe / solid surface visualization kernels toggleable with key 1

- added surface pressure visualization (key 1 when `FORCE_FIELD` is enabled and `lbm.calculate_force_on_boundaries();` is called)

- added binary `.vtk` export function for meshes with `lbm.write_mesh_to_vtk(Mesh* mesh);`

- added `time_step_multiplicator` for `integrate_particles()` function in PARTICLES extension

- made correction of wrong memory reporting on Intel Arc more robust

- fixed bug in `write_file()` template functions

- reverted back to separate `cl::Context` for each OpenCL device, as the shared Context otherwise would allocate extra VRAM on all other unused Nvidia GPUs

- removed Debug and x86 configurations from Visual Studio solution file (one less complication for compiling)

- fixed bug that particles could get too close to walls and get stuck, or leave the fluid phase (added boundary force)

- v2.8 (24.06.2023) changes (documentation + polish)

- finally added more documentation

- cleaned up all sample setups in `setup.cpp` for more beginner-friendliness, and added required extensions in `defines.hpp` as comments to all setups

- improved loading of composite `.stl` geometries, by adding an option to omit automatic mesh repositioning, added more functionality to `Mesh` struct in `utilities.hpp`

- added `uint3 resolution(float3 box_aspect_ratio, uint memory)` function to compute simulation box resolution based on box aspect ratio and VRAM occupation in MB

- added `bool lbm.graphics.next_frame(...)` function to export images for a specified video length in the `main_setup` compute loop

- added `VIS_...` macros to ease setting visualization modes in headless graphics mode in `lbm.graphics.visualization_modes`

- simulation box dimensions are now automatically made equally divisible by domains for multi-GPU simulations

- fixed Info/Warning/Error message formatting for loading files and made Info/Warning/Error message labels colored

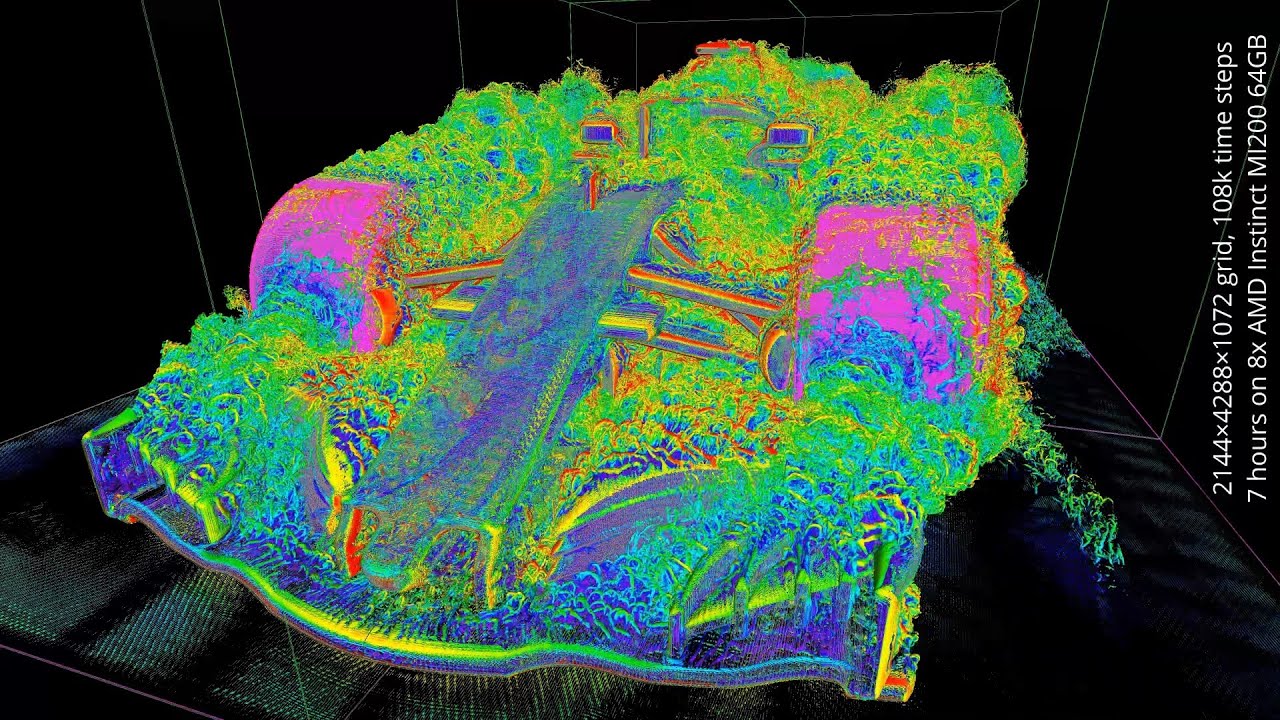

- added Ahmed body setup as an example on how body forces and drag coefficient are computed

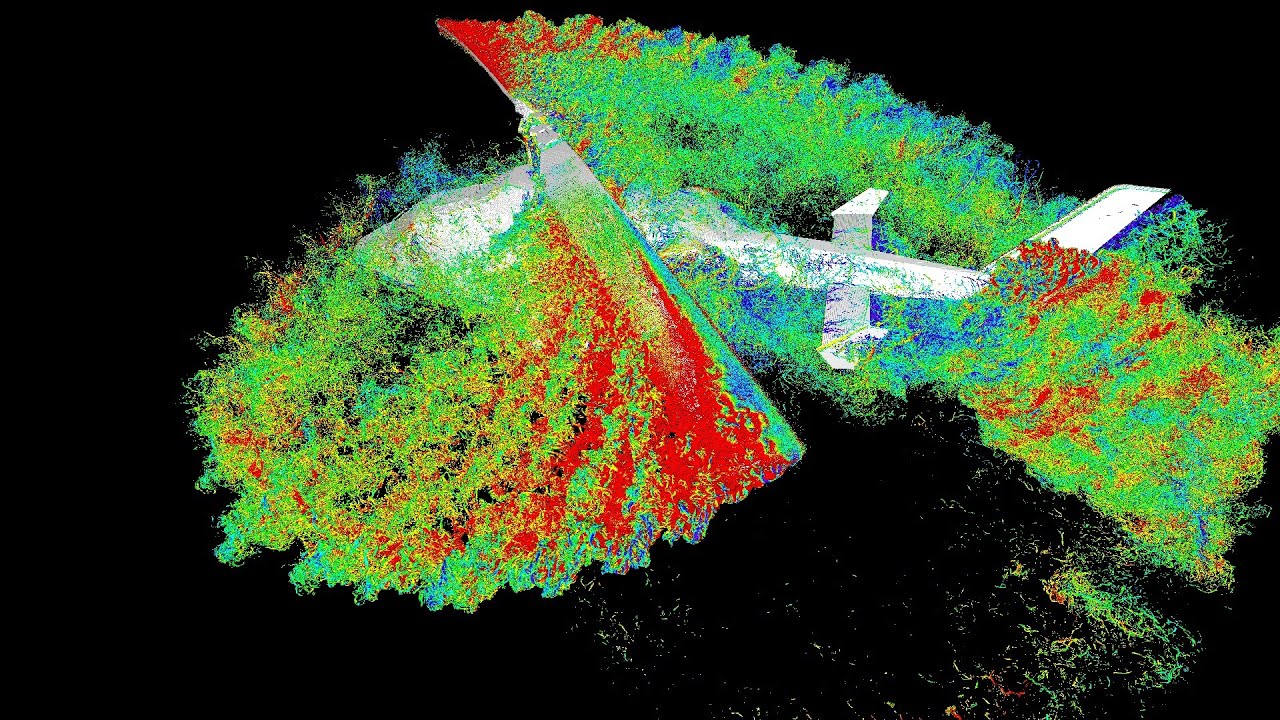

- added Cessna 172 and Bell 222 setups to showcase loading composite .stl geometries and revoxelization of moving parts

- added optional semi-transparent rendering mode (`#define GRAPHICS_TRANSPARENCY 0.7f` in `defines.hpp`)

- fixed flickering of streamline visualization in interactive graphics

- improved smooth positioning of streamlines in slice mode

- fixed bug where `mass` and `massex` in `SURFACE` extension were also allocated in CPU RAM (not required)

- fixed bug in Q-criterion rendering of halo data in multi-GPU mode, reduced gap width between domains

- removed shared memory optimization from mesh voxelization kernel, as it crashes on Nvidia GPUs with new GPU drivers and is incompatible with old OpenCL 1.0 GPUs

- fixed raytracing attenuation color when no surface is at the simulation box walls with periodic boundaries

- v2.9 (31.07.2023) changes (multithreading)

- added cross-platform `parallel_for` implementation in `utilities.hpp` using `std::thread`s

- significantly (>4x) faster simulation startup with multithreaded geometry initialization and sanity checks

- faster `calculate_force_on_object()` and `calculate_torque_on_object()` functions with multithreading

- added total runtime and LBM runtime to `lbm.write_status()`

- fixed bug in voxelization ray direction for re-voxelizing rotating objects

- fixed bug in `Mesh::get_bounding_box_size()`

- fixed bug in `print_message()` function in `utilities.hpp`

- v2.10 (05.11.2023) changes (frustum culling)

- improved rasterization performance via frustum culling when only part of the simulation box is visible

- improved switching between centered/free camera mode

- refactored OpenCL rendering library

- unit conversion factors are now automatically printed in console when `units.set_m_kg_s(...)` is used

- faster startup time for FluidX3D benchmark

- minor bug fix in `voxelize_mesh(...)` kernel

- fixed bug in `shading(...)`

- replaced slow (in multithreading) `std::rand()` function with standard C99 LCG

- more robust correction of wrong VRAM capacity reporting on Intel Arc GPUs

- fixed some minor compiler warnings

- v2.11 (07.12.2023) changes (improved Linux graphics)

- interactive graphics on Linux are now in fullscreen mode too, fully matching Windows

- made CPU/GPU buffer initialization significantly faster with `std::fill` and `enqueueFillBuffer` (overall ~8% faster simulation startup)

- added operating system info to OpenCL device driver version printout

- fixed flickering with frustum culling at very small field of view

- fixed bug where rendered/exported frame was not updated when `visualization_modes` changed

- v2.12 (18.01.2024) changes (faster startup)

- ~3x faster source code compiling on Linux using multiple CPU cores if `make` is installed

- significantly faster simulation initialization (~40% single-GPU, ~15% multi-GPU)

- minor bug fix in `Memory_Container::reset()` function

- v2.13 (11.02.2024) changes (improved .vtk export)

- data in exported `.vtk` files is now automatically converted to SI units

- ~2x faster `.vtk` export with multithreading

- added unit conversion functions for `TEMPERATURE` extension

- fixed graphical artifacts with axis-aligned camera in raytracing

- fixed `get_exe_path()` for macOS

- fixed X11 multi-monitor issues on Linux

- workaround for Nvidia driver bug: `enqueueFillBuffer` is broken for large buffers on Nvidia GPUs

- fixed slow numeric drift issues caused by `-cl-fast-relaxed-math`

- fixed wrong Maximum Allocation Size reporting in `LBM::write_status()`

- fixed missing scaling of coordinates to SI units in `LBM::write_mesh_to_vtk()`

- v2.14 (03.03.2024) changes (visualization upgrade)

- coloring can now be switched between velocity/density/temperature with key Z

- uniform improved color palettes for velocity/density/temperature visualization

- color scale with automatic unit conversion can now be shown with key H

- slice mode for field visualization now draws fully filled-in slices instead of only lines for velocity vectors

- shading in `VIS_FLAG_SURFACE` and `VIS_PHI_RASTERIZE` modes is smoother now

- `make.sh` now automatically detects operating system and X11 support on Linux and only runs FluidX3D if last compilation was successful

- fixed compiler warnings on Android

- fixed `make.sh` failing on some systems due to nonstandard interpreter path

- fixed that `make` would not compile with multiple cores on some systems

Read the FluidX3D Documentation!

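- CFD model: lattice Boltzmann method (LBM)

- streaming (part 2/2)

$$f_0^{\text{temp}}(\vec{x},t) = f_0(\vec{x},t)$$

$$f_i^{\text{temp}}(\vec{x},t) = f_{(t\%2\,?\,i\,:\,(i\%2\,?\,i+1\,:\,i-1))}(i\%2\,?\,\vec{x}\,:\,\vec{x}-\vec{e}_i,\ t) \quad \text{for } i \in [1, q-1]$$

- collision

$$\rho(\vec{x},t) = \left(\sum_i f_i^{\text{temp}}(\vec{x},t)\right) + 1$$

$$\vec{u}(\vec{x},t) = \frac{1}{\rho(\vec{x},t)} \sum_i \vec{c}_i\, f_i^{\text{temp}}(\vec{x},t)$$

$$f_i^{\text{eq-shifted}}(\vec{x},t) = w_i\, \rho \cdot \left(\frac{(\vec{u} \cdot \vec{c}_i)^2}{2c^4} - \frac{\vec{u} \cdot \vec{u}}{2c^2} + \frac{\vec{u} \cdot \vec{c}_i}{c^2}\right) + w_i\, (\rho-1)$$

$$f_i^{\text{temp}}(\vec{x},t+\Delta t) = f_i^{\text{temp}}(\vec{x},t) + \Omega_i\left(f_i^{\text{temp}}(\vec{x},t),\ f_i^{\text{eq-shifted}}(\vec{x},t),\ \tau\right)$$

- streaming (part 1/2)

$$f_0(\vec{x},t+\Delta t) = f_0^{\text{temp}}(\vec{x},t+\Delta t)$$

$$f_{(t\%2\,?\,(i\%2\,?\,i+1\,:\,i-1)\,:\,i)}(i\%2\,?\,\vec{x}+\vec{e}_i\,:\,\vec{x},\ t+\Delta t) = f_i^{\text{temp}}(\vec{x},t+\Delta t) \quad \text{for } i \in [1, q-1]$$

- variables and notation

| variable | SI units | defining equation | description |
|---|---|---|---|
| $\vec{x}$ | m | $\vec{x} = (x,y,z)^T$ | 3D position in Cartesian coordinates |
| $t$ | s | - | time |
| $\rho$ | kg∕m³ | $\rho = (\sum_i f_i)+1$ | mass density of fluid |
| $p$ | kg∕m s² | $p = c^2 \rho$ | pressure of fluid |
| $\vec{u}$ | m∕s | $\vec{u} = \frac{1}{\rho} \sum_i \vec{c}_i f_i$ | velocity of fluid |
| $\nu$ | m²∕s | $\nu = \mu/\rho$ | kinematic shear viscosity of fluid |
| $\mu$ | kg∕m s | $\mu = \rho\, \nu$ | dynamic viscosity of fluid |
| $f_i$ | kg∕m³ | - | shifted density distribution functions (DDFs) |
| $\Delta x$ | m | $\Delta x = 1$ | lattice constant (in LBM units) |
| $\Delta t$ | s | $\Delta t = 1$ | simulation time step (in LBM units) |
| $c$ | m∕s | $c = \frac{1}{\sqrt{3}} \frac{\Delta x}{\Delta t}$ | lattice speed of sound (in LBM units) |
| $i$ | 1 | $0 \le i < q$ | LBM streaming direction index |
| $q$ | 1 | $q \in \{9, 15, 19, 27\}$ | number of LBM streaming directions |
| $\vec{e}_i$ | m | D2Q9 / D3Q15/19/27 | LBM streaming directions |
| $\vec{c}_i$ | m∕s | $\vec{c}_i = \vec{e}_i/\Delta t$ | LBM streaming velocities |
| $w_i$ | 1 | $\sum_i w_i = 1$ | LBM velocity set weights |
| $\Omega_i$ | kg∕m³ | SRT or TRT | LBM collision operator |
| $\tau$ | s | $\tau = \nu/c^2 + \Delta t/2$ | LBM relaxation time |

- velocity sets: D2Q9, D3Q15, D3Q19 (default), D3Q27

- collision operators: single-relaxation-time (SRT/BGK) (default), two-relaxation-time (TRT)

- DDF-shifting and other algebraic optimization to minimize round-off error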
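The collision-step formulas above can be checked numerically. The following is a minimal sketch for D2Q9 in LBM units (Δx = Δt = 1, c² = 1/3), not FluidX3D's actual kernel code: the direction ordering and the names `feq_shifted`, `moments` and `relaxation_time` are assumptions made for illustration. It evaluates the shifted equilibrium DDFs and recovers density and velocity from them with the moment sums (note the "+1" that undoes the DDF shift).

```cpp
#include <array>
#include <cassert>
#include <cmath>

constexpr int q = 9; // D2Q9
// Direction ordering is an illustrative assumption, not necessarily FluidX3D's.
constexpr std::array<int, q> cx = {0, 1, -1, 0, 0, 1, -1, 1, -1};
constexpr std::array<int, q> cy = {0, 0, 0, 1, -1, 1, -1, -1, 1};
constexpr std::array<double, q> w = {4.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0, 1.0/9.0,
                                     1.0/36.0, 1.0/36.0, 1.0/36.0, 1.0/36.0};
constexpr double c2 = 1.0/3.0; // squared lattice speed of sound in LBM units

struct Moments { double rho, ux, uy; };

// Shifted equilibrium DDFs:
// feq_i = w_i*rho*((u.c_i)^2/(2c^4) - (u.u)/(2c^2) + (u.c_i)/c^2) + w_i*(rho-1)
std::array<double, q> feq_shifted(double rho, double ux, double uy) {
    assert(rho > 0.0);
    std::array<double, q> feq{};
    const double uu = ux*ux + uy*uy;
    for (int i = 0; i < q; i++) {
        const double uc = ux*cx[i] + uy*cy[i];
        feq[i] = w[i]*rho*(uc*uc/(2.0*c2*c2) - uu/(2.0*c2) + uc/c2) + w[i]*(rho - 1.0);
    }
    return feq;
}

// Density and velocity from shifted DDFs: rho = (sum_i f_i) + 1, u = (1/rho) sum_i c_i f_i
Moments moments(const std::array<double, q>& f) {
    double sum = 0.0, mx = 0.0, my = 0.0;
    for (int i = 0; i < q; i++) {
        sum += f[i];
        mx += cx[i]*f[i];
        my += cy[i]*f[i];
    }
    const double rho = sum + 1.0;
    return {rho, mx/rho, my/rho};
}

// Relaxation time tau = nu/c^2 + dt/2 with dt = 1
double relaxation_time(double nu) {
    return nu/c2 + 0.5;
}
```

Feeding the output of `feq_shifted(rho, ux, uy)` into `moments(...)` should return the same `rho`, `ux`, `uy` up to floating-point round-off, which is a quick sanity check on the weights and the velocity set.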

- optimized to minimize VRAM footprint to 1/6 of other LBM codes

- traditional LBM (D3Q19) with FP64 requires ~344 Bytes/cell

- 🟧🟧🟧🟧🟧🟧🟧🟧🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟨🟨🟨🟨🟨🟨🟨🟨🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥🟥

(density 🟧, velocity 🟦, flags 🟨, 2 copies of DDFs 🟩/🟥; each square = 1 Byte)

- allows for 3 Million cells per 1 GB VRAM


- FluidX3D (D3Q19) requires only 55 Bytes/cell with Esoteric-Pull+FP16

- 🟧🟧🟧🟧🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟦🟨🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩🟩

(density 🟧, velocity 🟦, flags 🟨, DDFs 🟩; each square = 1 Byte)

- allows for 19 Million cells per 1 GB VRAM

- in-place streaming with Esoteric-Pull: eliminates redundant copy `B` of density distribution functions (DDFs) in memory; almost cuts memory demand in half and slightly increases performance due to implicit bounce-back boundaries; offers optimal memory access patterns for single-cell in-place streaming

- decoupled arithmetic precision (FP32) and memory precision (FP32 or FP16S or FP16C): all arithmetic is done in FP32 for compatibility on all hardware, but DDFs in memory can be compressed to FP16S or FP16C: almost cuts memory demand in half again and almost doubles performance, without impacting overall accuracy for most setups

- only 8 flag bits per lattice point (can be used independently / at the same time)

- `TYPE_S` (stationary or moving) solid boundaries

- `TYPE_E` equilibrium boundaries (inflow/outflow)

- `TYPE_T` temperature boundaries

- `TYPE_F` free surface (fluid)

- `TYPE_I` free surface (interface)

- `TYPE_G` free surface (gas)

- `TYPE_X` remaining for custom use or further extensions

- `TYPE_Y` remaining for custom use or further extensions
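To illustrate how eight independent flag bits fit into a single byte per lattice point, here is a small sketch. The flag names come from the list above; the concrete bit positions and the helpers `has_flag` and `set_flag` are assumptions for illustration and may differ from the definitions in the FluidX3D sources.

```cpp
#include <cassert>
#include <cstdint>

// One bit per flag; the bit positions are an illustrative assumption.
constexpr std::uint8_t TYPE_S = 0b00000001; // (stationary or moving) solid boundary
constexpr std::uint8_t TYPE_E = 0b00000010; // equilibrium boundary (inflow/outflow)
constexpr std::uint8_t TYPE_T = 0b00000100; // temperature boundary
constexpr std::uint8_t TYPE_F = 0b00001000; // free surface (fluid)
constexpr std::uint8_t TYPE_I = 0b00010000; // free surface (interface)
constexpr std::uint8_t TYPE_G = 0b00100000; // free surface (gas)
constexpr std::uint8_t TYPE_X = 0b01000000; // custom use
constexpr std::uint8_t TYPE_Y = 0b10000000; // custom use

// The eight flags together occupy exactly one byte.
static_assert((TYPE_S | TYPE_E | TYPE_T | TYPE_F | TYPE_I | TYPE_G | TYPE_X | TYPE_Y) == 0xFF,
              "flags must cover all 8 bits without overlap");

// Test whether any bit of 'type' is set in 'flags'.
constexpr bool has_flag(std::uint8_t flags, std::uint8_t type) {
    return (flags & type) != 0;
}

// Return 'flags' with the bits of 'type' additionally set.
inline std::uint8_t set_flag(std::uint8_t flags, std::uint8_t type) {
    assert(type != 0); // must name at least one flag bit
    return static_cast<std::uint8_t>(flags | type);
}
```

Because each flag has its own bit, a cell can for example be a solid boundary and a temperature boundary at the same time: `set_flag(TYPE_S, TYPE_T)`.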

- large cost saving: comparison of maximum single-GPU grid resolution for D3Q19 LBM

| GPU VRAM capacity | 1 GB | 2 GB | 3 GB | 4 GB | 6 GB | 8 GB | 10 GB | 11 GB | 12 GB | 16 GB | 20 GB | 24 GB | 32 GB | 40 GB | 48 GB | 64 GB | 80 GB | 94 GB | 128 GB | 192 GB | 256 GB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| approximate GPU price | \$25<br>GT 210 | \$25<br>GTX 950 | \$12<br>GTX 1060 | \$50<br>GT 730 | \$35<br>GTX 1060 | \$70<br>RX 470 | \$500<br>RTX 3080 | \$240<br>GTX 1080 Ti | \$75<br>Tesla M40 | \$75<br>Instinct MI25 | \$900<br>RX 7900 XT | \$205<br>Tesla P40 | \$600<br>Instinct MI60 | \$5500<br>A100 | \$2400<br>RTX 8000 | \$10k<br>Instinct MI210 | \$11k<br>A100 | >\$40k<br>H100 NVL | ?<br>GPU Max 1550 | ~\$10k<br>MI300X | - |
| traditional LBM (FP64) | 144³ | 182³ | 208³ | 230³ | 262³ | 288³ | 312³ | 322³ | 330³ | 364³ | 392³ | 418³ | 460³ | 494³ | 526³ | 578³ | 624³ | 658³ | 730³ | 836³ | 920³ |
| FluidX3D (FP32/FP32) | 224³ | 282³ | 322³ | 354³ | 406³ | 448³ | 482³ | 498³ | 512³ | 564³ | 608³ | 646³ | 710³ | 766³ | 814³ | 896³ | 966³ | 1018³ | 1130³ | 1292³ | 1422³ |
| FluidX3D (FP32/FP16) | 266³ | 336³ | 384³ | 424³ | 484³ | 534³ | 574³ | 594³ | 610³ | 672³ | 724³ | 770³ | 848³ | 912³ | 970³ | 1068³ | 1150³ | 1214³ | 1346³ | 1540³ | 1624³ |
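The Bytes/cell figures and the resolution table can be related with a few lines of arithmetic. The sketch below is not FluidX3D code; `bytes_per_cell` and `max_cubic_resolution` are hypothetical helpers. It adds up density (1 value), velocity (3 values), flags and DDFs per cell, then searches for the largest cubic grid that fits. Two conventions are assumptions: that "1 GB" in the table means 1000 × 2²⁰ Bytes, and that side lengths are rounded down to even numbers. They are inferred only from the fact that they reproduce the table entries checked below.

```cpp
#include <cassert>
#include <cstdint>

// Bytes per lattice cell: density (1 value) + velocity (3 values) + flags + DDFs.
constexpr std::uint64_t bytes_per_cell(unsigned q, unsigned field_bytes, unsigned flag_bytes,
                                       unsigned ddf_bytes, unsigned ddf_copies) {
    return 4ull*field_bytes + flag_bytes
         + static_cast<std::uint64_t>(q)*ddf_bytes*ddf_copies;
}

// Largest even N such that an N^3 grid fits into 'vram_mb' MB (assumed 2^20 Bytes each)
// and does not exceed 2^32 lattice points per domain.
inline std::uint32_t max_cubic_resolution(std::uint64_t vram_mb, std::uint64_t bytes) {
    assert(bytes > 0);
    const std::uint64_t capacity = vram_mb*1048576ull;
    const std::uint64_t max_cells = 1ull << 32;
    std::uint32_t n = 0;
    while (true) {
        const std::uint64_t m = n + 2ull;  // next even candidate
        const std::uint64_t cells = m*m*m;
        if (cells > max_cells || cells*bytes > capacity) break;
        n += 2;
    }
    return n;
}
```

With D3Q19 this gives 344 Bytes/cell for FP64 with two DDF copies and 8-Byte flags, 93 Bytes/cell for FP32/FP32 and 55 Bytes/cell for FP32/FP16 with a single DDF copy, for example `bytes_per_cell(19, 4, 1, 2, 1)` for the FP16 case.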

- cross-vendor multi-GPU support on a single PC/laptop/server

- domain decomposition allows pooling VRAM from multiple GPUs for much larger grid resolution

- each domain (GPU) can hold up to 4.29 billion (2³², 1624³) lattice points (225 GB memory)

- GPUs don't have to be identical (not even from the same vendor), but similar VRAM capacity/bandwidth is recommended

- domain communication architecture (simplified)

      ++   .-----------------------------------------------------------------.   ++
      ++   |                              GPU 0                              |   ++
      ++   |                          LBM Domain 0                           |   ++
      ++   '-----------------------------------------------------------------'   ++
      ++              |                 selective                /|\             ++
      ++             \|/               in-VRAM copy               |              ++
      ++        .-------------------------------------------------------.        ++
      ++        |               GPU 0 - Transfer Buffer 0               |        ++
      ++        '-------------------------------------------------------'        ++
      !!                            |     PCIe     /|\                           !!
      !!                           \|/    copy      |                            !!
      @@        .-------------------------.   .-------------------------.        @@
      @@        | CPU - Transfer Buffer 0 |   | CPU - Transfer Buffer 1 |        @@
      @@        '-------------------------'\ /'-------------------------'        @@
      @@                           pointer  X   swap                             @@
      @@        .-------------------------./ \.-------------------------.        @@
      @@        | CPU - Transfer Buffer 1 |   | CPU - Transfer Buffer 0 |        @@
      @@        '-------------------------'   '-------------------------'        @@
      !!                           /|\    PCIe      |                            !!
      !!                            |     copy     \|/                           !!
      ++        .-------------------------------------------------------.        ++
      ++        |               GPU 1 - Transfer Buffer 1               |        ++
      ++        '-------------------------------------------------------'        ++
      ++             /|\               selective                  |              ++
      ++              |                in-VRAM copy              \|/             ++
      ++   .-----------------------------------------------------------------.   ++
      ++   |                              GPU 1                              |   ++
      ++   |                          LBM Domain 1                           |   ++
      ++   '-----------------------------------------------------------------'   ++
      ##                                    |                                    ##
      ##                     domain synchronization barrier                      ##
      ##                                    |                                    ##
      ||   -------------------------------------------------------------> time   ||

- domain communication architecture (detailed)

      ++   .-----------------------------------------------------------------.   ++
      ++   |                              GPU 0                              |   ++
      ++   |                          LBM Domain 0                           |   ++
      ++   '-----------------------------------------------------------------'   ++
      ++     |  selective in-  /|\ |  selective in-  /|\ |  selective in-  /|\   ++
      ++    \|/ VRAM copy (X)   | \|/ VRAM copy (Y)   | \|/ VRAM copy (Z)   |    ++
      ++   .---------------------.---------------------.---------------------.   ++
      ++   |    GPU 0 - TB 0X+   |    GPU 0 - TB 0Y+   |    GPU 0 - TB 0Z+   |   ++
      ++   |    GPU 0 - TB 0X-   |    GPU 0 - TB 0Y-   |    GPU 0 - TB 0Z-   |   ++
      ++   '---------------------'---------------------'---------------------'   ++
      !!          | PCIe /|\            | PCIe /|\            | PCIe /|\         !!
      !!         \|/ copy |            \|/ copy |            \|/ copy |          !!
      @@   .---------. .---------.---------. .---------.---------. .---------.   @@
      @@   | CPU 0X+ | | CPU 1X- | CPU 0Y+ | | CPU 3Y- | CPU 0Z+ | | CPU 5Z- |   @@
      @@   | CPU 0X- | | CPU 2X+ | CPU 0Y- | | CPU 4Y+ | CPU 0Z- | | CPU 6Z+ |   @@
      @@   '---------\ /---------'---------\ /---------'---------\ /---------'   @@
      @@   pointer    X   swap (X) pointer  X   swap (Y) pointer  X   swap (Z)   @@
      @@   .---------/ \---------.---------/ \---------.---------/ \---------.   @@
      @@   | CPU 1X- | | CPU 0X+ | CPU 3Y- | | CPU 0Y+ | CPU 5Z- | | CPU 0Z+ |   @@
      @@   | CPU 2X+ | | CPU 0X- | CPU 4Y+ | | CPU 0Y- | CPU 6Z+ | | CPU 0Z- |   @@
      @@   '---------' '---------'---------' '---------'---------' '---------'   @@
      !!         /|\ PCIe |            /|\ PCIe |            /|\ PCIe |          !!
      !!          | copy \|/            | copy \|/            | copy \|/         !!
      ++   .--------------------..---------------------..--------------------.   ++
      ++   |   GPU 1 - TB 1X-   ||    GPU 3 - TB 3Y-   ||   GPU 5 - TB 5Z-   |   ++
      ++   :====================::=====================::====================:   ++
      ++   |   GPU 2 - TB 2X+   ||    GPU 4 - TB 4Y+   ||   GPU 6 - TB 6Z+   |   ++
      ++   '--------------------''---------------------''--------------------'   ++
      ++    /|\ selective in-   | /|\ selective in-   | /|\ selective in-   |    ++
      ++     |  VRAM copy (X)  \|/ |  VRAM copy (Y)  \|/ |  VRAM copy (Z)  \|/   ++
      ++   .--------------------..---------------------..--------------------.   ++
      ++   |        GPU 1       ||        GPU 3        ||        GPU 5       |   ++
      ++   |    LBM Domain 1    ||    LBM Domain 3     ||    LBM Domain 5    |   ++
      ++   :====================::=====================::====================:   ++
      ++   |        GPU 2       ||        GPU 4        ||        GPU 6       |   ++
      ++   |    LBM Domain 2    ||    LBM Domain 4     ||    LBM Domain 6    |   ++
      ++   '--------------------''---------------------''--------------------'   ++
      ##              |                     |                     |              ##
      ##              |      domain synchronization barriers      |              ##
      ##              |                     |                     |              ##
      ||   -------------------------------------------------------------> time   ||
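The "pointer swap" step in the diagrams can be pictured with a small host-side sketch. This is not the FluidX3D implementation; `Domain` and `swap_transfer_buffers` are hypothetical stand-ins that only show the idea: after each GPU has copied its outgoing halo data over PCIe into its CPU transfer buffer, neighbouring domains exchange buffer pointers instead of copying the bytes a second time.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for one domain's CPU-side transfer buffer.
struct Domain {
    std::vector<float> storage; // owns the host memory
    float* transfer_buffer;     // pointer used as source/target of the PCIe copies

    Domain(std::size_t n, float fill) : storage(n, fill), transfer_buffer(storage.data()) {}
    Domain(const Domain&) = delete;            // copying would leave the pointer dangling
    Domain& operator=(const Domain&) = delete;
};

// Exchange halo data between two neighbouring domains by swapping pointers,
// so that no bytes are moved on the CPU.
inline void swap_transfer_buffers(Domain& a, Domain& b) {
    assert(a.storage.size() == b.storage.size());
    std::swap(a.transfer_buffer, b.transfer_buffer);
}
```

After the swap, each domain's next PCIe copy to its GPU reads the data its neighbour wrote, which matches the crossed arrows in the diagrams.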

- peak performance on GPUs (datacenter/gaming/professional/laptop)

- powerful model extensions

- boundary types

- stationary mid-grid bounce-back boundaries (stationary solid boundaries)

- moving mid-grid bounce-back boundaries (moving solid boundaries)

- equilibrium boundaries (non-reflective inflow/outflow)

- temperature boundaries (fixed temperature)

- global force per volume (Guo forcing), can be modified on-the-fly

- local force per volume (force field)

- optional computation of forces from the fluid on solid boundaries

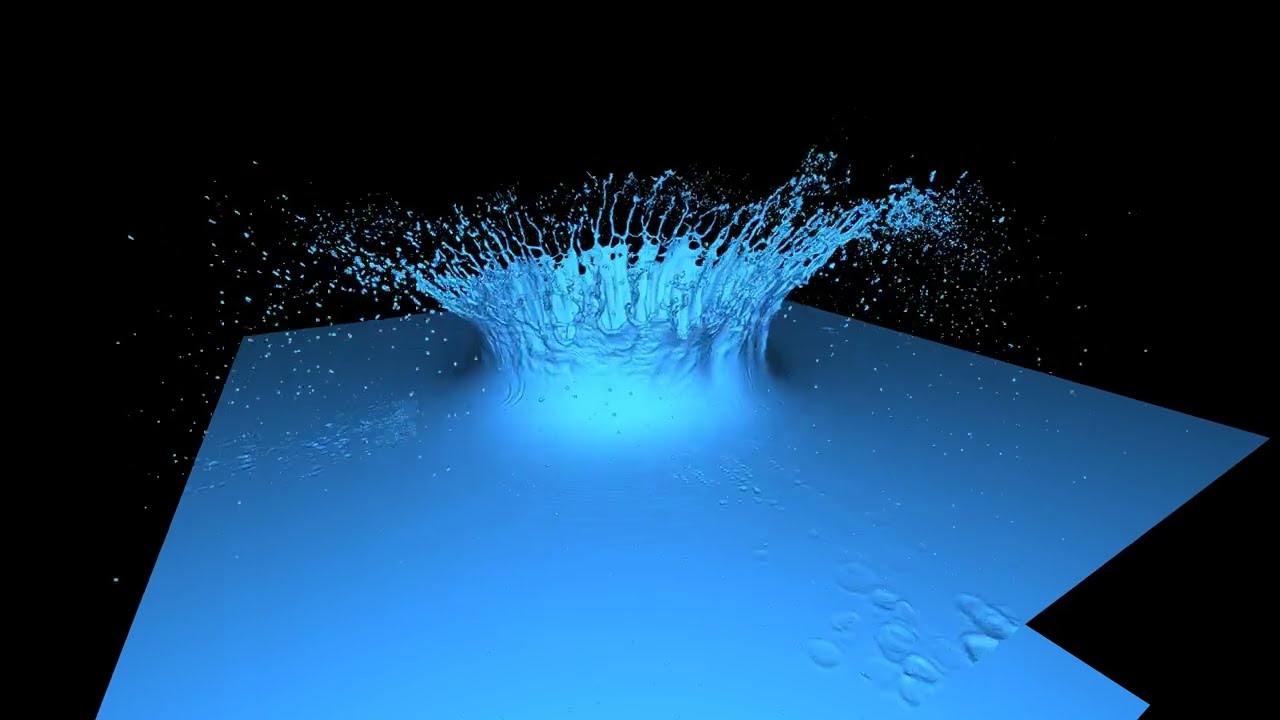

- state-of-the-art free surface LBM (FSLBM) implementation:

- volume-of-fluid model

- fully analytic PLIC for efficient curvature calculation

- improved mass conservation

- ultra efficient implementation with only 4 kernels in addition to the `stream_collide()` kernel

- thermal LBM to simulate thermal convection

- D3Q7 subgrid for thermal DDFs

- in-place streaming with Esoteric-Pull for thermal DDFs

- optional FP16S or FP16C compression for thermal DDFs with DDF-shifting

- Smagorinsky-Lilly subgrid turbulence LES model to keep simulations with very large Reynolds number stable

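$$\Pi_{\alpha\beta} = \sum_i e_{i\alpha}\, e_{i\beta}\, \left(f_i - f_i^{\text{eq-shifted}}\right)$$

$$Q = \sum_{\alpha\beta} \Pi_{\alpha\beta}^2$$

$$\tau = \frac{1}{2} \left(\tau_0 + \sqrt{\tau_0^2 + \frac{16\sqrt{2}}{3\pi^2}\, \frac{\sqrt{Q}}{\rho}}\,\right)$$

- particles with immersed-boundary method (either passive or 2-way-coupled, single-GPU only)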
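As a worked instance of the Smagorinsky-Lilly relaxation time formula from the LES item above, the sketch below evaluates τ from τ₀, Q and ρ. The function name `smagorinsky_tau` is an assumption for illustration, and computing Q from the DDFs is left out.

```cpp
#include <cassert>
#include <cmath>

// Effective relaxation time:
// tau = 1/2 * (tau0 + sqrt(tau0^2 + (16*sqrt(2))/(3*pi^2) * sqrt(Q)/rho))
inline double smagorinsky_tau(double tau0, double Q, double rho) {
    assert(Q >= 0.0 && rho > 0.0);
    const double pi = 3.14159265358979323846;
    const double k = 16.0*std::sqrt(2.0)/(3.0*pi*pi);
    return 0.5*(tau0 + std::sqrt(tau0*tau0 + k*std::sqrt(Q)/rho));
}
```

For Q = 0 (no non-equilibrium stress) the expression reduces to τ = τ₀, and τ grows with Q, which is what adds the extra eddy viscosity in strongly sheared regions.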


- FluidX3D can do simulations so large that storing the volumetric data for later rendering becomes unmanageable (like 120GB for a single frame, hundreds of TeraByte for a video)

- instead, FluidX3D allows rendering raw simulation data directly in VRAM, so no large volumetric files have to be exported to the hard disk (see my technical talk)

- the rendering is so fast that it works interactively in real time for both rasterization and raytracing

- rasterization and raytracing are done in OpenCL and work on all GPUs, even the ones without RTX/DXR raytracing cores or without any rendering hardware at all (like A100, MI200, ...)

- if no monitor is available (like on a remote Linux server), there is an ASCII rendering mode to interactively visualize the simulation in the terminal (even in WSL and/or through SSH)

- rendering is fully multi-GPU-parallelized via seamless domain decomposition rasterization

- with interactive graphics mode disabled, image resolution can be as large as VRAM allows for (4K/8K/16K and above)

- (interactive) visualization modes:

- flag wireframe / solid surface (and force vectors on solid cells or surface pressure if the extension is used)

- velocity field (with slice mode)

- streamlines (with slice mode)

- velocity-colored Q-criterion isosurface

- rasterized free surface with marching-cubes

- raytraced free surface with fast ray-grid traversal and marching-cubes, either 1-4 rays/pixel or 1-10 rays/pixel

- FluidX3D is written in OpenCL 1.2, so it runs on any hardware from any vendor (Nvidia, AMD, Intel, ...):

- world's fastest datacenter GPUs, like H100, A100, MI250(X), MI210, MI100, V100(S), P100, ...

- gaming GPUs (desktop or laptop), like Nvidia GeForce, AMD Radeon, Intel Arc

- professional/workstation GPUs, like Nvidia Quadro, AMD Radeon Pro / FirePro

- integrated GPUs

- Intel Xeon Phi (requires installation of Intel OpenCL CPU Runtime (Repo))

- Intel/AMD CPUs (requires installation of Intel OpenCL CPU Runtime (Repo))

- even smartphone ARM GPUs

- native cross-vendor multi-GPU implementation

- uses PCIe communication, so no SLI/Crossfire/NVLink/InfinityFabric required

- single-node parallelization, so no MPI installation required

- GPUs don't even have to be from the same vendor, but similar memory capacity and bandwidth are recommended

- works on Windows and Linux with C++17, with limited support also for macOS and Android

- supports importing and voxelizing triangle meshes from binary `.stl` files, with fast GPU voxelization

- supports exporting volumetric data as binary `.vtk` files

- supports exporting triangle meshes as binary `.vtk` files

- supports exporting rendered images as `.png`/`.qoi`/`.bmp` files; encoding runs in parallel on the CPU while the simulation on GPU can continue without delay

Here are performance benchmarks on various hardware in MLUPs/s (million lattice cell updates per second). The settings used for the benchmark are D3Q19 SRT with no extensions enabled (only LBM with implicit mid-grid bounce-back boundaries), and the setup consists of an empty cubic box of sufficient size (typically 256³). Without extensions, a single lattice cell requires:

- a memory capacity of 93 (FP32/FP32) or 55 (FP32/FP16) Bytes

- a memory bandwidth of 153 (FP32/FP32) or 77 (FP32/FP16) Bytes per time step

- 363 (FP32/FP32) or 406 (FP32/FP16S) or 1275 (FP32/FP16C) FLOPs per time step (FP32+INT32 operations counted combined)

In consequence, the arithmetic intensity of this implementation is 2.37 (FP32/FP32) or 5.27 (FP32/FP16S) or 16.56 (FP32/FP16C) FLOPs/Byte. So performance is limited only by memory bandwidth. The left 3 columns of the table show the hardware specs as found in the data sheets (theoretical peak FP32 compute performance, memory capacity, theoretical peak memory bandwidth). The right 3 columns show the measured FluidX3D performance for the FP32/FP32, FP32/FP16S and FP32/FP16C floating-point precision settings, with the roofline model efficiency in round brackets, indicating what percentage of the theoretical peak memory bandwidth is being used.
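The arithmetic intensity and the bracketed efficiency values follow directly from the per-cell numbers above. Here is a minimal sketch, with the hypothetical helper names `arithmetic_intensity`, `roofline_efficiency` and `efficiency_percent`: efficiency is taken as measured MLUPs/s times Bytes moved per cell and time step, divided by the data-sheet bandwidth.

```cpp
#include <cassert>
#include <cmath>

// FLOPs per Byte of memory traffic for one cell update.
inline double arithmetic_intensity(double flops_per_step, double bytes_per_step) {
    assert(bytes_per_step > 0.0);
    return flops_per_step/bytes_per_step;
}

// Fraction of theoretical peak memory bandwidth in use:
// (MLUPs/s * 1e6 * Bytes per cell and step) / (GB/s * 1e9)
inline double roofline_efficiency(double mlups, double bytes_per_step, double bandwidth_gb_s) {
    assert(bandwidth_gb_s > 0.0);
    return mlups*1.0e6*bytes_per_step/(bandwidth_gb_s*1.0e9);
}

// Same value, rounded to whole percent as shown in the table.
inline long efficiency_percent(double mlups, double bytes_per_step, double bandwidth_gb_s) {
    return std::lround(100.0*roofline_efficiency(mlups, bytes_per_step, bandwidth_gb_s));
}
```

For the GeForce RTX 4090 row (1008 GB/s), 5624 MLUPs/s at 153 Bytes per step and 11091 MLUPs/s at 77 Bytes per step both come out at 85%, matching the table.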

If your GPU/CPU is not on the list yet, you can report your benchmarks here.

Colors: 🔴 AMD, 🔵 Intel, 🟢 Nvidia, ⚪ Apple, 🟡 ARM, 🟤 Glenfly

| Device | FP32 [TFlops/s] | Mem [GB] | BW [GB/s] | FP32/FP32 [MLUPs/s] | FP32/FP16S [MLUPs/s] | FP32/FP16C [MLUPs/s] |
|---|---|---|---|---|---|---|

| 🔴 Instinct MI250 (1 GCD) | 45.26 | 64 | 1638 | 5638 (53%) | 9030 (42%) | 8506 (40%) |

| 🔴 Instinct MI210 | 45.26 | 64 | 1638 | 6517 (61%) | 9547 (45%) | 8829 (41%) |

| 🔴 Instinct MI100 | 46.14 | 32 | 1228 | 5093 (63%) | 8133 (51%) | 8542 (54%) |

| 🔴 Instinct MI60 | 14.75 | 32 | 1024 | 3570 (53%) | 5047 (38%) | 5111 (38%) |

| 🔴 Radeon VII | 13.83 | 16 | 1024 | 4898 (73%) | 7778 (58%) | 5256 (40%) |

| 🔵 Data Center GPU Max 1100 | 22.22 | 48 | 1229 | 3487 (43%) | 6209 (39%) | 3252 (20%) |

| 🟢 H100 PCIe 80GB | 51.01 | 80 | 2000 | 11128 (85%) | 20624 (79%) | 13862 (53%) |

| 🟢 A100 SXM4 80GB | 19.49 | 80 | 2039 | 10228 (77%) | 18448 (70%) | 11197 (42%) |

| 🟢 A100 PCIe 80GB | 19.49 | 80 | 1935 | 9657 (76%) | 17896 (71%) | 10817 (43%) |

| 🟢 PG506-243 / PG506-242 | 22.14 | 64 | 1638 | 8195 (77%) | 15654 (74%) | 12271 (58%) |

| 🟢 A100 SXM4 40GB | 19.49 | 40 | 1555 | 8522 (84%) | 16013 (79%) | 11251 (56%) |

| 🟢 A100 PCIe 40GB | 19.49 | 40 | 1555 | 8526 (84%) | 16035 (79%) | 11088 (55%) |

| 🟢 CMP 170HX | 6.32 | 8 | 1493 | 7684 (79%) | 12392 (64%) | 6859 (35%) |

| 🟢 A30 | 10.32 | 24 | 933 | 5004 (82%) | 9721 (80%) | 5726 (47%) |

| 🟢 Tesla V100 SXM2 32GB | 15.67 | 32 | 900 | 4471 (76%) | 8947 (77%) | 7217 (62%) |

| 🟢 Tesla V100 PCIe 16GB | 14.13 | 16 | 900 | 5128 (87%) | 10325 (88%) | 7683 (66%) |

| 🟢 Quadro GV100 | 16.66 | 32 | 870 | 3442 (61%) | 6641 (59%) | 5863 (52%) |

| 🟢 Titan V | 14.90 | 12 | 653 | 3601 (84%) | 7253 (86%) | 6957 (82%) |

| 🟢 Tesla P100 16GB | 9.52 | 16 | 732 | 3295 (69%) | 5950 (63%) | 4176 (44%) |

| 🟢 Tesla P100 12GB | 9.52 | 12 | 549 | 2427 (68%) | 4141 (58%) | 3999 (56%) |

| 🟢 GeForce GTX TITAN | 4.71 | 6 | 288 | 1460 (77%) | 2500 (67%) | 1113 (30%) |

| 🟢 Tesla K40m | 4.29 | 12 | 288 | 1131 (60%) | 1868 (50%) | 912 (24%) |

| 🟢 Tesla K80 (1 GPU) | 4.11 | 12 | 240 | 916 (58%) | 1642 (53%) | 943 (30%) |

| 🟢 Tesla K20c | 3.52 | 5 | 208 | 861 (63%) | 1507 (56%) | 720 (27%) |

| 🔴 Radeon RX 7900 XTX | 61.44 | 24 | 960 | 3665 (58%) | 7644 (61%) | 7716 (62%) |

| 🔴 Radeon PRO W7900 | 61.30 | 48 | 864 | 3107 (55%) | 5939 (53%) | 5780 (52%) |

| 🔴 Radeon RX 7900 XT | 51.61 | 20 | 800 | 3013 (58%) | 5856 (56%) | 5986 (58%) |

| 🔴 Radeon PRO W7800 | 45.20 | 32 | 576 | 1872 (50%) | 4426 (59%) | 4145 (55%) |

| 🔴 Radeon RX 7600 | 21.75 | 8 | 288 | 1250 (66%) | 2561 (68%) | 2512 (67%) |

| 🔴 Radeon RX 6900 XT | 23.04 | 16 | 512 | 1968 (59%) | 4227 (64%) | 4207 (63%) |

| 🔴 Radeon RX 6800 XT | 20.74 | 16 | 512 | 2008 (60%) | 4241 (64%) | 4224 (64%) |

| 🔴 Radeon PRO W6800 | 17.83 | 32 | 512 | 1620 (48%) | 3361 (51%) | 3180 (48%) |

| 🔴 Radeon RX 6700 XT | 13.21 | 12 | 384 | 1408 (56%) | 2883 (58%) | 2908 (58%) |

| 🔴 Radeon RX 6800M | 11.78 | 12 | 384 | 1439 (57%) | 3190 (64%) | 3213 (64%) |

| 🔴 Radeon RX 6700M | 10.60 | 10 | 320 | 1194 (57%) | 2388 (57%) | 2429 (58%) |

| 🔴 Radeon RX 5700 XT | 9.75 | 8 | 448 | 1368 (47%) | 3253 (56%) | 3049 (52%) |

| 🔴 Radeon RX 5600 XT | 6.73 | 6 | 288 | 1136 (60%) | 2214 (59%) | 2148 (57%) |

| 🔴 Radeon RX Vega 64 | 13.35 | 8 | 484 | 1875 (59%) | 2878 (46%) | 3227 (51%) |

| 🔴 Radeon RX 580 4GB | 6.50 | 4 | 256 | 946 (57%) | 1848 (56%) | 1577 (47%) |

| 🔴 Radeon R9 390X | 5.91 | 8 | 384 | 1733 (69%) | 2217 (44%) | 1722 (35%) |

| 🔴 Radeon HD 7850 | 1.84 | 2 | 154 | 112 (11%) | 120 ( 6%) | 635 (32%) |

| 🔵 Arc A770 LE | 19.66 | 16 | 560 | 2663 (73%) | 4568 (63%) | 4519 (62%) |

| 🔵 Arc A750 LE | 17.20 | 8 | 512 | 2555 (76%) | 4314 (65%) | 4047 (61%) |

| 🔵 Arc A580 | 12.29 | 8 | 512 | 2534 (76%) | 3889 (58%) | 3488 (52%) |

| 🔵 Arc A380 | 4.20 | 6 | 186 | 622 (51%) | 1097 (45%) | 1115 (46%) |

| 🟢 GeForce RTX 4090 | 82.58 | 24 | 1008 | 5624 (85%) | 11091 (85%) | 11496 (88%) |

| 🟢 RTX 6000 Ada | 91.10 | 48 | 960 | 4997 (80%) | 10249 (82%) | 10293 (83%) |

| 🟢 L40S | 91.61 | 48 | 864 | 3788 (67%) | 7637 (68%) | 7617 (68%) |

| 🟢 GeForce RTX 4080 | 55.45 | 16 | 717 | 3914 (84%) | 7626 (82%) | 7933 (85%) |

| 🟢 GeForce RTX 4070 Ti Super | 44.10 | 16 | 672 | 3694 (84%) | 6435 (74%) | 7295 (84%) |

| 🟢 GeForce RTX 4070 | 29.15 | 12 | 504 | 2646 (80%) | 4548 (69%) | 5016 (77%) |

| 🟢 GeForce RTX 4080M | 33.85 | 12 | 432 | 2577 (91%) | 5086 (91%) | 5114 (91%) |

| 🟢 GeForce RTX 3090 Ti | 40.00 | 24 | 1008 | 5717 (87%) | 10956 (84%) | 10400 (79%) |

| 🟢 GeForce RTX 3090 | 39.05 | 24 | 936 | 5418 (89%) | 10732 (88%) | 10215 (84%) |

| 🟢 GeForce RTX 3080 Ti | 37.17 | 12 | 912 | 5202 (87%) | 9832 (87%) | 9347 (79%) |

| 🟢 GeForce RTX 3080 12GB | 32.26 | 12 | 912 | 5071 (85%) | 9657 (81%) | 8615 (73%) |

| 🟢 RTX A6000 | 40.00 | 48 | 768 | 4421 (88%) | 8814 (88%) | 8533 (86%) |

| 🟢 GeForce RTX 3080 10GB | 29.77 | 10 | 760 | 4230 (85%) | 8118 (82%) | 7714 (78%) |

| 🟢 GeForce RTX 3070 | 20.31 | 8 | 448 | 2578 (88%) | 5096 (88%) | 5060 (87%) |

| 🟢 GeForce RTX 3060 Ti | 16.49 | 8 | 448 | 2644 (90%) | 5129 (88%) | 4718 (81%) |

| 🟢 RTX A4000 | 19.17 | 16 | 448 | 2500 (85%) | 4945 (85%) | 4664 (80%) |

| 🟢 RTX A5000M | 16.59 | 16 | 448 | 2228 (76%) | 4461 (77%) | 3662 (63%) |

| 🟢 GeForce RTX 3060 | 13.17 | 12 | 360 | 2108 (90%) | 4070 (87%) | 3566 (76%) |

| 🟢 GeForce RTX 3060M | 10.94 | 6 | 336 | 2019 (92%) | 4012 (92%) | 3572 (82%) |

| 🟢 GeForce RTX 3050M Ti | 7.60 | 4 | 192 | 1181 (94%) | 2341 (94%) | 2253 (90%) |

| 🟢 GeForce RTX 3050M | 7.13 | 4 | 192 | 1180 (94%) | 2339 (94%) | 2016 (81%) |

| 🟢 Titan RTX | 16.31 | 24 | 672 | 3471 (79%) | 7456 (85%) | 7554 (87%) |

| 🟢 Quadro RTX 6000 | 16.31 | 24 | 672 | 3307 (75%) | 6836 (78%) | 6879 (79%) |

| 🟢 Quadro RTX 8000 Passive | 14.93 | 48 | 624 | 2591 (64%) | 5408 (67%) | 5607 (69%) |

| 🟢 GeForce RTX 2080 Ti | 13.45 | 11 | 616 | 3194 (79%) | 6700 (84%) | 6853 (86%) |

| 🟢 GeForce RTX 2080 Super | 11.34 | 8 | 496 | 2434 (75%) | 5284 (82%) | 5087 (79%) |

| 🟢 Quadro RTX 5000 | 11.15 | 16 | 448 | 2341 (80%) | 4766 (82%) | 4773 (82%) |

| 🟢 GeForce RTX 2060 Super | 7.18 | 8 | 448 | 2503 (85%) | 5035 (87%) | 4463 (77%) |

| 🟢 Quadro RTX 4000 | 7.12 | 8 | 416 | 2284 (84%) | 4584 (85%) | 4062 (75%) |

| 🟢 GeForce RTX 2060 KO | 6.74 | 6 | 336 | 1643 (75%) | 3376 (77%) | 3266 (75%) |

| 🟢 GeForce RTX 2060 | 6.74 | 6 | 336 | 1681 (77%) | 3604 (83%) | 3571 (82%) |

| 🟢 GeForce GTX 1660 Super | 5.03 | 6 | 336 | 1696 (77%) | 3551 (81%) | 3040 (70%) |

| 🟢 Tesla T4 | 8.14 | 15 | 300 | 1356 (69%) | 2869 (74%) | 2887 (74%) |

| 🟢 GeForce GTX 1660 Ti | 5.48 | 6 | 288 | 1467 (78%) | 3041 (81%) | 3019 (81%) |

| 🟢 GeForce GTX 1660 | 5.07 | 6 | 192 | 1016 (81%) | 1924 (77%) | 1992 (80%) |

| 🟢 GeForce GTX 1650M 896C | 2.72 | 4 | 192 | 963 (77%) | 1836 (74%) | 1858 (75%) |

| 🟢 GeForce GTX 1650M 1024C | 3.20 | 4 | 128 | 706 (84%) | 1214 (73%) | 1400 (84%) |

| 🟢 T500 | 3.04 | 4 | 80 | 339 (65%) | 578 (56%) | 665 (64%) |

| 🟢 Titan Xp | 12.15 | 12 | 548 | 2919 (82%) | 5495 (77%) | 5375 (76%) |

| 🟢 GeForce GTX 1080 Ti | 12.06 | 11 | 484 | 2631 (83%) | 4837 (77%) | 4877 (78%) |

| 🟢 GeForce GTX 1080 | 9.78 | 8 | 320 | 1623 (78%) | 3100 (75%) | 3182 (77%) |

| 🟢 GeForce GTX 1060 6GB | 4.57 | 6 | 192 | 997 (79%) | 1925 (77%) | 1785 (72%) |

| 🟢 GeForce GTX 1060M | 4.44 | 6 | 192 | 983 (78%) | 1882 (75%) | 1803 (72%) |

| 🟢 GeForce GTX 1050M Ti | 2.49 | 4 | 112 | 631 (86%) | 1224 (84%) | 1115 (77%) |

| 🟢 Quadro P1000 | 1.89 | 4 | 82 | 426 (79%) | 839 (79%) | 778 (73%) |

| 🟢 GeForce GTX 970 | 4.17 | 4 | 224 | 980 (67%) | 1721 (59%) | 1623 (56%) |

| 🟢 Quadro M4000 | 2.57 | 8 | 192 | 899 (72%) | 1519 (61%) | 1050 (42%) |

| 🟢 Tesla M60 (1 GPU) | 4.82 | 8 | 160 | 853 (82%) | 1571 (76%) | 1557 (75%) |

| 🟢 GeForce GTX 960M | 1.51 | 4 | 80 | 442 (84%) | 872 (84%) | 627 (60%) |

| 🟢 GeForce GTX 770 | 3.33 | 2 | 224 | 800 (55%) | 1215 (42%) | 876 (30%) |

| 🟢 GeForce GTX 680 4GB | 3.33 | 4 | 192 | 783 (62%) | 1274 (51%) | 814 (33%) |

| 🟢 Quadro K2000 | 0.73 | 2 | 64 | 312 (75%) | 444 (53%) | 171 (21%) |

| 🟢 GeForce GT 630 (OEM) | 0.46 | 2 | 29 | 151 (81%) | 185 (50%) | 78 (21%) |

| 🟢 Quadro NVS 290 | 0.03 | 0.256 | 6 | 1 ( 2%) | 1 ( 1%) | 1 ( 1%) |

| 🟤 Arise 1020 | 1.50 | 2 | 19 | 6 ( 5%) | 6 ( 2%) | 6 ( 2%) |

| ⚪ M2 Max GPU 38CU 32GB | 9.73 | 22 | 400 | 2405 (92%) | 4641 (89%) | 2444 (47%) |

| ⚪ M1 Ultra GPU 64CU 128GB | 16.38 | 98 | 800 | 4519 (86%) | 8418 (81%) | 6915 (67%) |

| ⚪ M1 Max GPU 24CU 32GB | 6.14 | 22 | 400 | 2369 (91%) | 4496 (87%) | 2777 (53%) |

| ⚪ M1 Pro GPU 16CU 16GB | 4.10 | 11 | 200 | 1204 (92%) | 2329 (90%) | 1855 (71%) |

| ⚪ M1 GPU 8CU 16GB | 2.05 | 11 | 68 | 384 (86%) | 758 (85%) | 759 (86%) |

| 🔴 Radeon 780M (Z1 Extreme) | 8.29 | 8 | 102 | 443 (66%) | 860 (65%) | 820 (62%) |

| 🔴 Radeon Vega 8 (4750G) | 2.15 | 27 | 57 | 263 (71%) | 511 (70%) | 501 (68%) |

| 🔴 Radeon Vega 8 (3500U) | 1.23 | 7 | 38 | 157 (63%) | 282 (57%) | 288 (58%) |

| 🔵 Iris Xe Graphics (i7-1265U) | 1.92 | 13 | 77 | 342 (68%) | 621 (62%) | 574 (58%) |

| 🔵 UHD Graphics Xe 32EUs | 0.74 | 25 | 51 | 128 (38%) | 245 (37%) | 216 (32%) |

| 🔵 UHD Graphics 770 | 0.82 | 30 | 90 | 342 (58%) | 475 (41%) | 278 (24%) |

| 🔵 UHD Graphics 630 | 0.46 | 7 | 51 | 151 (45%) | 301 (45%) | 187 (28%) |

| 🔵 UHD Graphics P630 | 0.46 | 51 | 42 | 177 (65%) | 288 (53%) | 137 (25%) |

| 🔵 HD Graphics 5500 | 0.35 | 3 | 26 | 75 (45%) | 192 (58%) | 108 (32%) |

| 🔵 HD Graphics 4600 | 0.38 | 2 | 26 | 105 (63%) | 115 (35%) | 34 (10%) |

| 🟡 Mali-G610 MP4 (Orange Pi 5 Plus) | 0.06 | 16 | 34 | 43 (19%) | 59 (13%) | 19 ( 4%) |

| 🟡 Mali-G72 MP18 (Samsung S9+) | 0.24 | 4 | 29 | 14 ( 7%) | 17 ( 5%) | 12 ( 3%) |

| 🟡 Qualcomm Adreno 530 (LG G6) | 0.33 | 2 | 30 | 1 ( 1%) | 1 ( 0%) | 1 ( 0%) |

| 🔴 2x EPYC 9654 | 29.49 | 1536 | 922 | 1381 (23%) | 1814 (15%) | 1801 (15%) |

| 🔵 2x Xeon CPU Max 9480 | 13.62 | 256 | 614 | 2037 (51%) | 1520 (19%) | 1464 (18%) |

| 🔵 2x Xeon Platinum 8480+ | 14.34 | 512 | 614 | 2162 (54%) | 1845 (23%) | 1884 (24%) |

| 🔵 2x Xeon Platinum 8380 | 11.78 | 2048 | 410 | 1410 (53%) | 1159 (22%) | 1298 (24%) |

| 🔵 2x Xeon Platinum 8358 | 10.65 | 256 | 410 | 1285 (48%) | 1007 (19%) | 1120 (21%) |

| 🔵 1x Xeon Platinum 8358 | 5.33 | 128 | 205 | 444 (33%) | 463 (17%) | 534 (20%) |

| 🔵 2x Xeon Platinum 8256 | 1.95 | 1536 | 282 | 396 (22%) | 158 ( 4%) | 175 ( 5%) |

| 🔵 2x Xeon Platinum 8153 | 4.10 | 384 | 256 | 691 (41%) | 290 ( 9%) | 328 (10%) |

| 🔵 2x Xeon Gold 6128 | 2.61 | 192 | 256 | 254 (15%) | 185 ( 6%) | 193 ( 6%) |

| 🔵 Xeon Phi 7210 | 5.32 | 192 | 102 | 415 (62%) | 193 (15%) | 223 (17%) |

| 🔵 4x Xeon E5-4620 v4 | 2.69 | 512 | 273 | 460 (26%) | 275 ( 8%) | 239 ( 7%) |

| 🔵 2x Xeon E5-2630 v4 | 1.41 | 64 | 137 | 264 (30%) | 146 ( 8%) | 129 ( 7%) |

| 🔵 2x Xeon E5-2623 v4 | 0.67 | 64 | 137 | 125 (14%) | 66 ( 4%) | 59 ( 3%) |

| 🔵 2x Xeon E5-2680 v3 | 1.92 | 64 | 137 | 209 (23%) | 305 (17%) | 281 (16%) |

| 🔵 Core i7-13700K | 2.51 | 64 | 90 | 481 (82%) | 374 (32%) | 373 (32%) |

| 🔵 Core i7-1265U | 1.23 | 32 | 77 | 128 (26%) | 62 ( 6%) | 58 ( 6%) |

| 🔵 Core i9-11900KB | 0.84 | 32 | 51 | 109 (33%) | 195 (29%) | 208 (31%) |

| 🔵 Core i9-10980XE | 3.23 | 128 | 94 | 286 (47%) | 251 (21%) | 223 (18%) |

| 🔵 Core i5-9600 | 0.60 | 16 | 43 | 146 (52%) | 127 (23%) | 147 (27%) |

| 🔵 Core i7-8700K | 0.71 | 16 | 51 | 152 (45%) | 134 (20%) | 116 (17%) |

| 🔵 Xeon E-2176G | 0.71 | 64 | 42 | 201 (74%) | 136 (25%) | 148 (27%) |

| 🔵 Core i7-7700HQ | 0.36 | 12 | 38 | 81 (32%) | 82 (16%) | 108 (22%) |

| 🔵 Core i7-4770 | 0.44 | 16 | 26 | 104 (62%) | 69 (21%) | 59 (18%) |

| 🔵 Core i7-4720HQ | 0.33 | 16 | 26 | 58 (35%) | 13 ( 4%) | 47 (14%) |

Multi-GPU benchmarks are done at the largest possible grid resolution with cubic domains, and either 2x1x1, 2x2x1 or 2x2x2 of these domains together. The percentages in round brackets are single-GPU roofline model efficiency, and the multipliers in round brackets are scaling factors relative to benchmarked single-GPU performance.

Colors: 🔴 AMD, 🔵 Intel, 🟢 Nvidia, ⚪ Apple, 🟡 ARM, 🟤 Glenfly

| Device | FP32 [TFlops/s] | Mem [GB] | BW [GB/s] | FP32/FP32 [MLUPs/s] | FP32/FP16S [MLUPs/s] | FP32/FP16C [MLUPs/s] |

|---|---|---|---|---|---|---|

| 🔴 1x Instinct MI250 (1 GCD) | 45.26 | 64 | 1638 | 5638 (53%) | 9030 (42%) | 8506 (40%) |

| 🔴 1x Instinct MI250 (2 GCD) | 90.52 | 128 | 3277 | 9460 (1.7x) | 14313 (1.6x) | 17338 (2.0x) |

| 🔴 2x Instinct MI250 (4 GCD) | 181.04 | 256 | 6554 | 16925 (3.0x) | 29163 (3.2x) | 29627 (3.5x) |

| 🔴 4x Instinct MI250 (8 GCD) | 362.08 | 512 | 13107 | 27350 (4.9x) | 52258 (5.8x) | 53521 (6.3x) |

| 🔴 1x Instinct MI210 | 45.26 | 64 | 1638 | 6347 (59%) | 8486 (40%) | 9105 (43%) |

| 🔴 2x Instinct MI210 | 90.52 | 128 | 3277 | 7245 (1.1x) | 12050 (1.4x) | 13539 (1.5x) |

| 🔴 4x Instinct MI210 | 181.04 | 256 | 6554 | 8816 (1.4x) | 17232 (2.0x) | 16892 (1.9x) |

| 🔴 8x Instinct MI210 | 362.08 | 512 | 13107 | 13546 (2.1x) | 27996 (3.3x) | 27820 (3.1x) |

| 🔴 16x Instinct MI210 | 724.16 | 1024 | 26214 | 18094 (2.9x) | 37360 (4.4x) | 37922 (4.2x) |

| 🔴 24x Instinct MI210 | 1086.24 | 1536 | 39322 | 22056 (3.5x) | 45033 (5.3x) | 44631 (4.9x) |

| 🔴 32x Instinct MI210 | 1448.32 | 2048 | 52429 | 23881 (3.8x) | 50952 (6.0x) | 48848 (5.4x) |

| 🔴 1x Radeon VII | 13.83 | 16 | 1024 | 4898 (73%) | 7778 (58%) | 5256 (40%) |

| 🔴 2x Radeon VII | 27.66 | 32 | 2048 | 8113 (1.7x) | 15591 (2.0x) | 10352 (2.0x) |

| 🔴 4x Radeon VII | 55.32 | 64 | 4096 | 12911 (2.6x) | 24273 (3.1x) | 17080 (3.2x) |

| 🔴 8x Radeon VII | 110.64 | 128 | 8192 | 21946 (4.5x) | 30826 (4.0x) | 24572 (4.7x) |

| 🔵 1x DC GPU Max 1100 | 22.22 | 48 | 1229 | 3487 (43%) | 6209 (39%) | 3252 (20%) |

| 🔵 2x DC GPU Max 1100 | 44.44 | 96 | 2458 | 6301 (1.8x) | 11815 (1.9x) | 5970 (1.8x) |

| 🔵 4x DC GPU Max 1100 | 88.88 | 192 | 4915 | 12162 (3.5x) | 22777 (3.7x) | 11759 (3.6x) |

| 🟢 1x A100 PCIe 80GB | 19.49 | 80 | 1935 | 9657 (76%) | 17896 (71%) | 10817 (43%) |

| 🟢 2x A100 PCIe 80GB | 38.98 | 160 | 3870 | 15742 (1.6x) | 27165 (1.5x) | 17510 (1.6x) |

| 🟢 4x A100 PCIe 80GB | 77.96 | 320 | 7740 | 25957 (2.7x) | 52056 (2.9x) | 33283 (3.1x) |

| 🟢 1x PG506-243 / PG506-242 | 22.14 | 64 | 1638 | 8195 (77%) | 15654 (74%) | 12271 (58%) |

| 🟢 2x PG506-243 / PG506-242 | 44.28 | 128 | 3277 | 13885 (1.7x) | 24168 (1.5x) | 20906 (1.7x) |

| 🟢 4x PG506-243 / PG506-242 | 88.57 | 256 | 6554 | 23097 (2.8x) | 41088 (2.6x) | 36130 (2.9x) |

| 🟢 1x A100 SXM4 40GB | 19.49 | 40 | 1555 | 8543 (84%) | 15917 (79%) | 8748 (43%) |

| 🟢 2x A100 SXM4 40GB | 38.98 | 80 | 3110 | 14311 (1.7x) | 23707 (1.5x) | 15512 (1.8x) |

| 🟢 4x A100 SXM4 40GB | 77.96 | 160 | 6220 | 23411 (2.7x) | 42400 (2.7x) | 29017 (3.3x) |

| 🟢 8x A100 SXM4 40GB | 155.92 | 320 | 12440 | 37619 (4.4x) | 72965 (4.6x) | 63009 (7.2x) |

| 🟢 1x A100 SXM4 40GB | 19.49 | 40 | 1555 | 8522 (84%) | 16013 (79%) | 11251 (56%) |

| 🟢 2x A100 SXM4 40GB | 38.98 | 80 | 3110 | 13629 (1.6x) | 24620 (1.5x) | 18850 (1.7x) |

| 🟢 4x A100 SXM4 40GB | 77.96 | 160 | 6220 | 17978 (2.1x) | 30604 (1.9x) | 30627 (2.7x) |

| 🟢 1x Tesla V100 SXM2 32GB | 15.67 | 32 | 900 | 4471 (76%) | 8947 (77%) | 7217 (62%) |

| 🟢 2x Tesla V100 SXM2 32GB | 31.34 | 64 | 1800 | 7953 (1.8x) | 15469 (1.7x) | 12932 (1.8x) |

| 🟢 4x Tesla V100 SXM2 32GB | 62.68 | 128 | 3600 | 13135 (2.9x) | 26527 (3.0x) | 22686 (3.1x) |

| 🟢 1x Tesla K40m | 4.29 | 12 | 288 | 1131 (60%) | 1868 (50%) | 912 (24%) |

| 🟢 2x Tesla K40m | 8.58 | 24 | 577 | 1971 (1.7x) | 3300 (1.8x) | 1801 (2.0x) |

| 🟢 3x K40m + 1x Titan Xp | 17.16 | 48 | 1154 | 3117 (2.8x) | 5174 (2.8x) | 3127 (3.4x) |

| 🟢 1x Tesla K80 (1 GPU) | 4.11 | 12 | 240 | 916 (58%) | 1642 (53%) | 943 (30%) |

| 🟢 1x Tesla K80 (2 GPU) | 8.22 | 24 | 480 | 2086 (2.3x) | 3448 (2.1x) | 2174 (2.3x) |

| 🟢 1x RTX A6000 | 40.00 | 48 | 768 | 4421 (88%) | 8814 (88%) | 8533 (86%) |

| 🟢 2x RTX A6000 | 80.00 | 96 | 1536 | 8041 (1.8x) | 15026 (1.7x) | 14795 (1.7x) |

| 🟢 4x RTX A6000 | 160.00 | 192 | 3072 | 14314 (3.2x) | 27915 (3.2x) | 27227 (3.2x) |

| 🟢 8x RTX A6000 | 320.00 | 384 | 6144 | 19311 (4.4x) | 40063 (4.5x) | 39004 (4.6x) |

| 🟢 1x Quadro RTX 8000 Pa. | 14.93 | 48 | 624 | 2591 (64%) | 5408 (67%) | 5607 (69%) |

| 🟢 2x Quadro RTX 8000 Pa. | 29.86 | 96 | 1248 | 4767 (1.8x) | 9607 (1.8x) | 10214 (1.8x) |

| 🟢 1x GeForce RTX 2080 Ti | 13.45 | 11 | 616 | 3194 (79%) | 6700 (84%) | 6853 (86%) |

| 🟢 2x GeForce RTX 2080 Ti | 26.90 | 22 | 1232 | 5085 (1.6x) | 10770 (1.6x) | 10922 (1.6x) |

| 🟢 4x GeForce RTX 2080 Ti | 53.80 | 44 | 2464 | 9117 (2.9x) | 18415 (2.7x) | 18598 (2.7x) |

| 🟢 7x 2080 Ti + 1x A100 40GB | 107.60 | 88 | 4928 | 16146 (5.1x) | 33732 (5.0x) | 33857 (4.9x) |

| 🔵 1x A770 + 🟢 1x Titan Xp | 24.30 | 24 | 1095 | 4717 (1.7x) | 8380 (1.7x) | 8026 (1.6x) |

- What physical model does FluidX3D use?

FluidX3D implements the lattice Boltzmann method, a type of direct numerical simulation (DNS), the most accurate type of fluid simulation, but also the most computationally challenging. Optional extension models include volume force (Guo forcing), free surface (volume-of-fluid and PLIC), a temperature model and a Smagorinsky-Lilly subgrid turbulence model.

- FluidX3D only uses FP32 or even FP32/FP16, in contrast to FP64. Are simulation results physically accurate?

Yes, in all but extreme edge cases. The code has been specially optimized to minimize arithmetic round-off errors and make the most out of lower precision. With these optimizations, accuracy in most cases is indistinguishable from FP64 double-precision, even with FP32/FP16 mixed-precision. Details can be found in the paper on 64-bit, 32-bit and customized 16-bit number formats (Phys. Rev. E 106, 015308) listed in the references.

- Why is the domain size limited to 2³² grid points?

The 32-bit unsigned integer grid index will overflow above this number. Using 64-bit index calculation would slow the simulation down by ~20%, as 64-bit uint is calculated on special function units and not the regular GPU cores. 2³² grid points with FP32/FP16 mixed-precision is equivalent to 225GB memory and single GPUs currently are only at 128GB, so it should be fine for a while to come. For higher resolutions above the single-domain limit, use multiple domains (typically 1 per GPU, but multiple domains on the same GPU also work).
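The overflow can be illustrated with a minimal C++ sketch. The linear index formula `x + (y + z*Ny)*Nx` and both function names are assumptions made for illustration, not code taken from FluidX3D.

```cpp
#include <cstdint>

// Hypothetical linear grid index with 32-bit unsigned arithmetic.
// Unsigned overflow wraps around modulo 2^32 instead of failing.
uint32_t linear_index(uint32_t x, uint32_t y, uint32_t z, uint32_t Nx, uint32_t Ny) {
    return x + (y + z * Ny) * Nx;
}

// True if every cell of an Nx*Ny*Nz grid has a unique 32-bit index.
// The product is evaluated in 64 bits so the check itself cannot wrap
// for realistic grid sizes.
bool fits_in_32_bit_index(uint64_t Nx, uint64_t Ny, uint64_t Nz) {
    return Nx * Ny * Nz <= (1ull << 32);
}
```

A 2048x2048x1024 grid has exactly 2³² cells and still fits. With one more layer in z, the first cell of that layer wraps around to index 2²² and collides with an existing cell.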

- Compared to the benchmark numbers stated here, efficiency seems much lower but performance is slightly better for most devices. How can this be?

In that paper, the One-Step-Pull swap algorithm is implemented, using only misaligned reads and coalesced writes. On almost all GPUs, the performance penalty for misaligned writes is much larger than for misaligned reads, and sometimes there is almost no penalty for misaligned reads at all. Because of this, One-Step-Pull runs at peak bandwidth and thus peak efficiency.

Here, a different swap algorithm termed Esoteric-Pull is used, a type of in-place streaming. This makes the LBM require much less memory (93 vs. 169 (FP32/FP32) or 55 vs. 93 (FP32/FP16) Bytes/cell for D3Q19), and also less memory bandwidth (153 vs. 171 (FP32/FP32) or 77 vs. 95 (FP32/FP16) Bytes/cell per time step for D3Q19) due to so-called implicit bounce-back boundaries. However, memory access is now half coalesced and half misaligned for both reads and writes, so memory access efficiency is lower. For overall performance, these two effects approximately cancel out. The benefit of Esoteric-Pull, being able to simulate domains twice as large with the same amount of memory, clearly outweighs the cost of slightly lower memory access efficiency, especially since performance is not reduced overall.
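The efficiency percentages in the benchmark tables appear consistent with dividing the memory traffic implied by the measured MLUPs/s by the peak bandwidth of the device. The following C++ sketch shows that arithmetic using the Esoteric-Pull Bytes/cell values quoted above. The function names are hypothetical, and the formula is an assumption inferred from the table values rather than taken from the source code.

```cpp
#include <cmath>

// Bytes moved per cell and time step for D3Q19 with Esoteric-Pull (values quoted above).
constexpr double bytes_per_cell_fp32 = 153.0; // FP32/FP32
constexpr double bytes_per_cell_fp16 = 77.0;  // FP32/FP16S and FP32/FP16C

// Fraction of the peak memory bandwidth accounted for by the measured performance.
// mlups: million lattice updates per second, bandwidth_gb_s: peak bandwidth in GB/s.
double roofline_efficiency(double mlups, double bandwidth_gb_s, double bytes_per_cell) {
    const double used_gb_s = mlups * 1.0E6 * bytes_per_cell / 1.0E9;
    return used_gb_s / bandwidth_gb_s;
}

// Same value rounded to whole percent, as printed in the tables.
long roofline_percent(double mlups, double bandwidth_gb_s, double bytes_per_cell) {
    return std::lround(100.0 * roofline_efficiency(mlups, bandwidth_gb_s, bytes_per_cell));
}

// Multi-GPU scaling factor relative to the single-GPU benchmark.
double scaling_factor(double multi_gpu_mlups, double single_gpu_mlups) {
    return multi_gpu_mlups / single_gpu_mlups;
}
```

For the GeForce RTX 3070 row (2578 MLUPs/s in FP32/FP32 at 448 GB/s), `roofline_percent(2578.0, 448.0, bytes_per_cell_fp32)` gives 88, matching the table. For 2x A100 PCIe 80GB, `scaling_factor(15742.0, 9657.0)` is about 1.63, which the table lists as 1.6x.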

- Why don't you use CUDA? Wouldn't that be more efficient?

No, that is a myth. OpenCL is exactly as efficient as CUDA on Nvidia GPUs if optimized properly. Here I did a roofline model analysis of OpenCL performance on various hardware. OpenCL efficiency on modern Nvidia GPUs can be 100% with the right memory access pattern, so CUDA can't possibly be any more efficient. Without any performance advantage, there is no reason to use proprietary CUDA over OpenCL, since OpenCL is compatible with a lot more hardware.

- Why no multi-relaxation-time (MRT) collision operator?

The idea of MRT is to linearly transform the DDFs into "moment space" by matrix multiplication and relax these moments individually, promising better stability and accuracy. In practice, in the vast majority of cases, it has zero or even negative effects on stability and accuracy, and simple SRT is much superior. Apart from the kinematic shear viscosity and conserved terms, the remaining moments are non-physical quantities and their tuning is a black box. Although MRT can be implemented in an efficient manner with only a single matrix-vector multiplication in registers, leading to identical performance compared to SRT by remaining bandwidth-bound, storing the matrices vastly elongates and over-complicates the code for no real benefit.

- Can FluidX3D run on multiple GPUs at the same time?

Yes. The simulation grid is then split into domains, one for each GPU (domain decomposition method). The GPUs essentially pool their memory, enabling much larger grid resolution and higher performance. Rendering is parallelized across multiple GPUs as well; each GPU renders its own domain with a 3D offset, then rendered frames from all GPUs are overlaid with their z-buffers. Communication between domains is done over PCIe, so no SLI/Crossfire/NVLink/InfinityFabric is required. All GPUs must however be installed in the same node (PC/laptop/server). Even unholy combinations of Nvidia/AMD/Intel GPUs will work, although it is recommended to only use GPUs with similar memory capacity and bandwidth together. Using a fast gaming GPU and slow integrated GPU together would only decrease performance due to communication overhead.
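As a rough illustration of the domain decomposition and the per-domain 3D offset, the following C++ sketch splits a global grid evenly into `D.x * D.y * D.z` domains. The struct, the function names, the x-fastest domain numbering and the omission of any halo layers are all assumptions made for this example, not FluidX3D's actual interface.

```cpp
#include <cstdint>
#include <stdexcept>

struct Vec3u { uint32_t x, y, z; };

// Size of one domain when the global grid N is split evenly into D.x*D.y*D.z domains.
Vec3u domain_size(Vec3u N, Vec3u D) {
    if (D.x == 0u || D.y == 0u || D.z == 0u) throw std::invalid_argument("domain count must be >= 1");
    if (N.x % D.x != 0u || N.y % D.y != 0u || N.z % D.z != 0u) throw std::invalid_argument("grid must divide evenly");
    return Vec3u{N.x / D.x, N.y / D.y, N.z / D.z};
}

// Offset of domain number d in global grid cells, with domains numbered x-fastest.
Vec3u domain_offset(uint32_t d, Vec3u N, Vec3u D) {
    const Vec3u s = domain_size(N, D);
    const uint32_t dx = d % D.x;
    const uint32_t dy = (d / D.x) % D.y;
    const uint32_t dz = d / (D.x * D.y);
    return Vec3u{dx * s.x, dy * s.y, dz * s.z};
}
```

For a 512x512x256 grid on 2x2x1 domains, each domain is a 256³ cube, and domain 3 starts at cell (256, 256, 0).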

- I'm on a budget and have only a cheap computer. Can I run FluidX3D on my toaster PC/laptop?

Absolutely. Today even the most inexpensive hardware, like integrated GPUs or entry-level gaming GPUs, supports OpenCL. You might be a bit more limited on memory capacity and grid resolution, but you should be good to go. I've tested FluidX3D on very old and inexpensive hardware and even on my Samsung S9+ smartphone, and it runs just fine, although admittedly a bit slower.

- I don't have an expensive workstation GPU, but only a gaming GPU. Will performance suffer?

No. Efficiency on gaming GPUs is exactly as good as on their "professional"/workstation counterparts. Performance often is even better as gaming GPUs have higher boost clocks.

- Do I need a GPU with ECC memory?

No. Gaming GPUs work just fine. Some Nvidia GPUs automatically reduce memory clocks for compute applications to almost entirely eliminate memory errors.

- My GPU does not support CUDA. Can I still use FluidX3D?

Yes. FluidX3D uses OpenCL 1.2 and not CUDA, so it runs on any GPU from any vendor since around 2012.

- I don't have a dedicated graphics card at all. Can I still run FluidX3D on my PC/laptop?

Yes. FluidX3D also runs on all integrated GPUs since around 2012, and also on CPUs.

- I need more memory than my GPU can offer. Can I run FluidX3D on my CPU as well?

Yes. You only need to install the Intel OpenCL CPU Runtime.

- In the benchmarks you list some very expensive hardware. How do you get access to that?

As a PhD candidate in computational physics, I used FluidX3D for my research, so I had access to BZHPC, SuperMUC-NG and JSC JURECA-DC supercomputers.

- I don't have an RTX/DXR GPU that supports raytracing. Can I still use raytracing graphics in FluidX3D?

Yes, and at full performance. FluidX3D does not use a bounding volume hierarchy (BVH) to accelerate raytracing, but fast ray-grid traversal instead, implemented directly in OpenCL C. This is much faster than BVH for moving isosurfaces in the LBM grid (~N vs. ~N²+log(N) runtime; LBM itself is ~N³), and it does not require any dedicated raytracing hardware. Raytracing in FluidX3D runs on any GPU that supports OpenCL 1.2.
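To show why ray-grid traversal scales with ~N per ray, here is a minimal C++ sketch of a standard 3D DDA traversal in the style of Amanatides and Woo. It is a generic textbook technique swapped in for illustration, not FluidX3D's OpenCL C implementation. The function name, the unit-size cells and the requirement that the ray origin lies inside the grid are assumptions.

```cpp
#include <array>
#include <cmath>
#include <limits>
#include <vector>

// Returns the cells of an N*N*N grid of unit cubes that a ray passes through,
// in visiting order, starting from the cell containing the origin.
std::vector<std::array<int, 3>> traverse_grid(const std::array<double, 3>& origin,
                                              const std::array<double, 3>& dir, int N) {
    const double inf = std::numeric_limits<double>::infinity();
    std::array<int, 3> cell{}, step{};
    std::array<double, 3> t_max{}, t_delta{};
    for (int i = 0; i < 3; i++) {
        cell[i] = static_cast<int>(std::floor(origin[i]));
        step[i] = dir[i] > 0.0 ? 1 : (dir[i] < 0.0 ? -1 : 0);
        if (step[i] != 0) {
            // ray parameter t at which the next cell boundary on this axis is crossed
            const double boundary = cell[i] + (step[i] > 0 ? 1.0 : 0.0);
            t_max[i] = (boundary - origin[i]) / dir[i];
            t_delta[i] = 1.0 / std::fabs(dir[i]); // t needed to cross one full cell
        } else {
            t_max[i] = inf;
            t_delta[i] = inf;
        }
    }
    std::vector<std::array<int, 3>> visited;
    auto inside = [N](const std::array<int, 3>& c) {
        return c[0] >= 0 && c[0] < N && c[1] >= 0 && c[1] < N && c[2] >= 0 && c[2] < N;
    };
    while (inside(cell)) {
        visited.push_back(cell);
        int a = 0; // axis whose boundary is crossed next
        if (t_max[1] < t_max[a]) a = 1;
        if (t_max[2] < t_max[a]) a = 2;
        if (step[a] == 0) break; // zero direction vector: ray never leaves its cell
        cell[a] += step[a];
        t_max[a] += t_delta[a];
    }
    return visited;
}
```

Each loop iteration advances exactly one cell along one axis, so a ray visits at most about 3N cells before leaving the grid, and no acceleration structure has to be rebuilt when the isosurface moves.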

- I have a datacenter/mining GPU without any video output or graphics hardware. Can FluidX3D still render simulation results?

Yes. FluidX3D does all rendering (rasterization and raytracing) in OpenCL C, so no display output and no graphics features like OpenGL/Vulkan/DirectX are required. Rendering is just another form of compute after all. Rendered frames are passed to the CPU over PCIe and then the CPU can either draw them on screen through dedicated/integrated graphics or write them to the hard drive.

- I'm running FluidX3D on a remote (super-)computer and only have an SSH terminal. Can I still use graphics somehow?

Yes, either directly as interactive ASCII graphics in the terminal or by storing rendered frames on the hard drive and then copying them over via `scp -r user@server.url:"~/path/to/images/folder" .`.

- I want to learn about programming/software/physics/engineering. Can I use FluidX3D for free?

Yes. Anyone can use FluidX3D for free for public research, education or personal use. Use by scientists, students and hobbyists is free of charge and strongly encouraged.

- I am a scientist/teacher with a paid position at a public institution. Can I use FluidX3D for my research/teaching?

Yes, you can use FluidX3D free of charge. This is considered research/education, not commercial use. To give credit, the references listed below should be cited. If you publish data/results generated by altered source versions, the altered source code must be published as well.

- I work at a company in CFD/consulting/R&D or related fields. Can I use FluidX3D commercially?

No. Commercial use is not allowed with the current license.

- Is FluidX3D open-source?

No. "Open-source" as a technical term is defined as freely available without any restriction on use, but I am not comfortable with that. I have written FluidX3D in my spare time and no one should milk it for profits while I remain uncompensated, especially considering what other CFD software sells for. The technical term for the type of license I chose is "source-available no-cost non-commercial". The source code is freely available, and you are free to use, to alter and to redistribute it, as long as you do not sell it or make a profit from derived products/services, and as long as you do not use it for any military purposes (see the license for details).

- Will FluidX3D at some point be available with a commercial license?

Maybe I will add the option for a second, commercial license later on. If you are interested in commercial use, let me know. For non-commercial use in science and education, FluidX3D is and will always be free.

- OpenCL-Headers for GPU parallelization (Khronos Group)

- Win32 API for interactive graphics in Windows (Microsoft)

- X11/Xlib for interactive graphics in Linux (The Open Group)

- marching-cubes tables for isosurface generation on GPU (Paul Bourke)

- `src/lodepng.cpp` and `src/lodepng.hpp` for `.png` encoding and decoding (Lode Vandevenne)

- SimplexNoise class in `src/utilities.hpp` for generating continuous noise in 2D/3D/4D space (Stefan Gustavson)

- `skybox/skybox8k.png` for free surface raytracing (HDRI Hub)

- Lehmann, M.: Computational study of microplastic transport at the water-air interface with a memory-optimized lattice Boltzmann method. PhD thesis, (2023)

- Lehmann, M.: Esoteric Pull and Esoteric Push: Two Simple In-Place Streaming Schemes for the Lattice Boltzmann Method on GPUs. Computation, 10, 92, (2022)

- Lehmann, M., Krause, M., Amati, G., Sega, M., Harting, J. and Gekle, S.: Accuracy and performance of the lattice Boltzmann method with 64-bit, 32-bit, and customized 16-bit number formats. Phys. Rev. E 106, 015308, (2022)

- Lehmann, M.: Combined scientific CFD simulation and interactive raytracing with OpenCL. IWOCL'22: International Workshop on OpenCL, 3, 1-2, (2022)

- Lehmann, M., Oehlschlägel, L.M., Häusl, F., Held, A. and Gekle, S.: Ejection of marine microplastics by raindrops: a computational and experimental study. Micropl.&Nanopl. 1, 18, (2021)

- Lehmann, M.: High Performance Free Surface LBM on GPUs. Master's thesis, (2019)

- Lehmann, M. and Gekle, S.: Analytic Solution to the Piecewise Linear Interface Construction Problem and Its Application in Curvature Calculation for Volume-of-Fluid Simulation Codes. Computation, 10, 21, (2022)

- FluidX3D is solo-developed and maintained by Dr. Moritz Lehmann.

- For any questions, feedback or other inquiries, contact me at dr.moritz.lehmann@gmail.com.

- Updates are posted on Mastodon via @ProjectPhysX/#FluidX3D and on YouTube.