This repository contains the crowdsourcing annotations for topical relevance referenced in the following paper:

- Oana Inel, Giannis Haralabopoulos, Dan Li, Christophe Van Gysel, Zoltán Szlávik, Elena Simperl, Evangelos Kanoulas and Lora Aroyo: Studying Topical Relevance with Evidence-based Crowdsourcing. CIKM 2018.

If you find this data useful in your research, please consider citing:

    @inproceedings{inel2018studying,
      title={Studying Topical Relevance with Evidence-based Crowdsourcing},
      author={Inel, Oana and Haralabopoulos, Giannis and Li, Dan and Van Gysel, Christophe and Szlávik, Zoltán and Simperl, Elena and Kanoulas, Evangelos and Aroyo, Lora},
      booktitle={To Appear in the Proceedings of the 27th ACM International Conference on Information and Knowledge Management (CIKM)},
      year={2018},
      organization={ACM}
    }

To regenerate the results, install version 2.0 of the crowdtruth package from PyPI:

    pip install crowdtruth==2.0
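After installing, it can help to confirm that the pinned version is the one actually present in your environment. The snippet below is a minimal sketch using only the Python standard library (`importlib.metadata`, Python 3.8+). The helper names `installed_version` and `matches_required` are illustrative and are not part of crowdtruth or this repository.

```python
from importlib import metadata
from typing import Optional

# Version pinned by the install command in this README.
REQUIRED_VERSION = "2.0"


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def matches_required(found: Optional[str], required: str = REQUIRED_VERSION) -> bool:
    """Return True only when a version was found and it equals `required` exactly."""
    return found is not None and found == required


if __name__ == "__main__":
    found = installed_version("crowdtruth")
    if matches_required(found):
        print(f"crowdtruth {found} is installed.")
    elif found is None:
        print("crowdtruth is not installed; run: pip install crowdtruth==2.0")
    else:
        print(f"Found crowdtruth {found}, but this repository expects {REQUIRED_VERSION}.")
```

The comparison is an exact string match, so a version reported in a different form would be flagged as a mismatch; adjust the check if your environment reports the version differently.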

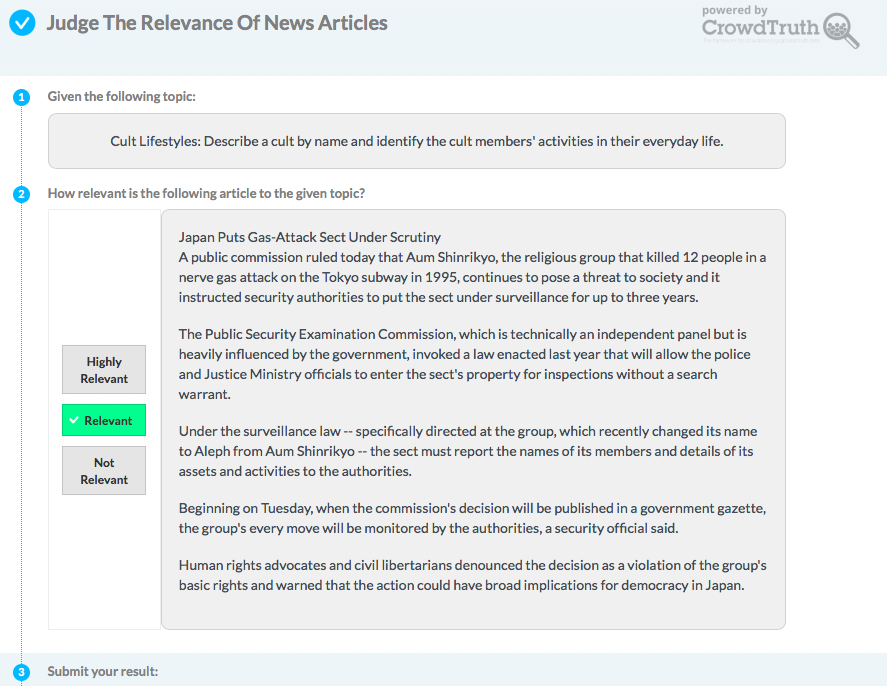

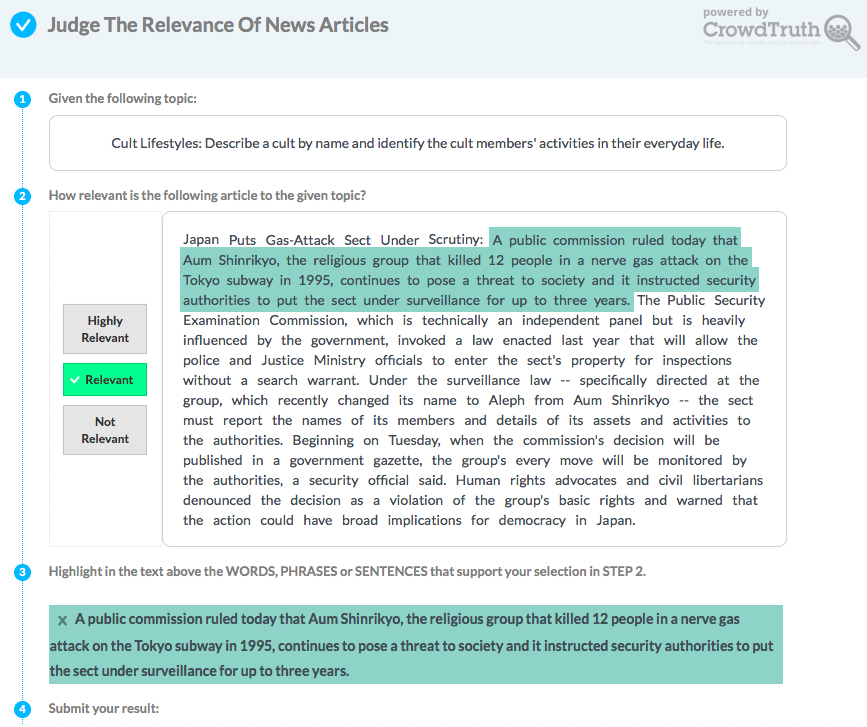

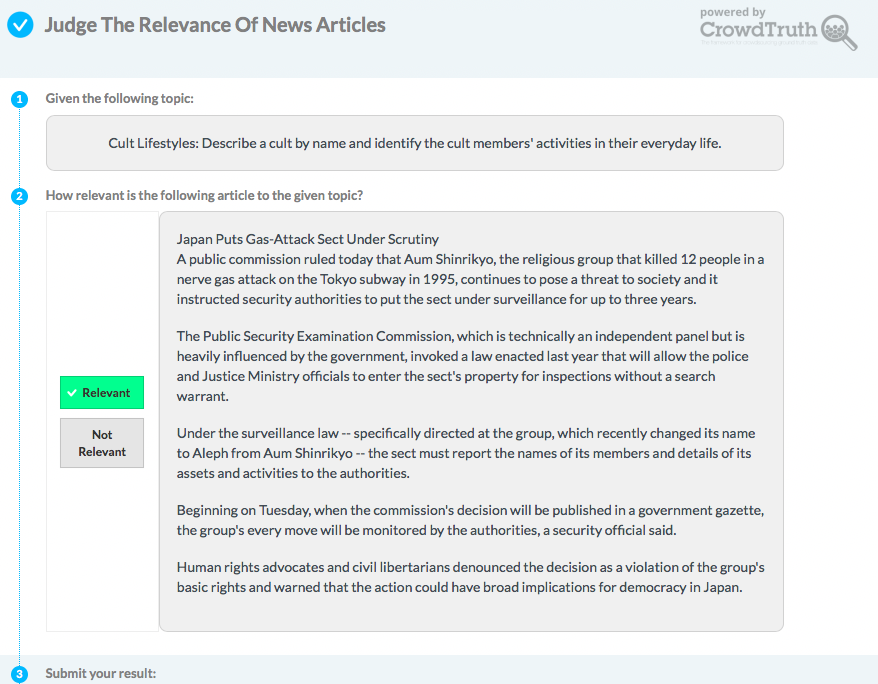

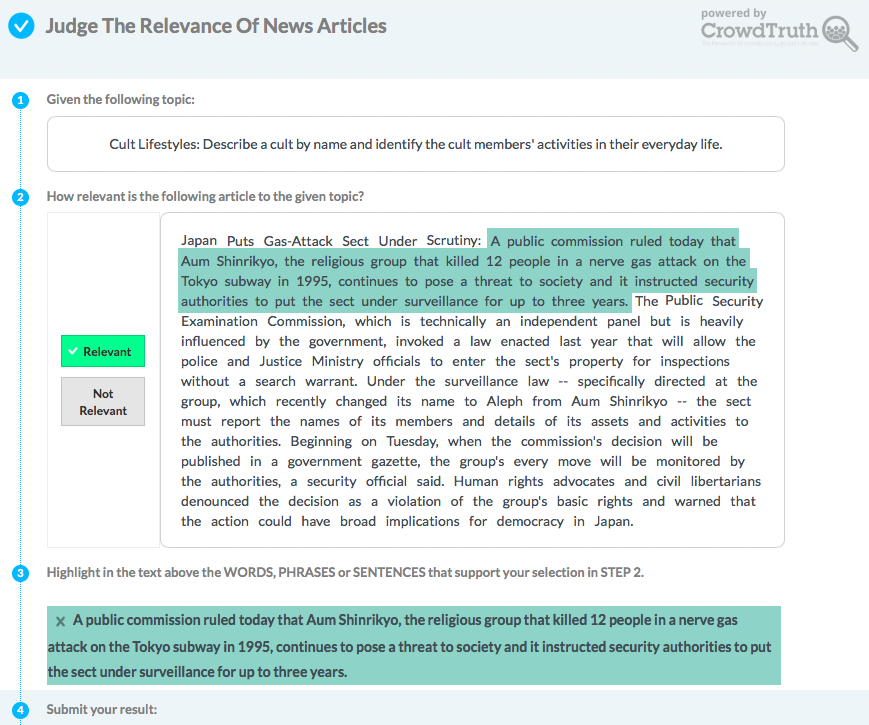

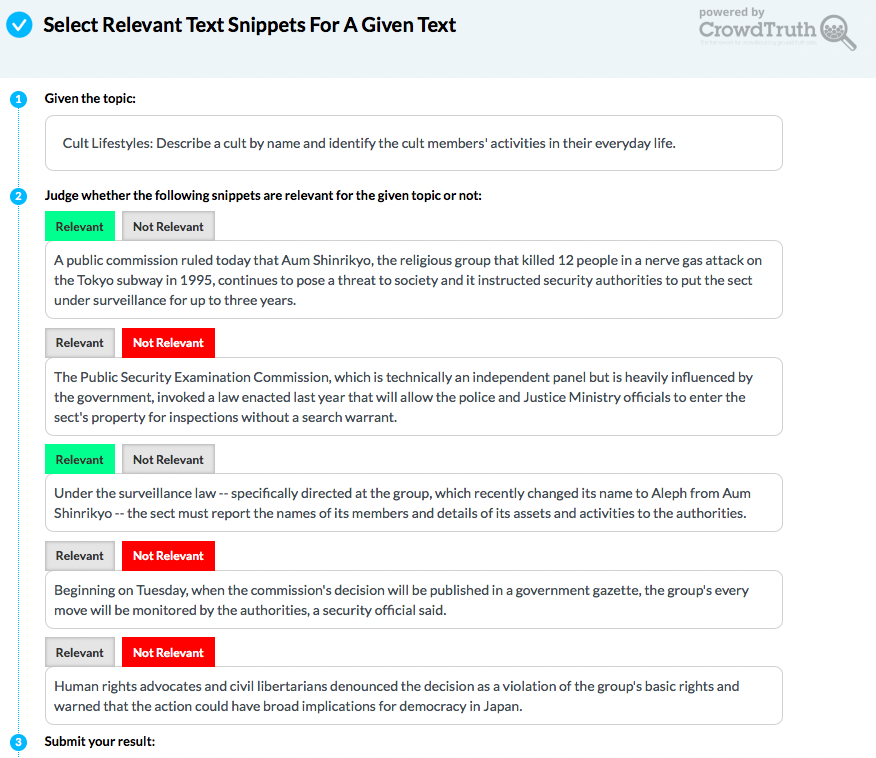

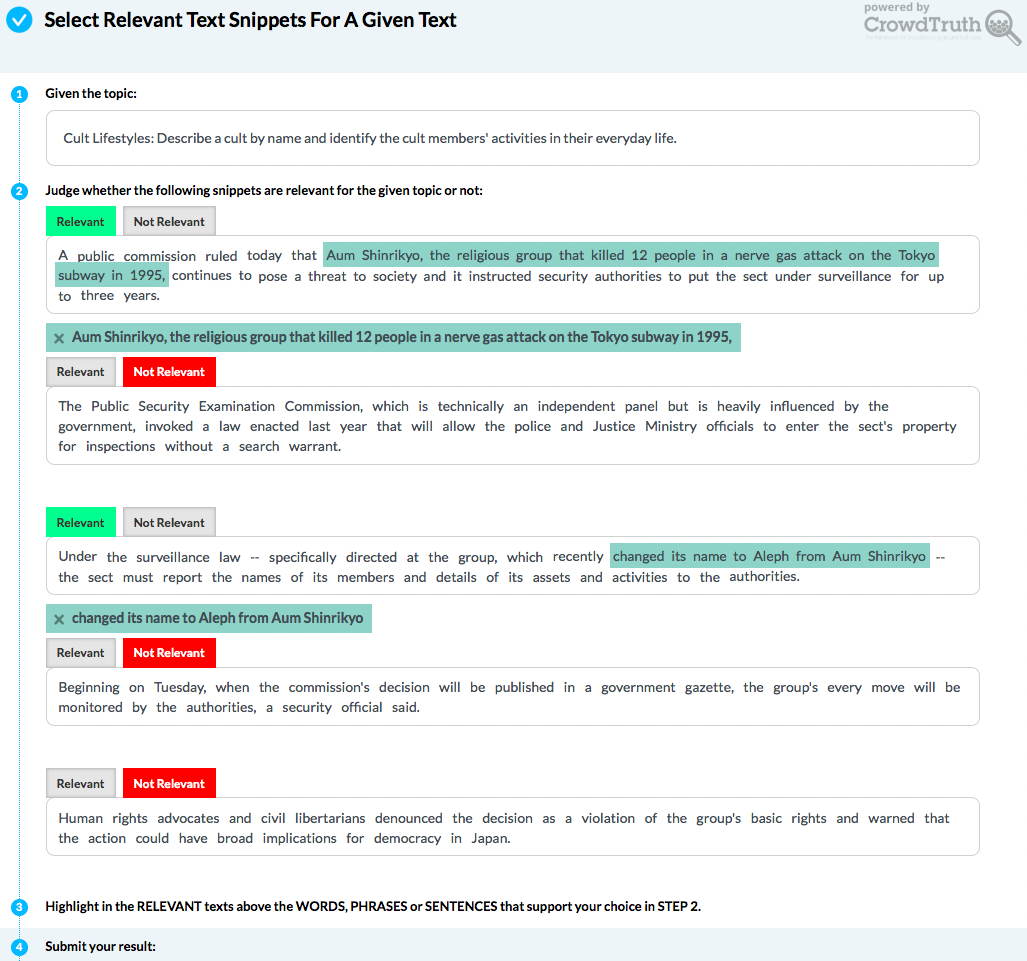

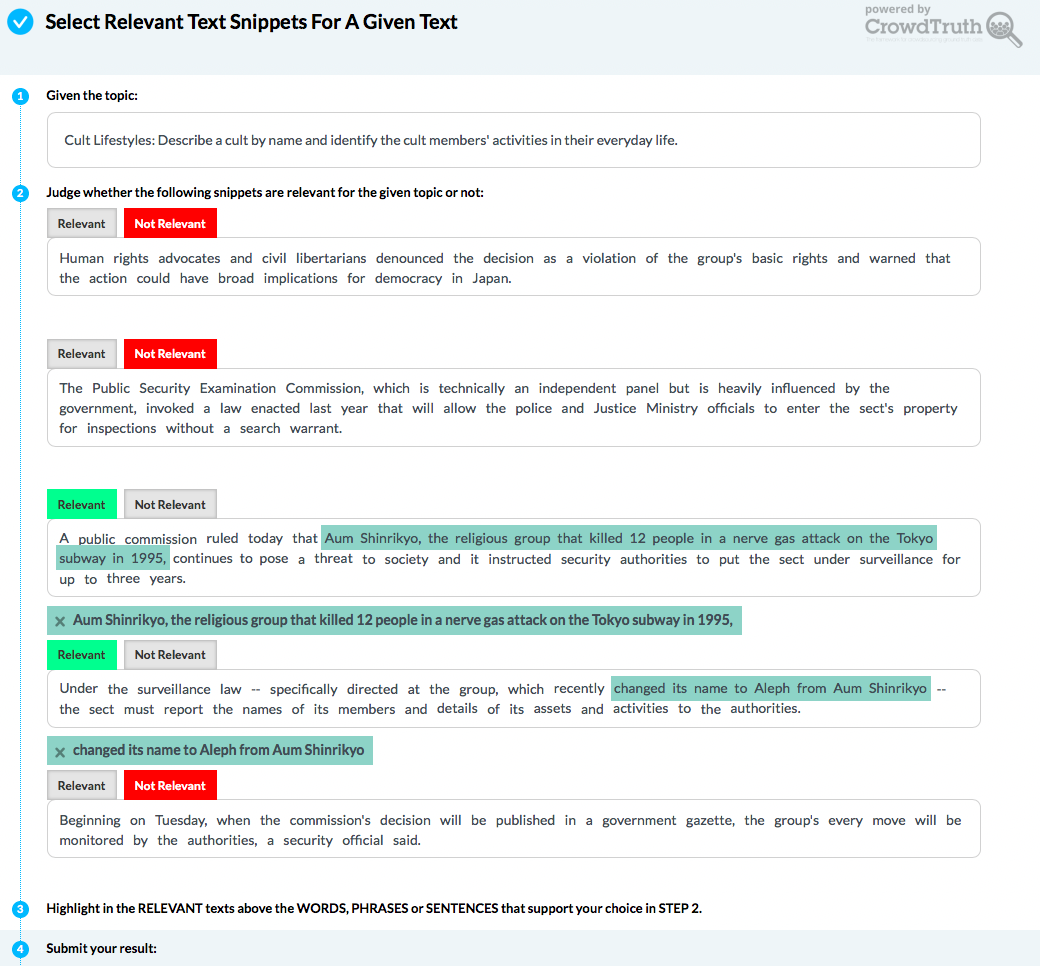

The following crowdsourcing templates were used in the article cited above, and we keep the article's experiment notation. To view a crowdsourcing annotation template, click its small template icon; the image opens in a new tab.