This repository contains the source code for the paper "Detection by Attack: Detecting Adversarial Samples by Undercover Attack", which was accepted at the European Symposium on Research in Computer Security (ESORICS 2020). This paper can be downloaded here. If you use this code, please cite our paper! 😍

    @inproceedings{zhou2020detection,
      title={Detection by attack: Detecting adversarial samples by undercover attack},
      author={Zhou, Qifei and Zhang, Rong and Wu, Bo and Li, Weiping and Mo, Tong},
      booktitle={European Symposium on Research in Computer Security},
      pages={146--164},
      year={2020},
      organization={Springer}
    }

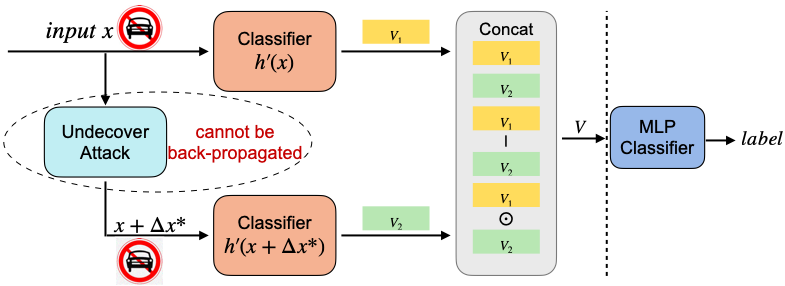

The pipeline of our framework consists of two steps:

- Injecting adversarial samples into the training of the classification model.

- Training a simple multi-layer perceptron (MLP) classifier to judge whether a sample is adversarial.

We take MNIST and CIFAR as examples: mnist_undercover_train.py and cifar_undercover_train.py implement step one; mnist_DBA.ipynb and cifar_DBA.ipynb implement step two.
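To make step one concrete, here is a minimal, self-contained sketch of injecting adversarial samples into training. It is not the code from the scripts above. It assumes a toy logistic-regression model instead of the MNIST/CIFAR networks, and it assumes FGSM as the attack; the scripts in this repository may use a different attack and training schedule. All names (`fgsm`, `train_with_injection`, `eps`, the toy `data`) are hypothetical and chosen for illustration only.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Assumed attack: shift x by eps along the sign of the loss gradient."""
    p = predict(w, b, x)
    # For cross-entropy loss, d(loss)/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def train_with_injection(data, eps=0.2, lr=0.5, epochs=200, seed=0):
    """SGD in which every epoch also trains on adversarial copies of the data."""
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        # Inject: pair each clean sample with an adversarial copy, same label.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        rng.shuffle(batch)
        for x, y in batch:
            err = predict(w, b, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# Hypothetical two-feature toy data set: (features, label).
data = [([0.0, 0.1], 0), ([0.2, 0.0], 0), ([0.9, 1.0], 1), ([1.0, 0.8], 1)]
w, b = train_with_injection(data)

x_clean, y_clean = data[0]
x_adv = fgsm(w, b, x_clean, y_clean, eps=0.2)
print("clean score:", predict(w, b, x_clean))
print("adversarial score:", predict(w, b, x_adv))
```

Step two would then train a separate small MLP on outputs of this model to label samples as clean or adversarial; which features that detector consumes is described in the paper and the notebooks, so it is not guessed at here.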

Please let us know if you encounter any problems.

The contact email is qifeizhou@pku.edu.cn