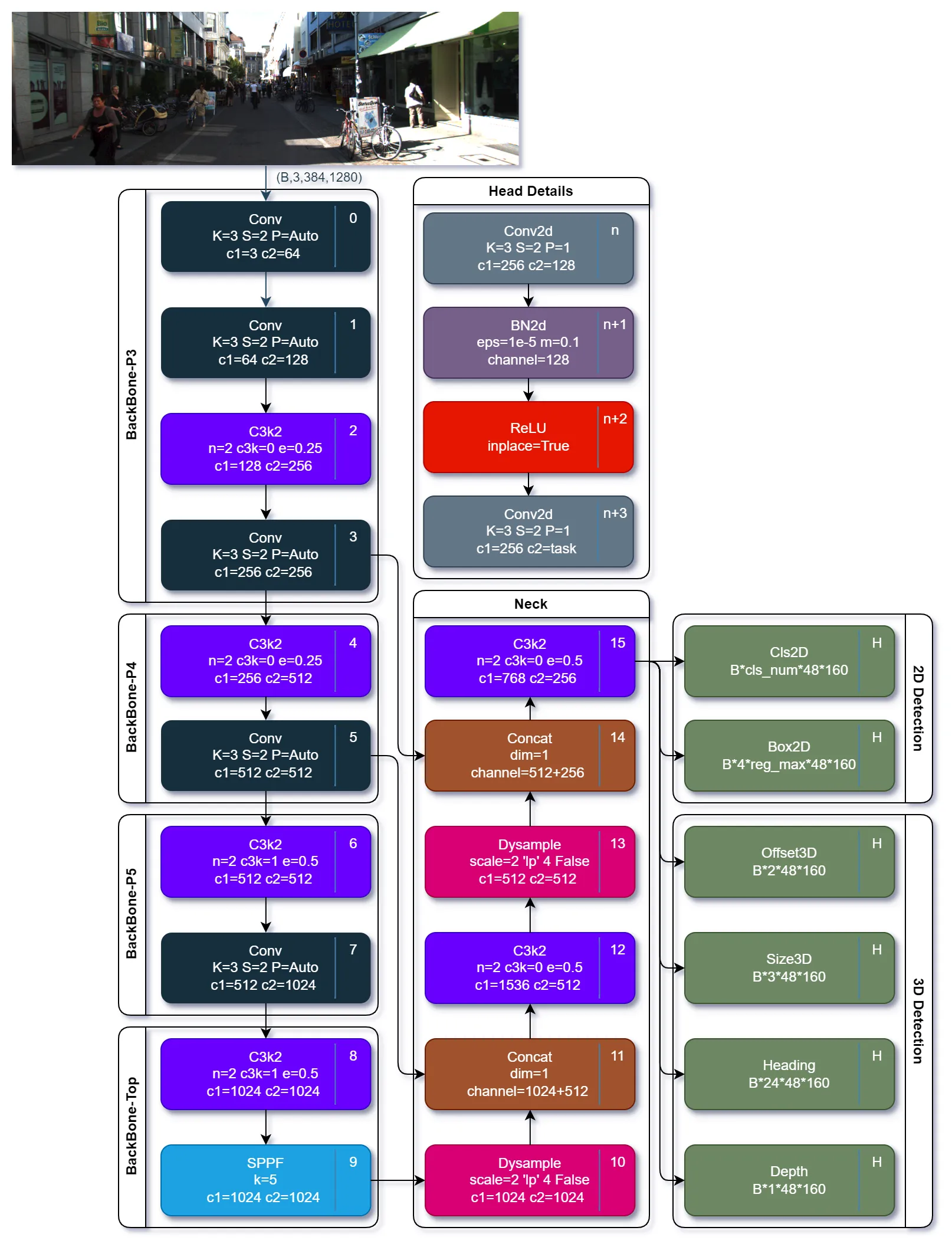

Explore lightweight practices for monocular 3D detection



Note that this is an engineering project: the code is updated as development progresses, and the project is still at an early stage. If you would like to help, please check out our projects!


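We have separated out the most important components of neural network training, while the other operations on a model, such as training, testing, evaluation and export, are implemented as task files that are shared by different experiments.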
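To make this split concrete, here is a minimal, hypothetical sketch: the per-experiment pieces (model, dataset, loss) sit behind a small interface, and a task such as evaluation is written once against that interface so every experiment can reuse it. The `Experiment` class, `run_eval_task` and the toy components are illustrative assumptions, not MonoLite's actual code or file layout.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class Experiment:
    """Hypothetical bundle of the components that vary from one experiment to another."""

    name: str
    build_model: Callable[[], Callable[[Any], Any]]
    build_dataset: Callable[[str], List[Tuple[Any, Any]]]
    build_loss: Callable[[], Callable[[Any, Any], float]]


def run_eval_task(exp: Experiment, split: str = "val") -> Dict[str, float]:
    """A shared task: it only touches the Experiment interface, so any experiment can reuse it."""
    model = exp.build_model()
    loss_fn = exp.build_loss()
    samples = exp.build_dataset(split)
    losses = [loss_fn(model(inputs), target) for inputs, target in samples]
    return {"num_samples": len(losses), "mean_loss": sum(losses) / len(losses)}


# Toy experiment: the "model" doubles its input and the loss is the absolute error.
toy = Experiment(
    name="toy",
    build_model=lambda: (lambda x: 2 * x),
    build_dataset=lambda split: [(1, 2), (2, 4), (3, 7)],
    build_loss=lambda: (lambda pred, target: abs(pred - target)),
)
print(run_eval_task(toy))
```

Swapping in a different experiment only means constructing another `Experiment`; `run_eval_task` itself does not change, which is the point of keeping tasks separate from experiments.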

| Model | Dataset | Info |
|---|---|---|
| MonoLite | KITTI | |

| Model | Input size (MB) | Params size (MB) | Total params | Total mult-adds |
|---|---|---|---|---|
| MonoLite | 94.37 | 109.04 | 27,260,609 | 903.20 |
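The "Params size" value is consistent with storing every parameter as a 32-bit float (4 bytes) and counting 1 MB as 10^6 bytes. That the weights are float32 is an assumption here, not something the table states; the sketch below just checks the arithmetic.

```python
def params_size_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Size of the parameter tensors in MB, with 1 MB = 10**6 bytes.

    bytes_per_param=4 assumes float32 weights; use 2 for float16.
    """
    return num_params * bytes_per_param / 1e6


total_params = 27_260_609  # "Total params" from the table above
print(f"{params_size_mb(total_params):.2f} MB")  # 109.04 MB, matching "Params size (MB)"
print(f"{params_size_mb(total_params, bytes_per_param=2):.2f} MB")  # 54.52 MB if stored as float16
```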

\* The model uses BatchNorm (BN) layers, so a training batch size of at least 2 is recommended.

| Task | GPU memory (GB) | RAM (GB) | Batch size | Speed (it/s) |
|---|---|---|---|---|
| train | 1.2 | 2.2 | 1 | |
| train | 1.8 | 2.2 | 2 | |
| eval | 2.2 | 2.0 | 1 | 43 |
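Why batch size matters for BN: in training mode, BatchNorm normalizes each channel with the mean and variance of the current batch. The pure-Python sketch below (no learnable scale/shift, inputs shaped (N, C) as after a fully connected layer) shows the degenerate case: with a single sample, every value equals its own batch mean, so the output collapses to zero and PyTorch refuses this case in training mode. For convolutional feature maps BN2d also averages over spatial positions, so batch size 1 does not collapse outright, but the statistics then come from a single image and are much noisier.

```python
import math
from typing import List


def batch_norm_train(batch: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """Training-mode batch normalization for inputs shaped (N, C), without affine parameters.

    Each channel is normalized with the mean and biased variance computed
    over the N samples of the current batch.
    """
    n = len(batch)
    channels = len(batch[0])
    out = [[0.0] * channels for _ in range(n)]
    for c in range(channels):
        values = [sample[c] for sample in batch]
        mean = sum(values) / n
        var = sum((v - mean) ** 2 for v in values) / n  # biased variance, used for normalization
        for i, v in enumerate(values):
            out[i][c] = (v - mean) / math.sqrt(var + eps)
    return out


# N=1: each value equals the batch mean, so the output is all zeros whatever the input.
print(batch_norm_train([[3.0, -1.5, 7.0]]))  # [[0.0, 0.0, 0.0]]
# N=2: the output now depends on the samples (approximately [[-1, -1], [1, 1]]).
print(batch_norm_train([[3.0, -1.5], [5.0, 0.5]]))
```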

Following the discussion in this pytorch_issue, we have migrated the virtual environment to Miniforge:

```shell
conda create -n monolite python=3.12
conda activate monolite
```

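| System | Component | Download URL | Notes |
|---|---|---|---|
| Windows | Visual Studio 2022 | download | Beware of conflicts between different versions |
| Windows | CMake | download | 3.30.5 |
| Windows | MSBuild | Installed via VS2022 | |
| Windows | MSVC | Installed via VS2022 | Add it to the PATH environment variable manually |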
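Because MSVC has to be added to PATH by hand, it can help to confirm that the build tools resolve before installing the Python dependencies. This is a minimal standard-library sketch; the executable names (`cl` for the MSVC compiler, `msbuild`, `cmake`) are the usual ones, and which of them a given build step actually needs is an assumption. Note that `cl` and `msbuild` are normally only on PATH inside a Developer Command Prompt unless added manually.

```python
import shutil
from typing import Dict, Iterable, Optional

# Executables the table above expects to be reachable from a terminal (assumed names).
REQUIRED_TOOLS = ("cl", "msbuild", "cmake")


def locate_tools(tools: Iterable[str] = REQUIRED_TOOLS) -> Dict[str, Optional[str]]:
    """Map each tool name to its resolved path on PATH, or None if it was not found."""
    return {tool: shutil.which(tool) for tool in tools}


if __name__ == "__main__":
    for tool, path in locate_tools().items():
        status = path if path else "NOT FOUND (check PATH)"
        print(f"{tool:8s} {status}")
```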

```shell
pip install torch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 --index-url https://download.pytorch.org/whl/cu124
pip install -r requirements.txt
```
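After installation, a quick sanity check can confirm that the interpreter and the pinned PyTorch packages match the commands above. This sketch reads package metadata via `importlib.metadata` instead of importing torch; the `PINNED` table simply mirrors the pip command, and stripping a local suffix such as `+cu124` before comparing is an assumption about how the CUDA wheels report their version.

```python
import sys
from importlib import metadata
from typing import Dict, Optional

# Versions pinned in the pip command above.
PINNED = {"torch": "2.5.1", "torchvision": "0.20.1", "torchaudio": "2.5.1"}
EXPECTED_PYTHON = (3, 12)  # from `conda create -n monolite python=3.12`


def installed_version(package: str) -> Optional[str]:
    """Return the installed distribution version, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_environment(pinned: Dict[str, str] = PINNED) -> Dict[str, str]:
    """Return a {name: problem} dict; an empty dict means everything matches."""
    problems: Dict[str, str] = {}
    if sys.version_info[:2] != EXPECTED_PYTHON:
        problems["python"] = f"expected {EXPECTED_PYTHON[0]}.{EXPECTED_PYTHON[1]}, got {sys.version.split()[0]}"
    for package, wanted in pinned.items():
        found = installed_version(package)
        if found is None:
            problems[package] = "not installed"
        elif found.split("+")[0] != wanted:  # ignore a local suffix like "+cu124"
            problems[package] = f"expected {wanted}, got {found}"
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("environment OK" if not issues else issues)
```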

```shell
set DOCKER_BUILDKIT=0
docker build -t monolite .
docker run -d --privileged=true --net host --name {any_name} --shm-size 4G --ulimit memlock=-1 --gpus=all -it -v C:\:/windows/ monolite:latest /bin/bash
```

For a Docker image mirror, see https://github.com/DaoCloud/public-image-mirror.

TODO

| Model | URL | Training log |
|---|---|---|
| MonoLite_Baseline | [Baidu Netdisk][Google Drive] | https://swanlab.cn/@Sail2Dream/monolite/overview |

```shell
python tools\detect.py --cfg C:\workspace\github\monolite\experiment\monolite_YOLO11_centernet
```

```shell
python tools\train.py --cfg C:\workspace\github\monolite\experiment\monolite_YOLO11_centernet
```

TODO

TODO

```shell
python tools\export_onnx.py --cfg C:\workspace\github\monolite\experiment\monolite_YOLO11_centernet
```

```shell
python tools\export_ts.py --cfg C:\workspace\github\monolite\experiment\monolite_YOLO11_centernet
```

```shell
python tools\export_ep.py --cfg C:\workspace\github\monolite\experiment\monolite_YOLO11_centernet
```

```shell
python tools\export_pt.py --cfg C:\workspace\github\monolite\experiment\monolite_YOLO11_centernet
```
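To produce all four export formats in one go, a small driver can call the export scripts in sequence. This is a hypothetical helper (a file such as `export_all.py` and the functions in it are not part of the repository); it only assumes that each script accepts `--cfg` exactly as in the commands above and that it is run from the repository root.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

# The export scripts listed above; each takes the experiment directory via --cfg.
EXPORT_SCRIPTS = ("export_onnx.py", "export_ts.py", "export_ep.py", "export_pt.py")


def export_commands(cfg: str, tools_dir: str = "tools") -> List[List[str]]:
    """Build one command per export script, using the current Python interpreter."""
    return [[sys.executable, str(Path(tools_dir) / script), "--cfg", cfg] for script in EXPORT_SCRIPTS]


def run_all_exports(cfg: str) -> None:
    """Run the exports one after another; check=True stops at the first failing script."""
    for cmd in export_commands(cfg):
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python export_all.py <experiment directory>
    run_all_exports(sys.argv[1])
```

Using `sys.executable` keeps the exports inside the active `monolite` environment, and `pathlib` builds `tools\...` or `tools/...` as appropriate for the platform.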

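We sincerely thank all the individuals and organizations who have contributed to this open-source neural network project. Special thanks to the following contributors: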

| Type | Name | URL | Title |
|---|---|---|---|
| CVPR 2021 | MonoDLE | monodle github | Delving into Localization Errors for Monocular 3D Object Detection |
| 3DV 2024 | MonoLSS | monolss github | Learnable Sample Selection For Monocular 3D Detection |
| | TTFNet | | |
| community | kitti_object_vis | kitti_vis github | KITTI Object Visualization (Birdview, Volumetric LiDar point cloud) |
| community | mmdet3d | mmdet3d github | OpenMMLab's next-generation platform for general 3D object detection. |
| community | ultralytics | ultralytics github | YOLOv8/v11+v9/v10 |
| community | netron | netron web | Visualizer for neural network, deep learning and machine learning models |
| community | mkdocs-material | mkdocs github | Documentation that simply works |

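It is this spirit of collaboration and sharing that allows open-source projects to thrive and contribute to technological progress. We look forward to more collaboration and innovation in the future, working together to advance the field of artificial intelligence.

Thanks again to every supporter; your contributions are invaluable.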