1. TensorMSA

- TensorMSA (Tensor Micro Service Architecture) is a project started to make TensorFlow more accessible from legacy Java systems without extensive changes to their source code.

- We know there are AI platforms such as Azure ML, NVIDIA DIGITS, AWS ML, etc., but our goal is a little different from theirs.

- More focus on enterprise support (continuous training, neural network management, history management, user management, etc.)

- More focus on neural networks (UI/UX-based network configuration, workflow-based management, etc.)

2. Function

- REST APIs corresponding to TensorFlow

- Java API component that interfaces with the Python REST APIs

- Easy-to-use UI components for NN configuration, remote training, saving & loading NN models, and handling training data sets

- Training NN models on a Spark cluster is supported

- An Android mobile SDK is also planned (data gathering and prediction)

3. Schedule

- start project : 2016.8

- start dev : 2016.9

- pilot version : 2016.12

- version 0.1 target date : 2017.4

4. Stack

- FE : React(ES6), SVG, D3, Pure CSS

- BE : Django F/W, TensorFlow, PostgreSQL, Spark
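The Python side of the backend stack can be sanity-checked before going further. The script below is a minimal sketch, not part of TensorMSA: it tries to import each package the install steps set up (`tensorflow`, `django`, `rest_framework` for Django REST framework, `psycopg2`, `pygments`). The module list and the `check_modules` helper are assumptions. It avoids f-strings so it also runs under the Python 2.7 conda environment used in the install steps.

```python
# Minimal sketch (hypothetical helper, not part of TensorMSA): report which
# backend Python packages can be imported in the current environment.
# Works under Python 2.7 and 3.x.
import importlib

# Import names assumed from the install steps; "rest_framework" is the
# import name of Django REST framework.
BACKEND_MODULES = ["tensorflow", "django", "rest_framework", "psycopg2", "pygments"]


def check_modules(names):
    """Return a dict mapping each module name to True if it imports cleanly."""
    result = {}
    for name in names:
        try:
            importlib.import_module(name)
            result[name] = True
        except ImportError:
            result[name] = False
    return result


if __name__ == "__main__":
    for name, found in sorted(check_modules(BACKEND_MODULES).items()):
        print("%-15s %s" % (name, "ok" if found else "MISSING"))
```

Run it inside the activated `tensorflow` conda environment; a `MISSING` entry points at the install step to repeat.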

5. Methodology

- Agile (CI, TDD, Pair programming and Cloud)

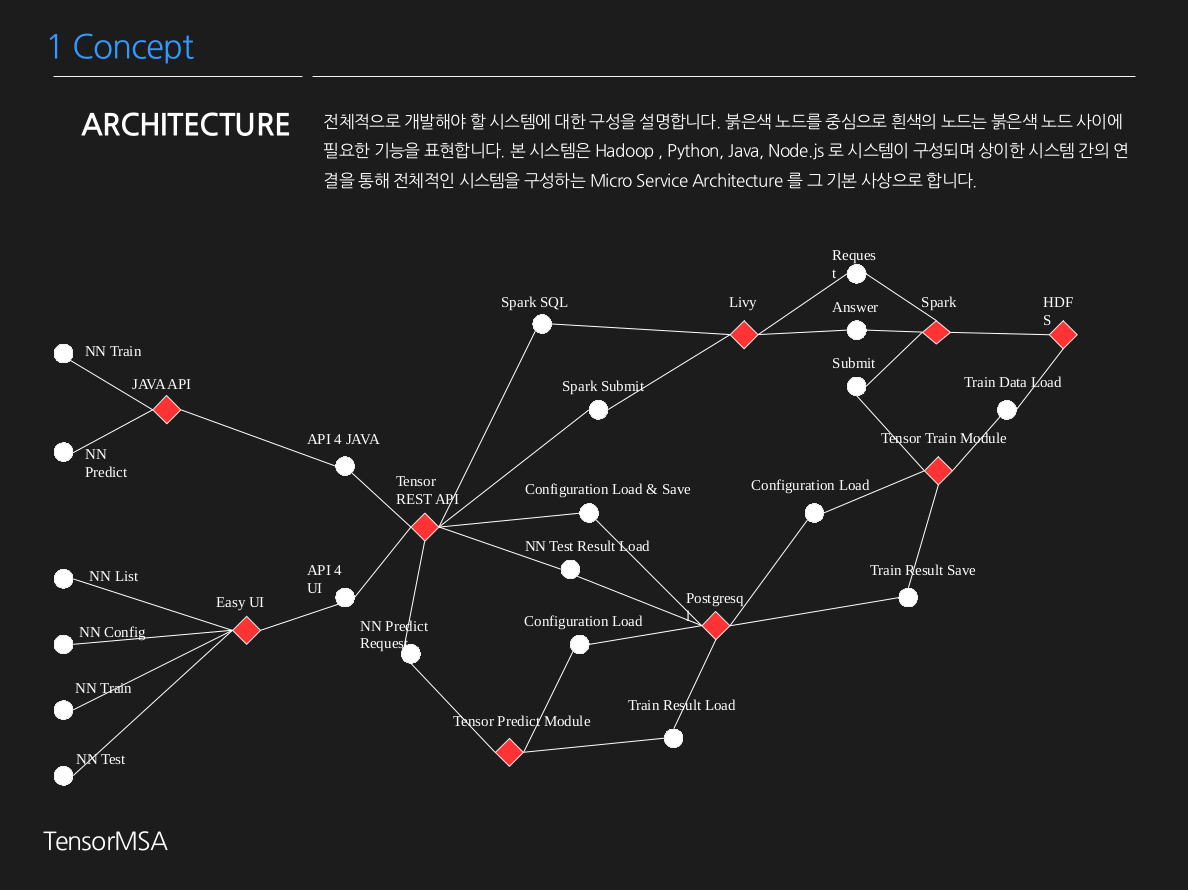

As described below, the purpose of this project is to provide a deep learning management system via REST services so that non-Python legacy systems can use deep learning easily.
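Because the interface is plain HTTP and JSON, a client needs neither Python nor TensorFlow; a legacy Java system would make the same call with its own HTTP client. The sketch below (Python 3, standard library only) only shows the shape of such a call. The endpoint path `/example/predict/` and the payload fields are hypothetical placeholders, not the actual TensorMSA REST API; port 8989 is the one the TensorMSA server uses in the Docker and install instructions.

```python
# Sketch only: build and (optionally) send a JSON request to a TensorMSA server.
# The path and payload used below are HYPOTHETICAL placeholders, not the real API.
import json
import urllib.request

BASE_URL = "http://localhost:8989"  # port of the TensorMSA server (runserver/Docker)


def build_request(path, payload):
    """Create a POST request carrying `payload` as a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=10):
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical endpoint and payload, for illustration only.
    req = build_request("/example/predict/", {"input": [1.0, 2.0, 3.0]})
    print(req.get_method(), req.full_url)
```

Separating `build_request` from `send` keeps the request shape inspectable without a running server.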

Docker (Cluster Mode) (Docker Hub) (usage)

- Docker Packages

data-master : docker pull tmddno1/tfmsa_name_node:v2

data-slave : docker pull tmddno1/tfmsa_data_node:v2

tfmsa-was : docker pull tmddno1/tensormsa:v4

CI tools : docker pull tmddno1/jenkins:v1

- Start master/slave node container (set up)

docker run --net=host -d tmddno1/tfmsa_name_node:v2

docker run --net=host -d tmddno1/tfmsa_data_node:v2

- Start tfmsa-was container (dev purpose)

docker run -it --env="DISPLAY" --env="QT_X11_NO_MITSHM=1" --volume="/tmp/.X11-unix:/tmp/.X11-unix:rw" -p 8989:8989 -p 8888:8888 -p 5432:5432 tmddno1/tensormsa:v4

- postgres tool (dev purpose)

pgadmin3 &

- Start tfmsa-was container (dev purpose)

docker run -it -p 8989:8989 -d tmddno1/tensormsa:v1

- Start CI Tool

docker run -it -p 8080:8080 -d tmddno1/jenkins:v1

- Check servers

TensorMSA : http://localhost:8989

Jenkins : http://localhost:8080

Hadoop : http://localhost:50070

Yarn : http://localhost:8088

Hbase : http://localhost:9095

- Server information

path : /home/dev/TensorMSA/TensorMSA/settings.py

# custom settings needed for TensorMSA

SPARK_HOST = '8b817bad1154:7077'

SPARK_CORE = '1'

SPARK_MEMORY = '1G'

SPARK_WORKER_CORE = '2'

SPARK_WORKER_MEMORY = '4G'

HBASE_HOST = '52.78.179.14'

HBASE_HOST_PORT = 9090

FILE_ROOT = '/tensormsa'

HDFS_HOST = '587ed1df9441:9000'

HDFS_ROOT = '/tensormsa'

HDFS_DF_ROOT = '/tensormsa/dataframe'

HDFS_IMG_ROOT = '/tensormsa/image'

HDFS_CONF_ROOT = '/tensormsa/config'

HDFS_MODEL_ROOT = '/tensormsa/model'

TRAIN_SERVER1 = '52.78.20.251:8989'

(Base Size Trouble Shooting) : refer to this if you run into "not enough space" errors with Docker
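The Spark, HDFS and training-server entries under Server information are plain `host:port` strings. A quick way to check them is to turn them into the URLs clients expect (`spark://host:port` for a Spark standalone master, `hdfs://host:port/path` for HDFS) and probe the ports. The helpers below are a hypothetical Python 3 sketch, not TensorMSA code; the values are copied from the example settings above and will differ per installation.

```python
# Sketch only (hypothetical helpers, Python 3): derive URLs from the
# host:port settings and test whether a port accepts TCP connections.
import socket

# Example values copied from settings.py above; adjust to your cluster.
SPARK_HOST = "8b817bad1154:7077"
HDFS_HOST = "587ed1df9441:9000"
HDFS_MODEL_ROOT = "/tensormsa/model"
TRAIN_SERVER1 = "52.78.20.251:8989"


def parse_host_port(value):
    """Split 'host:port' into (host, port) with an integer port."""
    host, _, port = value.rpartition(":")
    if not host or not port.isdigit():
        raise ValueError("expected 'host:port', got %r" % value)
    return host, int(port)


def spark_master_url(host_port):
    """Spark standalone master URL, e.g. spark://host:7077."""
    return "spark://" + host_port


def hdfs_url(host_port, path):
    """Fully qualified HDFS URL for a path under the NameNode at host_port."""
    return "hdfs://" + host_port + "/" + path.lstrip("/")


def is_reachable(host_port, timeout=1.0):
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    host, port = parse_host_port(host_port)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host name not resolvable
        return False


if __name__ == "__main__":
    print(spark_master_url(SPARK_HOST))
    print(hdfs_url(HDFS_HOST, HDFS_MODEL_ROOT))
```

Calling `is_reachable(TRAIN_SERVER1)` from the machine running TensorMSA helps tell a wrong address apart from a stopped service. Host names such as `8b817bad1154` look like Docker container IDs and must be resolvable (for example via `/etc/hosts`) from wherever the check runs.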

Install (link)

1. Install Anaconda

- download Anaconda : https://www.continuum.io/downloads

- install (make sure Anaconda is the default Python interpreter)

bash /home/user/Downloads/Anaconda2-4.1.1-Linux-x86_64.sh

vi ~/.bashrc

export PATH="$HOME/anaconda2/bin:$PATH"

2. Install TensorFlow

- install TensorFlow using conda

conda create -n tensorflow python=2.7

source activate tensorflow

conda install -c conda-forge tensorflow

3. Install Django

- install Django, Django REST framework, the PostgreSQL plugin (psycopg2) and Pygments

[Django]

conda install -c anaconda django=1.9.5

[Django REST framework]

conda install -c ioos djangorestframework=3.3.3

[PostgreSQL plugin]

conda install -c anaconda psycopg2=2.6.1

[Pygments]

conda install -c anaconda pygments=2.1.3

4. Install PostgreSQL

- install

yum install postgresql-server

- check the postgres account and set its password

cat /etc/passwd | grep postgres

sudo passwd postgres

- check PGDATA

cat /var/lib/pgsql/.bash_profile

env | grep PGDATA

- init and run

sudo -i -u postgres

initdb

pg_ctl start

ps -ef | grep postgres

- connect and create database

# psql

postgres=# create database tensormsa ;

postgres=# select * from pg_database ;

- create user for TensorMSA

postgres=#CREATE USER tfmsauser WITH PASSWORD '1234';

postgres=#ALTER ROLE tfmsauser SET client_encoding TO 'utf8';

postgres=#ALTER ROLE tfmsauser SET default_transaction_isolation TO 'read committed';

postgres=#ALTER ROLE tfmsauser SET timezone TO 'UTC';

postgres=#GRANT ALL PRIVILEGES ON DATABASE tensormsa TO tfmsauser;

5. Get TensorMSA from git

git clone https://github.com/TensorMSA/TensorMSA.git

6. Migrate database

- go to the project folder that contains 'manage.py'

python manage.py makemigrations

python manage.py migrate

7. Run server

- check the host's IP address, then run the server with the command below (binding to localhost only accepts local connections; use the host IP or 0.0.0.0 to accept remote ones)

ip addr | grep "inet "

python manage.py runserver localhost:8989

- we are still in the research process

- will be prepared in 2017
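Before `python manage.py migrate` can succeed, Django has to be pointed at the database and user created in step 4. The excerpt below is a sketch of the standard Django `DATABASES` setting for `TensorMSA/settings.py`. It assumes PostgreSQL runs on the same host on the default port 5432 and reuses the example credentials from step 4, which are only suitable for a local development setup; the repository's actual settings may differ.

```python
# Sketch of the DATABASES entry in TensorMSA/settings.py (assumed values).
# Database name, user and password match the psql commands in step 4.
DATABASES = {
    "default": {
        # Since Django 1.9 the PostgreSQL backend is also available as
        # "django.db.backends.postgresql"; the older alias
        # "django.db.backends.postgresql_psycopg2" still works in 1.9.
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "tensormsa",
        "USER": "tfmsauser",
        "PASSWORD": "1234",   # example password from step 4; change it outside dev
        "HOST": "localhost",  # assumption: PostgreSQL on the same host
        "PORT": "5432",       # PostgreSQL default port
    }
}
```

The PostgreSQL backend relies on psycopg2, installed in step 3.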

Contributions (Design Link)

1. Database

- Training history data

- Workflow data

- Data preprocessing with Spark & UI

- GPU Cluster server info

- SSO & authority (managers, servers, mobile users)

- Neural Network UI/UX config data

- audit columns

- scheduled job info

- plugin info

- convert varchar fields to JSON (where JSON is used)

- store file-type data in PostgreSQL

- store raw text data

- store dictionaries (for RNN)

- store video and audio

- store code-based custom neural net info

2. View

- Intro Page : notice pop-ups, etc.

- Top Menu : server management, user management, workflow, batch jobs, neural nets, plugins, etc.

- NeuralNet Menu : the steps we have now, which will be linked to workflow nodes

- WorkFlow Menu : define data extraction (ETL), preprocessing, neural nets, etc.

- Batch jobs : time- or event-based workflow triggers

- Server Management : manage server IPs & ports for Hadoop, HBase, Spark, the database, etc.

3. Neural Net

- basic : linear regression, logistic regression, clustering

- more nets : RNN, residual, LRCN, autoencoder