
πFlow Deployment Guide V1.9

1. Spark 3, Hadoop, YARN, Hive (optional), and Docker (optional) are already deployed

2. JDK 1.8

3. Scala 2.12.18

4. The following ports must be available:

- 8002: πFlow Server

- 50002: h2db port of πFlow Server

- 6002: πFlow Web service

- 6001: πFlow web page access address

- 6443: the default https listening port of tomcat
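Before installing, it can help to confirm that nothing else is already listening on these ports. The following is a minimal sketch, assuming the `ss` utility (from iproute2) is installed; the variable `PIFLOW_PORTS` and the helper `port_in_use` are hypothetical names introduced for illustration and are not part of πFlow.

```shell
# Ports listed above; adjust if you change them in the configuration files.
PIFLOW_PORTS="8002 50002 6002 6001 6443"

# Return 0 if a TCP listener is bound to the given port.
# Assumption: `ss` is available. If it is missing, every port is reported as free.
port_in_use() {
    ss -ltn 2>/dev/null | awk '{print $4}' | grep -Eq "[:.]$1\$"
}

for port in $PIFLOW_PORTS; do
    if port_in_use "$port"; then
        echo "port $port is already in use"
    else
        echo "port $port looks free"
    fi
done
```

A port reported as in use has to be freed, or the corresponding setting changed, before the matching πFlow component is started.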

https://github.com/cas-bigdatalab/piflow/releases/tag/v1.9

https://github.com/cas-bigdatalab/piflow/releases/download/v1.9/piflow-server-v1.9.tar.gz

Extract piflow-server-v1.9.tar.gz; the contents are as shown below:

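- bin: πFlow command-line tools

- classpath: directory for user-developed custom Stop components

- config.properties: the configuration file

- lib: jar packages required by πFlow Server; piflow-server-0.9.jar is the πFlow Server jar itself

- logs: πFlow log directory

- start.sh, restart.sh, stop.sh, status.sh: scripts for starting, restarting, stopping, and checking the status of πFlow Server

- scala: directory for programmable scripts

- sparkJar: directory for Spark dependency jars

- flowFile: directory where pipeline and pipeline-group JSON files are stored

- example: sample configurations for pipelines and pipeline groups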
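The download and extraction steps can be sketched as a small shell helper. This is only an illustration: the function name `install_piflow_server` and the default target directory `/data/piflowServer` are assumptions (adjust the directory to wherever you want the server to live), and `curl` and `tar` are assumed to be installed. Whether the archive unpacks into a top-level directory of its own is not stated here, so check the result after extraction.

```shell
PIFLOW_VERSION=v1.9
PIFLOW_SERVER_TGZ="piflow-server-${PIFLOW_VERSION}.tar.gz"
PIFLOW_SERVER_URL="https://github.com/cas-bigdatalab/piflow/releases/download/${PIFLOW_VERSION}/${PIFLOW_SERVER_TGZ}"

# Download the archive (unless it is already present) and unpack it into $1.
# Hypothetical helper; the default target directory is an assumption.
install_piflow_server() {
    target=${1:-/data/piflowServer}
    mkdir -p "$target" || return 1
    if [ ! -f "$PIFLOW_SERVER_TGZ" ]; then
        curl -fL -o "$PIFLOW_SERVER_TGZ" "$PIFLOW_SERVER_URL" || return 1
    fi
    tar -xzf "$PIFLOW_SERVER_TGZ" -C "$target"
}

echo "archive URL: $PIFLOW_SERVER_URL"
# Uncomment to actually download and extract:
# install_piflow_server /data/piflowServer
```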

spark.master=yarn

spark.deploy.mode=cluster

#hdfs default file system

fs.defaultFS=hdfs://master:9000

#yarn resourcemanager.hostname

yarn.resourcemanager.hostname=master

#if you want to use hive, set hive metastore uris

#hive.metastore.uris=thrift://master:9083

#show data in log, set 0 if you do not want to show data in logs

data.show=5

#server ip and port, ip can not be set to localhost or 127.0.0.1

server.ip=127.0.0.1

server.port=8002

#h2db port,path

h2.port=50002

#h2.path=test

monitor.throughput=false

#If you want to upload python stop,please set hdfs configs

#example hdfs.cluster=hostname:hostIP

hdfs.cluster=master:127.0.0.1

hdfs.web.url=master:9870

checkpoint.path=/piflow/tmp/checkpoint/

#unstructured.parse

unstructured.parse=false

#host can not be set to localhost or 127.0.0.1

# if port is not set, the default is 8000

#unstructured.port=8000

#embed_models_path=/data/testingStuff/models/
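The comment on `server.ip` says the address must not be localhost or 127.0.0.1, yet the sample value above is 127.0.0.1, so it is worth checking the value before starting the server. The sketch below shows one way to do that. The helpers `get_prop` and `check_server_ip` are hypothetical names, and the demo runs against a temporary copy of three lines from the sample rather than a real installation.

```shell
# Print the value of key $2 from the properties file $1 (last assignment wins).
get_prop() {
    awk -F= -v key="$2" '
        /^[ \t]*#/ { next }
        {
            name = $1
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            if (name == key) {
                value = $0
                sub(/^[^=]*=[ \t]*/, "", value)
                found = value
            }
        }
        END { print found }
    ' "$1"
}

# Fail if server.ip is empty or a loopback name, as the config comment forbids.
check_server_ip() {
    ip=$(get_prop "$1" server.ip)
    case "$ip" in
        ""|localhost|127.0.0.1)
            echo "server.ip is '$ip'; it must not be localhost or 127.0.0.1" >&2
            return 1 ;;
        *)
            echo "server.ip=$ip"
            return 0 ;;
    esac
}

# Demo against a temporary file holding lines from the sample configuration.
demo=$(mktemp)
cat > "$demo" <<'EOF'
#server ip and port, ip can not be set to localhost or 127.0.0.1
server.ip=127.0.0.1
server.port=8002
EOF
if ! check_server_ip "$demo"; then
    echo "edit server.ip in config.properties before running start.sh"
fi
rm -f "$demo"
```

Against a real installation, pass the path of the extracted config.properties to `check_server_ip` instead of the temporary file.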

Configure the cluster environment variables (customize as needed):

export JAVA_HOME=/opt/java

export JRE_HOME=/opt/java/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

export HADOOP_HOME=/opt/hadoop-3.3.0

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HIVE_HOME=/opt/apache-hive-2.3.6-bin

export PATH=$PATH:$HIVE_HOME/bin

export SPARK_HOME=/opt/spark-3.4.0-bin-hadoop3.3

export PATH=$PATH:$SPARK_HOME/bin

export SCALA_HOME=/opt/scala-2.12.18

export PATH=$PATH:$SCALA_HOME/bin

export PIFLOW_HOME=/data/piflowServer

export PATH=$PATH:${PIFLOW_HOME}/bin

export DISPLAY=
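After exporting these variables, a quick check that each `*_HOME` points at an existing directory can catch path typos early. This is a minimal sketch; `check_home` is a hypothetical helper, and the list of variables simply mirrors the exports above (drop `HIVE_HOME` if Hive is not used).

```shell
# Report whether the variable named $1 is set and points at a directory.
check_home() {
    name=$1
    eval "dir=\${$name:-}"
    if [ -z "$dir" ]; then
        echo "$name is not set"
        return 1
    elif [ ! -d "$dir" ]; then
        echo "$name=$dir does not exist"
        return 1
    fi
    echo "$name=$dir ok"
}

for v in JAVA_HOME HADOOP_HOME HIVE_HOME SPARK_HOME SCALA_HOME PIFLOW_HOME; do
    check_home "$v" || true   # keep going so every problem is listed
done
```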

Configure the spark-env.sh file; adjust the settings to match your environment:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib64/python3.6/site-packages/jep/

./start.sh

- MySQL 5.7

- JDK 1.8

Download the installation package:

https://github.com/cas-bigdatalab/piflow-web/releases/download/v1.9/piflow-web-v1.9.tar.gz

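Extract piflow-web-v1.9.tar.gz, as shown below:

Contents after extraction:

(1) piflow-tomcat: the container that runs piflow-web.

(2) config.properties: the configuration file.

(3) start.sh: the start script.

(4) stop.sh: the stop script.

(5) status.sh: the status check script.

(6) restart.sh: the restart script.

(7) logs: the log directory.

(8) storage: the storage directory for web files.

(9) temp_v0.7.sh: a patch script for smooth upgrades (does not need to be run).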

The config.properties file is configured as follows:

server.servlet.session.timeout=3600

syspara.interfaceUrlHead=http://127.0.0.1:8002

syspara.livyServer=http://master:8998

syspara.isIframe=true

# Total maximum value of uploaded files

spring.servlet.multipart.max-request-size=512MB

# Maximum value of a single file

spring.servlet.multipart.max-file-size=512MB

# data source

sysParam.datasource.type=mysql

# MySQL Configuration

#Configure the connection address of MySQL

spring.datasource.url = jdbc:mysql://127.0.0.1:3306/piflow_web_v1.9?createDatabaseIfNotExist=true&useUnicode=true&characterEncoding=UTF-8&useSSL=false&allowMultiQueries=true&autoReconnect=true&failOverReadOnly=false

#Configure database user name

spring.datasource.username=root

#Configure database password

spring.datasource.password=root

#Configure JDBC Driver

# Optional: the driver can be detected automatically from the URL, but setting it explicitly is recommended

spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver

spring.flyway.locations=classpath:db/flyway-mysql/

# Logging configuration

logging.level.cn.cnic.*.mapper.*=warn

logging.level.root=warn

logging.level.org.flywaydb=warn

logging.level.org.springframework.security=warn

logging.level.org.hibernate.SQL=warn

# If you need to upload python stop,please set docker.host

push_to_harbor=false

#docker_central_warehouse=127.0.0.1:5100
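Because `spring.datasource.url` packs the host, port, and database name into one string, it can be useful to split it apart and confirm that MySQL is reachable at that address before starting piflow-web. The sketch below uses only POSIX parameter expansion. It assumes the URL has the `jdbc:mysql://host:port/database?options` shape shown above with an explicit port, and the variable names are illustrative.

```shell
# Value copied from spring.datasource.url above (query string shortened).
jdbc_url='jdbc:mysql://127.0.0.1:3306/piflow_web_v1.9?createDatabaseIfNotExist=true&useUnicode=true'

hostport=${jdbc_url#jdbc:mysql://}   # drop the scheme
hostport=${hostport%%/*}             # keep only host:port
db=${jdbc_url#jdbc:mysql://*/}       # drop everything through the slash after host:port
db=${db%%\?*}                        # drop the query string
host=${hostport%%:*}
port=${hostport##*:}

echo "host=$host port=$port database=$db"

# With the mysql client installed, connectivity could then be checked with:
# mysql -h "$host" -P "$port" -u root -p -e 'SELECT VERSION();'
```

Since the URL sets `createDatabaseIfNotExist=true`, the database itself does not need to exist in advance; only the server and the credentials have to be valid.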

Run:

cd piflow-web

./start.sh

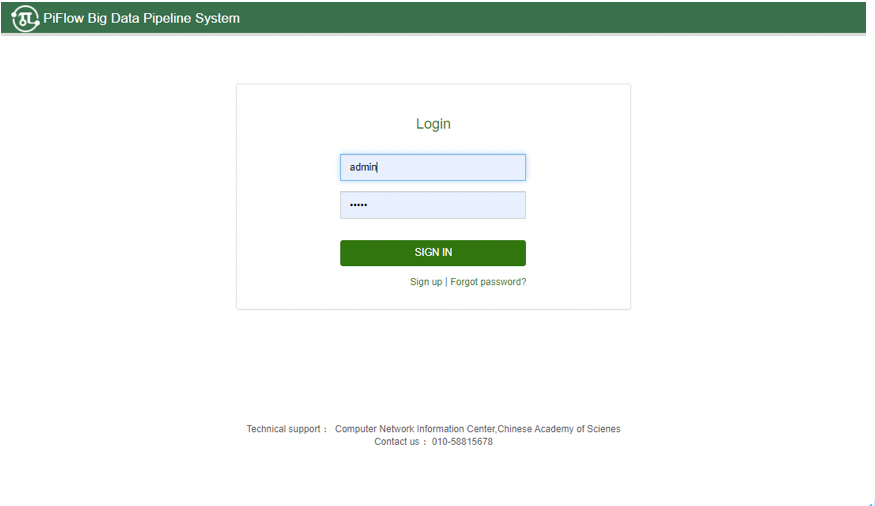

Open the following address to register and log in: http://serverIp:6001
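The web application may take a little while to come up after start.sh returns. A small polling helper, sketched below, can confirm that the page answers before you open it in a browser. `wait_for_http` is a hypothetical function, `curl` is assumed to be installed, and `serverIp` remains a placeholder for your server's address.

```shell
# Poll URL $1 until it answers, trying $2 times (default 30), one second apart.
wait_for_http() {
    url=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        if curl -fsS -o /dev/null --max-time 5 "$url" 2>/dev/null; then
            echo "up: $url"
            return 0
        fi
        tries=$((tries - 1))
        if [ "$tries" -gt 0 ]; then
            sleep 1
        fi
    done
    echo "no response from $url" >&2
    return 1
}

# Example (replace serverIp with the real address):
# wait_for_http http://serverIp:6001 60
```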