This code trains dense image features, then uses them to compute optical flow and image correspondences (dense features are the primary goal; optical flow is just a metric). It was built out of Yu Xiang's DA-RNN code base.

This project is by no means done. The infrastructure to train and test models on multiple datasets is complete, and there are trained models that perform reasonably well. The next steps are investigating better training losses and creating a high-quality real-world dataset. Our best models were trained on synthetic data, although they transfer to real-world data pretty well.

Testing data and a trained model can be downloaded here.

- Install TensorFlow. We use Python 2.7; a virtualenv is recommended. Make sure to install CUDA and cuDNN along the way.

- Clone the repo

git clone https://github.com/daweim0/Just-some-image-features.git

- Compile all the Cython and C files. This consists of running

cd lib/triplet_flow_loss && ./build_cython.sh && cd ../..

cd lib/triplet_flow_loss && ./make_with_cuda.sh && cd ../.. (or make_cpu.sh if you want debugging symbols)

cd lib/gt_lov_correspondence_layer && ./build_cython.sh && cd ../..

cd lib/gt_lov_synthetic_layer && ./build_cython.sh && cd ../..

- Download the training data from here

- Run one of the scripts in /experiments/scripts. They should be run from the repo's root directory. For example, to train features on the synthetic LOV dataset using GPU 0:

./experiments/scripts/lov_features_synthetic.sh 0

- Once a model is trained it can be tested like so:

./experiments/scripts/lov_features_synthetic_60.sh 0 output/lov_synthetic_features_fpn_2x/batch_size_3_loss_L2_optimizer_ADAM_network_net_labeled_fpn_fixed_2017-8-14-17-41-39/vgg16_flow_lov_features_iter_26000.ckpt

(or you can use your own checkpoints)

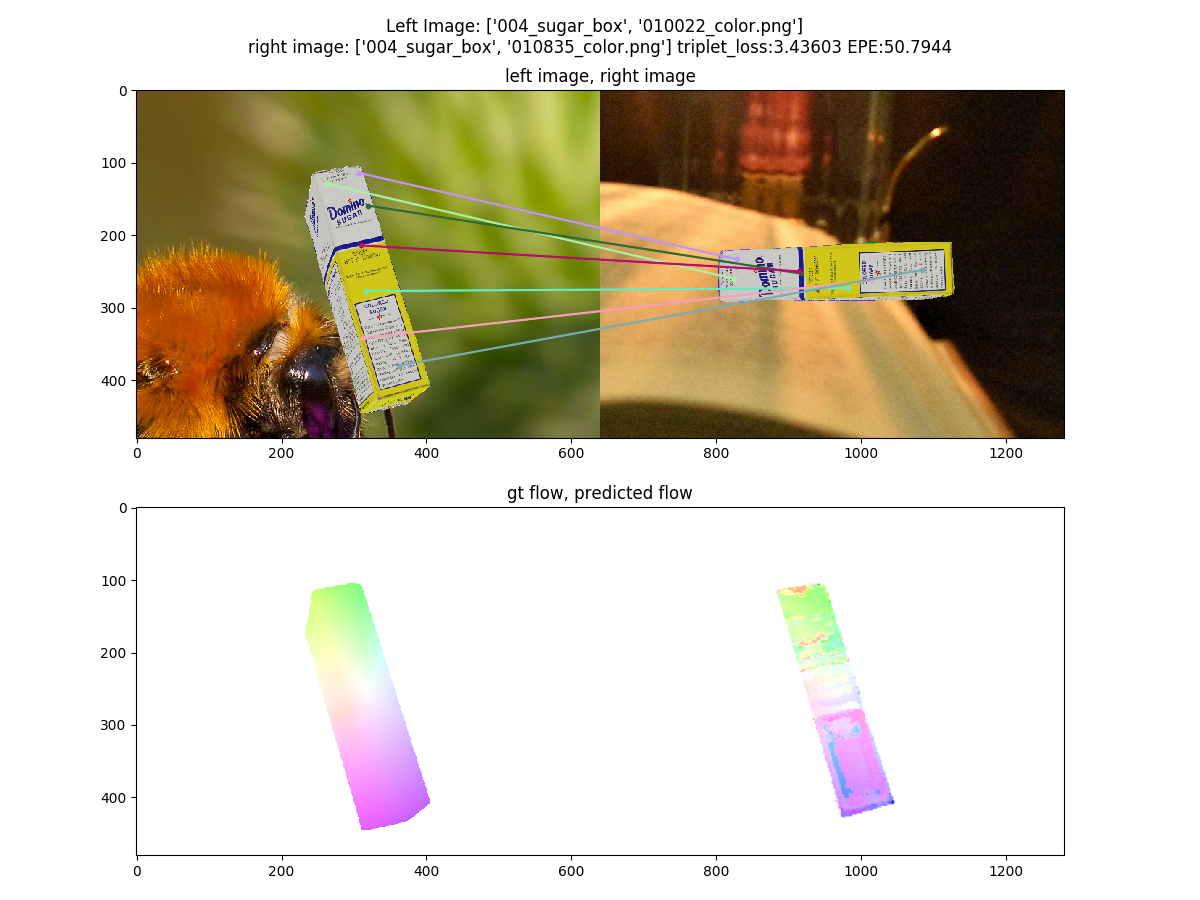

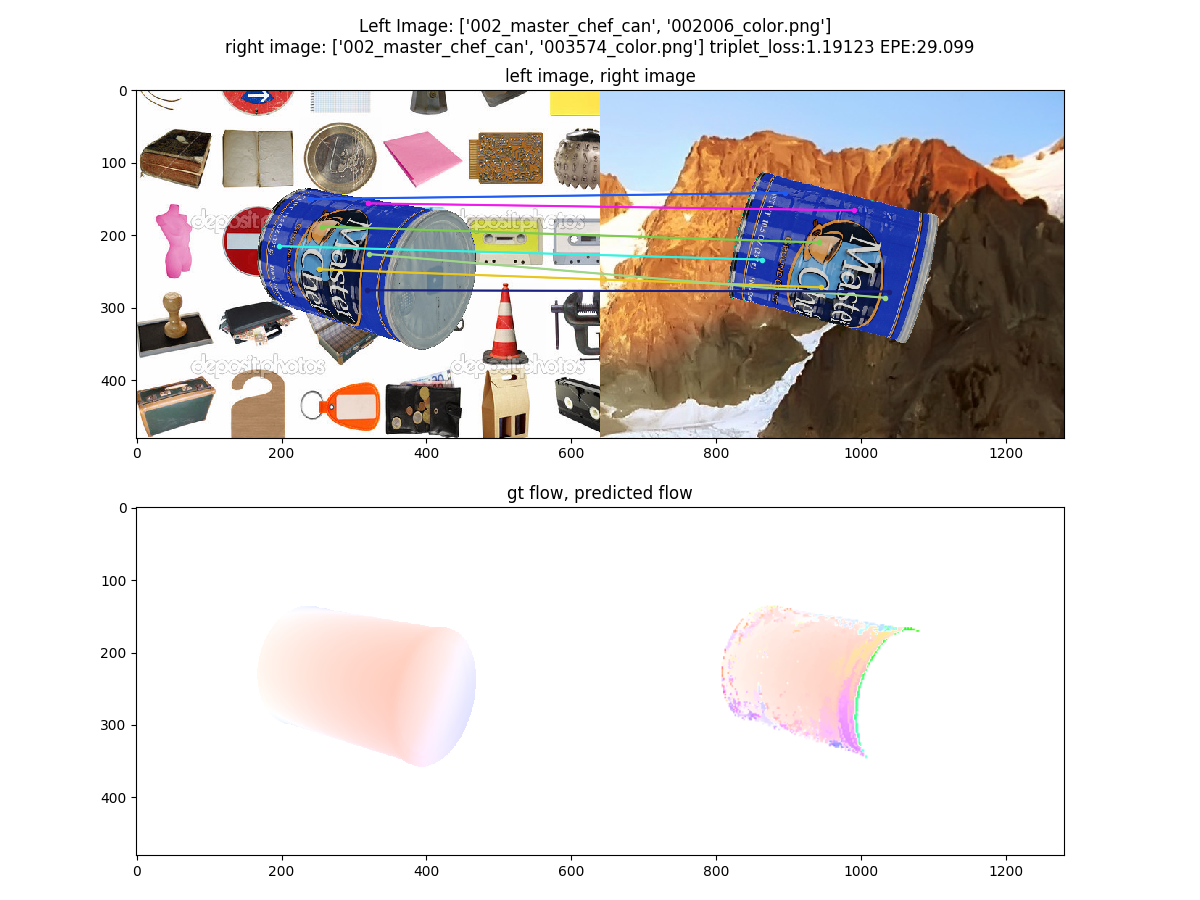

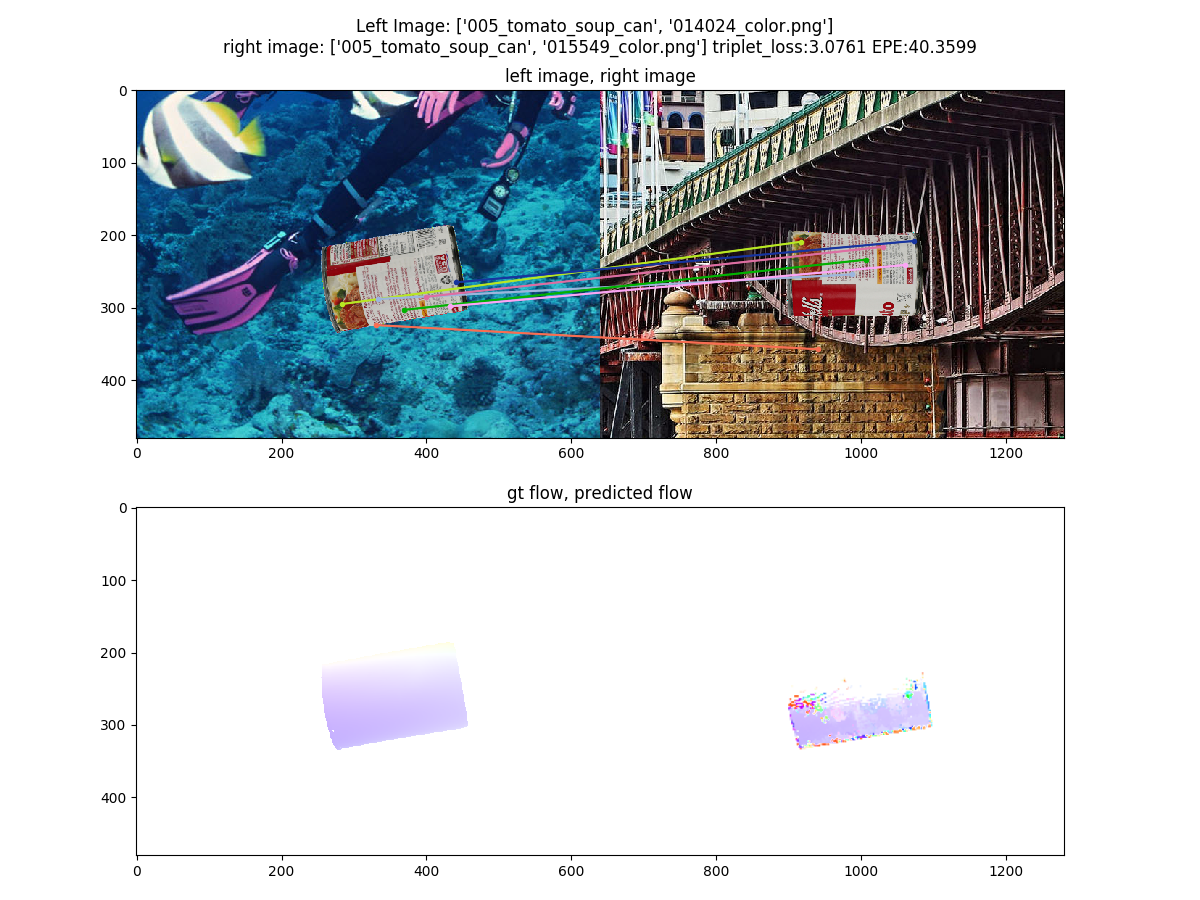

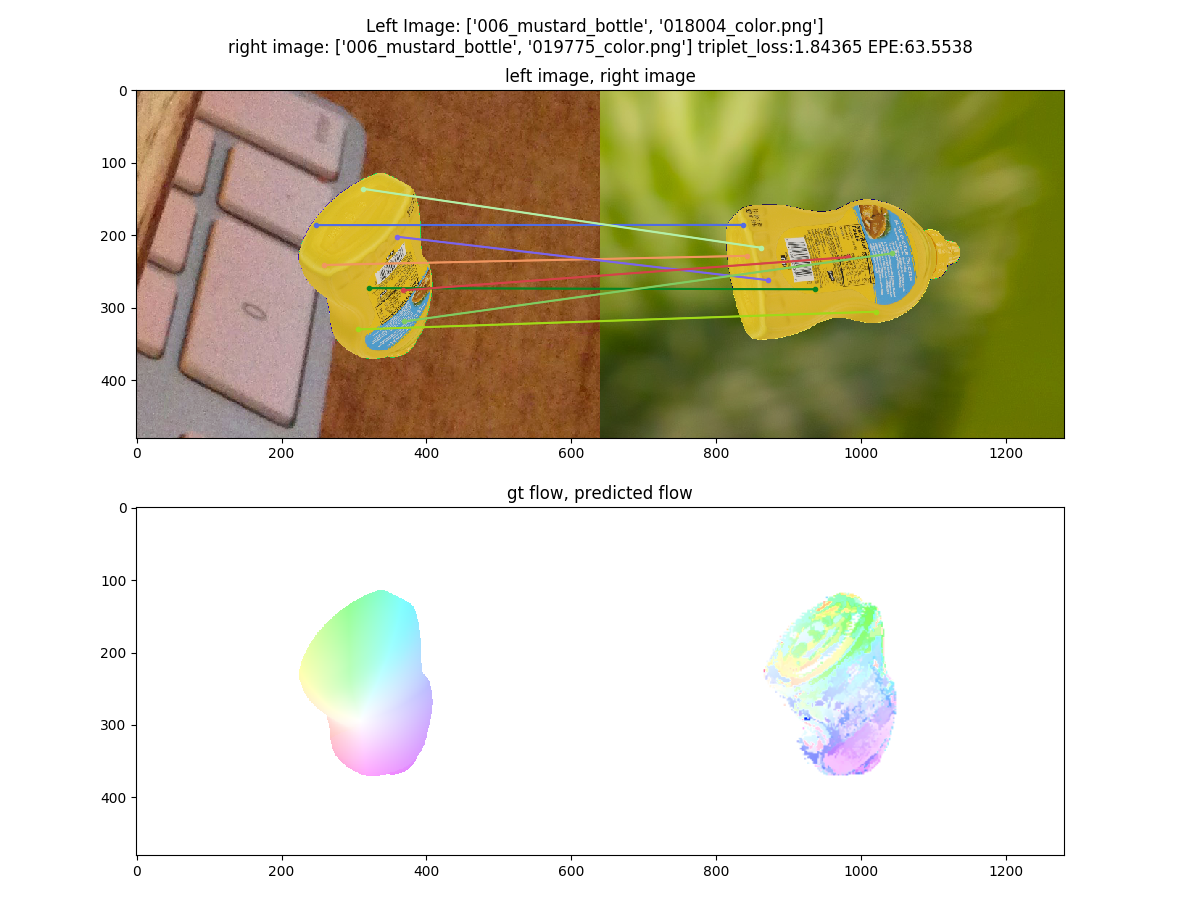

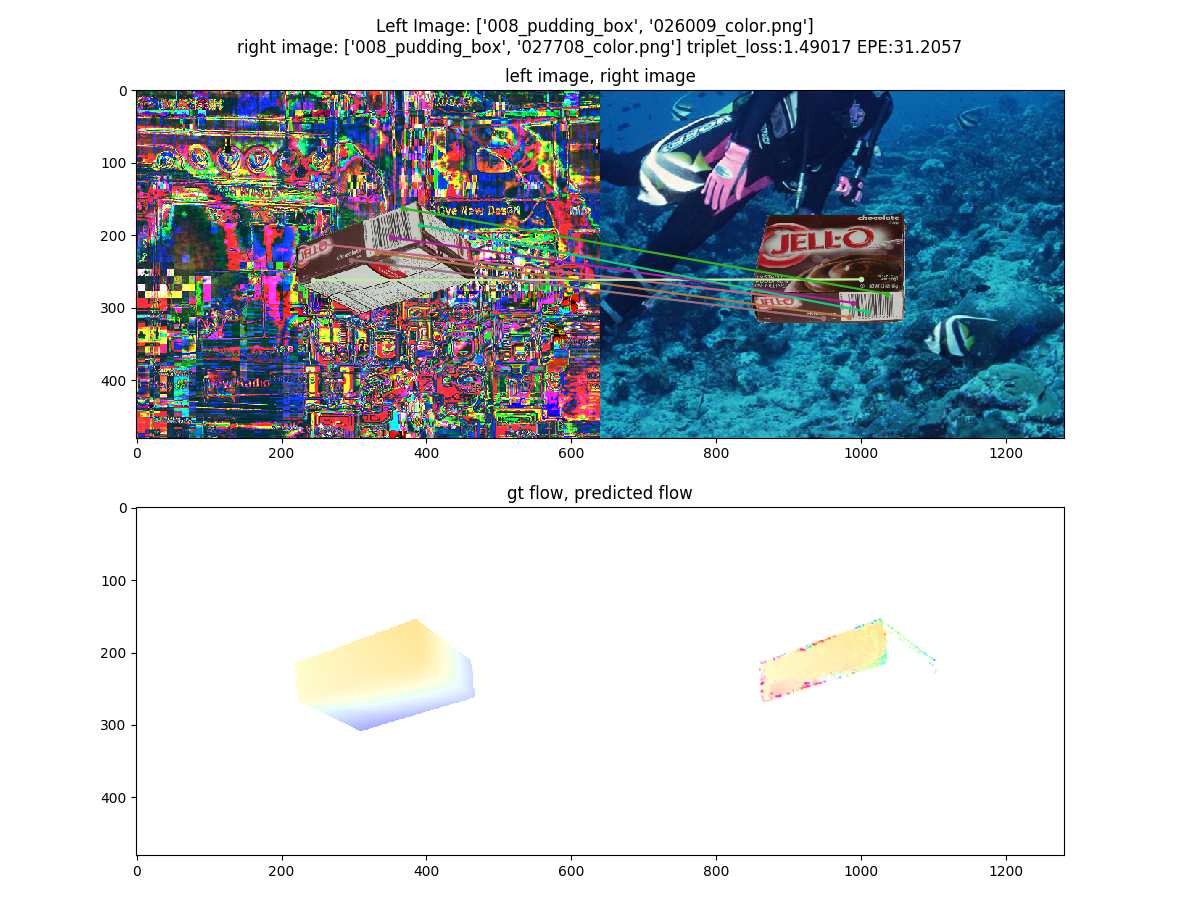

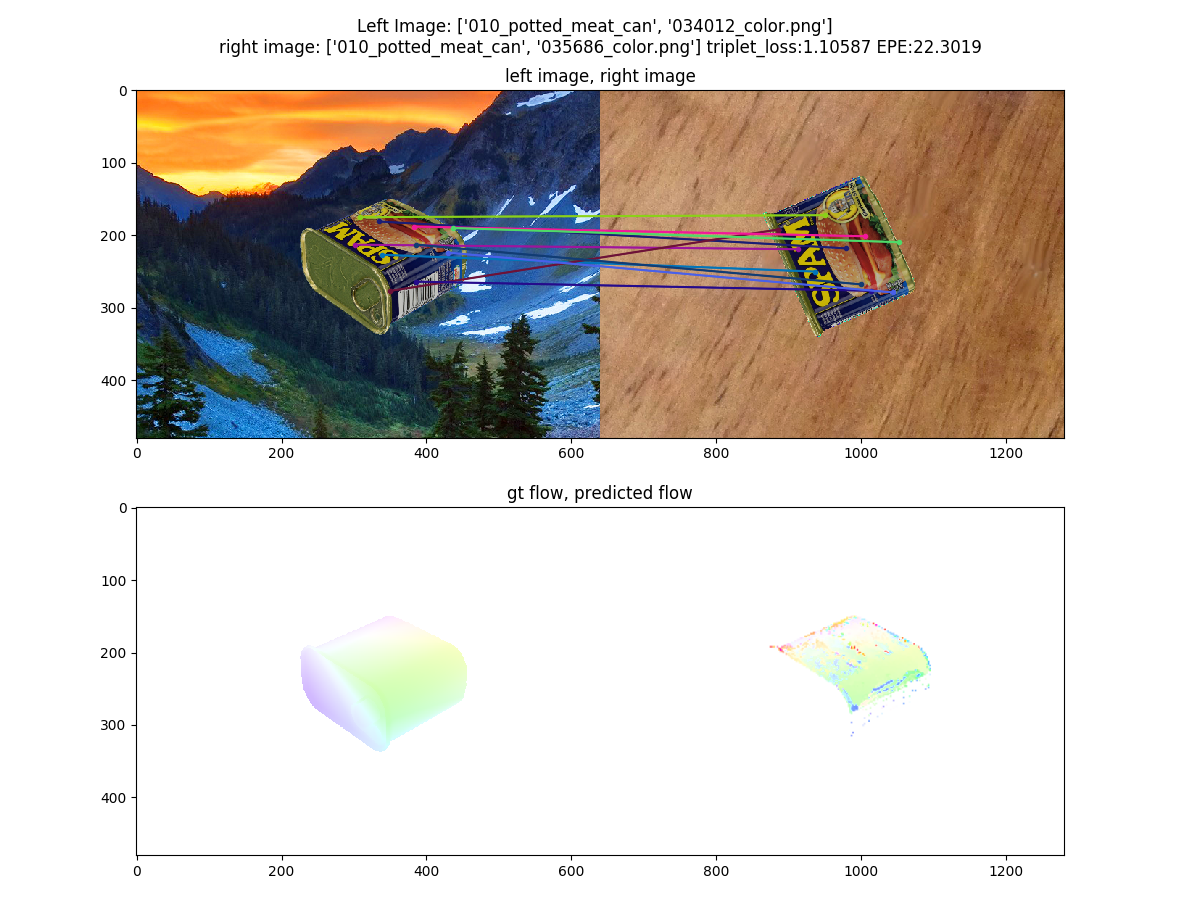

The images above show optical flow calculated by a nearest neighbor search on image feature maps: every pixel in the left image is taken individually and matched to the most similar pixel in the right image. The visualization is the direct output of the nearest neighbor search. No post-processing or smoothing has been done, since the point is to demonstrate how discriminative the features are, not to show off fancy optical flow algorithms.
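The nearest neighbor search described here can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used in this repo: the function name `nearest_neighbor_flow`, the `(H, W, C)` feature layout, and the choice of squared L2 distance as the similarity measure are all assumptions. It is brute force and builds an `(H*W) x (H*W)` distance matrix, so it only suits small feature maps.

```python
import numpy as np


def nearest_neighbor_flow(feat_left, feat_right):
    """Brute-force nearest neighbor flow between two feature maps.

    Hypothetical helper (not part of this repo). Both inputs are
    (H, W, C) arrays of per-pixel features with the same shape.
    Returns an (H, W, 2) integer array of (dx, dy) displacements
    from each left pixel to its best match in the right image.
    """
    h, w, c = feat_left.shape
    left = feat_left.reshape(-1, c).astype(np.float64)
    right = feat_right.reshape(-1, c).astype(np.float64)

    # Squared L2 distance between every left/right pixel pair,
    # expanded as |a|^2 - 2 a.b + |b|^2 (assumed similarity measure).
    dist = ((left ** 2).sum(axis=1)[:, None]
            - 2.0 * left.dot(right.T)
            + (right ** 2).sum(axis=1)[None, :])

    # Index of the most similar right pixel for each left pixel.
    best = dist.argmin(axis=1)
    match_y, match_x = np.divmod(best, w)

    # Flow is the matched position minus the original position.
    ys, xs = np.mgrid[0:h, 0:w]
    dx = match_x.reshape(h, w) - xs
    dy = match_y.reshape(h, w) - ys
    return np.stack([dx, dy], axis=-1)


# Toy check: shift random features 2 pixels to the right.
rng = np.random.RandomState(0)
left = rng.rand(6, 8, 16)
right = np.roll(left, shift=2, axis=1)
flow = nearest_neighbor_flow(left, right)
```

In the toy example, every pixel away from the wrap-around columns recovers the 2-pixel horizontal shift, because each random feature vector has exactly one identical counterpart in the shifted map. Real feature maps are noisier, which is why the raw, unsmoothed output is a useful indicator of how discriminative the features are.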

This code is released under the MIT License (refer to the LICENSE file for details).