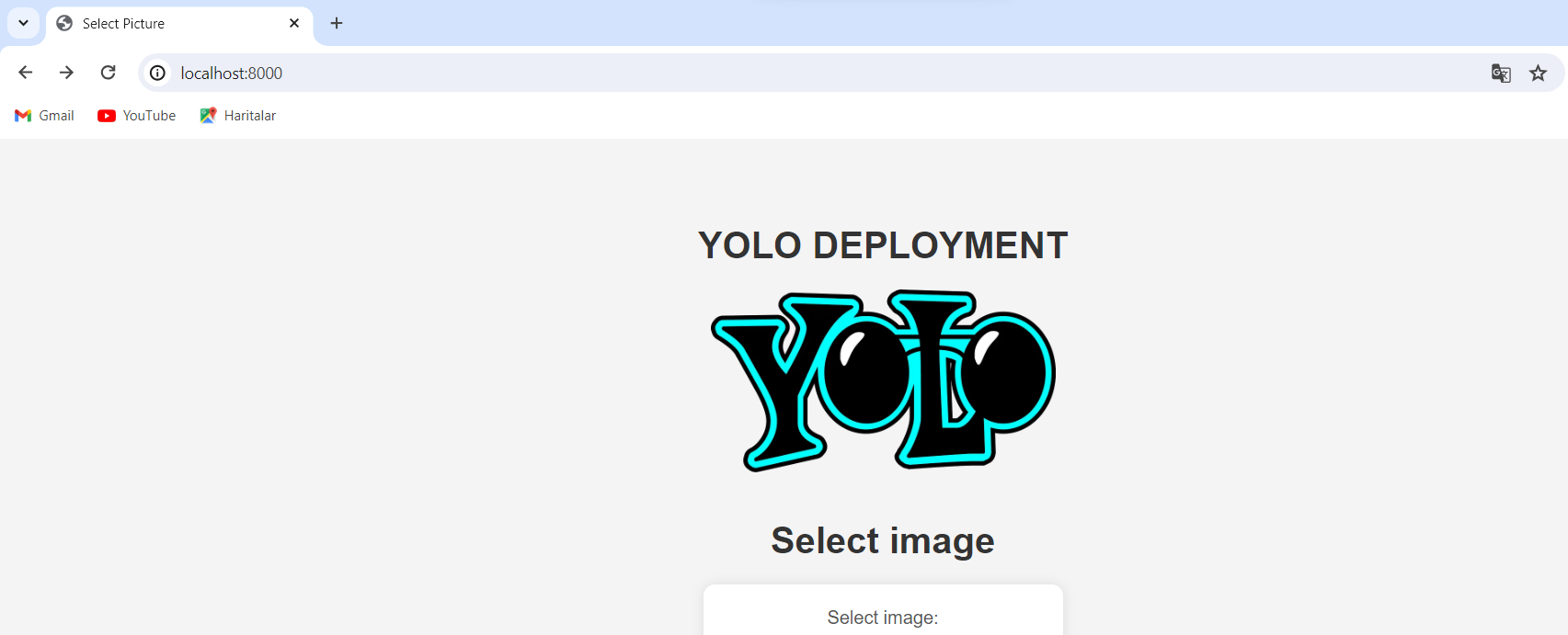

This project provides a microservice for object detection using the YOLO (You Only Look Once) model. The application is containerized with Docker, making it easy to deploy and run.

To run the project locally, ensure that Docker is installed on your machine. Follow these steps to set up the project:

- Clone this repository to your local machine:

      git clone https://github.com/enesagu/Object_detection_fastAPI_docker.git

- Navigate to the project directory:

      cd Object_detection_fastAPI_docker
      cd Object_Detection_Yolo_with_FastAPI

- Build the Docker image and run the application:

      docker build -t object_detection .
      docker run -d -p 8000:8000 object_detection

After successful execution of these steps, you can access the API at http://localhost:8000.

You can use the following API endpoint to detect objects:

- POST /detect/<label?>: Use this endpoint to upload an image file and detect objects with a specific label. The label parameter is optional.
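Because the label is optional, a client has to build two slightly different URLs. The helper below is a minimal sketch of that; the function name `build_detect_url` is hypothetical and not part of this project, and percent-encoding the label is an assumption about how labels containing spaces should be sent.

```python
from urllib.parse import quote


def build_detect_url(base_url, label=None):
    """Build the URL for POST /detect/<label?> (hypothetical helper)."""
    # Always end with /detect/ so the label-less form matches the endpoint.
    url = base_url.rstrip("/") + "/detect/"
    if label:
        # Assumption: labels with spaces or slashes are percent-encoded.
        url += quote(label, safe="")
    return url


print(build_detect_url("http://localhost:8000"))
print(build_detect_url("http://localhost:8000", "person"))
```

The first call targets all labels, the second only detections labelled `person`.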

Example requests:

Command line:

    curl -X POST 'http://localhost:8000/detect/person' -H 'accept: application/json' -H 'Content-Type: multipart/form-data' -F 'image=@bus.JPG;type=image/jpeg'

Python (request.py):

    import requests

    # API endpoint URL
    api_endpoint = "http://localhost:8000/detect/"

    # Image file
    image_path = "test_image1_bus_people.jpg"

    # Send the POST request; the file is closed automatically afterwards
    with open(image_path, "rb") as image_file:
        response = requests.post(api_endpoint, files={"image": image_file})

    # Check the response
    if response.status_code == 200:
        result = response.json()
        print("Detection Results:", result)
    else:
        print("API request failed:", response.text)

This project is built using the FastAPI framework, chosen for its high performance and ease of use. The YOLO model is used for object detection because it is fast and accurate. Additionally, the pre-trained YOLO model is converted to the ONNX format for improved efficiency.

The project is designed to run in a Docker environment. Uploaded image files must adhere to specific dimensions and formats.

To run tests, you can use the docker_image_test.py file located in the test_images folder. Ensure that you have Python installed on your system.

    python docker_image_test.py

The tests should produce the following output:

Test Status: {'Test 1': 'Success', 'Test 2': 'Success', 'Test 3': 'Success'}
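If the test script is run from automation, the status line can be checked programmatically. The sketch below assumes the line has exactly the printed form shown above; the function name `all_tests_passed` is hypothetical and not part of docker_image_test.py.

```python
import ast


def all_tests_passed(output_line):
    """Return True if every entry in a 'Test Status: {...}' line is 'Success'."""
    prefix = "Test Status:"
    if not output_line.startswith(prefix):
        raise ValueError("not a test status line")
    # The dictionary is printed as a Python literal, so literal_eval can read it.
    status = ast.literal_eval(output_line[len(prefix):].strip())
    return all(result == "Success" for result in status.values())


line = "Test Status: {'Test 1': 'Success', 'Test 2': 'Success', 'Test 3': 'Success'}"
print(all_tests_passed(line))
```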

See below for a quickstart installation and usage example, and see the YOLOv8 Docs for full documentation on training, validation, prediction and deployment.

Install

Pip install the ultralytics package including all requirements in a Python>=3.8 environment with PyTorch>=1.8.

    pip install ultralytics

For alternative installation methods including Conda, Docker, and Git, please refer to the Quickstart Guide.

Usage

YOLOv8 may be used directly in the Command Line Interface (CLI) with a yolo command:

    yolo predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg'

yolo can be used for a variety of tasks and modes and accepts additional arguments, e.g. imgsz=640. See the YOLOv8 CLI Docs for examples.

YOLOv8 may also be used directly in a Python environment, and accepts the same arguments as in the CLI example above:

    from ultralytics import YOLO

    # Load the pre-trained PyTorch model
    model = YOLO('yolov8n.pt')

    # Export to ONNX; export() returns the path of the exported file
    onnx_model_path = model.export(format='onnx')
    print("Model exported to ONNX format successfully.")

    # Load the exported ONNX model
    onnx_model = YOLO(onnx_model_path, task='detect')

    source = "https://ultralytics.com/images/bus.jpg"

    # Perform object detection and save the annotated result image
    result = onnx_model(source, save=True)

See YOLOv8 Python Docs for more examples.

Docker image with Uvicorn managed by Gunicorn for high-performance FastAPI web applications in Python with performance auto-tuning. Optionally in a slim version or based on Alpine Linux.

GitHub repo: https://github.com/tiangolo/uvicorn-gunicorn-fastapi-docker

Docker Hub image: https://hub.docker.com/r/tiangolo/uvicorn-gunicorn-fastapi/

FastAPI has been shown to be one of the best-performing Python web frameworks, as measured by third-party benchmarks, thanks to being based on and powered by Starlette.

The achievable performance is on par with (and in many cases superior to) Go and Node.js frameworks.

This image has an auto-tuning mechanism included to start a number of worker processes based on the available CPU cores. That way you can just add your code and get high performance automatically, which is useful in simple deployments.

For example, your Dockerfile could look like:

    FROM python:3.9

    WORKDIR /code

    COPY ./requirements.txt /code/requirements.txt

    RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt

    COPY ./app /code/app

    CMD ["uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "80"]

You should consider using this Docker image if your application is sufficiently simple that you don't need to finely tune the number of processes (at least not yet), and you can simply use an automated default, and if you are running it on a single server, not a cluster.

If you are deploying to a single server (not a cluster) using Docker Compose, and you don't have an easy way to manage replication of containers (with Docker Compose) while preserving shared network and load balancing, then you may want to use a single container with a Gunicorn process manager starting multiple Uvicorn worker processes, as provided by this Docker image.

There may be other reasons why having multiple processes within a single container is preferable to having multiple containers, each with a single process.

For instance, you might have a tool like a Prometheus exporter that needs access to all requests. If you had multiple containers, Prometheus would, by default, only retrieve metrics for one container at a time (the container that handled that specific request), rather than aggregating metrics from all replicated containers.

In such cases, it might be simpler to have one container with multiple processes, with a local tool (e.g., a Prometheus exporter) collecting Prometheus metrics for all internal processes and exposing those metrics on that single container.

You don't need to clone the GitHub repo.

You can use this image as a base image for other images.

Assuming you have a file requirements.txt, you could have a Dockerfile like this:

    FROM tiangolo/uvicorn-gunicorn-fastapi:python3.11

    COPY ./requirements.txt /app/requirements.txt

    RUN pip install --no-cache-dir --upgrade -r /app/requirements.txt

    COPY ./app /app

It will expect a file at /app/app/main.py.

Or otherwise a file at /app/main.py.

And will expect it to contain a variable app with your FastAPI application.

Then you can build your image from the directory that has your Dockerfile, e.g.:

    docker build -t myimage ./

- Go to your project directory.
- Create a Dockerfile with:

      FROM tiangolo/uvicorn-gunicorn-fastapi:python3.11

      COPY ./requirements.txt /app/requirements.txt

      RUN pip install --no-cache-dir --upgrade -r /app/requirements.txt

      COPY ./app /app

- Create an app directory and enter it.
- Create a main.py file with:

from fastapi import FastAPI

app = FastAPI()

@app.get("/")

def read_root():

return {"Hello": "World"}

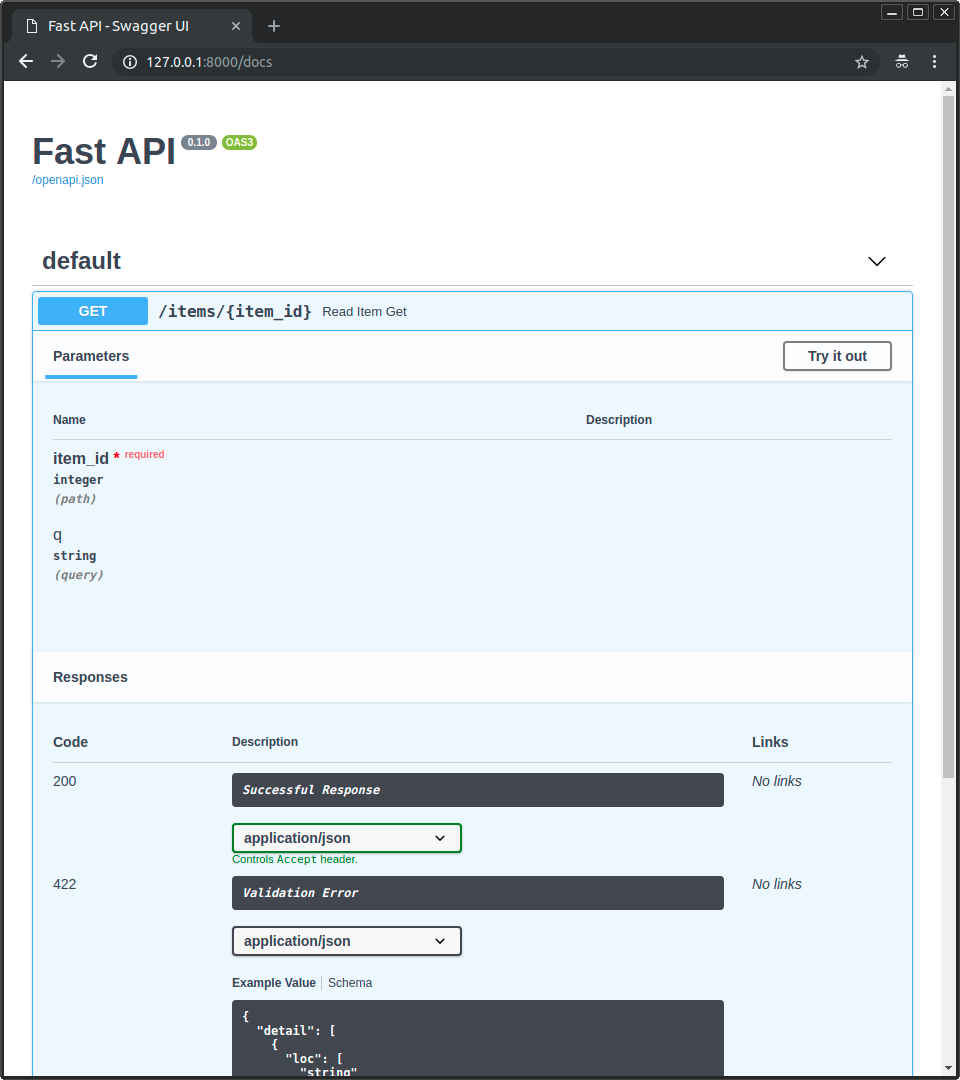

@app.get("/items/{item_id}")

def read_item(item_id: int, q: str = None):

return {"item_id": item_id, "q": q}- You should now have a directory structure like:

      .
      ├── app
      │   └── main.py
      └── Dockerfile

- Go to the project directory (where your Dockerfile is, containing your app directory).
- Build your FastAPI image:

      docker build -t myimage_docker .

- Run a container based on your image:

      docker run -d --name mycontainer -p 80:80 myimage_docker

Now you have an optimized FastAPI server in a Docker container, auto-tuned for your current server (and number of CPU cores).

You can check it at your Docker container's URL, for example:

http://127.0.0.1/items/5?q=somequery

You will see something like:

    {"item_id": 5, "q": "somequery"}

Now you can go to http://192.168.99.100/docs or http://127.0.0.1/docs (or equivalent, using your Docker host).

You will see the automatic interactive API documentation (provided by Swagger UI).

These are the environment variables that you can set in the container to configure it and their default values:

MODULE_NAME

The Python "module" (file) to be imported by Gunicorn. This module contains the actual application in a variable.

By default:

- app.main if there's a file /app/app/main.py or
- main if there's a file /app/main.py

For example, if your main file was at /app/custom_app/custom_main.py, you could set it like:

    docker run -d -p 80:80 -e MODULE_NAME="custom_app.custom_main" myimage

VARIABLE_NAME

The variable inside of the Python module that contains the FastAPI application.

By default:

app

For example, if your main Python file has something like:

    from fastapi import FastAPI

    api = FastAPI()


    @api.get("/")
    def read_root():
        return {"Hello": "World"}

In this case api would be the variable with the FastAPI application. You could set it like:

    docker run -d -p 80:80 -e VARIABLE_NAME="api" myimage

APP_MODULE

The string with the Python module and the variable name passed to Gunicorn.

By default, set based on the variables MODULE_NAME and VARIABLE_NAME:

- app.main:app or
- main:app
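The default resolution described for MODULE_NAME, VARIABLE_NAME, and APP_MODULE can be sketched in Python. This is only an illustration of the documented behavior, not the image's actual start-up script: the function name `resolve_app_module` and the injectable `file_exists` check are hypothetical, and raising an error when neither main.py exists is an assumption.

```python
import os


def resolve_app_module(env, file_exists=os.path.isfile):
    """Return the "module:variable" string that would be passed to Gunicorn."""
    # An explicit APP_MODULE takes priority over everything else.
    if env.get("APP_MODULE"):
        return env["APP_MODULE"]

    module_name = env.get("MODULE_NAME")
    if not module_name:
        # Documented defaults depend on which main.py file exists.
        if file_exists("/app/app/main.py"):
            module_name = "app.main"
        elif file_exists("/app/main.py"):
            module_name = "main"
        else:
            # Assumption: the README does not say what happens in this case.
            raise FileNotFoundError("no main.py found under /app")

    variable_name = env.get("VARIABLE_NAME", "app")
    return f"{module_name}:{variable_name}"
```

Inside a container the function could be called as `resolve_app_module(os.environ)`; passing a plain dictionary and a fake `file_exists` makes the logic easy to try out elsewhere.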

You can set it like:

    docker run -d -p 80:80 -e APP_MODULE="custom_app.custom_main:api" myimage

GUNICORN_CONF

The path to a Gunicorn Python configuration file.

By default:

- /app/gunicorn_conf.py if it exists
- /app/app/gunicorn_conf.py if it exists
- /gunicorn_conf.py (the included default)

You can set it like:

    docker run -d -p 80:80 -e GUNICORN_CONF="/app/custom_gunicorn_conf.py" myimage

You can use the config file from the base image as a starting point for yours.
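As a rough illustration, a custom configuration file might look like the sketch below. It is not the file shipped in the base image; it only maps some of the environment variables documented in this README onto Gunicorn setting names (`loglevel`, `timeout`, `graceful_timeout`, `keepalive`, `accesslog`, `errorlog`), using the defaults stated here. The helper name `load_settings` is hypothetical.

```python
import os


def load_settings(env):
    """Translate environment variables into Gunicorn setting names."""
    return {
        "loglevel": env.get("LOG_LEVEL", "info"),
        "timeout": int(env.get("TIMEOUT", "120")),
        "graceful_timeout": int(env.get("GRACEFUL_TIMEOUT", "120")),
        "keepalive": int(env.get("KEEP_ALIVE", "2")),
        # "-" means stdout/stderr; an empty value disables the log.
        "accesslog": env.get("ACCESS_LOG", "-") or None,
        "errorlog": env.get("ERROR_LOG", "-") or None,
    }


# Gunicorn reads settings from module-level names in the config file,
# so the computed values are exposed as globals of this module.
globals().update(load_settings(os.environ))
```

Keeping the translation in a function that takes a mapping makes it possible to check the values without starting Gunicorn.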

WORKERS_PER_CORE

This image will check how many CPU cores are available in the current server running your container.

It will set the number of workers to the number of CPU cores multiplied by this value.

By default:

1

You can set it like:

    docker run -d -p 80:80 -e WORKERS_PER_CORE="3" myimage

If you used the value 3 in a server with 2 CPU cores, it would run 6 worker processes.

You can use floating point values too.

So, for example, if you have a big server (let's say, with 8 CPU cores) running several applications, and you have a FastAPI application that you know won't need high performance, you can avoid wasting server resources by making it use 0.5 workers per CPU core. For example:

    docker run -d -p 80:80 -e WORKERS_PER_CORE="0.5" myimage

In a server with 8 CPU cores, this would make it start only 4 worker processes.

Note: By default, if WORKERS_PER_CORE is 1 and the server has only 1 CPU core, instead of starting 1 single worker, it will start 2. This is to avoid bad performance and blocking applications (server application) on small machines (server machine/cloud/etc). This can be overridden using WEB_CONCURRENCY.

MAX_WORKERS

Set the maximum number of workers to use.

You can use it to let the image compute the number of workers automatically while making sure it's limited to a maximum.

This can be useful, for example, if each worker uses a database connection and your database has a maximum limit of open connections.

By default it's not set, meaning that it's unlimited.

You can set it like:

    docker run -d -p 80:80 -e MAX_WORKERS="24" myimage

This would make the image start at most 24 workers, independent of how many CPU cores are available in the server.

WEB_CONCURRENCY

Override the automatic definition of number of workers.

By default:

- Set to the number of CPU cores in the current server multiplied by the environment variable WORKERS_PER_CORE. So, in a server with 2 cores, by default it will be set to 2.

You can set it like:

    docker run -d -p 80:80 -e WEB_CONCURRENCY="2" myimage

This would make the image start 2 worker processes, independent of how many CPU cores are available in the server.
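The interaction between WORKERS_PER_CORE, MAX_WORKERS, and WEB_CONCURRENCY can be summarized in a few lines of Python. This is a sketch of the rules as described in this README, not the image's own code: the function name `worker_count` is hypothetical, and applying the minimum of two workers to every computed value (rather than only to the single-core case mentioned in the note) is an assumption.

```python
def worker_count(cores, env):
    """Compute the number of Gunicorn workers from the documented variables."""
    # WEB_CONCURRENCY overrides the automatic calculation entirely.
    if env.get("WEB_CONCURRENCY"):
        return int(env["WEB_CONCURRENCY"])

    # Floating point values such as 0.5 are allowed.
    workers_per_core = float(env.get("WORKERS_PER_CORE", "1"))
    # Assumption: never start fewer than two workers automatically.
    workers = max(int(workers_per_core * cores), 2)

    # MAX_WORKERS, when set, caps the computed value.
    if env.get("MAX_WORKERS"):
        workers = min(workers, int(env["MAX_WORKERS"]))
    return workers


print(worker_count(2, {"WORKERS_PER_CORE": "3"}))    # 2 cores * 3 = 6
print(worker_count(8, {"WORKERS_PER_CORE": "0.5"}))  # 8 cores * 0.5 = 4
print(worker_count(1, {}))                           # raised to the minimum of 2
```

On a real machine, `os.cpu_count()` could supply the `cores` argument and `os.environ` the `env` mapping.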

HOST

The "host" used by Gunicorn, the IP where Gunicorn will listen for requests.

It is the host inside of the container.

So, for example, if you set this variable to 127.0.0.1, it will only be available inside the container, not in the host running it.

It is provided for completeness, but you probably shouldn't change it.

By default:

0.0.0.0

PORT

The port the container should listen on.

If you are running your container in a restrictive environment that forces you to use some specific port (like 8080) you can set it with this variable.

By default:

80

You can set it like:

    docker run -d -p 80:8080 -e PORT="8080" myimage

BIND

The actual host and port passed to Gunicorn.

By default, set based on the variables HOST and PORT.

So, if you didn't change anything, it will be set by default to:

0.0.0.0:80
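How the bind address follows from HOST and PORT can be sketched as below. The function name `resolve_bind` is hypothetical; it only restates the defaults given in this README and is not taken from the image itself.

```python
def resolve_bind(env):
    """Return the host:port string that would be passed to Gunicorn."""
    # An explicit BIND wins over HOST and PORT.
    if env.get("BIND"):
        return env["BIND"]
    host = env.get("HOST", "0.0.0.0")
    port = env.get("PORT", "80")
    return f"{host}:{port}"


print(resolve_bind({}))                # 0.0.0.0:80
print(resolve_bind({"PORT": "8080"}))  # 0.0.0.0:8080
```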

You can set it like:

    docker run -d -p 80:8080 -e BIND="0.0.0.0:8080" myimage

LOG_LEVEL

The log level for Gunicorn.

One of:

- debug
- info
- warning
- error
- critical

By default, set to info.

If you need to squeeze out more performance at the cost of logging, set it to warning, for example:

    docker run -d -p 80:80 -e LOG_LEVEL="warning" myimage

WORKER_CLASS

The class to be used by Gunicorn for the workers.

By default, set to uvicorn.workers.UvicornWorker.

The fact that it uses Uvicorn is what allows using ASGI frameworks like FastAPI, and that is also what provides the maximum performance.

You probably shouldn't change it.

But if for some reason you need to use the alternative Uvicorn worker, uvicorn.workers.UvicornH11Worker, you can set it with this environment variable.

You can set it like:

    docker run -d -p 80:80 -e WORKER_CLASS="uvicorn.workers.UvicornH11Worker" myimage

TIMEOUT

Workers silent for more than this many seconds are killed and restarted.

Read more about it in the Gunicorn docs: timeout.

By default, set to 120.

Notice that Uvicorn and ASGI frameworks like FastAPI are async, not sync. So it's probably safe to have higher timeouts than for sync workers.

You can set it like:

    docker run -d -p 80:80 -e TIMEOUT="20" myimage

KEEP_ALIVE

The number of seconds to wait for requests on a Keep-Alive connection.

Read more about it in the Gunicorn docs: keepalive.

By default, set to 2.

You can set it like:

    docker run -d -p 80:80 -e KEEP_ALIVE="20" myimage

GRACEFUL_TIMEOUT

Timeout for graceful workers restart.

Read more about it in the Gunicorn docs: graceful-timeout.

By default, set to 120.

You can set it like:

    docker run -d -p 80:80 -e GRACEFUL_TIMEOUT="20" myimage

ACCESS_LOG

The access log file to write to.

By default "-", which means stdout (print in the Docker logs).

If you want to disable ACCESS_LOG, set it to an empty value.

For example, you could disable it with:

    docker run -d -p 80:80 -e ACCESS_LOG= myimage

ERROR_LOG

The error log file to write to.

By default "-", which means stderr (print in the Docker logs).

If you want to disable ERROR_LOG, set it to an empty value.

For example, you could disable it with:

    docker run -d -p 80:80 -e ERROR_LOG= myimage

GUNICORN_CMD_ARGS

Any additional command line settings for Gunicorn can be passed in the GUNICORN_CMD_ARGS environment variable.

Read more about it in the Gunicorn docs: Settings.

These settings will have precedence over the other environment variables and any Gunicorn config file.

For example, if you have a custom TLS/SSL certificate that you want to use, you could copy the key and certificate files to the Docker image or mount them in the container, and set --keyfile and --certfile to the location of the files, for example:

    docker run -d -p 443:443 -e GUNICORN_CMD_ARGS="--keyfile=/secrets/key.pem --certfile=/secrets/cert.pem" -e PORT=443 myimage

Note: instead of handling TLS/SSL yourself and configuring it in the container, it's recommended to use a "TLS Termination Proxy" like Traefik. You can read more about it in the FastAPI documentation about HTTPS.

PRE_START_PATH

The path where to find the pre-start script.

By default, set to /app/prestart.sh.

You can set it like:

    docker run -d -p 80:80 -e PRE_START_PATH="/custom/script.sh" myimage

In short, it's generally recommended to avoid using Alpine for Python projects and to opt for the slim Docker image versions instead.

While Alpine Linux is often praised for its lightweight nature, it may not be the best choice for Python projects. Unlike languages like Go, where you can build a static binary and copy it to a simple Alpine image, Python's reliance on specific tooling for building extensions can cause complications.

When installing Python packages in Alpine, you may encounter difficulties due to the lack of precompiled installable packages ("wheels"). This often leads to installing additional tooling and building dependencies, resulting in an image size comparable to, or sometimes even larger than, using a standard Python image based on Debian.

Using Alpine for Python images can also significantly increase build times and resource consumption. Building dependencies takes longer, requiring more CPU time and energy for each build, ultimately increasing the carbon footprint of the project.

For those seeking slim Python images, it's advisable to consider using the "slim" versions based on Debian. These images offer a balance between size and usability, providing a more efficient solution for Python development.

https://github.com/tiangolo/uvicorn-gunicorn-fastapi-docker/blob/master/README.md