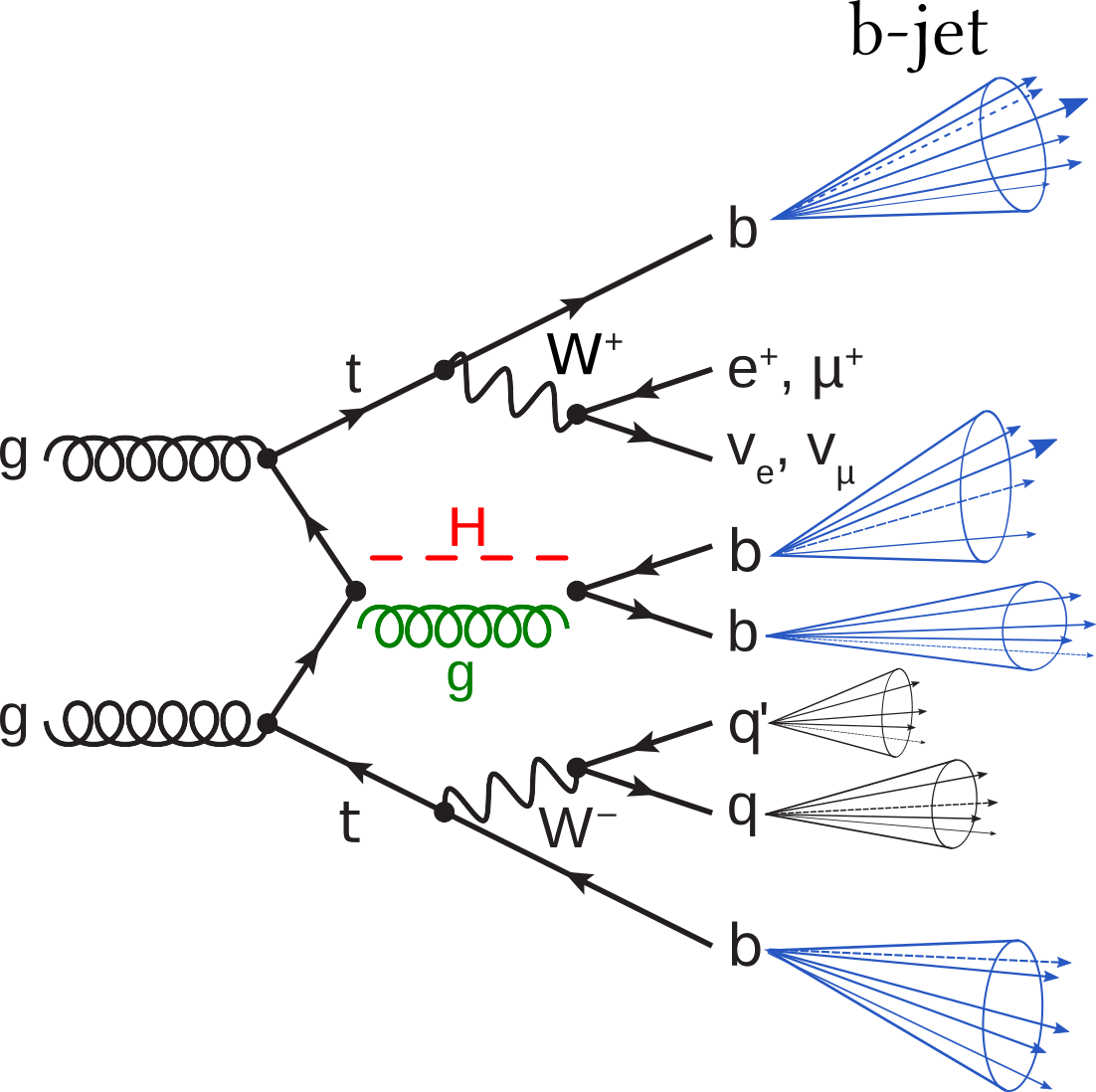

Example leading-order (LO) Feynman diagrams of the signal process (red) and the dominant background process (green) [1]. The Higgs boson is produced in association with … via gluon fusion and decays to …. The channel is semi-leptonic, as only one of the W bosons decays into leptons.

Datasets [1]

- Download the optimal dataset.

- Use only the training set; the validation and test sets are not needed for training the GAN models.

- Keep only the positive class (y = 1), i.e. the events in which a Higgs boson is produced, since we are not interested in reproducing the background data.

- The 67 features in the dataset are arranged as follows:

  1st jet, 2nd jet, 3rd jet, ..., 7th jet, MET, lepton

  - The 7 jets have 7 × 8 = 56 features in total; each jet has 8 features, ordered as:

    ["pt", "eta", "phi", "en", "px", "py", "pz", "btag"]

  - MET has 4 features, ordered as:

    ["phi", "pt", "px", "py"]

  - The lepton has 7 features, ordered as:

    ["pt", "eta", "phi", "en", "px", "py", "pz"]

- We then preprocess the training set so that it contains:

  - Only the two b-jets (jets with btag = 1) with the highest "pt".

  - For each of these jets, only the "pt", "eta", "phi" and "en" features.
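The feature layout above can be turned into a name-to-column lookup, which makes the later slicing less error-prone. This is a minimal sketch assuming the raw file stores the 67 features in exactly the order listed; the names such as `jet2_btag` and the helper `build_column_index` are hypothetical labels, not part of the dataset.

```python
# Hypothetical helper: maps labels such as "jet2_btag" to column indices in the
# 67-column feature vector, assuming the ordering listed above.
JET_FEATURES = ["pt", "eta", "phi", "en", "px", "py", "pz", "btag"]
MET_FEATURES = ["phi", "pt", "px", "py"]
LEPTON_FEATURES = ["pt", "eta", "phi", "en", "px", "py", "pz"]
N_JETS = 7


def build_column_index():
    """Return a dict mapping feature labels to their column index."""
    names = []
    for j in range(1, N_JETS + 1):  # jets come first: 7 blocks of 8 columns
        names += [f"jet{j}_{f}" for f in JET_FEATURES]
    names += [f"met_{f}" for f in MET_FEATURES]  # then MET: 4 columns
    names += [f"lepton_{f}" for f in LEPTON_FEATURES]  # then the lepton: 7 columns
    return {name: i for i, name in enumerate(names)}


COLUMNS = build_column_index()
print(COLUMNS["jet2_btag"], COLUMNS["met_pt"], COLUMNS["lepton_pt"])  # -> 15 57 60
```

Jet `j` (1-based) occupies columns `8*(j-1)` to `8*(j-1)+7`, MET starts at column 56 and the lepton at column 60, so the last column is 66.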

Notes:

MET = missing transverse momentum

lepton = electron or muon

btag = 1 if the jet is b-tagged (a b-jet), 0 otherwise

In total, the final training set should have 2 × 4 = 8 features.
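A minimal NumPy sketch of the preprocessing steps, assuming the data are held as an `(N, 67)` array `X` with 0/1 labels `y`. The function name `preprocess` is hypothetical, and dropping events with fewer than two b-tagged jets is an assumption, since the text does not say how such events are handled.

```python
import numpy as np

N_JETS, N_JET_FEATURES = 7, 8
PT, ETA, PHI, EN, BTAG = 0, 1, 2, 3, 7  # positions inside each jet's 8-feature block


def preprocess(X, y):
    """Keep signal events and the (pt, eta, phi, en) of their two leading-pt b-jets.

    X: array of shape (N, 67); y: array of shape (N,) with labels 0/1.
    Returns an array of shape (M, 8): [pt, eta, phi, en] of b-jet 1, then of b-jet 2.
    Assumption: events with fewer than two b-tagged jets are dropped.
    """
    X = X[y == 1]  # positive class (signal) only
    jets = X[:, : N_JETS * N_JET_FEATURES].reshape(-1, N_JETS, N_JET_FEATURES)
    is_b = jets[:, :, BTAG] == 1
    keep = is_b.sum(axis=1) >= 2  # need at least two b-jets
    jets, is_b = jets[keep], is_b[keep]
    # Give non-b-jets pt = -inf so they sort last, then sort by descending pt.
    sort_pt = np.where(is_b, jets[:, :, PT], -np.inf)
    order = np.argsort(-sort_pt, axis=1)[:, :2]  # indices of the two leading b-jets
    top2 = np.take_along_axis(jets, order[:, :, None], axis=1)  # shape (M, 2, 8)
    return top2[:, :, [PT, ETA, PHI, EN]].reshape(-1, 8)
```

Sorting on a masked copy of "pt" keeps the selection fully vectorised, so it scales to the full training set without a Python loop over events.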

DijetGAN [2] (Edo)

Settings: optimal dataset, 200 epochs, batch size 128, optimizer as explained in reference [2].
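The tables below report a Wasserstein distance between generated and real events, but this section does not define it. One plausible definition, assumed in this sketch, is the 1-D Wasserstein-1 distance computed per feature and averaged over the 8 features. For two samples of equal size, the optimal transport plan matches sorted values, so the 1-D distance is the mean absolute difference of the sorted samples. `scipy.stats.wasserstein_distance` computes the same 1-D quantity and also handles unequal sample sizes. The function names here are hypothetical.

```python
import numpy as np


def wasserstein_1d(u, v):
    """Exact W1 distance between two equal-size 1-D empirical samples."""
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    if u.shape != v.shape:
        raise ValueError("this sketch assumes equal sample sizes")
    return float(np.mean(np.abs(u - v)))


def mean_feature_wasserstein(real, fake):
    """Average the 1-D W1 distance over the feature columns of two (N, D) arrays."""
    real = np.asarray(real, dtype=float)
    fake = np.asarray(fake, dtype=float)
    return float(np.mean([wasserstein_1d(real[:, d], fake[:, d])
                          for d in range(real.shape[1])]))
```

Averaging 1-D distances only compares marginals; it does not detect a generator that gets each feature right but the correlations between features wrong.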

- generator loss: MSE

| Filter Size | Stride | Wasserstein distance |
|---|---|---|
| 5 | 1 | 0.126243 |
| 3 | 1 | 0.050658 |
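If "generator loss: MSE" refers to the least-squares GAN objective (an assumption; [2] may define it differently), the losses on the raw discriminator outputs could look like this sketch. The 1 (real) / 0 (fake) targets follow the common LSGAN convention, and the function names are hypothetical.

```python
import numpy as np


def lsgan_generator_loss(d_fake):
    """MSE between D(G(z)) and the 'real' target 1."""
    d_fake = np.asarray(d_fake, dtype=float)
    return float(np.mean((d_fake - 1.0) ** 2))


def lsgan_discriminator_loss(d_real, d_fake):
    """MSE pushing D(x) toward 1 for real events and D(G(z)) toward 0 for generated ones."""
    d_real = np.asarray(d_real, dtype=float)
    d_fake = np.asarray(d_fake, dtype=float)
    return float(0.5 * (np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2)))
```

Unlike the cross-entropy loss, the squared error keeps penalising generated samples that the discriminator already classifies correctly but that lie far from the decision boundary.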

- generator loss: cross-entropy

| Generator Filter Size | Discriminator Filter Size | Generator Stride | Discriminator Stride | Wasserstein distance | Notes |
|---|---|---|---|---|---|
| 7 | 7 | 1 | 1 | 0.054331 | - |
| 5 | 5 | 1 | 1 | 0.070837 | - |
| 5 | 5 | 2 | 2 | did not converge | - |
| 3 | 3 | 1 | 1 | 0.045924 | - |
| 3 | 3 | 1 | 1 | 0.040866 | Extra TransposeConv2D layer with 64 channels; dataset rescaled to [0, 1] |
| 3 | 3 | 2 | 2 | did not converge | - |
| 3 | 2 | 1 | 1 | 0.046144 | - |
| 2 | 2 | 1 | 1 | 0.050755 | - |
| 2 | 3 | 1 | 1 | 0.048710 | - |
| 2 | 5 | 1 | 1 | 0.057567 | - |
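For comparison, a sketch of the cross-entropy variant, assuming the standard non-saturating generator loss -log D(G(z)) and a discriminator that outputs logits (pre-sigmoid scores). Writing -log sigmoid(l) as softplus(-l) with `np.logaddexp` avoids taking the log of a saturated sigmoid. The function names are hypothetical, and [2] may use a different form.

```python
import numpy as np


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return np.logaddexp(0.0, x)


def bce_generator_loss(fake_logits):
    """Non-saturating loss: -mean(log sigmoid(D(G(z))))."""
    return float(np.mean(softplus(-np.asarray(fake_logits, dtype=float))))


def bce_discriminator_loss(real_logits, fake_logits):
    """Binary cross-entropy with label 1 for real events and 0 for generated ones."""
    real_logits = np.asarray(real_logits, dtype=float)
    fake_logits = np.asarray(fake_logits, dtype=float)
    return float(np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits)))
```

At logit 0 (D outputs 0.5), the generator loss is log 2 ≈ 0.693, which is a useful sanity check at the start of training.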

- WGAN with the DijetGAN architecture (Wasserstein loss)

| Filter Size | Stride | Wasserstein distance |
|---|---|---|
| 5 | 1 | |
| 3 | 1 | |
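A minimal sketch of the Wasserstein losses, assuming the critic keeps the DijetGAN layers but drops the final sigmoid so that it outputs unbounded scores. How the Lipschitz constraint is enforced is not stated here; weight clipping with c = 0.01, as in the original WGAN paper, is shown as one option, and a gradient penalty is a common alternative. The function names are hypothetical.

```python
import numpy as np


def critic_loss(real_scores, fake_scores):
    """Critic minimises E[D(G(z))] - E[D(x)], i.e. maximises the real-fake score gap."""
    return float(np.mean(fake_scores) - np.mean(real_scores))


def generator_loss(fake_scores):
    """Generator minimises -E[D(G(z))]."""
    return float(-np.mean(fake_scores))


def clip_weights(weights, c=0.01):
    """Weight clipping from the original WGAN, keeping the critic roughly Lipschitz."""
    return [np.clip(w, -c, c) for w in weights]
```

The negative of the critic loss is the critic's running estimate of the Wasserstein distance, so it can be logged during training alongside the per-feature metric used in the tables.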