
Commit 2f21ef2 (parent fa30522)

docs: Add docs for installation, configuration and development

27 changed files with 284 additions and 462 deletions.


| --- | ||

| slug: / | ||

| --- | ||

|

|

||

| # Introduction | ||

|

|

||

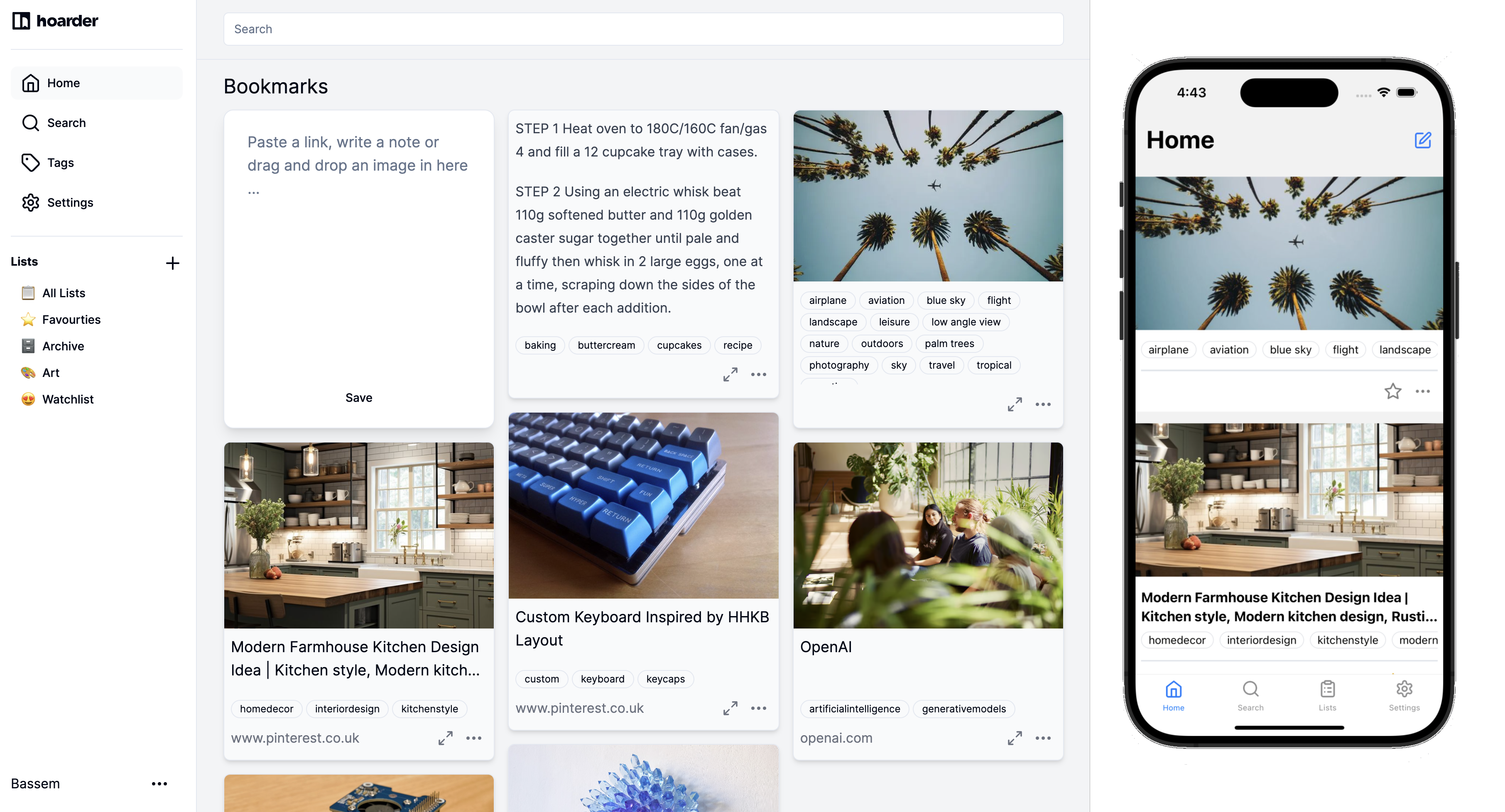

| Hoarder is an open source "Bookmark Everything" app that uses AI for automatically tagging the content you throw at it. The app is built with self-hosting as a first class citizen. | ||

|

|

||

|  | ||

|

|

||

|

|

||

| ## Features | ||

|

|

||

| - 🔗 Bookmark links, take simple notes and store images. | ||

| - ⬇️ Automatic fetching for link titles, descriptions and images. | ||

| - 📋 Sort your bookmarks into lists. | ||

| - 🔎 Full text search of all the content stored. | ||

| - ✨ AI-based (aka chatgpt) automatic tagging. | ||

| - 🔖 [Chrome plugin](https://chromewebstore.google.com/detail/hoarder/kgcjekpmcjjogibpjebkhaanilehneje) for quick bookmarking. | ||

| - 📱 [iOS shortcut](https://www.icloud.com/shortcuts/78734b46624c4a3297187c85eb50d800) for bookmarking content from the phone. A minimal mobile app is in the works. | ||

| - 💾 Self-hosting first. | ||

| - [Planned] Archiving the content for offline reading. | ||

|

|

||

| **⚠️ This app is under heavy development and it's far from stable.** |


# Installation

## Docker (Recommended)

### Requirements

- Docker
- Docker Compose

### 1. Create a new directory

Create a new directory to hold the compose file and the env variables.

### 2. Download the compose file

Download the Docker Compose file provided [here](https://github.com/MohamedBassem/hoarder-app/blob/main/docker/docker-compose.yml).

```
$ wget https://raw.githubusercontent.com/MohamedBassem/hoarder-app/main/docker/docker-compose.yml
```

### 3. Populate the environment variables

You can use the env file template provided [here](https://github.com/MohamedBassem/hoarder-app/blob/main/.env.sample) and fill it in manually using the documentation [here](/configuration).

```
$ wget https://raw.githubusercontent.com/MohamedBassem/hoarder-app/main/.env.sample
$ mv .env.sample .env
```

Alternatively, here is a minimal `.env` file to use:

```
NEXTAUTH_SECRET=super_random_string
NEXTAUTH_URL=<YOUR DEPLOYED URL>
HOARDER_VERSION=release
MEILI_ADDR=http://meilisearch:7700
MEILI_MASTER_KEY=another_random_string
```

You can use `openssl rand -base64 36` to generate the random strings.
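If `openssl` isn't handy, Python's standard library can produce an equivalent value. The sketch below is illustrative and not part of Hoarder. `random_secret` base64-encodes 36 bytes from the `secrets` module, mirroring `openssl rand -base64 36`, which yields 48 characters. The hypothetical `minimal_env` helper renders the minimal `.env` from this step with fresh secrets. The deployed URL you pass in is a placeholder that you must replace with your own.

```python
import base64
import secrets


def random_secret(num_bytes: int = 36) -> str:
    """Python counterpart of `openssl rand -base64 36`: 36 random bytes -> 48 base64 chars."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


def minimal_env(deployed_url: str) -> str:
    """Render the minimal .env from the installation guide with freshly generated secrets.

    Hypothetical helper, not shipped with Hoarder.
    """
    values = {
        "NEXTAUTH_SECRET": random_secret(),
        "NEXTAUTH_URL": deployed_url,  # placeholder: your deployed URL
        "HOARDER_VERSION": "release",
        "MEILI_ADDR": "http://meilisearch:7700",
        "MEILI_MASTER_KEY": random_secret(),
    }
    return "".join(f"{key}={value}\n" for key, value in values.items())
```

For example, you could write `minimal_env("https://hoarder.example.com")` to `.env`, substituting your real URL for the example domain.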

Persistent storage and the wiring between the different services are already taken care of in the Docker Compose file.

### 4. Set up OpenAI

To enable automatic tagging, you'll need to configure OpenAI. This is optional but highly recommended.

- Follow [OpenAI's help](https://help.openai.com/en/articles/4936850-where-do-i-find-my-openai-api-key) to get an API key.
- Add `OPENAI_API_KEY=<key>` to the env file.

Learn more about the costs of using OpenAI [here](/openai).
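If you prefer to script the "add the key to the env file" step, a small helper can add or update the key in place. This is a hedged sketch with a hypothetical `set_env_var` function. It assumes the env file holds simple `KEY=value` lines (no `export` prefixes or multi-line values) and leaves commented-out lines untouched.

```python
from pathlib import Path


def set_env_var(env_path: Path, key: str, value: str) -> None:
    """Add `key=value` to an env file, or replace an existing `key=` line.

    Hypothetical helper. Assumes plain KEY=value lines; commented-out lines
    (e.g. `# KEY=...`) are not matched because their name part starts with `#`.
    """
    lines = env_path.read_text().splitlines() if env_path.exists() else []
    found = False
    for i, line in enumerate(lines):
        if line.split("=", 1)[0].strip() == key:
            lines[i] = f"{key}={value}"
            found = True
    if not found:
        lines.append(f"{key}={value}")
    env_path.write_text("\n".join(lines) + "\n")
```

Usage would be `set_env_var(Path(".env"), "OPENAI_API_KEY", "<key>")`, with `<key>` replaced by your actual API key.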

### 5. Start the service

Start the service by running:

```
$ docker compose up -d
```
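`docker compose up -d` returns once the containers are started, which may be before the web app is actually ready to serve requests. The sketch below is a minimal polling check with a hypothetical `wait_for_http` helper (not part of Hoarder). It assumes the app is reachable at your `NEXTAUTH_URL`.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll `url` until the server answers or `timeout_s` seconds have passed.

    Hypothetical helper. Any HTTP response counts as "up", including error statuses.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5):
                return True
        except urllib.error.HTTPError:
            return True  # The server replied with an error status, so it is listening.
        except OSError:
            pass  # Refused, reset or timed out (URLError is a subclass of OSError).
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_for_http("http://localhost:3000")`; the URL is only an example, so substitute your own `NEXTAUTH_URL`. A stricter variant could require a 200 status instead of accepting any response.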


# Configuration

The app is configured mainly through environment variables. All the environment variables it uses are listed in [packages/shared/config.ts](https://github.com/MohamedBassem/hoarder-app/blob/main/packages/shared/config.ts). The most important ones are:

| Name | Required | Default | Description |
| ---------------- | ------------------------------------------- | --------- | ------------------------------------------------------------------------------------------------------------------------------ |
| DATA_DIR | Yes | Not set | The path of the persistent data directory. This is where the database and the uploaded assets live. |
| NEXTAUTH_SECRET | Yes | Not set | Random string used to sign the JWT tokens. Generate one with `openssl rand -base64 36`. |
| NEXTAUTH_URL | Yes | Not set | The URL the service will be running on, e.g. `https://demo.hoarder.app`. |
| REDIS_HOST | Yes | localhost | The address of the Redis instance used by background jobs. |
| REDIS_PORT | Yes | 6379 | The port of the Redis instance used by background jobs. |
| OPENAI_API_KEY | No | Not set | The OpenAI key used for automatic tagging. If not set, automatic tagging won't be enabled. More on that [here](/openai). |
| MEILI_ADDR | No | Not set | The address of Meilisearch, e.g. `http://meilisearch:7700`. If not set, search will be disabled. |
| MEILI_MASTER_KEY | Only in production and if search is enabled | Not set | The master key configured for Meilisearch. Not needed in the development environment. Generate one with `openssl rand -base64 36`. |
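The sketch below shows how the defaults and the "if not set, the feature is disabled" rules in the table fit together. It reads a subset of these variables. It is not Hoarder's implementation, which lives in `packages/shared/config.ts`. `HoarderEnv` and `load_env` are hypothetical names, and treating an empty value as unset is an assumption.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class HoarderEnv:
    """Hypothetical view of a few settings from the configuration table."""
    data_dir: str
    redis_host: str
    redis_port: int
    meili_addr: Optional[str]
    openai_api_key: Optional[str]

    @property
    def search_enabled(self) -> bool:
        # Search is disabled when MEILI_ADDR is not set.
        return self.meili_addr is not None

    @property
    def auto_tagging_enabled(self) -> bool:
        # Automatic tagging is disabled when OPENAI_API_KEY is not set.
        return self.openai_api_key is not None


def load_env(env: Mapping[str, str] = os.environ) -> HoarderEnv:
    """Read a subset of the documented variables, applying the documented defaults."""
    data_dir = env.get("DATA_DIR")
    if not data_dir:
        raise ValueError("DATA_DIR is required")
    return HoarderEnv(
        data_dir=data_dir,
        redis_host=env.get("REDIS_HOST", "localhost"),
        redis_port=int(env.get("REDIS_PORT", "6379")),
        # Assumption: an empty string is treated the same as "not set".
        meili_addr=env.get("MEILI_ADDR") or None,
        openai_api_key=env.get("OPENAI_API_KEY") or None,
    )
```

The NextAuth variables and `MEILI_MASTER_KEY` are left out to keep the sketch short. They would follow the same pattern.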


# Quick Sharing Extensions

The whole point of Hoarder is making it easy to hoard content. That's why there are a couple of quick sharing extensions.

## Mobile Apps

<img src="/img/quick-sharing/mobile.png" alt="mobile screenshot" width="300"/>

- iOS app: TODO
- Android app: The app is built using a cross-platform framework (React Native), so technically the Android app should just work, but I haven't tested it. If there's enough demand, I'll publish it to the Google Play Store.

## Chrome Extensions

<img src="/img/quick-sharing/extension.png" alt="extension screenshot" width="300"/>

- To quickly bookmark links, you can also use the Chrome extension [here](https://chromewebstore.google.com/detail/hoarder/kgcjekpmcjjogibpjebkhaanilehneje).


# OpenAI Costs

This service uses OpenAI for automatic tagging, which means you'll incur some costs if automatic tagging is enabled. There are two types of inference that we run:

## Text Tagging

For text tagging, we use the `gpt-3.5-turbo-0125` model. This model is [extremely cheap](https://openai.com/pricing). The cost per inference varies with the size of each article's content. Roughly, though, you'll be able to generate tags for 1000+ bookmarks for less than $1.

## Image Tagging

For image uploads, we use the `gpt-4-vision-preview` model to extract tags from the image. You can learn more about the costs of using this model [here](https://platform.openai.com/docs/guides/vision/calculating-costs). To lower the costs, we use the low-resolution mode, which uses a fixed number of tokens regardless of image size. The GPT-4 model, however, is much more expensive than `gpt-3.5-turbo`. Currently, we use around 350 tokens per image inference, which costs around $0.01 per inference. That makes it roughly 10x more expensive than text tagging.
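The figures above make a back-of-the-envelope estimate simple arithmetic. The sketch assumes the rough per-inference numbers quoted on this page: at most about $0.001 per text bookmark (derived from "1000+ bookmarks for less than $1") and about $0.01 per image. Actual costs depend on content size and on current OpenAI pricing. `estimate_tagging_cost` is a hypothetical helper.

```python
# Rough per-inference figures quoted on this page (estimates, not official pricing).
TEXT_COST_USD = 1.00 / 1000  # "1000+ bookmarks for less than $1" -> ~$0.001 upper bound each
IMAGE_COST_USD = 0.01        # ~350 tokens per low-resolution image inference


def estimate_tagging_cost(num_text: int, num_images: int) -> float:
    """Approximate OpenAI spend in USD for tagging the given numbers of items."""
    return num_text * TEXT_COST_USD + num_images * IMAGE_COST_USD
```

For example, tagging 2000 text bookmarks and 50 images comes to about 2000 × $0.001 + 50 × $0.01 = $2.50. The images account for a fifth of that despite being only 50 items.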


# Setup

## Manual Setup

### First Setup

- You'll need to prepare the environment variables for the dev env.
- The easiest way is to set them up once in the root of the repo and then symlink the file into each app directory.
- Start by copying the template with `cp .env.sample .env`.
- The most important env variables to set are:
  - `DATA_DIR`: Where the database and assets will be stored. This is the only required env variable. You can use an absolute path so that all apps point to the same directory.
  - `REDIS_HOST` and `REDIS_PORT`: Default to `localhost` and `6379`. Change them if Redis is running on a different address.
  - `MEILI_ADDR`: If not set, search will be disabled. You can set it to `http://127.0.0.1:7700` if you run Meilisearch using the command below.
  - `OPENAI_API_KEY`: Set it if you want to enable automatic tag inference in the dev env.
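The symlink step from the list above can be scripted. This hedged sketch assumes each app lives in its own directory under `apps/` (as `apps/mobile` and `apps/browser-extension` do) and that a root `.env` already exists. `link_env_into_apps` is a hypothetical helper. It skips any app directory that already has a `.env`.

```python
from pathlib import Path


def link_env_into_apps(repo_root: Path) -> list[Path]:
    """Symlink <repo_root>/.env into every directory under <repo_root>/apps.

    Hypothetical helper. Existing .env files or links are left untouched.
    Returns the links that were created.
    """
    root_env = (repo_root / ".env").resolve()
    if not root_env.is_file():
        raise FileNotFoundError(f"{root_env} not found; run `cp .env.sample .env` first")
    created = []
    for app_dir in sorted((repo_root / "apps").iterdir()):
        if not app_dir.is_dir():
            continue  # Skip stray files directly under apps/.
        link = app_dir / ".env"
        if link.exists() or link.is_symlink():
            continue
        link.symlink_to(root_env)  # Absolute target, so the link works from any cwd.
        created.append(link)
    return created
```

Running `link_env_into_apps(Path("."))` from the repo root does the same as one `ln -s` per app directory.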

### Dependencies

#### Redis

Redis is used as the background job queue. The easiest way to get it running is with Docker: `docker run -p 6379:6379 redis:alpine`.
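To confirm Redis is reachable before starting the workers, you can send it a `PING`. The sketch below uses Redis's inline command syntax over a raw socket, so it needs no client library. `redis_is_up` is a hypothetical helper. It assumes no password is configured, as with the plain `redis:alpine` command above. A password-protected server would most likely reply with an error instead of `+PONG`, which this helper treats as "not up".

```python
import socket


def redis_is_up(host: str = "localhost", port: int = 6379, timeout_s: float = 2.0) -> bool:
    """Return True if a Redis server at host:port answers PING with +PONG.

    Hypothetical helper using the inline-command form of PING (no client library).
    """
    try:
        with socket.create_connection((host, port), timeout=timeout_s) as sock:
            sock.sendall(b"PING\r\n")
            # The reply is tiny ("+PONG\r\n"), so one recv() is enough in practice.
            return sock.recv(64).startswith(b"+PONG")
    except OSError:
        # Connection refused, unreachable host or timeout.
        return False
```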

#### Meilisearch

Meilisearch is the provider for full-text search. You can get it running with `docker run -p 7700:7700 getmeili/meilisearch:v1.6`.

Mount a persistent volume if you want to keep the index data across restarts. You can also trigger a re-index of all items from the admin panel in the web app.
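Meilisearch exposes a `GET /health` endpoint that answers `{"status": "available"}` when the instance is up, which makes an easy readiness check. Below is a sketch using only the standard library. It assumes `MEILI_ADDR` is `http://127.0.0.1:7700`, as suggested in the first-setup list. `meilisearch_is_available` is a hypothetical helper.

```python
import json
import urllib.request


def meilisearch_is_available(addr: str = "http://127.0.0.1:7700", timeout_s: float = 2.0) -> bool:
    """Return True if Meilisearch's GET /health reports {"status": "available"}."""
    url = addr.rstrip("/") + "/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            body = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections, timeouts and HTTP errors (URLError
        # subclasses OSError); ValueError covers a non-JSON response body.
        return False
    return isinstance(body, dict) and body.get("status") == "available"
```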

#### Chrome

The worker app automatically starts headless Chrome on startup for crawling pages, so you don't need to do anything there.

### Web App

- Run `pnpm web` in the root of the repo.
- Go to `http://localhost:3000`.

> NOTE: The web app mostly works without any dependencies. However, search won't work unless Meilisearch is running, and newly added items won't get crawled or indexed unless Redis is running.

### Workers

- Run `pnpm workers` in the root of the repo.

> NOTE: The workers package requires a running Redis instance, as Redis is the queue provider.

### iOS Mobile App

- `cd apps/mobile`
- `pnpm exec expo prebuild --no-install` to build the app.
- Start the iOS simulator.
- `pnpm exec expo run:ios`
- The app will be installed and started in the simulator.

Changing the code will hot-reload the app. However, installing new packages requires restarting the Expo server.

### Browser Extension

- `cd apps/browser-extension`
- `pnpm dev`
- This will generate a `dist` directory.
- Go to the extension settings in Chrome and enable Developer mode.
- Press `Load unpacked` and point it to the `dist` directory.
- The extension will show up in the extension list.

In dev mode, opening and closing the extension popup should reload the code.

## Docker Dev Env

If the manual setup is too much hassle, you can use a Docker-based dev environment by running `docker compose -f docker/docker-compose.dev.yml up` in the root of the repo. This setup wasn't super reliable for me, though.
