ControlNet, 8K VAE decoding

🚀 ControlNet comes to 🧨 Diffusers!

Thanks to an amazing collaboration with community member @takuma104 🙌, diffusers fully supports ControlNet! All 8 control models from the paper are available for you to use: depth, scribbles, edges, and more. Best of all, you can take advantage of all the other goodies and optimizations that Diffusers provides out of the box, making this an ultra-fast implementation of ControlNet. Take it for a spin to see for yourself.

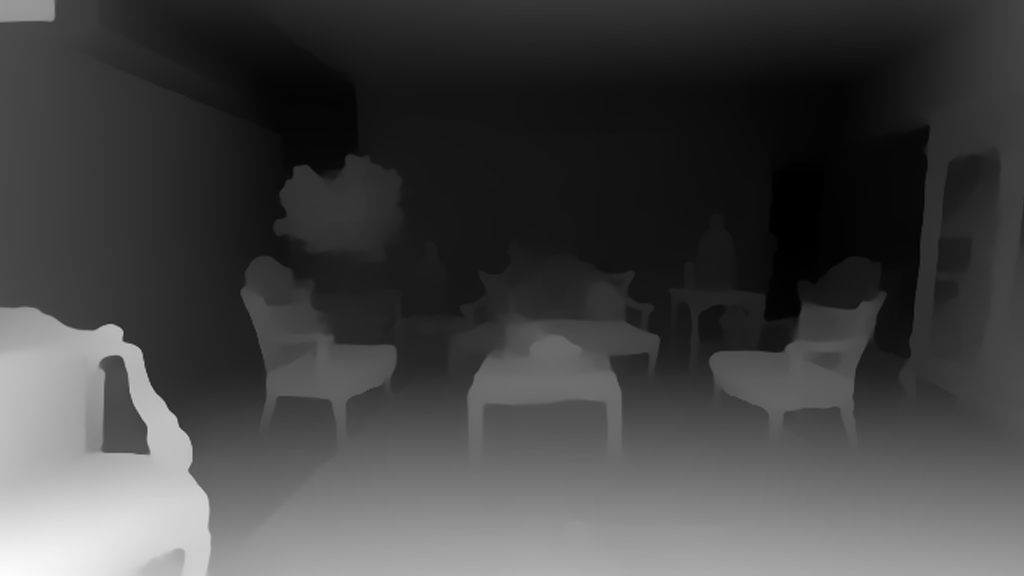

ControlNet works by training a copy of some of the layers of the original Stable Diffusion model on additional signals, such as depth maps or scribbles. After training, you can provide a depth map as a strong hint of the composition you want to achieve, and have Stable Diffusion fill in the details for you. For example:

[Figure: Before / After]

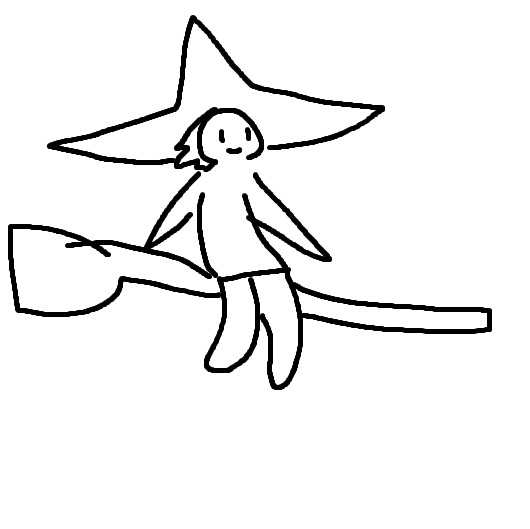
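To make the "trainable copy" idea concrete, here is a toy NumPy sketch. It is an illustration under simplifying assumptions, not the diffusers implementation: a single linear+ReLU layer stands in for a block of the Stable Diffusion UNet, the control signal is simply added to the copy's input, and the copy's output is routed through a zero-initialized projection (mirroring the "zero convolution" idea from the ControlNet paper). The names `layer` and `ControlledLayer` are hypothetical.

```python
import numpy as np


def layer(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    # Hypothetical stand-in for one pretrained Stable Diffusion layer: linear map + ReLU.
    return np.maximum(x @ w, 0.0)


class ControlledLayer:
    """Toy illustration of the ControlNet idea (not the diffusers implementation)."""

    def __init__(self, w_pretrained: np.ndarray):
        self.w_frozen = w_pretrained          # original weights: never updated
        self.w_copy = w_pretrained.copy()     # trainable copy, starts identical
        dim = w_pretrained.shape[1]
        self.w_zero = np.zeros((dim, dim))    # zero-initialized output projection

    def __call__(self, x: np.ndarray, hint: np.ndarray) -> np.ndarray:
        base = layer(x, self.w_frozen)
        # The trainable copy also sees the control signal (e.g. an encoded depth map).
        control = layer(x + hint, self.w_copy)
        # While w_zero is all zeros, the output equals the original model's output.
        return base + control @ self.w_zero


rng = np.random.default_rng(0)
w = rng.normal(size=(8, 8))
x = rng.normal(size=(2, 8))
hint = rng.normal(size=(2, 8))
block = ControlledLayer(w)
out = block(x, hint)
```

Because the projection starts at zero, training begins from exactly the original model's behavior, and the control branch only gains influence as its weights are updated.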

Currently, there are 8 published control models, all of which were trained on runwayml/stable-diffusion-v1-5 (i.e., Stable Diffusion version 1.5). This is an example that uses the scribble ControlNet model:

[Figure: Before / After]

Or you can turn a cartoon into a realistic photo with incredible coherence:

How do you use ControlNet in Diffusers? Just like this (example for the Canny edge control model):

```python
import torch
from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

# Load the ControlNet trained on Canny edge maps, in half precision.
controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)

# Plug it into a Stable Diffusion 1.5 pipeline.
pipe = StableDiffusionControlNetPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16
)
```

As usual, you can use all the features in the diffusers toolbox: super-fast schedulers, memory-efficient attention, model offloading, etc. We think 🧨 Diffusers is the best way to iterate on your ControlNet experiments!
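The loading snippet above does not yet show the conditioning input: the Canny ControlNet expects an RGB image with white edges on a black background. The sketch below builds one with plain NumPy. It is a crude, hypothetical stand-in for a real Canny detector (gradient magnitude plus a single threshold, with no smoothing, non-maximum suppression, or hysteresis), and `edge_control_image` is an illustrative helper, not part of diffusers.

```python
import numpy as np


def edge_control_image(gray: np.ndarray, threshold: float = 0.2) -> np.ndarray:
    """Turn a grayscale image (H, W) with values in [0, 1] into an (H, W, 3) uint8 edge map.

    Crude stand-in for Canny: gradient magnitude + one threshold.
    """
    # np.gradient returns derivatives along axis 0 (rows, y) and axis 1 (columns, x).
    gy, gx = np.gradient(gray.astype(np.float64))
    magnitude = np.hypot(gx, gy)
    # White (255) where the gradient is strong, black (0) elsewhere.
    edges = np.where(magnitude > threshold, 255, 0).astype(np.uint8)
    # The conditioning image is RGB, so replicate the single channel three times.
    return np.stack([edges] * 3, axis=-1)


gray = np.zeros((8, 8))
gray[:, 4:] = 1.0  # a vertical step: one sharp edge between columns 3 and 4
control = edge_control_image(gray)
```

For real inputs, OpenCV's `cv2.Canny(gray_uint8, 100, 200)` produces a proper single-channel edge map that can be stacked to three channels the same way. Either way, the array can be wrapped with `PIL.Image.fromarray(...)` and passed as the second argument of the pipeline call, e.g. `pipe(prompt, control_image).images[0]`.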

Please refer to our blog post and documentation for details.

(And, coming soon, ControlNet training – stay tuned!)

💠 VAE tiling for ultra-high resolution generation

Another community member, @kig, conceived, proposed, and fully implemented an amazing PR that allows generation of ultra-high-resolution images without running out of memory 🤯. The PR uses a tiling approach during the VAE decoding phase, generating one piece of the image at a time and then stitching the pieces together. Tiles are blended carefully to avoid visible seams between them, and the final result is amazing. This is the additional code you need to enjoy high-resolution generations:

```python
pipe.vae.enable_tiling()
```

That's it!
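The idea behind tiled decoding can be illustrated with a small NumPy sketch: process overlapping tiles one at a time, then blend the overlaps with linearly ramped weights so that no hard seams appear. This is a hypothetical, simplified version (weighted averaging of a 2-D array passed through an arbitrary per-tile function `fn`); the PR itself works on VAE latent tiles, and its tile size, overlap, and blending details may differ. For scale, the Stable Diffusion VAE decoder upsamples latents by 8×, so a 4096×2048 image is decoded from a 512×256 latent.

```python
import numpy as np


def _ramp(n: int, overlap: int) -> np.ndarray:
    # 1-D weights that rise linearly over `overlap` pixels at each end and are 1.0 in the middle.
    i = np.arange(n)
    return np.minimum(np.minimum(i + 1, n - i) / (overlap + 1), 1.0)


def tiled_apply(x: np.ndarray, fn, tile: int = 64, overlap: int = 16) -> np.ndarray:
    """Apply `fn` to overlapping tiles of a 2-D array and blend the results (sketch only)."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    h, w = x.shape
    stride = tile - overlap
    acc = np.zeros((h, w))   # weighted sum of per-tile outputs
    wsum = np.zeros((h, w))  # sum of blending weights per pixel
    for y0 in range(0, h, stride):
        for x0 in range(0, w, stride):
            patch = x[y0:y0 + tile, x0:x0 + tile]  # edge tiles may be smaller
            ph, pw = patch.shape
            mask = np.outer(_ramp(ph, overlap), _ramp(pw, overlap))
            # Only this one tile is processed at a time, which bounds peak memory.
            acc[y0:y0 + ph, x0:x0 + pw] += fn(patch) * mask
            wsum[y0:y0 + ph, x0:x0 + pw] += mask
    # Normalizing by the weight sum turns overlaps into smooth cross-fades.
    return acc / wsum


rng = np.random.default_rng(0)
image = rng.random((200, 300))
doubled = tiled_apply(image, lambda t: t * 2.0, tile=64, overlap=16)
```

Because the result is normalized by the accumulated weights, the blend is exact for per-pixel operations like the doubling above; for a real decoder, the ramps are what hide the small differences between neighboring tiles.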

For a complete example, refer to the PR or the code snippet we reproduce here for your convenience:

```python
import torch
from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", revision="fp16", torch_dtype=torch.float16)
pipe = pipe.to("cuda")
pipe.enable_xformers_memory_efficient_attention()
pipe.vae.enable_tiling()

prompt = "a beautiful landscape photo"
image = pipe(prompt, width=4096, height=2048, num_inference_steps=10).images[0]
image.save("4k_landscape.jpg")
```

All commits

- [Docs] Add a note on SDEdit by @sayakpaul in #2433

- small bugfix at StableDiffusionDepth2ImgPipeline call to check_inputs and batch size calculation by @mikegarts in #2423

- add demo by @yiyixuxu in #2436

- fix: code snippet of instruct pix2pix from the docs. by @sayakpaul in #2446

- Update train_text_to_image_lora.py by @haofanwang in #2464

- mps test fixes by @pcuenca in #2470

- Fix test train_unconditional by @pcuenca in #2481

- add MultiDiffusion to controlling generation by @omerbt in #2490

- image_noiser -> image_normalizer comment by @williamberman in #2496

- [Safetensors] Make sure metadata is saved by @patrickvonplaten in #2506

- Add 4090 benchmark (PyTorch 2.0) by @pcuenca in #2503

- [Docs] Improve safetensors by @patrickvonplaten in #2508

- Disable ONNX tests by @patrickvonplaten in #2509

- attend and excite batch test causing timeouts by @williamberman in #2498

- move pipeline based test skips out of pipeline mixin by @williamberman in #2486

- pix2pix tests no write to fs by @williamberman in #2497

- [Docs] Include more information in the "controlling generation" doc by @sayakpaul in #2434

- Use "hub" directory for cache instead of "diffusers" by @pcuenca in #2005

- Sequential cpu offload: require accelerate 0.14.0 by @pcuenca in #2517

- is_safetensors_compatible refactor by @williamberman in #2499

- [Copyright] 2023 by @patrickvonplaten in #2524

- Bring Flax attention naming in sync with PyTorch by @pcuenca in #2511

- [Tests] Fix slow tests by @patrickvonplaten in #2526

- PipelineTesterMixin parameter configuration refactor by @williamberman in #2502

- Add a ControlNet model & pipeline by @takuma104 in #2407

- 8k Stable Diffusion with tiled VAE by @kig in #1441

- Textual inv make save log both steps by @isamu-isozaki in #2178

- Fix convert SD to diffusers error by @fkunn1326 in #1979

- Small fixes for controlnet by @patrickvonplaten in #2542

- Fix ONNX checkpoint loading by @anton-l in #2544

- [Model offload] Add nice warning by @patrickvonplaten in #2543

Significant community contributions

The following contributors have made significant changes to the library over the last release:

- @takuma104
  - Add a ControlNet model & pipeline (#2407)

New Contributors

- @mikegarts made their first contribution in #2423

- @fkunn1326 made their first contribution in #2529

Full Changelog: v0.13.0...v0.14.0