This project was bootstrapped with Create React App.

In the project directory, you can run:

The `start` script runs the app in development mode.

Open http://localhost:3000 to view it in the browser.

The page will reload if you make edits.

You will also see any lint errors in the console.

The `build` script builds the app for production to the `build` folder.

It correctly bundles React in production mode and optimizes the build for the best performance.

When we develop a web app, we can think of browsers as Swiss Army knives: they include a bunch of utilities (APIs). One of them gives access to media devices through the `mediaDevices` API of the `navigator` object. This lets developers build features around the user's media devices, such as voice notes, like WhatsApp Web does.

Today we are going to create an app that records the user's voice and saves the recording in an `<audio>` tag so it can be played later. The app looks like this:

Apart from the `mediaDevices` API, we need:

- The `MediaRecorder` constructor, which creates a recorder object from the media device requested through the `mediaDevices.getUserMedia()` method.
- The `Blob` constructor, which creates a blob object from the data acquired from the `MediaRecorder` instance.
- The `URL.createObjectURL(blob)` method, which creates a URL pointing to the data (voice) held by the `Blob` instance. This URL is used like `<audio src=URL/>`.
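The last two pieces of that list can be sketched in isolation. This is a minimal illustration, not the app's actual code: the helper name `chunksToAudioUrl` and the default MIME type are assumptions made for the example.

```javascript
// Hypothetical helper: joins recorded chunks into a single Blob and returns
// an object URL for it. The default MIME type is an assumption; a real
// recorder may produce a different container or codec.
function chunksToAudioUrl(chunks, mimeType = "audio/ogg; codecs=opus") {
  // Blob accepts an array of parts (other Blobs, strings, ArrayBuffers).
  const blob = new Blob(chunks, { type: mimeType });
  // The returned string is a "blob:" URL that stays valid until it is revoked.
  return URL.createObjectURL(blob);
}
```

The returned string can be assigned to the `src` of an `<audio>` element. When a recording is no longer needed, `URL.revokeObjectURL(url)` releases the memory held by the blob.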

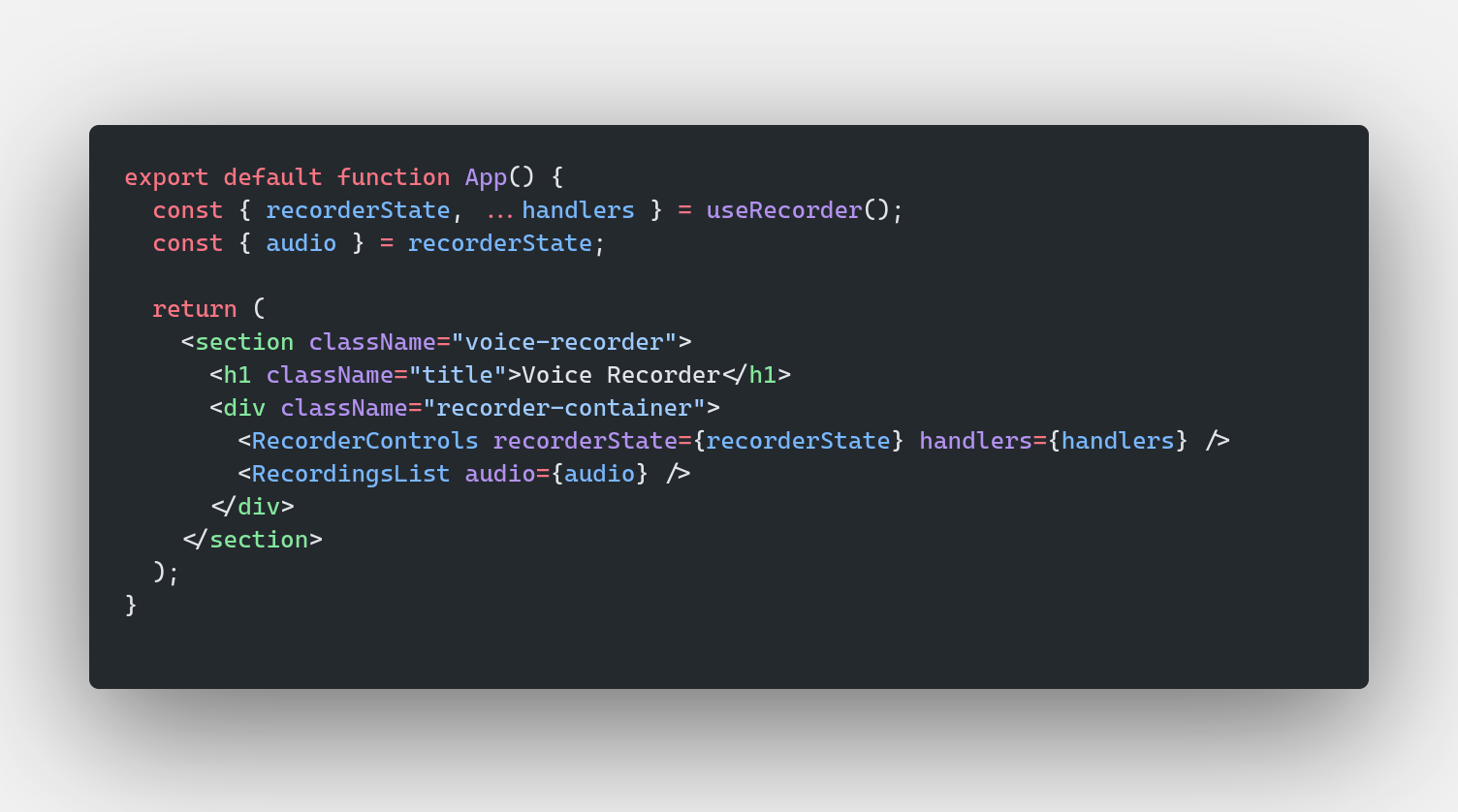

If you don't understand, don't worry, I'll explain it below. First, look at the `<App/>` component.

`<App/>` consumes a custom hook that provides the `recorderState` and several handlers. If you don't know how to use a custom hook, I have shared a post about this.

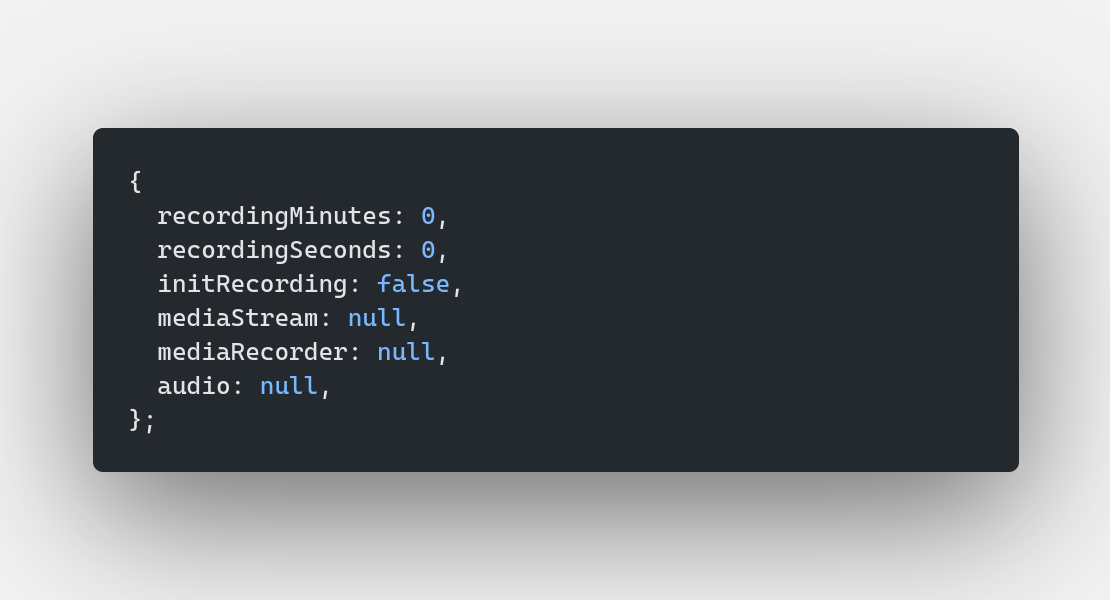

The `recorderState` is like this:

- `recordingMinutes` and `recordingSeconds` are used to show the recording time, and `initRecording` initializes the recorder.
- The other parts of the state: `mediaStream` will be the media stream provided by `mediaDevices.getUserMedia()`, `mediaRecorder` will be the instance of `MediaRecorder`, and `audio` will be the URL mentioned previously.
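To make the shape of that state concrete, here is a rough sketch of an initial state object together with a small timer helper. The field names come from the description above; the initial values and the `tick` helper are assumptions for illustration.

```javascript
// Assumed initial values: nothing is recording and no devices are held yet.
const initialState = {
  recordingMinutes: 0,
  recordingSeconds: 0,
  initRecording: false,
  mediaStream: null,
  mediaRecorder: null,
  audio: null,
};

// Hypothetical helper: returns the state after one more second of recording,
// rolling the seconds over into minutes at 60.
function tick(state) {
  if (state.recordingSeconds === 59) {
    return {
      ...state,
      recordingMinutes: state.recordingMinutes + 1,
      recordingSeconds: 0,
    };
  }
  return { ...state, recordingSeconds: state.recordingSeconds + 1 };
}
```

A helper like this could be called once per second from a `setInterval` inside an effect while `initRecording` is true.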

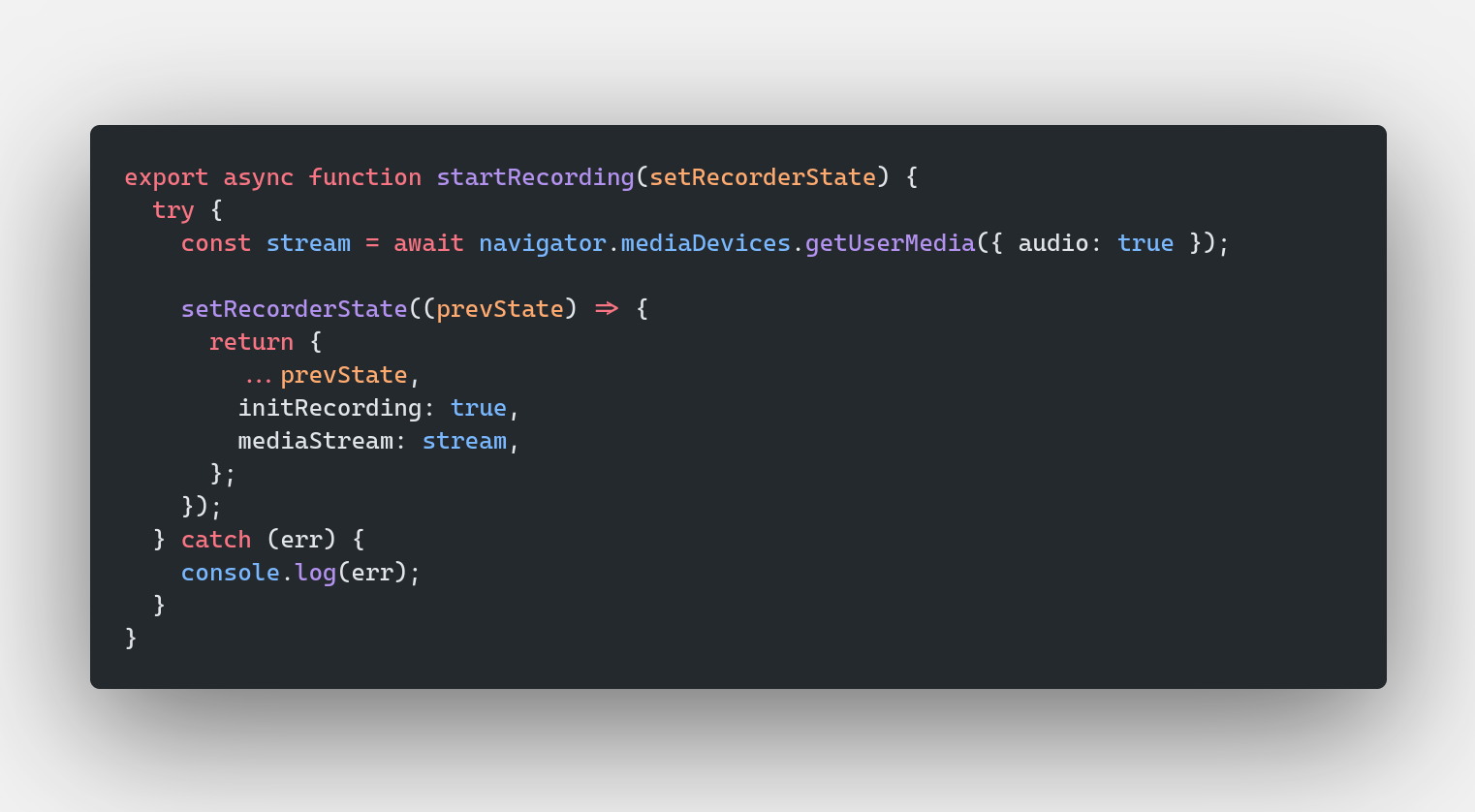

`mediaStream` is set by the `startRecording` handler.
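A handler along these lines might look like the sketch below. It assumes `setRecorderState` is a React state setter that accepts an updater function, and it takes `mediaDevices` as a parameter (defaulting to `navigator.mediaDevices`) so the function can be exercised outside a browser. Both the signature and the error handling are assumptions.

```javascript
// Sketch of a startRecording handler (signature is hypothetical).
async function startRecording(
  setRecorderState,
  mediaDevices = navigator.mediaDevices
) {
  try {
    // Ask for microphone access only; the browser prompts the user.
    const stream = await mediaDevices.getUserMedia({ audio: true });
    setRecorderState((prevState) => ({
      ...prevState,
      initRecording: true,
      mediaStream: stream,
    }));
  } catch (err) {
    // getUserMedia rejects if permission is denied or no device is found.
    console.error("Could not access the microphone:", err);
  }
}
```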

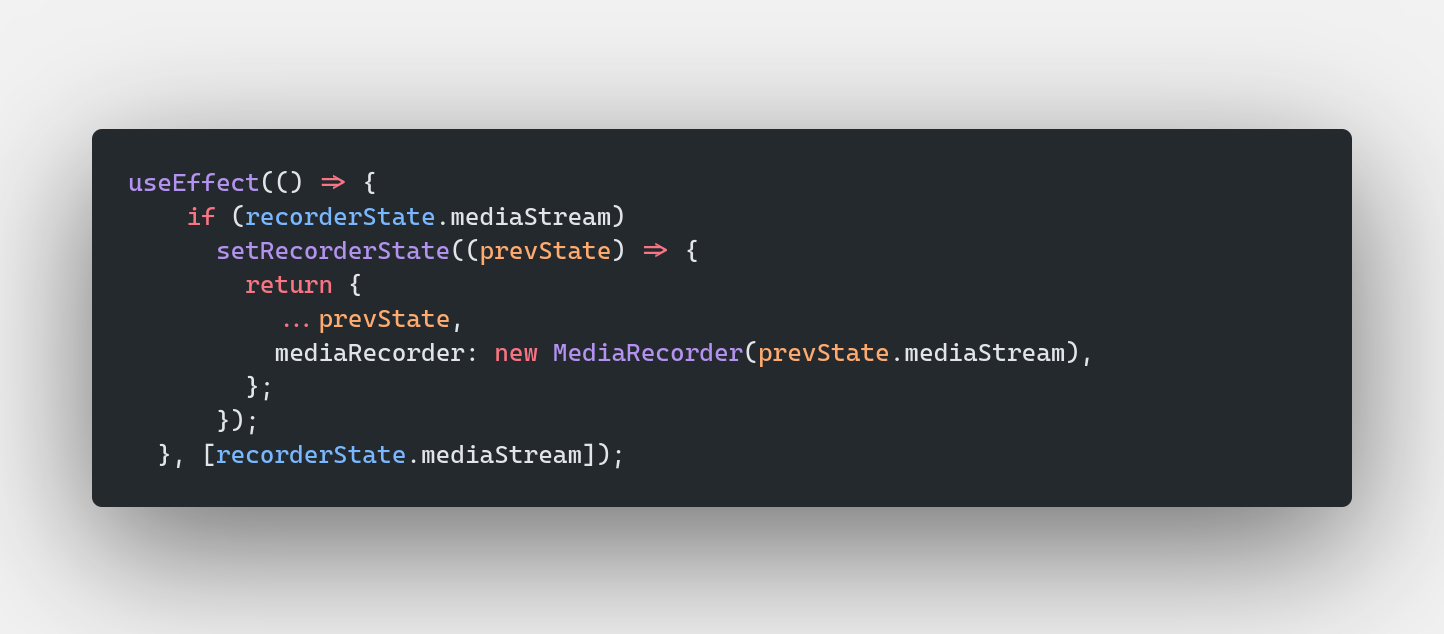

After `mediaStream` is set, the `MediaRecorder` instance is created.

To acquire the voice and create the audio, `mediaRecorder` needs two event listeners: `ondataavailable` and `onstop`. The first one receives chunks of the voice and pushes them to the `chunks` array. The second one creates the blob from `chunks`, and then `audio` is created. The `stop` event is fired by the `saveRecording` handler or by the effect's cleanup function, which is called when the recording is cancelled.
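The two listeners described above can be sketched as follows. The function name `attachRecorderListeners`, the `onAudioReady` callback, and the MIME type are assumptions; the body of `saveRecording` is also a guess at what such a handler might do, relying on the standard `state` property of `MediaRecorder`.

```javascript
// Wires up the two listeners on a MediaRecorder-like object.
// onAudioReady (hypothetical) receives the object URL of the finished audio.
function attachRecorderListeners(recorder, onAudioReady) {
  let chunks = [];

  // Fired with a piece of recorded data; collect it for later.
  recorder.ondataavailable = (event) => {
    chunks.push(event.data);
  };

  // Fired when recording stops; build the blob and hand over its URL.
  recorder.onstop = () => {
    // The MIME type is an assumption for this sketch.
    const blob = new Blob(chunks, { type: "audio/ogg; codecs=opus" });
    chunks = []; // reset so the next recording starts empty
    onAudioReady(URL.createObjectURL(blob));
  };
}

// Possible shape of saveRecording: stop only if the recorder is still active.
function saveRecording(recorder) {
  if (recorder.state !== "inactive") recorder.stop();
}
```

In the browser, the recorder would come from `new MediaRecorder(mediaStream)` and recording would begin with `recorder.start()`.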

Now take a look at the `<RecorderControls/>` and `<RecordingsList/>` components.

`<RecorderControls/>` receives the `handlers` prop, which is used by its JSX.

`<RecordingsList/>` receives `audio` and consumes a custom hook that pushes the previously created audio to a list of recordings.
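The "push" step inside that hook could be expressed as a pure function that a state setter calls whenever `audio` changes. The name `addRecording` and the `{ key, audio }` item shape are assumptions, not the hook's actual code.

```javascript
// Hypothetical helper: returns a new list with the audio appended.
// Each item carries a key so it can be rendered and removed individually.
function addRecording(recordings, audio, key) {
  // Ignore empty values, e.g. before the first recording exists.
  if (!audio) return recordings;
  return [...recordings, { key, audio }];
}
```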

The `deleteAudio` handler removes a recording from the list.
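One way to write such a handler is to filter the list by key. This sketch assumes each recording is an object with a unique `key` field, which the text does not confirm.

```javascript
// Hypothetical deleteAudio: returns the recordings without the given key.
function deleteAudio(recordings, key) {
  return recordings.filter((recording) => recording.key !== key);
}
```

Inside a hook this would typically be wrapped as `setRecordings((prev) => deleteAudio(prev, key))`, where `setRecordings` is an assumed state setter.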

And that's it! With React, we can use `useEffect` to access the user's devices and create related features.