Perception Test: A Diagnostic Benchmark for Multimodal Models is a benchmark that aims to comprehensively evaluate the perception and reasoning skills of multimodal models. The Perception Test dataset introduces real-world videos designed to show perceptually interesting situations, and defines multiple tasks that require understanding of memory, abstract patterns, physics, and semantics across the visual, audio, and text modalities.

Google Form quiz to try the Perception Test yourself

Playlist of more example videos in the Perception Test

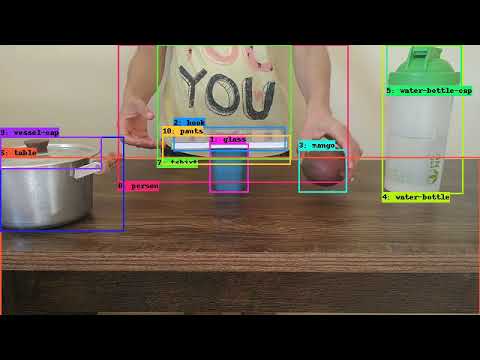

The dataset consists of 11.6k videos (with audio) with an average length of 23 s, filmed by around 100 participants worldwide. The videos are annotated with six types of labels: object tracks, point tracks, temporal action segments, temporal sound segments, multiple-choice video question-answers, and grounded video question-answers. The image above shows an example of object-tracking annotations.

The dataset probes pre-trained models for their transfer capabilities, in either a zero-shot or a fine-tuning regime.
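To make the zero-shot regime concrete for the multiple-choice questions, here is a minimal sketch: a frozen model assigns a score to each answer option, and the highest-scoring option is taken as the prediction. The record fields (`video_id`, `question`, `options`, `answer_id`), the `score_option` callable, and the toy scorer are all hypothetical illustrations, not the dataset's actual schema or official evaluation code.

```python
from typing import Any, Callable, Dict, Sequence

# Hypothetical question record: field names are illustrative only.
Question = Dict[str, Any]


def zero_shot_mc_accuracy(
    questions: Sequence[Question],
    score_option: Callable[[str, str, str], float],
) -> float:
    """Top-1 accuracy when the model picks its highest-scoring option.

    `score_option(video_id, question, option)` is a hypothetical stand-in for
    whatever compatibility score a pre-trained model produces without any
    task-specific training (for example, a log-likelihood).
    """
    if not questions:
        raise ValueError("no questions to evaluate")
    correct = 0
    for q in questions:
        scores = [score_option(q["video_id"], q["question"], opt) for opt in q["options"]]
        # max() returns the first index on ties.
        predicted = max(range(len(scores)), key=scores.__getitem__)
        correct += int(predicted == q["answer_id"])
    return correct / len(questions)


# Toy data with hypothetical video ids.
questions = [
    {"video_id": "video_0001", "question": "What is the person holding?",
     "options": ["a cup", "a book", "a phone"], "answer_id": 0},
    {"video_id": "video_0002", "question": "How many objects are on the table?",
     "options": ["one", "two", "three"], "answer_id": 2},
]


def toy_scorer(video_id: str, question: str, option: str) -> float:
    # Placeholder that simply prefers shorter options; a real model score goes here.
    return -float(len(option))


print(zero_shot_mc_accuracy(questions, toy_scorer))  # 0.5
```

In the fine-tuning regime the same accuracy function would apply unchanged; only the scorer would come from a model trained on the training split.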

This repo contains a Colab that demonstrates how to access, parse and visualise examples of the training split of the dataset. The complete training and validation dataset splits will be available soon. The test split will be available through an evaluation server.
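As a rough illustration of the parsing step, the sketch below counts how many annotations of each of the six label types a video has, assuming the annotations arrive as a JSON object keyed by video id. Every key name here (`object_tracks`, `mc_question`, and so on) and the example video id are hypothetical; the Colab is the authoritative reference for the actual file layout.

```python
import json
from collections import Counter
from typing import Dict

# The six label types, under hypothetical key names; the released files
# may use different keys and nesting.
ANNOTATION_TYPES = (
    "object_tracks",
    "point_tracks",
    "action_segments",
    "sound_segments",
    "mc_question",
    "grounded_question",
)


def summarise_annotations(raw_json: str) -> Dict[str, Counter]:
    """Count annotations of each type per video in a JSON string keyed by video id."""
    data = json.loads(raw_json)
    summary: Dict[str, Counter] = {}
    for video_id, record in data.items():
        counts: Counter = Counter()
        for key in ANNOTATION_TYPES:
            # Missing label types are recorded as zero rather than skipped.
            counts[key] = len(record.get(key, []))
        summary[video_id] = counts
    return summary


example = json.dumps({
    "video_0001": {
        "object_tracks": [{"id": 0, "label": "cup"}],
        "mc_question": [{"question": "What is the person holding?",
                         "options": ["a cup", "a book", "a phone"], "answer_id": 0}],
    }
})
summary = summarise_annotations(example)
print(summary["video_0001"]["object_tracks"])  # 1
```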

We hope this will inspire and contribute to progress towards more general perception models. If you have any comments, suggestions, or concerns about the dataset, please contact us at perception-test at google dot com.

@techreport{perceptiontestv1,
title = {{Perception Test: A Diagnostic Benchmark for Multimodal Models}},
author = {Viorica Pătrăucean and Lucas Smaira and Ankush Gupta and Adrià Recasens Continente and Larisa Markeeva and Dylan Banarse and Mateusz Malinowski and Yi Yang and Carl Doersch and Tatiana Matejovicova and Yury Sulsky and Antoine Miech and Skanda Koppula and Alex Frechette and Hanna Klimczak and Raphael Koster and Junlin Zhang and Stephanie Winkler and Yusuf Aytar and Simon Osindero and Dima Damen and Andrew Zisserman and João Carreira},
year = {2022},
institution = {DeepMind},
month = {10},
Date-Added = {2022-10-12}
}

Copyright 2022 DeepMind Technologies Limited

All software is licensed under the Apache License, Version 2.0 (Apache 2.0); you may not use this file except in compliance with the Apache 2.0 license. You may obtain a copy of the Apache 2.0 license at: https://www.apache.org/licenses/LICENSE-2.0

All other materials are licensed under the Creative Commons Attribution 4.0 International License (CC-BY). You may obtain a copy of the CC-BY license at: https://creativecommons.org/licenses/by/4.0/legalcode

Unless required by applicable law or agreed to in writing, all software and materials distributed here under the Apache 2.0 or CC-BY licenses are distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the licenses for the specific language governing permissions and limitations under those licenses.

This is not an official Google product.