SLAM (Simultaneous Localization And Mapping) is the process of creating a map of an environment while tracking the agent's location within it.

SLAM is a framework for temporal modeling of states that is commonly used in autonomous navigation. It is heavily based on principles of probability, making inferences on posterior and prior probability distributions of states and measurements and the relationship between the two. [1]

We estimate our current position with a motion model by using previous positions, the current control input, and noise.

Then, in the measurement step, we correct our prediction by using data from our sensors.

Proprioceptive sensors measure internal values - like acceleration, motor speeds, battery voltage, etc. For this project, I'll be simulating an inertial measurement unit by taking discrete measurement of artificially noisy (possibly systematic noise) linear and angular acceleration data.

Exteroceptive sensors collect data about the robot's environment, like distances to objects, light, sound, etc. For this project, I'll be simulating a collection of distance sensors by casting rays out from a "sensor" and recording the distances they travel.

Kalman Filters are a way for us to estimate the (unknown) actual state of an system (our agent's position and orientation) through the collection of noisy data.

We can give more weight to data that we know is more accurate by increasing the Kalman Gain associated with that measurement.

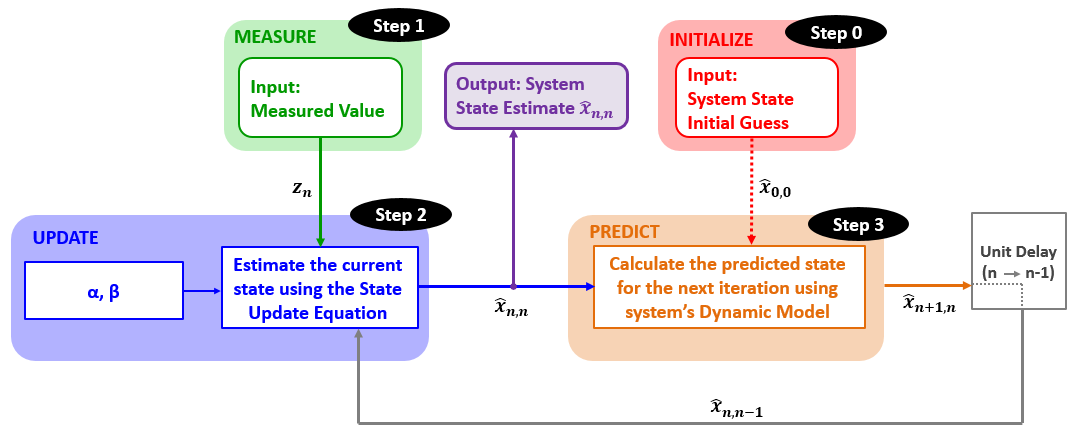

We take the state of a system and use a set of equations (ex. laws of physics) to extrapolate a predicted future state.

The Five Kalman Filter Equations:

State Update Equation:

State Extrapolation Equations:

Kalman Gain Equation:

Covariance Update Equation

Covariance Extrapolation Equation

Next, we take a measurement, tweak our estimation of the state, and then once again predict the future state.