forked from erigontech/erigon


Erigon3 upstream e4eb9fc #483

Merged


- Updated gopsutil version as it has improvements in getting processes and memory info.

The `murmur3.New*` methods return an interface, and hashing through it needs at least 3 method calls. `16ns` -> `11ns`

I also benchmarked `github.com/segmentio/murmur3` vs `github.com/twmb/murmur3` on a 60-byte hashed string: the 2nd is faster but adds asm deps. So I am sticking with the pure-Go dep (asm deps are not friendly for cross-compilation). Maybe I will try it later, after our new release pipeline is ready.

Bench results:
- intel: `20ns` -> `14ns`
- amd: `31ns` -> `26ns`
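To illustrate the difference between hashing through an interface and hashing with one direct call, here is a minimal sketch. It uses the standard library's `hash/crc32` purely as a stand-in so the example stays self-contained (an assumption; the commit itself is about murmur3): `crc32.NewIEEE()` returns a `hash.Hash32` that needs a constructor call, `Write` and `Sum32`, while `crc32.ChecksumIEEE` does the same work in a single call.

```go
package main

import (
	"fmt"
	"hash/crc32"
)

// viaInterface hashes data through the hash.Hash32 interface:
// a constructor call plus Write and Sum32, all dispatched dynamically.
func viaInterface(data []byte) uint32 {
	h := crc32.NewIEEE()
	h.Write(data) // Write on a hash.Hash never returns an error
	return h.Sum32()
}

// direct hashes data with one plain function call and no interface value.
func direct(data []byte) uint32 {
	return crc32.ChecksumIEEE(data)
}

func main() {
	key := []byte("an example key of roughly the size used in the benchmark")
	// Both paths compute the same checksum; only the call overhead differs.
	fmt.Println(viaInterface(key) == direct(key))
}
```

Both functions return the same value, so swapping one for the other is a pure performance change. The actual gain depends on the hash library and the input size.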

Before this PR we called `heimdall.Synchronize` as part of `heimdall.CheckpointsFromBlock` and `heimdall.MilestonesFromBlock`. The previous implementation of `Synchronize` waited on all scrapers to be synchronised. This is inefficient because `heimdall.CheckpointsFromBlock` needs only the `checkpoints` scraper to be synchronised. For the initial sync we only need to wait for the checkpoints to be downloaded, and then we can start downloading blocks from devp2p. While we are doing that we can let the spans and milestones be scraped in the background.

Note this is based on the fact that fetching checkpoints has been optimised by doing bulk fetching and finishes in seconds, while fetching Spans has not yet been optimised and for bor-mainnet can take a long time.

Changes in the PR:
- Splits `Synchronize` into 3 more fine-grained calls, `SynchronizeCheckpoints`, `SynchronizeMilestones` and `SynchronizeSpans`, which are invoked by the Sync algorithm at the right time
- Optimises `SynchronizeSpans` to check if it already has the corresponding span for the given block number before blocking
- Moves the synchronisation point for Spans and State Sync Events into `Sync.commitExecution`, just before we call `ExecutionEngine.UpdateForkChoice`, to make it clearer what data needs to be synced before calling Execution
- Changes `EventNotifier` and `Synchronize` funcs to return an err if ctx is cancelled or other errors have happened
- Input consistency between the `heimdallSynchronizer` and `bridgeSynchronizer`: use `blockNum` instead of `*type.Header`
- Interface tidy ups

- Make Cell unexported
- Remove ProcessTree/Keys/Update
- Reviewed and refreshed all unit/bench/fuzz tests related to commitment

erigontech#11326

Change test scheduling and timeouts after Ottersync introduction. Now we can execute tests more frequently due to the significant reduction in test time.

Scheduled to run every night:
- tip-tracking
- snap-download
- sync-from-scratch for mainnet, minimal node

Scheduled to run on Sunday:
- sync-from-scratch for testnets, archive node

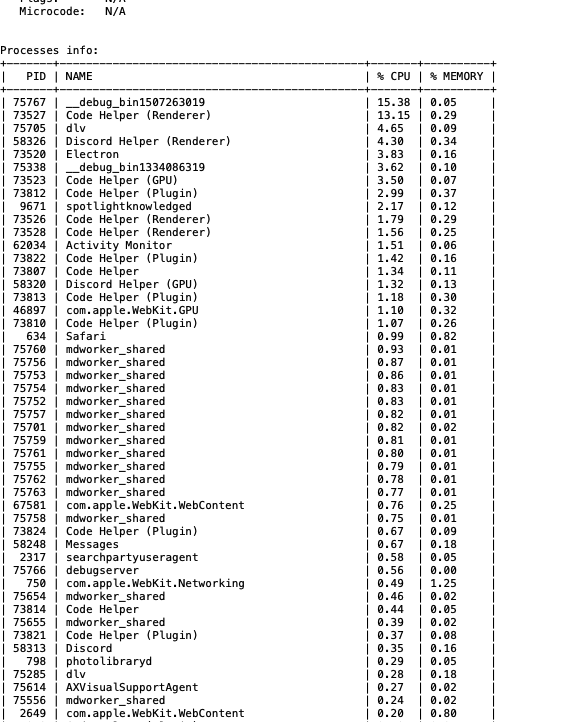

- Collecting CPU and Memory usage info about all processes running on the machine
- Running the loop 5 times with a 2-second delay to calculate the average
- Sort by CPU usage
- Write result to report file
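The sampling loop in this commit can be sketched as below. This is only an illustration under stated assumptions: the `usage` type, the `sampler` function type and `averageUsage` are hypothetical names, and a fake sampler replaces the real process collector so the example runs anywhere. Each process is averaged over the rounds in which it was seen.

```go
package main

import (
	"fmt"
	"sort"
	"time"
)

// usage is a hypothetical per-process reading, in percent.
type usage struct {
	Name string
	CPU  float64
	Mem  float64
}

// sampler returns one reading per running process. It stands in for the
// real collector, which is not shown here.
type sampler func() []usage

// averageUsage calls sample `rounds` times, sleeping `delay` between rounds,
// and returns per-process averages sorted by CPU usage, highest first.
func averageUsage(sample sampler, rounds int, delay time.Duration) []usage {
	sums := map[string]*usage{}
	counts := map[string]int{}
	for i := 0; i < rounds; i++ {
		for _, u := range sample() {
			s, ok := sums[u.Name]
			if !ok {
				s = &usage{Name: u.Name}
				sums[u.Name] = s
			}
			s.CPU += u.CPU
			s.Mem += u.Mem
			counts[u.Name]++
		}
		if i < rounds-1 {
			time.Sleep(delay)
		}
	}

	out := make([]usage, 0, len(sums))
	for name, s := range sums {
		n := float64(counts[name])
		out = append(out, usage{Name: name, CPU: s.CPU / n, Mem: s.Mem / n})
	}
	sort.Slice(out, func(i, j int) bool {
		if out[i].CPU != out[j].CPU {
			return out[i].CPU > out[j].CPU
		}
		return out[i].Name < out[j].Name // stable order for equal CPU
	})
	return out
}

func main() {
	round := 0
	fake := func() []usage {
		round++
		return []usage{
			{Name: "erigon", CPU: 40 + float64(round), Mem: 30},
			{Name: "sshd", CPU: 1, Mem: 0.5},
		}
	}
	// The commit describes 5 rounds with a 2-second delay; a short delay
	// keeps this demo fast.
	for _, u := range averageUsage(fake, 5, 10*time.Millisecond) {
		fmt.Printf("%-8s cpu=%.1f%% mem=%.1f%%\n", u.Name, u.CPU, u.Mem)
	}
}
```

The sorted slice is what would then be rendered into the report file.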

closes erigontech#11173

Adds tests for the Heimdall Service which cover:
- Milestone scraping
- Span scraping
- Checkpoint scraping
- `Producers` API: compares the results with results from the `bor_getSnapshotProposerSequence` RPC API

- Added totals for CPU and Memory usage to processes table
- Added CPU usage by cores

Forgot to silence the logging in the Heimdall service tests in a previous PR. The log level can be tweaked when debugging is necessary.

Refactored table utils to add an option to generate a table and return it as a string, which will be used for saving data to a file.

…ot and added clearIndexing command (erigontech#11539)

Main checks:
* No gap in steps/blocks
* Check if all indexing present
* Check if all idx, history, domain present

closes erigontech#11177

- adds unwind logic to the new polygon sync stage which uses astrid
- seems like we've never done running for bor heimdall so removing empty funcs

Refactored printing cpu info:
- move CPU details to table
- move CPU usage next to details table
- refactor code

…ch#11549)

relates to: erigontech#10734 erigontech#11387

- restart Erigon with `SAVE_HEAP_PROFILE = true` env variable
- wait until we reach 45% or more alloc in stage_headers when "noProgressCounter >= 5" or "Rejected header marked as bad"

and also move `design` into `docs` in order to reduce the number of top-level directories

Before, we had a transaction-wide cache (map). Now I am changing it to EVM-wide. The EVM is a thread-unsafe object, so it is OK to use a thread-unsafe LRU. ExecV3 already uses 1 EVM per worker, which means the cache will be shared between blocks (not on chain-tip for now).

bench:
- on `mainnet`: it shows 12% improvement on a large eth_getLogs call (re-exec of a large historical range of blocks near block 6M), on hot state.

About chain-tip:
- I don't see much impact (even if I make the cache global), because the current mainnet/bor-mainnet bottleneck is "flush" of changes to db. But `integration loop_exec --unwind=2` shows 5% improvement.
- in a future PR we can share 1 LRU for many new blocks; currently a new one is created every stage loop iteration.
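For readers unfamiliar with the idea, here is a minimal sketch of a thread-unsafe LRU owned by a single worker, so that entries survive from one block to the next. It is not Erigon's implementation: the `lru`, `entry` and `worker` types are hypothetical, the keys and values are placeholders, and what the real cache stores is not shown here.

```go
package main

import (
	"container/list"
	"fmt"
)

type entry struct {
	key   string
	value int
}

// lru is a minimal LRU cache. It is NOT safe for concurrent use, which is
// acceptable when exactly one goroutine (one worker) owns it.
type lru struct {
	limit int
	order *list.List               // front = most recently used
	items map[string]*list.Element // key -> element in order
}

func newLRU(limit int) *lru {
	return &lru{limit: limit, order: list.New(), items: make(map[string]*list.Element)}
}

// Get returns the cached value and marks the key as recently used.
func (c *lru) Get(key string) (int, bool) {
	el, ok := c.items[key]
	if !ok {
		return 0, false
	}
	c.order.MoveToFront(el)
	return el.Value.(*entry).value, true
}

// Add inserts or updates a key and evicts the least recently used entry
// once the limit is exceeded.
func (c *lru) Add(key string, value int) {
	if el, ok := c.items[key]; ok {
		el.Value.(*entry).value = value
		c.order.MoveToFront(el)
		return
	}
	c.items[key] = c.order.PushFront(&entry{key: key, value: value})
	if c.order.Len() > c.limit {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
}

// worker is a hypothetical execution worker that owns one cache for its
// whole lifetime instead of creating a new map per transaction.
type worker struct{ cache *lru }

func main() {
	w := &worker{cache: newLRU(2)}
	w.cache.Add("a", 1) // filled while executing one block
	w.cache.Add("b", 2)
	w.cache.Get("a")    // a later block reuses the entry and refreshes it
	w.cache.Add("c", 3) // over the limit: evicts "b", the least recently used

	_, okB := w.cache.Get("b")
	vA, okA := w.cache.Get("a")
	fmt.Println(okB, vA, okA) // prints: false 1 true
}
```

Because the cache has a fixed limit, it can live as long as the worker without growing unbounded, which a plain map could not do safely.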

- Added the flags that were applied to the run command to the report

Notable things:
- Removed all testing on Total Difficulty or Difficulty, as that is a PoW concept
- Integration tests about difficulty removed, as they only test ETH PoW
- Removed difficulty checks in a test
- I had to tweak some hashes: I have removed difficulty computation (now hardcoded to 1), so some hashes were different in some tests
- It is running on the tip of the chain too, YAY

CL may be able to handle a non-error message better. Eventually I plan on improving this corner case, as FCU has an 8s timeout and I don't think EL waits that long before declaring busy. This causes some (or all) CL(s) to miss slots, as they don't know what to do now

part of erigontech#11149 All events are supported now

Co-authored-by: alex.sharov <AskAlexSharov@gmail.com>

Bor mainnet <img width="532" alt="Screenshot 2024-08-14 at 12 33 55" src="https://github.com/user-attachments/assets/317498a2-94b3-4973-b136-2546aea30351">

Tidying up bor contracts code:
- Rename `GenesisContractsClient` to `StateReceiver` for clarity
- Remove unused `LastStateId` func in `StateReceiver`
- Move `StateReceiver` into its own file `state_receiver.go`
- Create a gomock for it in `state_receiver_mock.go` and use in test
- Remove unused function input for `ChainSpanner`

kurtosis assertoor tests in ci

decision points:
- since the job takes about 45 minutes to run, I decided not to run it on every PR, but rather we run it every 12 hours at 2AM/PM UTC.
- all tests are present in https://github.com/ethpandaops/assertoor-test/tree/master/assertoor-tests. This ci runs [these](https://github.com/erigontech/erigon/pull/11464/files#diff-b45e49409c33f39133315225d30c02d8cfacfd9a53d1157bca61284683a4c498R22) tests.
- test is for ubuntu only; not for mac or windows.

Many tests are failing, especially the validator-related ones. Tracked separately: erigontech#11590

I'd like to add `MarshallKey`, `MarshallValue` (and corresponding `Unmarshall`) receiver functions on `EventRecordWithTime` which hide away the `abi` detail, so callers don't have to deal with the ABI as a function input everywhere in the code base (PR erigontech#11620).

However, a circular dependency blocks me from doing that: `EventRecordWithTime` is in package `polygon/heimdall`, which imports the `polygon/bor` package for `bor.GenesisContractStateReceiverABI`, while `polygon/bor` imports `polygon/heimdall` for `EventRecordWithTime` due to the [bor event fallback hack](https://github.com/erigontech/erigon/blob/main/polygon/bor/bor.go#L1516-L1556) we have in the Bor consensus engine (it is pending removal once we fix the underlying issue that led to it, which is a WIP).

For now, to unblock myself, I am moving the bor ABIs into a sub-package, `polygon/bor/borabi`.

…bi (erigontech#11620)

Relates to and inspired by erigontech#11225

Main motivation for this change is to simplify the Bridge Store interface by removing the need to pass an `ABI` in the function signatures. But it ended up tidying up other bits of the codebase too. We should do the same for the entities in `polygon/heimdall EntityStore` as it is much cleaner.

This reverts PR erigontech#11556, which caused Hive tests (e.g. "Bad Hash on NewPayload") to crash.

addresses follow ups from erigontech#11568 (comment)

Things added:
* Keep genesis file stored in datadir
* Fixed race conditions in Sentinel
* Fixed scheduling of hard fork for same epoch-hardforks
* Small refactorings

---------

Co-authored-by: Kewei <kewei.train@gmail.com>

Fixed issue with setup diagnostics client on erigon start

- `br.FrozenBlocks()` to ensure that all file types exist

And write MB/s is also high at prune time: NVMe handles it, but on slightly slower disks it will affect chain-tip perf.

BorMainnet: <img width="1054" alt="Screenshot 2024-08-16 at 08 26 22" src="https://github.com/user-attachments/assets/87e8d227-ac33-4800-a53a-594d872b074f">

Gnosis: <img width="1026" alt="Screenshot 2024-08-16 at 08 36 23" src="https://github.com/user-attachments/assets/1290e57c-3325-4e89-95cd-83baf8fa93c7">

…#11489)

- on mainnet: it shows +10% improvement on a large eth_getLogs call (re-exec of a large historical range of blocks near block 6M), on hot state.
- on chain tip: no noticeable impact, but for now it lives only inside the current Tx. Maybe in a future PR I can share such an LRU between blocks somehow.
- on loop_exe I also noticed no difference.

- Added functionality to grab the heap profile by calling the diagnostics endpoint
- Added support to pass heap profile file to diagnostics UI through WebSocket

Save erigon logs into test zip attachment

…rom chaindata (erigontech#11634)

We prune `CanonicalHash` and `HeaderNumber` in `chaindata`. This leads to a 99% reduction in these 2 tables and reduces their total size to 40 pages in TOTAL (from >500k pages).

Benchmarks:

```
Total time taken to query blocks from 1000000 to 3000000: 1720.1410 seconds (OLD)
Total time taken to query blocks from 1000000 to 3000000: 1747.4729 seconds (New)
Amortized delta cost: 0.1ms/req
```

Is the RPC working for those ranges? Yes.

## How does it work?

- Query canonical hash from snapshots by: `viewHeaderByNumber` -> `header.Hash()` on a DB miss
- Query header number from snapshots by: `viewHeaderByHash` -> `header.Num64` on a DB miss
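The lookup order described in this commit (DB first, snapshots on a miss) can be sketched as follows. Everything here is a simplified assumption for illustration: the `store`, `header` and `hash` types are hypothetical, plain maps stand in for the DB table and the snapshot files, and SHA-256 over a formatted string replaces real header hashing.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

type hash [32]byte

// header is a hypothetical, heavily simplified block header.
type header struct {
	Number uint64
	Extra  string
}

// Hash derives an identifier from the header contents. SHA-256 is used
// only for illustration; it is not how real headers are hashed.
func (h header) Hash() hash {
	return sha256.Sum256([]byte(fmt.Sprintf("%d:%s", h.Number, h.Extra)))
}

// store models a pruned DB table plus frozen snapshot files.
type store struct {
	dbCanonical map[uint64]hash   // recent blocks only; older rows are pruned
	snapshots   map[uint64]header // stand-in for a header lookup by number
}

// canonicalHash tries the DB first and, on a miss, derives the hash from
// the header stored in snapshots.
func (s *store) canonicalHash(num uint64) (hash, bool) {
	if h, ok := s.dbCanonical[num]; ok {
		return h, true
	}
	if hd, ok := s.snapshots[num]; ok {
		return hd.Hash(), true
	}
	return hash{}, false
}

func main() {
	old := header{Number: 1_000_000, Extra: "frozen"}
	recent := header{Number: 20_000_000, Extra: "tip"}
	s := &store{
		dbCanonical: map[uint64]hash{recent.Number: recent.Hash()},
		snapshots:   map[uint64]header{old.Number: old},
	}

	h, ok := s.canonicalHash(old.Number) // DB miss, served from snapshots
	fmt.Println(ok, h == old.Hash())

	_, ok = s.canonicalHash(5) // present in neither place
	fmt.Println(ok)
}
```

The fallback costs one extra header read and a hash computation for pruned ranges, which is the small per-request overhead the benchmark above measures.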

10% better throughput and latency for both small and big eth_getLogs. Increased the limit to 4096, because LRU objects can be re-used without worrying about "alloc speed".

This adds integrity checking for borevents in the following places:

1. Stage Bor Heimdall - check that the event occurs within the expected time window
2. Dump Events to snapshots - check that the event ids are continuous in and between blocks (will add time window tests)
3. Index event snapshots - check that the event ids are continuous in and between blocks

It also adds an external integrity checker which runs on snapshots, checking for continuity and timeliness of events. This can be called using the following command:

`erigon snapshots integrity --datadir=~/snapshots/bor-mainnet-patch-1 --from=45500000`

(--to specifies an end block)

This also now fixes the long-running issue with bor events causing unexpected fails in executions. The problem that event validation uncovered was as follows:

The **kv.BorEventNums** mapping table currently keeps the mapping first event id->block. The code which produces bor-event snapshots uses it to determine which events to put into the snapshot. However, if no additional blocks have events by the time the block is stored in the snapshot, the snapshot creation code does not know which events to include, so it drops them.

This causes problems in two places:
* RPC queries & re-execution from snapshots can't find these dropped events
* Depending on purge timing these events may erroneously get inserted into future blocks

The code change in this PR fixes that bug.

It has been tested by running:

```
erigon --datadir=~/chains/e3/amoy --chain=amoy --bor.heimdall=https://heimdall-api-amoy.polygon.technology --db.writemap=false --txpool.disable --no-downloader --bor.milestone=false
```

with validation in place, and then confirmed by running the following:

```
erigon snapshots rm-all-state-snapshots --datadir=~/chains/e3/amoy
rm -r ~/chains/e3/amoy/chaindata/
erigon --datadir=~/chains/e3/amoy --chain=amoy --bor.heimdall=https://heimdall-api-amoy.polygon.technology --db.writemap=false --no-downloader --bor.milestone=false
```

to recreate the chain from snapshots.

It has also been checked with:

```
erigon snapshots integrity --datadir=~/chains/e3/amoy --check=NoBorEventGaps --failFast=true
```

---------

Co-authored-by: alex.sharov <AskAlexSharov@gmail.com>
Co-authored-by: Mark Holt <mark@disributed.vision>
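The continuity part of these checks ("event ids are continuous in and between blocks") can be sketched as below. This is an illustrative assumption rather than the PR's checker: `blockEvents` and `checkNoEventGaps` are hypothetical names, the input is an in-memory slice instead of snapshot files, and the time-window check is left out.

```go
package main

import "fmt"

// blockEvents is a hypothetical record of the event IDs assigned to one
// block, in order. Blocks without events have an empty slice.
type blockEvents struct {
	BlockNum uint64
	EventIDs []uint64
}

// checkNoEventGaps verifies that event IDs increase by exactly 1, both
// within a block and from one block with events to the next.
func checkNoEventGaps(blocks []blockEvents) error {
	var prev uint64
	havePrev := false
	for _, b := range blocks {
		for _, id := range b.EventIDs {
			if havePrev && id != prev+1 {
				return fmt.Errorf("block %d: event id %d follows %d, expected %d",
					b.BlockNum, id, prev, prev+1)
			}
			prev, havePrev = id, true
		}
	}
	return nil
}

func main() {
	ok := []blockEvents{
		{BlockNum: 16, EventIDs: []uint64{1, 2}},
		{BlockNum: 32}, // no events in this block
		{BlockNum: 48, EventIDs: []uint64{3}},
	}
	fmt.Println(checkNoEventGaps(ok))

	// Event 3 was dropped, as in the bug described above.
	dropped := []blockEvents{
		{BlockNum: 16, EventIDs: []uint64{1, 2}},
		{BlockNum: 48, EventIDs: []uint64{4}},
	}
	fmt.Println(checkNoEventGaps(dropped))
}
```

A check of this shape fails at the first block whose events do not follow on from the previous ones, which is how a dropped event would surface.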

…_e4eb9fc

# Conflicts:
# cmd/state/exec3/state_recon.go
# core/chain_makers.go
# core/state/rw_v3.go
# core/types/block.go
# erigon-lib/go.mod
# erigon-lib/go.sum
# erigon-lib/kv/tables.go
# eth/stagedsync/exec3.go
# eth/stagedsync/stage_snapshots.go
# go.mod
# go.sum
# turbo/app/snapshots_cmd.go
# turbo/jsonrpc/tracing.go
# turbo/stages/headerdownload/header_algo_test.go

setunapo

approved these changes

Aug 20, 2024


Upstream latest erigon3