A neural network playground built from scratch using Python.

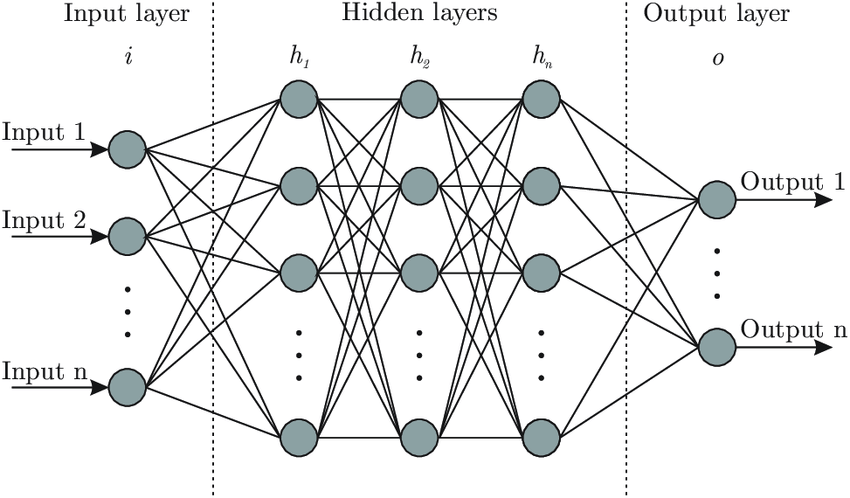

A neural network is a set of algorithms designed to recognise patterns, used to build computer programs that learn from data. It is based very loosely on how we think the human brain works. First, a collection of software “neurons” is created and connected together, allowing them to send messages to each other. Next, the network is asked to solve a problem, which it attempts to do over and over, each time strengthening the connections that lead to success and weakening those that lead to failure.

Today, neural networks are used to solve many business problems, such as sales forecasting and customer research. They have become a popular and useful tool for tasks such as text classification and categorization, regression, pattern recognition, named entity recognition (NER), part-of-speech tagging, language generation and multi-document summarization.

A model parameter is a configuration variable that is internal to the model and whose value can be estimated from data. Parameters are key to machine learning algorithms. They are the part of the model that is learned from historical training data and are usually not set manually by the practitioner. Hyperparameters, by contrast, are adjustable settings that are chosen before training and must be tuned in order to obtain a model with good performance.
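
The distinction can be made concrete with a toy example. The sketch below is not taken from the Skyplay-NN code; it fits a straight line with plain gradient descent, and the data, the learning rate and the epoch count are all made-up values chosen for illustration. The learning rate and number of epochs are hyperparameters fixed by hand, while `w` and `b` are parameters learned from the data.

```python
# Toy example (assumed data and settings): fit y = w * x + b by gradient descent.

# Hyperparameters: chosen by hand before training starts.
learning_rate = 0.05
num_epochs = 500

# Made-up training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

# Parameters: start at arbitrary values and are learned from the data.
w, b = 0.0, 0.0

for _ in range(num_epochs):
    n = len(xs)
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Step against the gradient, scaled by the learning rate.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"learned parameters: w={w:.3f}, b={b:.3f}")
```

Changing `learning_rate` or `num_epochs` changes how quickly (or whether) `w` and `b` approach the values that generated the data, which is the kind of effect hyperparameter tuning is meant to explore.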

So, how can we improve the accuracy of the model? Is there any way to speed up the training process? These are critical questions to ask, whether you’re in a hackathon setting or working on a client project. And these aspects become even more prominent when you’ve built a deep neural network. Techniques such as hyperparameter tuning, regularization and batch normalization help in fine-tuning your model.

Weights - When an input enters the neuron, it is multiplied by a weight. For example, if a neuron has two inputs, then each input has its own associated weight. We initialize the weights randomly, and these weights are updated during the model training process.

Biases - A bias is added to the result of multiplying the inputs by their weights. It shifts the range of the weighted input. This is the final linear component of the input transformation.
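
As a minimal sketch of the linear step described above (written in plain Python for illustration, not copied from this repository), the helpers below initialize weights randomly and compute the weighted sum of the inputs plus the bias. The function names, the uniform range of -1 to 1 and the sample values are assumptions made for this example.

```python
import random


def init_weights(num_inputs, seed=None):
    """Return one random weight per input (uniform in [-1, 1], an assumed range)."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(num_inputs)]


def neuron_output(inputs, weights, bias):
    """Return the linear part of a neuron: sum(w_i * x_i) + bias."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return weighted_sum + bias


# Two inputs, so two weights (values chosen by hand for illustration).
z = neuron_output(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.1)
print(z)

# Randomly initialized weights, as would be done before training.
random_weights = init_weights(num_inputs=2, seed=0)
print(neuron_output([1.0, 2.0], random_weights, bias=0.0))
```

In a full network, this linear result would then be passed through an activation function, and training would adjust both the weights and the bias.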

For more information on this, we recommend going through Part 2 of the deeplearning.ai course (Deep Learning Specialization) taught by the great Andrew Ng. We saw the basics of neural networks and how to implement them in Part 1, and we recommend going through that if you need a quick refresher.

- No. of Epochs

- Batch Size

- No. of Layers

- Learning Rate

- Hidden Units

- Activation Function

- Train/Test/Dev Split
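
To show how a few of the hyperparameters listed above interact, here is a small sketch in plain Python. It is not the playground's actual training loop; the helper names, the 80/10/10 split and the values in `hyperparams` are assumptions chosen for illustration. It splits a dataset into train, dev and test sets, then counts how many mini-batch updates a given number of epochs and batch size would produce.

```python
import random


def split_dataset(samples, train_frac=0.8, dev_frac=0.1, seed=0):
    """Shuffle the samples and split them into train, dev and test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_dev = int(len(shuffled) * dev_frac)
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]  # whatever remains
    return train, dev, test


def iterate_minibatches(samples, batch_size):
    """Yield consecutive batches; the last one may be smaller."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


# Hypothetical settings; every value here is an assumption.
hyperparams = {"epochs": 3, "batch_size": 32}

train, dev, test = split_dataset(range(100))

updates = 0
for epoch in range(hyperparams["epochs"]):
    for batch in iterate_minibatches(train, hyperparams["batch_size"]):
        updates += 1  # a real loop would run a forward and backward pass here

print(len(train), len(dev), len(test), updates)
```

A smaller batch size means more updates per epoch, and more epochs mean more passes over the same training set, so the two together determine how many times the weights are adjusted.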

- Manual Search

- Grid Search

- Random Search

- Bayesian Optimization
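
Two of the search strategies listed above, grid search and random search, can be sketched in a few lines of plain Python. This is an illustrative example only: `validation_loss` is a hypothetical stand-in for "train a model and measure its loss on the dev set", and the candidate values and ranges are assumptions. Manual search and Bayesian optimization are not shown.

```python
import itertools
import random


def validation_loss(learning_rate, hidden_units):
    """Hypothetical stand-in for training a model and returning its dev loss."""
    return (learning_rate - 0.01) ** 2 + ((hidden_units - 16) / 100) ** 2


def grid_search(learning_rates, hidden_unit_options):
    """Try every combination and keep the one with the lowest loss."""
    candidates = itertools.product(learning_rates, hidden_unit_options)
    return min(candidates, key=lambda c: validation_loss(*c))


def random_search(num_trials, seed=0):
    """Sample random combinations and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best = None
    for _ in range(num_trials):
        # Sample the learning rate on a log scale between 1e-4 and 1e-1.
        candidate = (10 ** rng.uniform(-4, -1), rng.randint(4, 64))
        if best is None or validation_loss(*candidate) < validation_loss(*best):
            best = candidate
    return best


best_grid = grid_search([0.001, 0.01, 0.1], [8, 16, 32])
best_random = random_search(num_trials=20)
print("grid search:", best_grid)
print("random search:", best_random)
```

Grid search evaluates every combination, so its cost grows quickly with the number of hyperparameters, while random search evaluates a fixed number of trials regardless of how many hyperparameters are being tuned.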

We wrote this neural network code completely from scratch. We were inspired by our course instructor Andrew Ng and felt the urge to create a platform where everybody could visualise the effects of tuning different parameters, build their own neural network, upload different datasets and so on.

1. Clone the repository

git clone https://github.com/sherwyn11/Skyplay-NN.git

2. Start a virtual environment, e.g. conda (recommended)

conda activate <my_virtual_env>

3. Install the requirements

cd Skyplay-NN

pip install -r requirements.txt

4. Start the server

python wsgi.py

- Python3

- Flask

- Numpy

- Pandas

- Matplotlib

- Seaborn

- D3.js

- JavaScript

- jQuery

- HTML5

- CSS3

- Bootstrap

- Material Design Lite

- Classification/Regression on single class structured data

- Added activation functions

- Added layers

- Added optimizers

- Added loss functions

- Added regularization

- Created UI for model visualization and testing

- Multi-class Classification/Regression

- Unstructured Data Classification/Regression

- Fix small bugs

For more details about our model architecture and components, click here.

Made with 💙 by Darlene Nazareth and Sherwyn D'souza

© 2020 Copyright