This is the public repository containing the code and plots for the paper.

The experiment is carried out in a disturbance-free, enclosed flight arena, where an eight-camera OptiTrack Flex 13 motion capture system provides the positions of the drones. For experimental verification, five Crazyflie nano-drones are used, with the Crazyswarm software from USC-ACTLab (https://crazyswarm.readthedocs.io/en/latest/index.html) providing the low-level controller. The ground station issues velocity commands, and distributed ROS (Noetic) nodes are implemented, one for each drone. The implementation also relies on several features that are not discussed explicitly, such as inter-drone collision avoidance and the low-level controller. Each drone runs the algorithm independently, and only neighbouring drones share local information (positions and parameter estimates) with each other.
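The README does not spell out the control law that turns shared neighbour positions into velocity commands. A common choice for Voronoi-based coverage is a Lloyd-type law, in which each drone moves towards the sensory-function-weighted centroid of its own Voronoi cell. The sketch below assumes that law and is not the paper's adaptive controller: the unit-square grid, the gains `k` and `k_z`, the speed limit `v_max` and the function name `local_velocity_command` are all hypothetical. Only the 0.6 m hover height comes from the experiments described here.

```python
import numpy as np

# Hypothetical arena discretisation: a unit square sampled on a regular grid.
# The real arena bounds and grid resolution are not stated in this README.
GRID_N = 50
xs = (np.arange(GRID_N) + 0.5) / GRID_N
GX, GY = np.meshgrid(xs, xs)
POINTS = np.column_stack([GX.ravel(), GY.ravel()])  # (GRID_N**2, 2) sample points
CELL_AREA = 1.0 / GRID_N**2


def local_velocity_command(p_self, p_neighbours, phi_hat, k=1.0, v_max=0.3,
                           z=0.6, z_ref=0.6, k_z=1.0):
    """Hypothetical Lloyd-type coverage step using only neighbour positions.

    p_self:       (2,) planar position of this drone
    p_neighbours: (m, 2) planar positions received from neighbours
    phi_hat:      (GRID_N**2,) this drone's estimate of the sensory function on POINTS
    Returns a 3D velocity command (vx, vy, vz).
    """
    sites = np.vstack([p_self, p_neighbours])
    # A grid point lies in this drone's cell if this drone (row 0) is its nearest site.
    d2 = ((POINTS[:, None, :] - sites[None, :, :]) ** 2).sum(axis=2)
    in_cell = d2.argmin(axis=1) == 0
    w = phi_hat[in_cell] * CELL_AREA
    mass = w.sum()
    if mass <= 0.0:
        v_xy = np.zeros(2)
    else:
        # Weighted centroid of the cell; move proportionally towards it.
        centroid = (w[:, None] * POINTS[in_cell]).sum(axis=0) / mass
        v_xy = k * (centroid - p_self)
    # Saturate the planar speed so the command stays within v_max.
    speed = np.linalg.norm(v_xy)
    if speed > v_max:
        v_xy = v_xy * (v_max / speed)
    # Proportional term that holds the constant hover height.
    vz = k_z * (z_ref - z)
    return np.array([v_xy[0], v_xy[1], vz])
```

Because the computation uses only the drone's own position and the positions broadcast by its neighbours, each ROS node could evaluate it without a global view. Note that the cell is exact only when the neighbour set contains all of the drone's Voronoi neighbours.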

The experiments are carried out as a hovering example, with the height $z$ held constant at 0.6 m. The region

with

$$
a = \begin{bmatrix} \underline{\beta} & \underline{\beta} & 100 & \underline{\beta} & \cdots & \underline{\beta} & 100 & \underline{\beta} & \underline{\beta}\end{bmatrix}
$$

and with
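The README does not state how the vector $a$ enters the sensory function. In the adaptive coverage-control literature, the sensory function is commonly written as a weighted sum of Gaussian basis functions, $\phi(q) = \mathcal{K}(q)^\top a$, with every weight bounded below by $\underline{\beta}$; under that reading, the entries equal to 100 would produce the peaks seen in the heatmaps. The sketch below assumes this form. The values of `BETA_LOW` and `SIGMA`, the 3×3 layout of basis centres, the length of $a$ (9) and the indices of the peak entries are all hypothetical, chosen only to reproduce the pattern of $a$ shown above.

```python
import numpy as np

BETA_LOW = 0.1  # hypothetical value for the lower bound \underline{\beta}
SIGMA = 0.1     # hypothetical width shared by every Gaussian basis function


def gaussian_basis(q, centres, sigma=SIGMA):
    """Evaluate K(q): one (unnormalised) Gaussian bump per basis centre.

    q: (P, 2) query points, centres: (n, 2). Returns a (P, n) array.
    """
    d2 = ((q[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2.0 * sigma**2))


def sensory_function(q, a, centres):
    """phi(q) = K(q)^T a, evaluated at each row of q."""
    return gaussian_basis(q, centres) @ a


# Hypothetical 3x3 grid of basis centres on the unit square (row-major order).
c = np.linspace(1 / 6, 5 / 6, 3)
centres = np.array([[x, y] for y in c for x in c])

# Mirror the pattern of a: the lower bound everywhere, 100 at two entries.
a = np.full(len(centres), BETA_LOW)
a[[2, 6]] = 100.0  # hypothetical peak positions: [b, b, 100, b, b, b, 100, b, b]

phi_at_centres = sensory_function(centres, a, centres)
```

With nine entries, the pattern $[\underline{\beta}, \underline{\beta}, 100, \underline{\beta}, \underline{\beta}, \underline{\beta}, 100, \underline{\beta}, \underline{\beta}]$ is one instance of the vector above. Keeping every weight at or above a positive lower bound keeps $\phi$ strictly positive, so the weighted centroids used by coverage controllers remain well defined.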

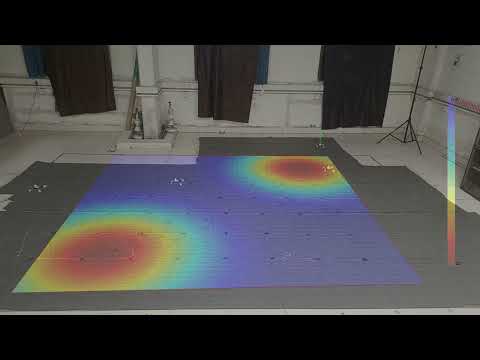

The Crazyflie trajectories, together with the final Voronoi partition, are shown below (with the true sensory function overlaid as a heatmap).

The cost function is shown below (with the true sensory function overlaid as a heatmap).
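The README does not define the cost function that is plotted. A standard choice in Voronoi coverage control is the locational cost $H(p) = \sum_i \int_{V_i} \tfrac{1}{2}\lVert q - p_i \rVert^2 \phi(q)\,dq$, which decreases as the drones spread out over regions where $\phi$ is large. The sketch below assumes that form and approximates the integral with a Riemann sum over a grid; the function name `coverage_cost` and the unit-square grid in the usage lines are hypothetical.

```python
import numpy as np


def coverage_cost(positions, phi, points, cell_area):
    """Hypothetical locational cost H = sum_i int_{V_i} 0.5*||q - p_i||^2 phi(q) dq.

    positions: (n, 2) drone positions
    phi:       (P,) sensory function values at the grid points
    points:    (P, 2) grid points; cell_area is the area each point represents.
    """
    d2 = ((points[:, None, :] - positions[None, :, :]) ** 2).sum(axis=2)
    # Each grid point belongs to the Voronoi cell of its nearest drone,
    # so the integrand uses the minimum squared distance over all drones.
    nearest_d2 = d2.min(axis=1)
    return float(0.5 * (nearest_d2 * phi).sum() * cell_area)


# Usage on a hypothetical unit-square grid with a uniform sensory function.
n = 100
xs = (np.arange(n) + 0.5) / n
gx, gy = np.meshgrid(xs, xs)
pts = np.column_stack([gx.ravel(), gy.ravel()])
phi_uniform = np.ones(len(pts))
h_one = coverage_cost(np.array([[0.5, 0.5]]), phi_uniform, pts, 1.0 / n**2)
h_two = coverage_cost(np.array([[0.25, 0.5], [0.75, 0.5]]), phi_uniform, pts, 1.0 / n**2)
# h_one ≈ 0.0833 (1/12), h_two ≈ 0.0521 (5/96)
```

Adding a second, well-placed drone lowers the cost, which is the behaviour the plotted cost curve is expected to show as the drones converge to their final partition.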

The initial and final Voronoi partitions are shown below, along with the Gaussian peaks (with the true sensory function overlaid as a heatmap).

The real-time experimental video (five Crazyflies) is shown below, with the true sensory function overlaid as a heatmap.