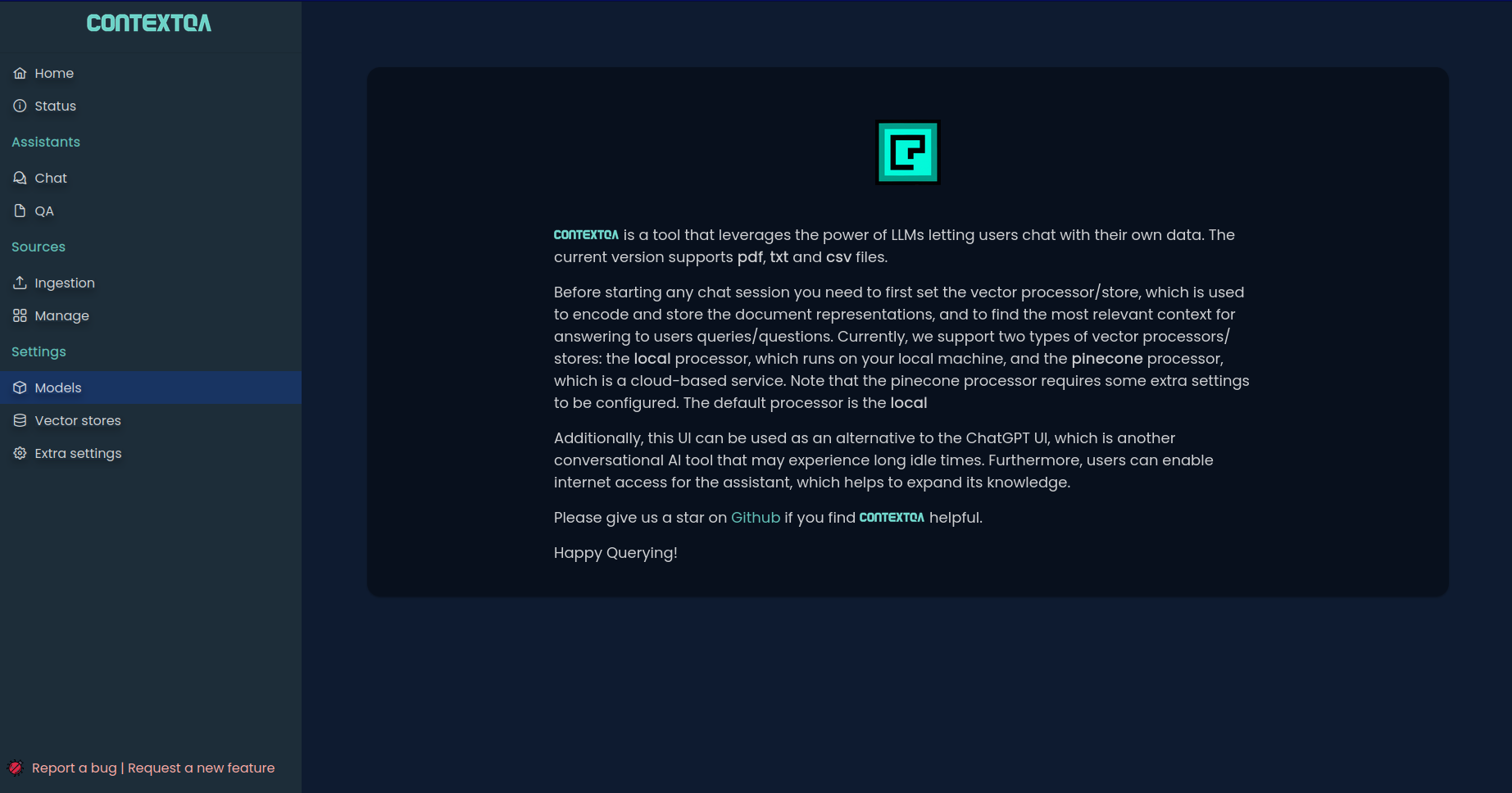

Chat with your data by leveraging the power of LLMs and vector databases

ContextQA is a modern utility that provides a ready-to-use LLM-powered application. It is built on top of giants such as FastAPI, LangChain, and Hugging Face.

Key features include:

- Regular chat supporting knowledge expansion via internet access

- Conversational QA with relevant sources

- Streaming responses

- Ingestion of data sources used in QA sessions

- Data sources management

- LLM settings: Configure parameters such as provider, model, temperature, etc. Currently, the supported providers are OpenAI and Google.

- Vector DB settings: Adjust parameters such as engine, chunk size, chunk overlap, etc. Currently, the supported engines are ChromaDB and Pinecone.

- Other settings: Choose embedded or external LLM memory (Redis), media directory, database credentials, etc.
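To illustrate what the chunk size and chunk overlap settings control, here is a minimal sketch of a character-based splitter. It is not ContextQA's own splitting logic, which may differ; the function name `split_into_chunks` and its parameters are hypothetical and exist only for this example.

```python
def split_into_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into character chunks of at most chunk_size,
    where consecutive chunks share chunk_overlap characters.

    Illustrative only: a hypothetical helper, not part of ContextQA.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in the range [0, chunk_size)")

    # Each new chunk starts this many characters after the previous one.
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    for chunk in split_into_chunks("abcdefghij", chunk_size=4, chunk_overlap=2):
        print(chunk)
```

A larger overlap keeps more shared context between neighboring chunks at the cost of storing more chunks, while a larger chunk size produces fewer but coarser pieces for retrieval.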

    pip install contextqa

On installation, ContextQA provides a CLI tool:

    contextqa init

Check out the available parameters by running the following command:

    contextqa init --help

Example run:

    $ contextqa init
    2024-08-28 01:00:39,586 - INFO - Using SQLite
    2024-08-28 01:00:47,850 - INFO - Use pytorch device_name: cpu
    2024-08-28 01:00:47,850 - INFO - Load pretrained SentenceTransformer: sentence-transformers/all-mpnet-base-v2
    INFO: Started server process [20658]
    INFO: Waiting for application startup.
    2024-08-28 01:00:47,850 - INFO - Running initial migrations...
    2024-08-28 01:00:47,853 - INFO - Context impl SQLiteImpl.
    2024-08-28 01:00:47,855 - INFO - Will assume non-transactional DDL.
    2024-08-28 01:00:47,860 - INFO - Running upgrade -> 0bb7d192c063, Initial migration
    2024-08-28 01:00:47,862 - INFO - Running upgrade 0bb7d192c063 -> b7d862d599fe, Support for store types and related indexes
    2024-08-28 01:00:47,864 - INFO - Running upgrade b7d862d599fe -> 3058bf204a05, unique index name
    INFO: Application startup complete.
    INFO: Uvicorn running on http://localhost:8080 (Press CTRL+C to quit)

Open your browser at http://localhost:8080. You will see the initialization stepper, which guides you through the initial configuration.

If the initial configuration has already been set, the main ContextQA view is shown instead.
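If you want to confirm from a script that the server is listening before opening the browser, a small check like the following may help. This is a sketch using only the Python standard library; the helper `server_is_up` is hypothetical and not part of ContextQA, and the default URL is simply the address printed in the log output above.

```python
import urllib.error
import urllib.request


def server_is_up(url: str = "http://localhost:8080", timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at url, False otherwise.

    Hypothetical helper for illustration; not provided by ContextQA.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, although with an error status code.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar problems.
        return False


if __name__ == "__main__":
    if server_is_up():
        print("Server is reachable at http://localhost:8080")
    else:
        print("No response; check that `contextqa init` is still running")
```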

For detailed usage instructions, please refer to the usage guidelines.

We welcome contributions to ContextQA! To get started, please refer to our CONTRIBUTING.md file for guidelines on how to contribute. Your feedback and contributions help us improve and enhance the project. Thank you for your interest in contributing!