
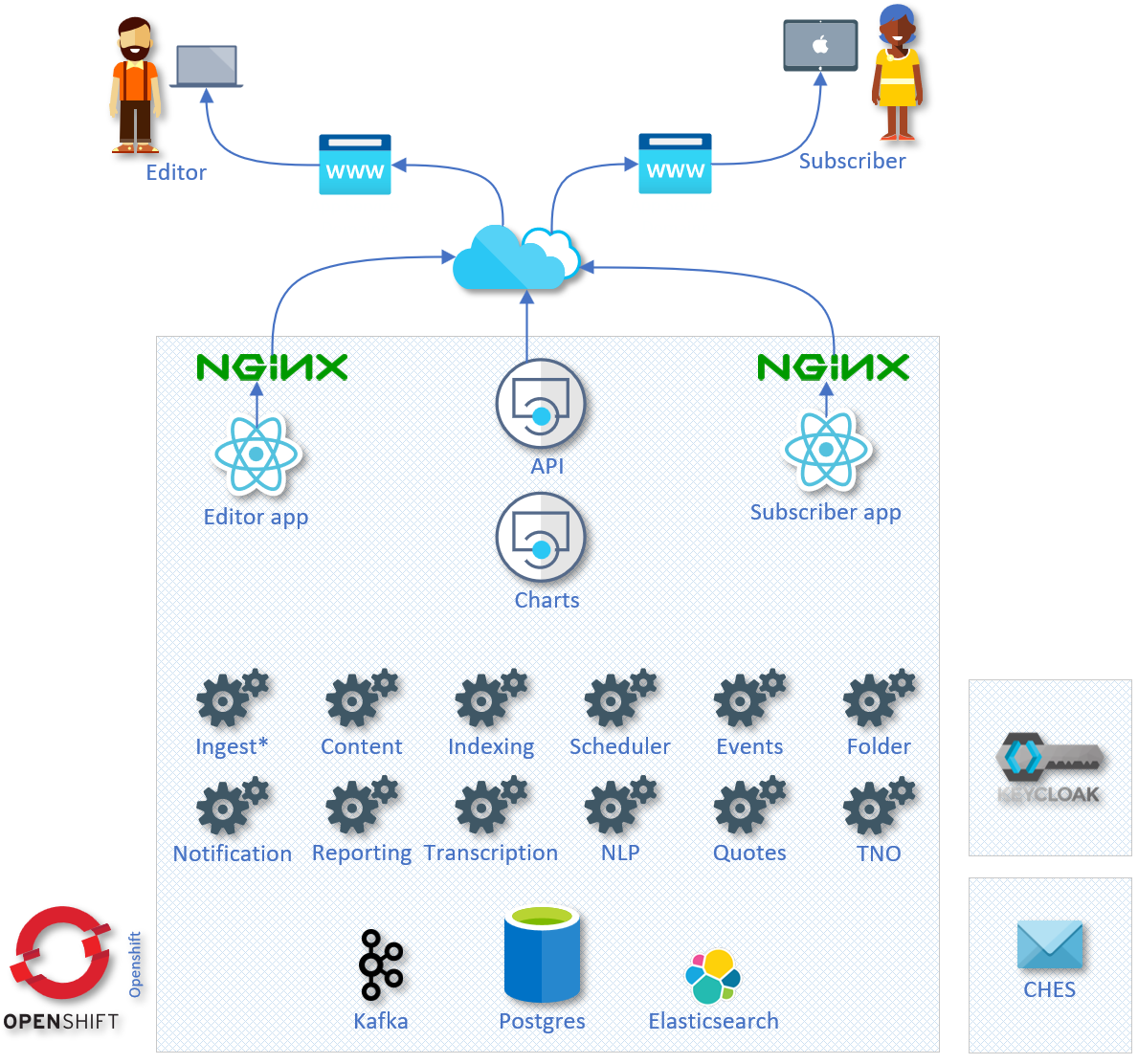

Architecture

- Solution Architecture

Media Monitoring & Insights (MMI) is an event-based micro-service architecture hosted on OpenShift. Kafka provides event-based messaging and horizontal parallel scaling. Elasticsearch provides search. Keycloak provides authentication through government-issued IDIR accounts. The backend services are written primarily in .NET, and the frontend applications are written in React and TypeScript. The solution is containerized with Docker and can be hosted on any container orchestration platform that supports Docker.

The solution has two types of services: Producers and Consumers. A Producer initiates actions by sending messages to Kafka. A Consumer performs an action when it receives a message from Kafka. Some services are both.

The solution uses OAuth 2.0 for security (authentication and authorization). A user or service account must obtain a valid access token from Keycloak before making requests to the web applications or the API. MMI uses a 'custom realm' in a Keycloak instance that is hosted and managed by the Exchange Lab. MMI has full control over this realm and is responsible for its configuration.
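The following minimal sketch shows how a service account could build an OAuth 2.0 client-credentials token request for a Keycloak realm, along with the bearer header used on later API calls. The base URL, the realm name `mmi`, the client ID and the secret are hypothetical placeholders. The sketch assumes the Keycloak 17+ path layout; older Keycloak versions add an `/auth` prefix to the base URL.

```typescript
// Sketch only: the base URL, realm, client ID and secret used below are hypothetical.
// Assumes Keycloak 17+ paths (/realms/...); older versions prefix the base URL with /auth.

interface TokenRequest {
  url: string;
  method: "POST";
  headers: { [name: string]: string };
  body: string;
}

// Encode fields as application/x-www-form-urlencoded, which the token endpoint expects.
function formEncode(fields: { [key: string]: string }): string {
  return Object.keys(fields)
    .map((key) => encodeURIComponent(key) + "=" + encodeURIComponent(fields[key]))
    .join("&");
}

function buildTokenRequest(
  baseUrl: string,
  realm: string,
  clientId: string,
  clientSecret: string
): TokenRequest {
  const root = baseUrl.replace(/\/+$/, ""); // drop trailing slashes
  return {
    url: root + "/realms/" + encodeURIComponent(realm) + "/protocol/openid-connect/token",
    method: "POST",
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body: formEncode({
      grant_type: "client_credentials",
      client_id: clientId,
      client_secret: clientSecret,
    }),
  };
}

// The access_token returned in the JSON response is sent with every request to the API.
function bearerHeader(accessToken: string): { [name: string]: string } {
  return { Authorization: "Bearer " + accessToken };
}

// Example with hypothetical values.
const tokenRequest = buildTokenRequest("https://keycloak.example.com/", "mmi", "mmi-service", "s3cret");
```

The request object can be passed to `fetch(tokenRequest.url, tokenRequest)`. Interactive users get their tokens through the browser login flow instead, but either way the API receives the same kind of bearer token.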

The primary database, which contains all content and configuration, is PostgreSQL and is managed through the CrunchyDB operator hosted in OpenShift.

Files such as audio and video are stored on persistent volumes mapped within OpenShift and in BC Government S3 buckets.

SignalR handles communication and messaging to the web applications. It is backed by a Kafka backplane so that the API can scale horizontally.
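The backplane idea works like this: any API instance can publish a hub message, and every instance delivers that message to whichever clients happen to be connected to it. The conceptual sketch below uses an in-memory subscriber list in place of the Kafka topic. The class names and user IDs are hypothetical, and this is not the SignalR or Kafka client API.

```typescript
// Conceptual sketch: an in-memory publish/subscribe list stands in for the Kafka topic.
// Class names and user IDs are hypothetical.

interface HubMessage {
  userId: string;
  payload: string;
}

class Backplane {
  private subscribers: Array<(msg: HubMessage) => void> = [];

  subscribe(handler: (msg: HubMessage) => void): void {
    this.subscribers.push(handler);
  }

  // With Kafka, the record is written to a topic that every API instance consumes.
  publish(msg: HubMessage): void {
    this.subscribers.forEach((handler) => handler(msg));
  }
}

class ApiInstance {
  // Messages pushed to clients connected to this instance, keyed by user ID.
  readonly delivered: { [userId: string]: string[] } = {};

  constructor(private readonly backplane: Backplane, private readonly connectedUsers: string[]) {
    backplane.subscribe((msg) => this.deliverLocally(msg));
  }

  // Any instance can target any user without knowing which instance holds the connection.
  sendToUser(userId: string, payload: string): void {
    this.backplane.publish({ userId, payload });
  }

  private deliverLocally(msg: HubMessage): void {
    if (this.connectedUsers.indexOf(msg.userId) === -1) return; // user is not connected here
    if (!this.delivered[msg.userId]) this.delivered[msg.userId] = [];
    this.delivered[msg.userId].push(msg.payload);
  }
}

const sharedBackplane = new Backplane();
const apiA = new ApiInstance(sharedBackplane, ["alice"]);
const apiB = new ApiInstance(sharedBackplane, ["bob"]);

// A request handled by instance A produces a notification for Bob, who is connected to B.
apiA.sendToUser("bob", "content 123 is available");
```

Without the backplane, instance A would have no way to reach Bob's connection. That is why adding API replicas requires a shared channel between them.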

Email is provided by the Common Hosted Email Services (CHES) solution that is also managed by the Exchange Lab.

All access to data goes through the centralized API, which provides a single, consistent and secure way to interact with the solution. In a pure event-based micro-service architecture this would be considered an anti-pattern. The centralized API leans toward monolithic patterns, but it reduces complexity dramatically without losing many of the benefits of micro-services. The trade-off is that all services, while mostly independent, still require access to the centralized API.

Nginx is used as a reverse proxy for the two web applications. This allows a web application and the centralized API to share a single domain name. Without a reverse proxy, the API would need its own domain name and would therefore require CORS and related security configuration. The default router provided by OpenShift was found to be unreliable. It would randomly stop forwarding requests intended for the API and send them to the web applications instead.
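The sketch below shows the path-based rule a reverse proxy applies so that one domain serves both a web application and the API. The `/api` prefix and the upstream names are assumptions made for illustration. They are not taken from MMI's actual Nginx configuration.

```typescript
// Sketch only: the "/api" prefix and upstream names are hypothetical.
type Upstream = "api" | "web-app";

function selectUpstream(path: string): Upstream {
  // Only "/api" itself or paths beneath "/api/" are proxied to the API.
  const isApi = path === "/api" || path.indexOf("/api/") === 0;
  return isApi ? "api" : "web-app";
}

// Because the browser sees a single origin, calls from the web app to /api/... are
// same-origin requests and need no CORS configuration.
const routed = ["/", "/api/content/1", "/apiary", "/reports"].map((p) => selectUpstream(p));
```

Matching on `/api/` rather than the bare prefix `/api` keeps unrelated paths such as `/apiary` on the web application.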

Content is added to the solution through two primary processes. First, the majority of content is imported automatically through the Ingestion Services. There are several of these, each handling a different type of content: Syndication, Papers, Images and TNO Historical. Second, Editors and Subscribers can add content manually through their respective web applications. For Editors, this is the main source of audio and video content.

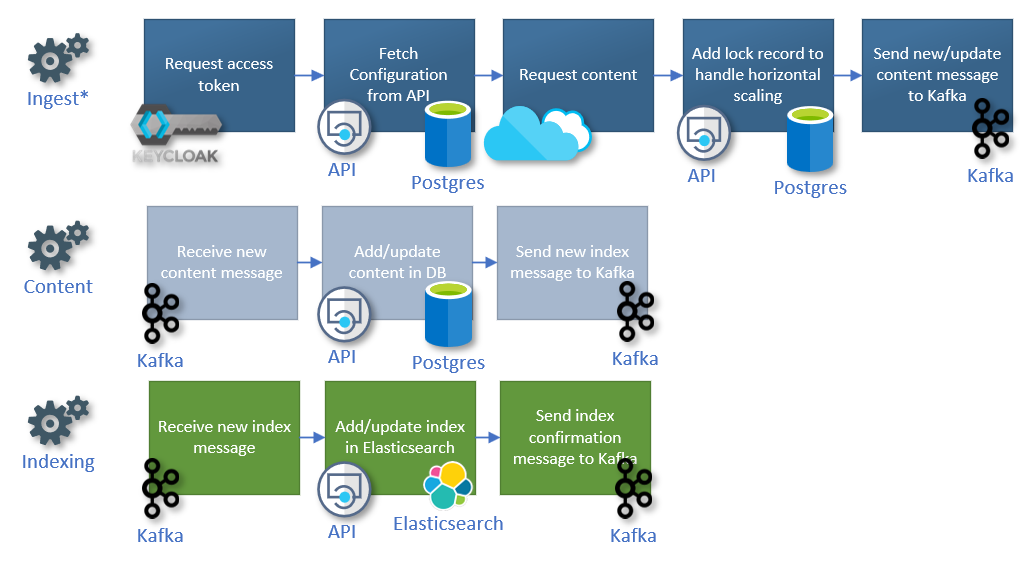

The diagram illustrates how the ingestion process works. Each ingestion service pulls in content from third parties, as configured, and sends messages to Kafka. The Content Service, which is both a Kafka Consumer and a Producer, receives these messages and adds the content to the PostgreSQL database. When it has finished, it sends a new message to Kafka requesting that the content be indexed. The Indexing Service, also a Kafka Consumer and Producer, receives this message and indexes the content in Elasticsearch. When it has finished, it sends another message to Kafka to inform the system that the content is now available to Subscribers. Other, unrelated Kafka Consumer services also pick up this message and perform their own actions on the content.
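The chain of messages described above can be sketched as follows. This is a simplified model under several assumptions. A synchronous in-memory bus stands in for Kafka, whereas the real consumers run asynchronously in separate services. Arrays stand in for PostgreSQL and Elasticsearch, and the topic names are hypothetical.

```typescript
// Simplified model: synchronous in-memory bus instead of Kafka; topic names are hypothetical.

interface Message {
  topic: string;
  contentId: number;
}
type Handler = (msg: Message) => void;

class MessageBus {
  private handlers: { [topic: string]: Handler[] } = {};
  readonly log: string[] = [];

  subscribe(topic: string, handler: Handler): void {
    if (!this.handlers[topic]) this.handlers[topic] = [];
    this.handlers[topic].push(handler);
  }

  publish(topic: string, contentId: number): void {
    this.log.push(topic + ":" + contentId);
    (this.handlers[topic] || []).forEach((handler) => handler({ topic, contentId }));
  }
}

const bus = new MessageBus();
const database: number[] = []; // stands in for PostgreSQL
const searchIndex: number[] = []; // stands in for Elasticsearch
const alerted: number[] = []; // stands in for an unrelated downstream consumer

// Content Service (Consumer and Producer): store the content, then request indexing.
bus.subscribe("ingest", (msg) => {
  database.push(msg.contentId);
  bus.publish("index", msg.contentId);
});

// Indexing Service (Consumer and Producer): index the content, then announce it is available.
bus.subscribe("index", (msg) => {
  searchIndex.push(msg.contentId);
  bus.publish("available", msg.contentId);
});

// Any other consumer interested in newly available content reacts independently.
bus.subscribe("available", (msg) => {
  alerted.push(msg.contentId);
});

// An ingestion service (for example Syndication) announces newly fetched content.
bus.publish("ingest", 42);
```

Each stage only knows the topic it reads and the topic it writes. New consumers can therefore be attached to the "available" step without changing the Content or Indexing services.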

The Syndication Service makes HTTPS requests to fetch XML from third-party websites. Some of these feeds are free, while others require contracts to access the data. Syndication feeds are in either Atom or RSS format. However, most news sites that provide this content do not strictly follow the syndication standards, so their data requires custom handling.
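A first step in handling a fetched feed is deciding whether it is RSS or Atom. The sketch below does this by inspecting the root element. For brevity it uses regular expressions, which is an assumption of the sketch. A production service would normally use a proper XML parser and would still need per-site handling for non-standard feeds.

```typescript
// Sketch: classify a feed by its root element (<rss>, <rdf:RDF> for RSS 1.0, or <feed> for Atom).
// Regex-based for brevity; not a substitute for an XML parser.
type FeedFormat = "rss" | "atom" | "unknown";

function detectFeedFormat(xml: string): FeedFormat {
  // Remove the XML declaration/processing instructions, comments and DOCTYPE before the root.
  const body = xml
    .replace(/<\?[\s\S]*?\?>/g, "")
    .replace(/<!--[\s\S]*?-->/g, "")
    .replace(/<!DOCTYPE[^>]*>/gi, "");
  // Capture the local name of the first element, ignoring any namespace prefix.
  const match = /<\s*([A-Za-z_][\w.-]*:)?([A-Za-z_][\w.-]*)/.exec(body);
  if (!match) return "unknown";
  const root = match[2].toLowerCase();
  if (root === "rss" || root === "rdf") return "rss";
  if (root === "feed") return "atom";
  return "unknown";
}
```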

The Paper Service is called the File Monitor Service in the code base. It either handles files that are pushed to an SFTP location or pulls files from a remote SFTP location. These files are in proprietary formats, so each paper source must be handled differently.
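One way to handle sources that each use a different proprietary format is a registry that maps each source to its own parser. The sketch below is illustrative only. The source codes `PAPER_A` and `PAPER_B` and both file layouts are hypothetical placeholders, not real publisher formats, and the sketch does not describe how the File Monitor Service is actually implemented.

```typescript
// Sketch of per-source parsing; source codes and formats are hypothetical.
interface Story {
  source: string;
  headline: string;
  body: string;
}
type PaperParser = (source: string, raw: string) => Story[];

// Hypothetical format A: stories separated by blank lines; the first line is the headline.
const parseBlankLineBlocks: PaperParser = (source, raw) =>
  raw
    .split(/\r?\n\s*\r?\n/)
    .filter((block) => block.trim() !== "")
    .map((block) => {
      const lines = block.trim().split(/\r?\n/);
      return { source, headline: lines[0], body: lines.slice(1).join("\n") };
    });

// Hypothetical format B: one "headline|body" record per line, split on the first pipe.
const parsePipeDelimited: PaperParser = (source, raw) =>
  raw
    .split(/\r?\n/)
    .filter((line) => line.indexOf("|") !== -1)
    .map((line) => {
      const i = line.indexOf("|");
      return { source, headline: line.slice(0, i), body: line.slice(i + 1) };
    });

// Adding a new paper source means registering one more parser here.
const parsersBySource: { [source: string]: PaperParser } = {
  PAPER_A: parseBlankLineBlocks,
  PAPER_B: parsePipeDelimited,
};

function importPaperFile(source: string, raw: string): Story[] {
  const parser = parsersBySource[source];
  if (!parser) throw new Error("No parser configured for source " + source);
  return parser(source, raw);
}
```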

The Images ingestion service is very similar to the Paper Service, in that its images are provided by various newspapers. It either requests these images or receives them through SFTP locations.

The TNO Historical ingestion service is called the Content Migration Service in the code base. It connects directly to the old Today's News Online (TNO) Oracle database to fetch historical content so that it can be imported into MMI. This process is incredibly slow (we have yet to resolve this).

The centralized API is a RESTful .NET application. It is organized into 'areas' that group related access requirements: Admin is for administrators, Editor is for editors, Subscriber is for subscribers, and Services is for the services. Each area contains controllers, which group related endpoints. All endpoints are configured with authentication and authorization attributes. Models control what is serialized to and from JSON. Models are generally unique to each controller, which reduces overlap and regression issues as models are modified over time. The API itself focuses only on handling requests and validating access tokens. It then passes the work to the data access service layer, which applies business rules, logic and constraints, and has direct access to the data in the various databases.
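The sketch below illustrates the controller flow in TypeScript, even though the real API is .NET. The flow is: check that the caller holds the area's role, delegate to the data access layer, and then map the result to a model owned by that controller. It assumes the access token has already been validated and its roles extracted. The role names, the entity fields (including `internalNotes`) and the function names are all hypothetical.

```typescript
// Illustrative only: roles, fields and functions are hypothetical; the real API is .NET.
type Role = "administrator" | "editor" | "subscriber" | "service";

interface ContentEntity {
  id: number;
  headline: string;
  body: string;
  internalNotes: string; // hypothetical field that subscribers must not see
}

// Separate models per controller, so changing one does not cause regressions in another.
interface EditorContentModel {
  id: number;
  headline: string;
  body: string;
  internalNotes: string;
}
interface SubscriberContentModel {
  id: number;
  headline: string;
  body: string;
}

// Roles are assumed to come from an already-validated access token.
function requireRole(userRoles: Role[], required: Role): void {
  if (userRoles.indexOf(required) === -1) {
    throw new Error("403 Forbidden: requires role '" + required + "'");
  }
}

// Stand-in for the data access service layer (business rules and database access).
function findContent(id: number): ContentEntity {
  return { id, headline: "Example headline", body: "Example body", internalNotes: "for editors" };
}

function getContentForEditor(userRoles: Role[], id: number): EditorContentModel {
  requireRole(userRoles, "editor");
  const entity = findContent(id);
  return { id: entity.id, headline: entity.headline, body: entity.body, internalNotes: entity.internalNotes };
}

function getContentForSubscriber(userRoles: Role[], id: number): SubscriberContentModel {
  requireRole(userRoles, "subscriber");
  const entity = findContent(id);
  // Only the fields in the subscriber model are serialized to JSON.
  return { id: entity.id, headline: entity.headline, body: entity.body };
}
```

Mapping explicitly into a per-controller model means that a new field added to the entity is not exposed to subscribers unless someone deliberately adds it to their model.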

The Editor application is a React web application for internal staff, who curate the content and publish relevant stories. It provides the UI for nearly all configuration options within the solution.

The Subscriber application is a React web application for external users. Subscribers can read, watch and listen to all the content in MMI. The application draws attention to various categories of content, such as top stories, featured stories, commentary, press gallery and more. Subscribers can request to receive alerts or reports that are part of the product offerings. Additionally, subscribers can create and generate their own reports.

The solution has many micro-services that perform dedicated roles. Many of these services are multi-threaded applications with a configured limit on how many sequential errors they will allow before they stop running and change their status to 'Failed'. Many of them restart automatically after a configured number of seconds, but some do not.
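The failure policy described above can be sketched as a small state machine. Consecutive errors are counted, and a success resets the count. Once the limit is reached, the service marks itself 'Failed', and it may resume after a configured delay if automatic restart is enabled. The class and option names are hypothetical. The real services are .NET applications, and this is not their code.

```typescript
// Sketch of the sequential-error policy; names and options are hypothetical.
type ServiceStatus = "Running" | "Failed";

interface FailurePolicyOptions {
  maxSequentialErrors: number;
  autoRestart: boolean; // some services restart automatically, others do not
  retryAfterSeconds: number;
}

class ServiceHealth {
  status: ServiceStatus = "Running";
  private sequentialErrors = 0;
  private failedAt = 0; // time in seconds when the service entered Failed

  constructor(private readonly options: FailurePolicyOptions) {}

  // Any successful unit of work resets the consecutive-error count.
  recordSuccess(): void {
    this.sequentialErrors = 0;
  }

  recordError(nowSeconds: number): void {
    this.sequentialErrors++;
    if (this.sequentialErrors >= this.options.maxSequentialErrors) {
      this.status = "Failed";
      this.failedAt = nowSeconds;
    }
  }

  // Polled periodically; returns true when the service may process work.
  tryRestart(nowSeconds: number): boolean {
    if (this.status !== "Failed" || !this.options.autoRestart) return this.status === "Running";
    if (nowSeconds - this.failedAt < this.options.retryAfterSeconds) return false;
    this.status = "Running";
    this.sequentialErrors = 0;
    return true;
  }
}

// Example: three consecutive errors stop the service; it resumes 60 seconds later.
const health = new ServiceHealth({ maxSequentialErrors: 3, autoRestart: true, retryAfterSeconds: 60 });
```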

This API is a Node.js Express.js RESTful application. It provides endpoints that generate chart images from the supplied JSON configuration, using the Chart.js package.
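The sketch below builds a Chart.js-style configuration object (`type`, `data.labels`, `data.datasets`, `options`) of the kind that could be supplied as JSON. It assumes the Charts API accepts a standard Chart.js configuration. The exact request shape and endpoint are not described here, and the source names and counts are made-up sample values.

```typescript
// Sketch: assumes the Charts API accepts a standard Chart.js configuration as JSON.
interface ChartDataset {
  label: string;
  data: number[];
}
interface ChartConfiguration {
  type: "bar" | "line" | "pie" | "doughnut";
  data: { labels: string[]; datasets: ChartDataset[] };
  options?: { [key: string]: unknown };
}

// Build a bar chart of story counts per source, with labels in a stable sorted order.
function storiesPerSourceChart(counts: { [source: string]: number }): ChartConfiguration {
  const labels = Object.keys(counts).sort();
  return {
    type: "bar",
    data: { labels, datasets: [{ label: "Stories", data: labels.map((l) => counts[l]) }] },
    options: { plugins: { legend: { display: false } } }, // Chart.js 3+ legend option
  };
}

// Sample values only.
const chartJson = JSON.stringify(storiesPerSourceChart({ "Source B": 12, "Source A": 30 }));
```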

A Kafka Consumer that places imported content into the PostgreSQL database.

A Kafka Consumer that indexes content into Elasticsearch.

A Kafka Consumer that sends email notifications to subscribers when content is alerted. Each notification is sent to CHES as a mail merge: one request goes to CHES, and CHES sends the email to all the subscribers. A record is kept in MMI for each mail merge sent.
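The sketch below shows how a single mail merge request can carry one context per subscriber, so that CHES renders and sends each email itself. The field names (`bodyType`, `body`, `contexts`, `encoding`, `priority`, `subject`) reflect our understanding of the CHES v1 email merge API and should be checked against the CHES API documentation. The sender address, the template text and the subscriber data are hypothetical.

```typescript
// Sketch: field names follow our understanding of the CHES email merge API (verify against
// the CHES docs); sender, templates and subscribers are hypothetical.
interface MergeContext {
  to: string[];
  context: { [key: string]: string };
}
interface EmailMergeRequest {
  from: string;
  subject: string;
  bodyType: "html" | "text";
  body: string;
  encoding: "utf-8";
  priority: "normal";
  contexts: MergeContext[];
}

interface Subscriber {
  email: string;
  firstName: string;
}

function buildAlertMerge(headline: string, subscribers: Subscriber[]): EmailMergeRequest {
  return {
    from: "noreply@example.com", // hypothetical sender
    subject: "MMI alert: {{ headline }}", // template variables filled per context
    bodyType: "html",
    body: "<p>Hello {{ firstName }},</p><p>{{ headline }}</p>",
    encoding: "utf-8",
    priority: "normal",
    // One context per subscriber; a single request covers everyone.
    contexts: subscribers.map((s): MergeContext => ({
      to: [s.email],
      context: { firstName: s.firstName, headline },
    })),
  };
}

const merge = buildAlertMerge("Budget released", [
  { email: "a@example.com", firstName: "Ada" },
  { email: "b@example.com", firstName: "Ben" },
]);
```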

A Kafka Consumer that generates reports and sends emails to subscribers. The Reporting Service generates and/or sends reports based on the requested action and the report's configuration. A separate request is sent to CHES for each subscriber, and a record is kept in MMI for each request to CHES. If a report fails to generate or send for any reason, it is added back to the Kafka queue and another attempt is made. The service picks up where it left off and only sends the report to subscribers who have not yet received it. After a configured number of failures, the service gives up and leaves the report in a failed state.
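The retry behaviour described above can be sketched as follows. Each successful send is recorded, and a retry skips subscribers who were already sent the report. The function reports whether the report should go back on the queue, and after `maxAttempts` it leaves the report 'Failed'. The type and field names and the `send` callback are hypothetical stand-ins for the CHES request and the MMI records.

```typescript
// Sketch of resumable report delivery; names and the send callback are hypothetical.
type ReportStatus = "Pending" | "Completed" | "Failed";

interface ReportInstance {
  id: number;
  subscribers: string[];
  sentTo: string[]; // record of successful sends, used to resume
  attempts: number;
  status: ReportStatus;
}

// Returns true if the report should be put back on the queue for another attempt.
function processReport(
  report: ReportInstance,
  send: (email: string) => void, // throws on failure
  maxAttempts: number
): boolean {
  report.attempts++;
  try {
    for (const email of report.subscribers) {
      if (report.sentTo.indexOf(email) !== -1) continue; // already received it
      send(email);
      report.sentTo.push(email);
    }
    report.status = "Completed";
    return false;
  } catch {
    report.status = report.attempts >= maxAttempts ? "Failed" : "Pending";
    return report.status === "Pending";
  }
}

// Example: the first attempt fails on the second subscriber, the retry resumes from there.
let failNext = true;
const sent: string[] = [];
const sendEmail = (email: string): void => {
  if (email === "b@example.com" && failNext) {
    failNext = false;
    throw new Error("CHES unavailable");
  }
  sent.push(email);
};
const report: ReportInstance = {
  id: 1,
  subscribers: ["a@example.com", "b@example.com", "c@example.com"],
  sentTo: [],
  attempts: 0,
  status: "Pending",
};
const requeued = processReport(report, sendEmail, 3);
processReport(report, sendEmail, 3);
```

In this example, `a@example.com` receives the report only once, even though the report was processed twice.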

A Kafka Consumer that extracts the text from audio content.

A Kafka Producer that initiates reports and notifications based on configured schedules.

A Kafka Consumer that can perform various actions. Currently it is used to empty folders of content.

A Kafka Consumer that adds content to folders based on filters.

A Kafka Consumer that extracts keywords and information from text. This service is currently disabled.

A Kafka Consumer that extracts quotes from text. This service is currently disabled.

A Kafka Consumer that converts audio and video files into a format that is supported by common browsers.

A Kafka Consumer that copies files from one location to another. This was originally used to copy files from the server room NAS to Openshift. This service is currently disabled.